“The History of Computing: an Introduction for the Computer Scientist”

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Microprocessors History of Computing Nouf Assaid

MICROPROCESSORS HISTORY OF COMPUTING NOUF ASSAID Table of Contents: Introduction; Brief History; Microprocessors; Instruction Set Architectures; Von Neumann Machine; Microprocessor Design; Superscalar; RISC; CISC; VLIW; Multiprocessor; Future Trends in Microprocessor Design. Introduction If we take a look around us, we are sure to find a device that uses a microprocessor in some form or other. Microprocessors have become a part of our daily lives, and it would be difficult to imagine life without them today. From digital wristwatches to pocket calculators, from microwaves to cars, toys, security systems, navigation, and credit cards, microprocessors are ubiquitous. All this has been made possible by remarkable developments in semiconductor technology in the last 30 years, enabling the implementation of ideas that were previously beyond the average computer architect’s grasp. In this paper, we discuss the various microprocessor technologies, starting with a brief history of computing. This is followed by an in-depth look at processor architecture, design philosophies, current design trends, RISC processors and CISC processors. Finally, we discuss trends and directions in microprocessor design. Brief Historical Overview Mechanical Computers A French engineer by the name of Blaise Pascal built the first working mechanical computer. This device was made completely from gears and was operated using hand cranks. This machine was capable of simple addition and subtraction, but a few years later, a German mathematician by the name of Leibniz made a similar machine that could multiply and divide as well. After about 150 years, a mathematician at Cambridge, Charles Babbage, made his Difference Engine.

Anna Lysyanskaya Curriculum Vitae

Anna Lysyanskaya Curriculum Vitae Computer Science Department, Box 1910 Brown University Providence, RI 02912 (401) 863-7605 email: [email protected] http://www.cs.brown.edu/~anna Research Interests Cryptography, privacy, computer security, theory of computation. Education Massachusetts Institute of Technology Cambridge, MA Ph.D. in Computer Science, September 2002 Advisor: Ronald L. Rivest, Viterbi Professor of EECS Thesis title: "Signature Schemes and Applications to Cryptographic Protocol Design" Massachusetts Institute of Technology Cambridge, MA S.M. in Computer Science, June 1999 Smith College Northampton, MA A.B. magna cum laude, Highest Honors, Phi Beta Kappa, May 1997 Appointments Brown University, Providence, RI Fall 2013 - Present Professor of Computer Science Brown University, Providence, RI Fall 2008 - Spring 2013 Associate Professor of Computer Science Brown University, Providence, RI Fall 2002 - Spring 2008 Assistant Professor of Computer Science UCLA, Los Angeles, CA Fall 2006 Visiting Scientist at the Institute for Pure and Applied Mathematics (IPAM) Weizmann Institute, Rehovot, Israel Spring 2006 Visiting Scientist Massachusetts Institute of Technology, Cambridge, MA 1997 - 2002 Graduate student IBM T. J. Watson Research Laboratory, Hawthorne, NY Summer 2001 Summer Researcher IBM Zürich Research Laboratory, Rüschlikon, Switzerland Summers 1999, 2000 Summer Researcher Teaching Brown University, Providence, RI Spring 2008, 2011, 2015, 2017, 2019; Fall 2012 Instructor for "CS 259: Advanced Topics in Cryptography," a seminar course for graduate students. Brown University, Providence, RI Spring 2012 Instructor for "CS 256: Advanced Complexity Theory," a graduate-level complexity theory course. Brown University, Providence, RI Fall 2003, 2004, 2005, 2010, 2011; Spring 2007, 2009, 2013, 2014, 2016, 2018 Instructor for "CS151: Introduction to Cryptography and Computer Security." Brown University, Providence, RI Fall 2016, 2018 Instructor for "CS 101: Theory of Computation," a core course for CS concentrators.

Analytical Engine, 1838 ALLAN G

Charles Babbage’s Analytical Engine, 1838 ALLAN G. BROMLEY Charles Babbage commenced work on the design of the Analytical Engine in 1834 following the collapse of the project to build the Difference Engine. His ideas evolved rapidly, and by 1838 most of the important concepts used in his later designs were established. This paper introduces the design of the Analytical Engine as it stood in early 1838, concentrating on the overall functional organization of the mill (or central processing portion) and the methods generally used for the basic arithmetic operations of multiplication, division, and signed addition. The paper describes the working of the mechanisms that Babbage devised for storing, transferring, and adding numbers and how they were organized together by the “microprogrammed” control system; the paper also introduces the facilities provided for user-level programming. The intention of the paper is to show that an automatic computing machine could be built using mechanical devices, and that Babbage’s designs provide both an effective set of basic mechanisms and a workable organization of a complete machine. Categories and Subject Descriptors: K.2 [History of Computing]: C. Babbage, hardware, software General Terms: Design Additional Key Words and Phrases: Analytical Engine 1. Introduction Charles Babbage commenced work on the design of the Analytical Engine shortly after the collapse in 1833 of the 10-year project to build the Difference Engine. He was at the time 42 years old. [...] 1838. During this period Babbage appears to have made no attempt to construct the Analytical Engine, but preferred the unfettered intellectual exploration of the concepts he was evolving. After 1849 Babbage ceased designing calculating devices.

What Every Computer Scientist Should Know About Floating-Point Arithmetic

What Every Computer Scientist Should Know About Floating-Point Arithmetic DAVID GOLDBERG Xerox Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, California 94304 Floating-point arithmetic is considered an esoteric subject by many people. This is rather surprising, because floating-point is ubiquitous in computer systems: Almost every language has a floating-point datatype; computers from PCs to supercomputers have floating-point accelerators; most compilers will be called upon to compile floating-point algorithms from time to time; and virtually every operating system must respond to floating-point exceptions such as overflow. This paper presents a tutorial on the aspects of floating-point that have a direct impact on designers of computer systems. It begins with background on floating-point representation and rounding error, continues with a discussion of the IEEE floating-point standard, and concludes with examples of how computer system builders can better support floating point. Categories and Subject Descriptors: (Primary) C.0 [Computer Systems Organization]: General - instruction set design; D.3.4 [Programming Languages]: Processors - compilers, optimization; G.1.0 [Numerical Analysis]: General - computer arithmetic, error analysis, numerical algorithms (Secondary) D.2.1 [Software Engineering]: Requirements/Specifications - languages; D.3.1 [Programming Languages]: Formal Definitions and Theory - semantics; D.4.1 [Operating Systems]: Process Management - synchronization General Terms: Algorithms, Design, Languages Additional Key Words and Phrases: denormalized number, exception, floating-point, floating-point standard, gradual underflow, guard digit, NaN, overflow, relative error, rounding error, rounding mode, ulp, underflow INTRODUCTION Builders of computer systems often need information about floating-point arithmetic. [...]tions of addition, subtraction, multiplication, and division. It also contains background information on the two methods of measuring rounding error,

Cryptography

Security Engineering: A Guide to Building Dependable Distributed Systems CHAPTER 5 Cryptography ZHQM ZMGM ZMFM –G. JULIUS CAESAR XYAWO GAOOA GPEMO HPQCW IPNLG RPIXL TXLOA NNYCS YXBOY MNBIN YOBTY QYNAI –JOHN F. KENNEDY 5.1 Introduction Cryptography is where security engineering meets mathematics. It provides us with the tools that underlie most modern security protocols. It is probably the key enabling technology for protecting distributed systems, yet it is surprisingly hard to do right. As we’ve already seen in Chapter 2, “Protocols,” cryptography has often been used to protect the wrong things, or used to protect them in the wrong way. We’ll see plenty more examples when we start looking in detail at real applications. Unfortunately, the computer security and cryptology communities have drifted apart over the last 20 years. Security people don’t always understand the available crypto tools, and crypto people don’t always understand the real-world problems. There are a number of reasons for this, such as different professional backgrounds (computer science versus mathematics) and different research funding (governments have tried to promote computer security research while suppressing cryptography). It reminds me of a story told by a medical friend. While she was young, she worked for a few years in a country where, for economic reasons, they’d shortened their medical degrees and concentrated on producing specialists as quickly as possible. One day, a patient who’d had both kidneys removed and was awaiting a transplant needed her dialysis shunt redone. The surgeon sent the patient back from the theater on the grounds that there was no urinalysis on file.

Theoretical Computer Science Knowledge a Selection of Latest Research Visit Springer.Com for an Extensive Range of Books and Journals in the field

springer.com Theoretical Computer Science Knowledge: A Selection of Latest Research. Visit springer.com for an extensive range of books and journals in the field. Theory of Computation: Classical and Contemporary Approaches, D. C. Kozen. Theory of Computation is a unique textbook that serves the dual purposes of covering core material in the foundations of computing, as well as providing an introduction to some more advanced contemporary topics. This innovative text focuses primarily, although by no means exclusively, on computational complexity theory. Topics and features include: organization into self-contained lectures of 3-7 pages; 41 primary lectures and a handful of supplementary lectures covering more specialized or advanced topics; 12 homework sets and several miscellaneous homework exercises of varying levels of difficulty, many with hints and complete solutions. 2006. 335 p. 75 illus. (Texts in Computer Science) Hardcover ISBN 1-84628-297-7 $79.95. Theoretical Introduction to Programming, B. Mills. Including well-digested information about fundamental techniques and concepts in software construction, this book is distinct in unifying pure theory with pragmatic details. Driven by generic problems and concepts, with brief and complete illustrations from languages including C, Prolog, Java, Scheme, Haskell and HTML. This book is intended to be both a how-to handbook and easy reference guide. Discussions of principle, worked examples and exercises are presented. Densely packed with explicit techniques on each page, this book takes you from a rudimentary understanding of programming into the world of deep technical software development. Combines theory with practice. 2006. XI, 358 p. 29 illus. Softcover ISBN 1-84628-021-4 $69.95. Complexity Theory: Exploring the Limits of Efficient Algorithms [...] computer science.

The Computer Scientist As Toolsmith—Studies in Interactive Computer Graphics

Frederick P. Brooks, Jr. Fred Brooks is the first recipient of the ACM Allen Newell Award, an honor to be presented annually to an individual whose career contributions have bridged computer science and other disciplines. Brooks was honored for a breadth of career contributions within computer science and engineering and his interdisciplinary contributions to visualization methods for biochemistry. Here, we present his acceptance lecture delivered at SIGGRAPH 94. The Computer Scientist as Toolsmith II It is a special honor to receive an award named for Allen Newell. Allen was one of the fathers of computer science. He was especially important as a visionary and a leader in developing artificial intelligence (AI) as a subdiscipline, and in enunciating a vision for it. What a man is is more important than what he does professionally, however, and it is Allen’s humble, honorable, and self-giving character that makes it a double honor to be a Newell awardee. I am profoundly grateful to the awards committee. Rather than talking about one particular research [...] computer science. Another view of computer science sees it as a discipline focused on problem-solving systems, and in this view computer graphics is very near the center of the discipline. A Discipline Misnamed When our discipline was newborn, there was the usual perplexity as to its proper name. We at Chapel Hill, following, I believe, Allen Newell and Herb Simon, settled on “computer science” as our department’s name. Now, with the benefit of three decades’ hindsight, I believe that to have been a mistake. If we understand why, we will better understand our craft.

Finding a Story for the History of Computing 2018

Repositorium für die Medienwissenschaft. Thomas Haigh, Finding a Story for the History of Computing, 2018. https://doi.org/10.25969/mediarep/3796 Accepted version, Working Paper. Suggested Citation: Haigh, Thomas: Finding a Story for the History of Computing. Siegen: Universität Siegen: SFB 1187 Medien der Kooperation 2018 (Working paper series / SFB 1187 Medien der Kooperation 3). DOI: https://doi.org/10.25969/mediarep/3796. Initial publication here: https://nbn-resolving.org/urn:nbn:de:hbz:467-13377 Terms of use: This document is made available under a Creative Commons Attribution - Non Commercial - No Derivatives 4.0 License. For more information see: https://creativecommons.org/licenses/by-nc-nd/4.0/ Finding a Story for the History of Computing. Thomas Haigh, University of Wisconsin-Milwaukee & University of Siegen. WORKING PAPER SERIES | NO. 3 | JULY 2018 Collaborative Research Center 1187 Media of Cooperation (Sonderforschungsbereich 1187 Medien der Kooperation) Print-ISSN 2567-2509 Online-ISSN 2567-2517 URN urn:nbn:de:hbz:467-13377 This work is licensed under the Creative Commons Attribution-NonCommercial-No Derivatives 4.0 International License. Publication of the series is funded by the German Research Foundation (DFG). The Arpanet Logical Map, February, 1978 on the title page is taken from: Bradley Fidler and Morgan Currie, “Infrastructure, Representation, and Historiography in BBN’s Arpanet Maps”, IEEE Annals of the History of [...] This Working Paper Series is edited by the Collaborative Research Center Media of Cooperation and serves as a platform to circulate work in progress or preprints in order to encourage the exchange of ideas.

Milestones in Analog and Digital Computing

Milestones in Analog and Digital Computing: Contributions to the History of Mathematics and Information Technology, by Herbert Bruderer. Numerous recent discoveries of rare historical analog and digital calculators and previously unknown texts, drawings, and pictures from Germany, Austria, Switzerland, Liechtenstein, and France. Worldwide, multilingual bibliography regarding the history of computer science with over 3000 entries. 12 step-by-step sets of instructions for the operation of historical analog and digital calculating devices. 75 comparative overviews in tabular form. 200 illustrations. 20 comprehensive lists. 7 timelines of computer history. Published by de Gruyter Oldenbourg. Berlin/Boston 2015, xxxii, 820 pages, 119.95 Euro. During the 1970s mechanical calculating instruments and machines suddenly disappeared from the scene. They were replaced by electronic versions. Most of these devices developed since the 17th century, often very clever constructions, have been forgotten. Who can imagine today how difficult calculation was only a few decades ago? This book introduces the reader to selected milestones from prehistory and early history of computing. The Antikythera Mechanism This puzzling device was made around 200 BC. It was discovered around 1900 by divers off the Greek island of Antikythera. It is believed to be the oldest known analog (or rather hybrid) computing device. Numerous replicas have been built to unravel the mysteries of this calendar calculator. It is suspected that the machine came from the school of Archimedes. Androids, Music Boxes, Chess Automatons, Looms This treatise also explores topics related to computing technology: automated human and animal figures, mechanized musical instruments, music boxes, as well as punched-tape-controlled looms and typewriters.

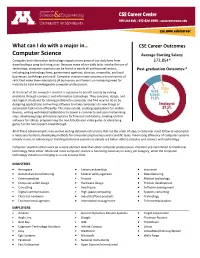

Computer Science Average Starting Salary

What can I do with a major in… Computer Science. CSE Career Outcomes: Average Starting Salary: $73,854*. Post-graduation Outcomes:* Computer and information technology impacts many areas of our daily lives, from downloading a song to driving a car. Because many of our daily tasks involve the use of technology, computer scientists can be found in nearly all professional sectors, including big technology firms, government agencies, startups, nonprofits, and local businesses, both large and small. Computer science majors possess a broad variety of skills that make them valuable to all businesses, and there is an increasing need for industry to have knowledgeable computer professionals. At the heart of the computer scientist is a passion to benefit society by solving problems through computer and information technology. They conceive, design, and test logical structures for solving problems by computer and find ways to do so by designing applications and writing software to make computers do new things or accomplish tasks more efficiently. This may include creating applications for mobile devices, writing web-based applications to power e-commerce and social networking sites, developing large enterprise systems for financial institutions, creating control software for robots, programming the next blockbuster video game, or identifying genes for the next biotech breakthrough. All of these advancements may involve writing detailed instructions that list the order of steps a computer must follow to accomplish a necessary function, developing methods for computerizing business and scientific tasks, maximizing efficiency of computer systems already in use, or enhancing or building immersive systems so people are better able to socialize and interact with technology. Computer scientists often work on a more abstract level than other computer professionals.

The History of Computing in the History of Technology

The History of Computing in the History of Technology. Michael S. Mahoney, Program in History of Science, Princeton University, Princeton, NJ (Annals of the History of Computing 10(1988), 113-125) After surveying the current state of the literature in the history of computing, this paper discusses some of the major issues addressed by recent work in the history of technology. It suggests aspects of the development of computing which are pertinent to those issues and hence for which that recent work could provide models of historical analysis. As a new scientific technology with unique features, computing in turn can provide new perspectives on the history of technology. Introduction Since World War II 'information' has emerged as a fundamental scientific and technological concept applied to phenomena ranging from black holes to DNA, from the organization of cells to the processes of human thought, and from the management of corporations to the allocation of global resources. In addition to reshaping established disciplines, it has stimulated the formation of a panoply of new subjects and areas of inquiry concerned with [...] record-keeping by a new industry of data processing. As a primary vehicle of communication over both space and time, it has come to form the core of modern information technology. What the English-speaking world refers to as "computer science" is known to the rest of western Europe as informatique (or Informatik or informatica). Much of the concern over information as a commodity and as a natural resource derives from the computer and from computer-based communications technology.

Implications of Historical Trends in the Electrical Efficiency of Computing

Implications of Historical Trends in the Electrical Efficiency of Computing. Jonathan G. Koomey (Stanford University), Stephen Berard (Microsoft), Marla Sanchez (Carnegie Mellon University), Henry Wong (Intel). The electrical efficiency of computation has doubled roughly every year and a half for more than six decades, a pace of change comparable to that for computer performance and electrical efficiency in the microprocessor era. These efficiency improvements enabled the creation of laptops, smart phones, wireless sensors, and other mobile computing devices, with many more such innovations yet to come. Valentine’s day 1946 was a pivotal date in human history. It was on that day that the US War Department formally announced the existence of the Electronic Numerical Integrator and Computer (ENIAC) [1]. ENIAC’s computational engine had no moving parts and used electrical pulses for its logical operations. Earlier computing devices relied on mechanical relays and possessed computational speeds three orders of magnitude slower than ENIAC. Moving electrons is inherently faster than moving atoms, and shifting to electronic digital computing began a march toward ever-greater and cheaper computational power that continues even to this day. [...] trends in chip production [2]. (It has also sometimes served as a benchmark for progress in chip design, which we discuss more later on in this article.) As Gordon Moore put it in his original article, "The complexity [of integrated circuits] for minimum component costs has increased at a rate of roughly a factor of two per year," [3] where complexity is defined as the number of components (not just transistors) per chip. The original statement of Moore’s law has been modified over the years in several different ways, as previous research has established [4, 5]. The trend, as Moore initially defined it, relates to the minimum component costs