What Every Computer Scientist Should Know About Floating-Point Arithmetic


Recommended publications

Anna Lysyanskaya Curriculum Vitae

Anna Lysyanskaya Curriculum Vitae Computer Science Department, Box 1910 Brown University Providence, RI 02912 (401) 863-7605 email: [email protected] http://www.cs.brown.edu/~anna Research Interests Cryptography, privacy, computer security, theory of computation. Education Massachusetts Institute of Technology Cambridge, MA Ph.D. in Computer Science, September 2002 Advisor: Ronald L. Rivest, Viterbi Professor of EECS Thesis title: "Signature Schemes and Applications to Cryptographic Protocol Design" Massachusetts Institute of Technology Cambridge, MA S.M. in Computer Science, June 1999 Smith College Northampton, MA A.B. magna cum laude, Highest Honors, Phi Beta Kappa, May 1997 Appointments Brown University, Providence, RI Fall 2013 - Present Professor of Computer Science Brown University, Providence, RI Fall 2008 - Spring 2013 Associate Professor of Computer Science Brown University, Providence, RI Fall 2002 - Spring 2008 Assistant Professor of Computer Science UCLA, Los Angeles, CA Fall 2006 Visiting Scientist at the Institute for Pure and Applied Mathematics (IPAM) Weizmann Institute, Rehovot, Israel Spring 2006 Visiting Scientist Massachusetts Institute of Technology, Cambridge, MA 1997 - 2002 Graduate student IBM T. J. Watson Research Laboratory, Hawthorne, NY Summer 2001 Summer Researcher IBM Zürich Research Laboratory, Rüschlikon, Switzerland Summers 1999, 2000 Summer Researcher Teaching Brown University, Providence, RI Spring 2008, 2011, 2015, 2017, 2019; Fall 2012 Instructor for "CS 259: Advanced Topics in Cryptography," a seminar course for graduate students. Brown University, Providence, RI Spring 2012 Instructor for "CS 256: Advanced Complexity Theory," a graduate-level complexity theory course. Brown University, Providence, RI Fall 2003, 2004, 2005, 2010, 2011; Spring 2007, 2009, 2013, 2014, 2016, 2018 Instructor for "CS 151: Introduction to Cryptography and Computer Security."
Brown University, Providence, RI Fall 2016, 2018 Instructor for "CS 101: Theory of Computation," a core course for CS concentrators.

Cryptography

Security Engineering: A Guide to Building Dependable Distributed Systems CHAPTER 5 Cryptography ZHQM ZMGM ZMFM —G. JULIUS CAESAR XYAWO GAOOA GPEMO HPQCW IPNLG RPIXL TXLOA NNYCS YXBOY MNBIN YOBTY QYNAI —JOHN F. KENNEDY 5.1 Introduction Cryptography is where security engineering meets mathematics. It provides us with the tools that underlie most modern security protocols. It is probably the key enabling technology for protecting distributed systems, yet it is surprisingly hard to do right. As we’ve already seen in Chapter 2, “Protocols,” cryptography has often been used to protect the wrong things, or used to protect them in the wrong way. We’ll see plenty more examples when we start looking in detail at real applications. Unfortunately, the computer security and cryptology communities have drifted apart over the last 20 years. Security people don’t always understand the available crypto tools, and crypto people don’t always understand the real-world problems. There are a number of reasons for this, such as different professional backgrounds (computer science versus mathematics) and different research funding (governments have tried to promote computer security research while suppressing cryptography). It reminds me of a story told by a medical friend. While she was young, she worked for a few years in a country where, for economic reasons, they’d shortened their medical degrees and concentrated on producing specialists as quickly as possible. One day, a patient who’d had both kidneys removed and was awaiting a transplant needed her dialysis shunt redone. The surgeon sent the patient back from the theater on the grounds that there was no urinalysis on file.

Theoretical Computer Science Knowledge a Selection of Latest Research Visit Springer.Com for an Extensive Range of Books and Journals in the field

springer.com Theoretical Computer Science Knowledge: A Selection of Latest Research. Visit springer.com for an extensive range of books and journals in the field. Theory of Computation: Classical and Contemporary Approaches. D. C. Kozen. Theory of Computation is a unique textbook that serves the dual purposes of covering core material in the foundations of computing, as well as providing an introduction to some more advanced contemporary topics. This innovative text focuses primarily, although by no means exclusively, on computational complexity theory. Topics and features include: organization into self-contained lectures of 3-7 pages; 41 primary lectures and a handful of supplementary lectures covering more specialized or advanced topics; 12 homework sets and several miscellaneous homework exercises of varying levels of difficulty, many with hints and complete solutions. 2006. 335 p. 75 illus. (Texts in Computer Science) Hardcover ISBN 1-84628-297-7 $79.95 Theoretical Introduction to Programming. B. Mills. Including well-digested information about fundamental techniques and concepts in software construction, this book is distinct in unifying pure theory with pragmatic details. Driven by generic problems and concepts, with brief and complete illustrations from languages including C, Prolog, Java, Scheme, Haskell and HTML. This book is intended to be both a how-to handbook and easy reference guide. Discussions of principle, worked examples and exercises are presented. Densely packed with explicit techniques on each page, this book takes you from a rudimentary understanding of programming into the world of deep technical software development. Combines theory with practice. 2006. XI, 358 p. 29 illus. Softcover ISBN 1-84628-021-4 $69.95 Complexity Theory: Exploring the Limits of Efficient Algorithms computer science.

The Computer Scientist As Toolsmith—Studies in Interactive Computer Graphics

Frederick P. Brooks, Jr. Fred Brooks is the first recipient of the ACM Allen Newell Award—an honor to be presented annually to an individual whose career contributions have bridged computer science and other disciplines. Brooks was honored for a breadth of career contributions within computer science and engineering and his interdisciplinary contributions to visualization methods for biochemistry. Here, we present his acceptance lecture delivered at SIGGRAPH 94. The Computer Scientist as Toolsmith II. It is a special honor to receive an award named for Allen Newell. Allen was one of the fathers of computer science. He was especially important as a visionary and a leader in developing artificial intelligence (AI) as a subdiscipline, and in enunciating a vision for it. What a man is is more important than what he does professionally, however, and it is Allen’s humble, honorable, and self-giving character that makes it a double honor to be a Newell awardee. I am profoundly grateful to the awards committee. Rather than talking about one particular research [...] computer science. Another view of computer science sees it as a discipline focused on problem-solving systems, and in this view computer graphics is very near the center of the discipline. A Discipline Misnamed. When our discipline was newborn, there was the usual perplexity as to its proper name. We at Chapel Hill, following, I believe, Allen Newell and Herb Simon, settled on “computer science” as our department’s name. Now, with the benefit of three decades’ hindsight, I believe that to have been a mistake. If we understand why, we will better understand our craft.

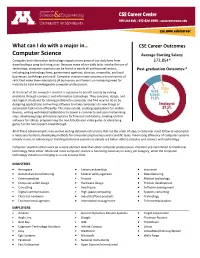

Computer Science Average Starting Salary

What can I do with a major in… Computer Science. CSE Career Outcomes: Average Starting Salary: $73,854*. Post-graduation Outcomes:* Computer and information technology impacts many areas of our daily lives from downloading a song to driving a car. Because many of our daily tasks involve the use of technology, computer scientists can be found in nearly all professional sectors, including big technology firms, government agencies, startups, nonprofits, and local businesses, both large and small. Computer science majors possess a broad variety of skills that make them valuable to all businesses and there is an increasing need for industry to have knowledgeable computer professionals. At the heart of the computer scientist is a passion to benefit society by solving problems through computer and information technology. They conceive, design, and test logical structures for solving problems by computer and find ways to do so by designing applications and writing software to make computers do new things or accomplish tasks more efficiently. This may include creating applications for mobile devices, writing web-based applications to power e-commerce and social networking sites, developing large enterprise systems for financial institutions, creating control software for robots, programming the next blockbuster video game, or identifying genes for the next biotech breakthrough. All of these advancements may involve writing detailed instructions that list the order of steps a computer must follow to accomplish a necessary function, developing methods for computerizing business and scientific tasks, maximizing efficiency of computer systems already in use, or enhancing or building immersive systems so people are better able to socialize and interact with technology. Computer scientists often work on a more abstract level than other computer professionals.

Mathematics and Computer Science

Mathematics and Computer Science. The Mathematics and Computer Science Department at Benedictine College is committed to maintaining a curriculum that provides students with the necessary tools to enter a career in their field with a broad, solid knowledge of mathematics or computer science. Our students are provided with the knowledge, analytical, and problem solving skills necessary to function as mathematicians or computer scientists in our world today. The mathematics curriculum prepares students for graduate study, for responsible positions in business, industry, and government, and for teaching positions in secondary and elementary schools. Basic skills and techniques provide for entering a career as an actuary, banker, bio-mathematician, computer programmer, computer scientist, economist, engineer, industrial researcher, lawyer, management consultant, market research analyst, mathematician, mathematics teacher, operations researcher, quality control specialist, statistician, or systems analyst. Computer science is an area of study that is important in the technological age in which we live. [...] Ma 315, Probability and Statistics; Ma 356, Modern Algebra I; Ma 360, Modern Algebra II or Ma 480, Introduction to Real Analysis; Ma 488, Senior Comprehensive; Ma 493, Directed Research; six hours of upper-division math electives; and Cs 114, Introduction to Computer Science I or Cs 230, Programming for Scientists and Engineers. Requirements for a major in Computer Science: Cs 114, Introduction to Computer Science I; Cs 115, Introduction to Computer Science II; Ma 255, Discrete Mathematical Structures I; Cs 256, Discrete Mathematical Structures II; Cs 300, Information & Knowledge Management; Cs 351, Algorithm Design and Data Analysis; Cs 421, Computer Architecture; Cs 440, Operating Systems and Networking; Cs 488, Senior Comprehensive; Cs 492, Software Development and Professional Practice; Cs 493, Senior Capstone.

What Is Computer Science All About?

WHAT IS COMPUTER SCIENCE ALL ABOUT? H. Conrad Cunningham and Pallavi Tadepalli Department of Computer and Information Science University of Mississippi COMPUTERS EVERYWHERE As scientific and engineering disciplines go, computer science is still quite young. Although the mathematical roots of computer science go back more than a thousand years, it is only with the invention of the programmable electronic digital computer during the World War II era of the 1930s and 1940s that the modern discipline of computer science began to take shape. As it has developed, computer science includes theoretical studies, experimental methods, and engineering design all in one discipline. One of the first computers was the ENIAC (Electronic Numerical Integrator and Computer), developed in the mid-1940s at the University of Pennsylvania. When construction was completed in 1946, the ENIAC cost about $500,000. In today’s terms, that is about $5,000,000. It weighed 30 tons, occupied as much space as a small house, and consumed 160 kilowatts of electric power. Figure 1 is a classic U.S. Army photograph of the ENIAC. The ENIAC and most other computers of that era were designed for military purposes, such as calculating firing tables for artillery. As a result, many observers viewed the market for such devices to be quite small. The observers were wrong! Figure 1. ENIAC in Classic U.S. Army Photograph. Electronics technology has improved greatly in 60 years. Today, a computer with the capacity of the ENIAC would be smaller than a coin from our pockets, would consume little power, and cost just a few dollars on the mass market.

What to Do Till the Computer Scientist Comes

WHAT TO DO TILL THE COMPUTER SCIENTIST COMES BY G. E. FORSYTHE Reprinted from the American Mathematical Monthly Vol. 75, No. 5, May, 1968 GEORGE E. FORSYTHE, Computer Science Department, Stanford University Computer science departments. What is computer science anyway? This is a favorite topic in computer science department meetings. Just as with definitions of mathematics, there is less than total agreement and—moreover—you must know a good deal about the subject before any definition makes sense. Perhaps the tersest answer is given by Newell, Perlis, and Simon [8]: just as zoology is the study of animals, so computer science is the study of computers. They explain that it includes the hardware, the software, and the useful algorithms computers perform. I believe they would also include the study of computers that might be built, given sufficient demand and sufficient development in the technology. In an earlier paper [4], the author defines computer science as the art and science of representing and processing information. Some persons [10] extend the subject to include a study of the structure of information in nature (e.g., the genetic code). Computer scientists work in three distinguishable areas: (1) design of hardware components and especially total systems; (2) design of basic languages and software broadly useful in applications, including monitors, compilers, time-sharing systems, etc.; (3) methodology of problem solving with computers. The accent here is on the principles of problem solving—those techniques that are common to solving broad classes of problems, as opposed to the preparation of individual programs to solve single problems.

Computer Science

COMPUTER SCIENCE In this session, Explorers will begin with an overview of the computer science discipline, and they will then conduct a short, fun investigation that is related to computer science. CATEGORY • Engineering • Computer Science OBJECTIVES By the end of this session, participants will be able to: • Define computer science. • Describe what computer scientists do. • Demonstrate a key computer science and coding concept. SUPPLIES Computer with Internet access. See Activity 3 for suggested activities, and prepare supplies as needed. RESOURCES Reminder: Any time you use an outside source, be sure you review the content in advance and follow the content owner's or website’s permission requirements and guidelines. The following are suggested resources that Advisors may find helpful in planning this session: • Suggested website: United States Bureau of Labor Statistics Occupational Outlook Handbook, http://www.bls.gov/ooh/architecture-and-engineering/home.htm • Suggested website: Code.org, a non-profit dedicated to computer science education, www.code.org • Suggested website: Computer Science Field Guide (created by the University of Canterbury, New Zealand), http://www.csfieldguide.org.nz/en/index.html ADVISOR NOTE: Text in italics should be read aloud to participants. As you engage your post in activities each week, please include comments, discussions, and feedback to the group relating to Character, Leadership, and Ethics. These are important attributes that make a difference in the success of youth in the workplace and in life. ACTIVITY 1 Introduction: What Do Computer Scientists Do? According to Dictionary.com, computer science is the science that deals with the theory and methods of processing information in digital computers, the design of computer hardware and software, and the applications of computers.

How to Think Like a Computer Scientist

How to think like a computer scientist Allen B. Downey C++ Version, First Edition Copyright (C) 1999 Allen B. Downey This book is an Open Source Textbook (OST). Permission is granted to reproduce, store or transmit the text of this book by any means, electrical, mechanical, or biological, in accordance with the terms of the GNU General Public License as published by the Free Software Foundation (version 2). This book is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. The original form of this book is LaTeX source code. Compiling this LaTeX source has the effect of generating a device-independent representation of a textbook, which can be converted to other formats and printed. All intermediate representations (including DVI and Postscript), and all printed copies of the textbook are also covered by the GNU General Public License. The LaTeX source for this book, and more information about the Open Source Textbook project, is available from http://www.cs.colby.edu/~downey/ost or by writing to Allen B. Downey, 5850 Mayflower Hill, Waterville, ME 04901. The GNU General Public License is available from www.gnu.org or by writing to the Free Software Foundation, Inc., 59 Temple Place - Suite 330, Boston, MA 02111-1307, USA. This book was typeset by the author using LaTeX and dvips, which are both free, open-source programs.

Artificial Intelligence

Artificial Intelligence A Very Brief Overview of a Big Field Notes for CSC 100 - The Beauty and Joy of Computing The University of North Carolina at Greensboro What is Artificial Intelligence (AI)? Definition by John McCarthy: Getting a computer to do things which, when done by people, are said to involve intelligence. John McCarthy (September 4, 1927 – October 24, 2011) was an American computer scientist and cognitive scientist. He invented the term "artificial intelligence" (AI), developed the Lisp programming language family, significantly influenced the design of the ALGOL programming language, popularized timesharing, and was very influential in the early development of AI. McCarthy received many accolades and honors, such as the Turing Award for his contributions to the topic of AI, the United States National Medal of Science, and the Kyoto Prize. Some Aspects of AI Natural Language Processing (NLP) Communicating the way people do A form of Human-Computer Interaction (HCI) Machine Learning Performing better with experience: supervised, unsupervised, reinforcement Sensory Input Hearing (processing spoken English), Seeing (image recognition, writing recognition, ...) Planning Deciding on a future plan of action Robotics Includes HCI, NLP, machine vision, planning, ... Computer creativity Computer generated music, art, ... Progress in AI A lot of hype in the early days (late 50's and throughout the 60's). For example: In 1957, Allen Newell said: "Within ten years a digital computer will be the world's chess champion" (wasn't until 1997 that a computer beat the human world champion in a match). Hasn't progressed like people thought, but in past 10 years a lot of progress: ● Handwriting recognition on envelopes (zip codes), check amounts (ATM deposits), ..

Public-Key Cryptography

Informatics in Education, 2018, Vol. 17, No. 1, 93–103 © 2018 Vilnius University DOI: 10.15388/infedu.2018.06 Computational Thinking Enrichment: Public-Key Cryptography Frances ROSAMOND The University of Bergen, Institute of Informatics Thormohens Gate 55, Bergen 5020, Norway email: [email protected] Received: August 2017 Abstract. The Computer Science Unplugged activities and project has been an influential STEM (Science, Technology, Engineering & Mathematics) initiative, providing enrichment and teaching activities supporting computational thinking. Many of its activities are suitable for children. One of the most popular Unplugged activities is “Kid Krypto”, invented by Mike Fellows and Neal Koblitz. Kid Krypto demonstrates the mathematics underlying public-key cryptography without using advanced mathematics. The paper gives an example of a Kid Krypto-style encryption system that is based on disjoint cycles in a graph or network and which is accessible to a very young audience. Also described is the original Kid Krypto system which is based on a version of dominating set called perfect code. The paper urges research scientists to participate in mathematical sciences communication and outreach. Keywords: Kid Krypto, Computer Science Unplugged, cryptography, computational thinking, STEM. 1. Introduction Almost every area of our life is influenced by computers and concepts of Informatics. School systems are recognizing that Informatics is more than programming, use of software packages or word processing. The Informatics curriculum in many countries is being revised or invented to emphasize computational thinking, a term used by Jeannette Wing to describe attitudes and skills of problem solving related to computer science (Wing, 2006). Teachers are seeking classroom activities and methods for implementing the new curriculum requirements.