Transactions

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Selection of Retrieval Techniques for Irradiated Graphite During Reactor Decommissioning - 11587

WM2011 Conference, February 27 - March 3, 2011, Phoenix, AZ Selection of Retrieval Techniques for Irradiated Graphite during Reactor Decommissioning - 11587 D.J. Potter*, R.B. Jarvis*, A.W. Banford*, L. Cordingley*, and M. Grave** * National Nuclear Laboratory, Chadwick House, Birchwood Park, Warrington, Cheshire, WA3 6AE, UK ** Doosan Babcock, Baltic Business Centre, Gateshead, NE8 3DA, UK ABSTRACT Globally, around 230,000 tonnes of irradiated graphite requires retrieval, treatment, management and/or disposal. This waste has arisen from a wide range of reactors, predominantly from the use of graphite moderated reactors for base-load generation, but also from experimental research facilities. Much of the graphite is presently still in the reactor core while other graphite is stored in a variety of forms in waste stores. The first step in the management of this significant waste stream is retrieval of the graphite from its present location. Doosan Babcock and the UK National Nuclear Laboratory are participants in the CARBOWASTE European research project which brings together organisations from a range of countries with an irradiated graphite legacy to address the graphite waste management challenge. This paper describes the issues associated with retrieval of graphite from reactors, potential approaches to graphite retrieval and the information needed to select a particular retrieval method for a specified application. Graphite retrieval is viewed within the context of the wider strategy for the management of irradiated graphite waste streams. The paper identifies the challenges of graphite retrieval and provides some examples where modelling can be used to provide information to support retrievals design and operations. INTRODUCTION A four year collaborative European Project ‘Treatment and Disposal of Irradiated Graphite and other Carbonaceous Waste (CARBOWASTE)’ was launched in April 2008 under the 7th EURATOM Framework Programme [1]. -

Slingtv Fire TV Stick TV Nexus Player Mini Nexus Player TV TV M6 HD Personal Video Recorder 2 M2 Chromecast 3 BV-3100 the Playti

HOME ENTERTAINMENT GAMING CONSOLES AND ACCESSORIES TV Chromecast Fire TV Stick 4th Generation HDMI USB TV Stick Streaming Media Player Wirelessly Stream Multimedia Content Wirelessly Stream Content Stream Movies, Music, TV, Games, & More Built-In Wi-Fi & Ethernet Connectivity 1080p Maximum Resolution Up to 1080p Resolution Support via HDMI Apple AirPlay & iCloud Integration Built-In 802.11b/g/n Wi-Fi Smartphone & Tablet Mirroring Support Supports Up to 1080p via HDMI Integrated HDMI Connector 1GB of RAM + 8GB of Flash Storage Rechargeable Siri Remote Control Control from Portable Devices Fire TV Remote App with Voice Search A8 Processor with 64-bit Architecture USB Port or AC Powered Dedicated Bluetooth Remote Control Siri Search/Multidirectional Navigation Compact Design Dual-Band Wi-Fi Connectivity 32GB APTV32 ................................................................................149.00 Compact Design at 3.3” Long 64GB APTV64 ................................................................................199.00 GOCC ................................................................................................ 34.95 AMFTVS ............................................................................................ 39.99 TV 3 TV Media Player Streaming Media Player Streaming Stick Up to 1080p Resolution Over 2,000 Entertainment Channels Stream Over 1000 Entertainment Channels Dual USB 2.0 Inputs Dual-Band Wi-Fi & Ethernet Connectivity Dual-Band 802.11a/b/g/n Wi-Fi Access Streaming Services Outputs -

Universal Remote Code Book

Universal Remote Code Book www.hestia-france.com TV CENTURION 0051 0169 CENTURY 0000 A CGE 0129 0047 0131 0043 ACER 1484 CIMLINE 0009 0028 ACME 0013 CITY 0009 ADA 0008 CLARIVOX 0169 0037 ADC 0012 0008 CLATRONIC 0009 0011 0051 0002 0083 ADMIRAL 0019 0108 0002 0001 0047 0003 0129 0030 0043 0000 COMBITECH 0248 ADYSON 0003 CONCORDE 0009 AGAZI 0002 CONDOR 0198 0051 0083 0003 0245 AGB 0123 CONRAC 0038 1395 AIKO 0003 0009 0004 CONTEC 0003 0009 0027 0030 0029 AIWA 0184 0248 0291 CONTINENTAL EDISON 0022 0111 0036 0045 0126 AKAI 1410 0011 0086 0009 0068 0139 0046 0004 0006 0008 0051 0061 COSMEL 0009 0088 0169 0200 0133 0141 CPRTEC 0156 0069 CROSLEY 0129 0131 0000 0043 AKIBA 0011 CROWN 0009 0169 0083 0047 0051 AKURA 0169 0074 0002 0009 0011 0245 0121 0043 0071 CS ELECTRONICS 0011 0129 0003 ALBA 0028 0027 0009 0011 0003 CTC 0129 0068 0083 0169 0047 0245 CTC CLATRONIC 0014 0248 0162 0062 CYBERCOM 0177 0038 0171 0002 0009 ALBIRAL 0037 0206 0205 0207 0208 0210 ALKOS 0164 0169 0042 0044 0127 0047 ALLORGAN 0157 0026 0061 0063 0067 0068 0103 ALLSTAR 0051 0107 0115 0154 0168 0185 ALTUS 0042 0228 0209 0343 0924 0933 AMPLIVISION 0003 0248 0291 AMSTRAD 0011 0009 0068 0074 0002 CYBERMAXX 0177 0038 0171 0002 0009 0108 0071 0069 0030 0123 0206 0200 0205 0207 0208 0013 0210 0211 0169 0015 0042 ANAM 0009 0065 0109 0044 0047 0048 0049 0061 ANGLO 0009 0063 0067 0068 0087 0103 ANITECH 0009 0002 0043 0109 0107 0115 0127 0154 0155 ANSONIC 0009 0014 0168 0170 0185 0228 0229 AOC 0134 0209 0218 1005 0894 0343 ARC EN CIEL 0126 0045 0139 0924 0933 0248 0291 ARCAM 0003 CYBERTRON -

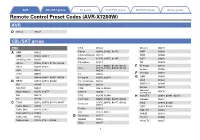

Remote Control Preset Codes (AVR-X7200W) AVR

AVR CBL/SAT group TV group VCR/PVR group BD/DVD group Audio group Remote Control Preset Codes (AVR-X7200W) AVR D Denon 73347 CBL/SAT group CBL CCS 03322 Director 00476 A ABN 03322 Celrun 02959, 03196, 03442 DMT 03036 ADB 01927, 02254 Channel Master 03118 DSD 03340 Alcatel-Lucent 02901 Charter 01376, 01877, 02187 DST 03389 Amino 01602, 01481, 01822, 02482 Chunghwa 01917 DV 02979 Arion 03034, 03336 01877, 00858, 01982, 02345, E Echostar 03452 Cisco 02378, 02563, 03028, 03265, Arris 02187 03294 Entone 02302 AT&T 00858 CJ 03322 F Freebox 01976 au 03444, 03445, 03485, 03534 CJ Digital 02693, 02979 G GBN 03407 B BBTV 02516, 02518, 02980 CJ HelloVision 03322 GCS 03322 Bell 01998 ClubInternet 02132 GDCATV 02980 BIG.BOX 03465 CMB 02979, 03389 Gehua 00476 General Bright House 01376, 01877 CMBTV 03498 Instrument 00476 BSI 02979 CNS 02350, 02980 H Hana TV 02681, 02881, 02959 BT 02294 Com Hem 00660, 01666, 02015, 02832 Handan 03524 C C&M 02962, 02979, 03319, 03407 01376, 00476, 01877, 01982, HCN 02979, 03340 Comcast 02187 Cable Magico 03035 HDT 02959, 03465 Coship 03318 Cable One 01376, 01877 Hello TV 03322 Cox 01376, 01877 Cable&Wireless 01068 HelloD 02979 Daeryung 01877 Cablecom 01582 D Hi-DTV 03500 DASAN 02683 Cablevision 01376, 01877, 03336 Hikari TV 03237 Digeo 02187 1 AVR CBL/SAT group TV group VCR/PVR group BD/DVD group Audio group Homecast 02977, 02979, 03389 02692, 02979, 03196, 03340, 01982, 02703, 02752, 03474, L LG 03389, 03406, 03407, 03500 Panasonic 03475 Huawei 01991 LG U+ 02682, 03196 Philips 01582, 02174, 02294 00660, 01981, 01983, -

NRC Collection of Abbreviations

Nuclear Regulatory Commission AVAILABILITY NOTICE Availability of Reference Materials Cited in NRC Publications Most documents cited in NRC publications will be available from one of the following sources: 1. The NRC Public Document Room, 2120 L Street, NW., Lower Level, Washington, DC 20555-0001 2. The Superintendent of Documents, U.S. Government Printing Office, P.O. Box 37082, Washington, DC 20402-9328 3. The National Technical Information Service, Springfield, VA 22161-0002 Although the listing that follows represents the majority of documents cited in NRC publications, it is not intended to be exhaustive. Referenced documents available for inspection and copying for a fee from the NRC Public Document Room include NRC correspondence and internal NRC memoranda; NRC bulletins, circulars, information notices, inspection and investigation notices; licensee event reports; vendor reports and correspondence; Commission papers; and applicant and licensee documents and correspondence. The following documents in the NUREG series are available for purchase from the Government Printing Office: formal NRC staff and contractor reports, NRC-sponsored conference proceedings, international agreement reports, grantee reports, and NRC booklets and brochures. Also available are regulatory guides, NRC regulations in the Code of Federal Regulations, and Nuclear Regulatory Commission Issuances. Documents available from the National Technical Information Service include NUREG-series reports and technical reports prepared by other Federal agencies and reports prepared by the Atomic Energy Commission, forerunner agency to the Nuclear Regulatory Commission. Documents available from public and special technical libraries include all open literature items, such as books, journal articles, and transactions. -

Raspberry Pi As an Ad Blocking Access Point Created by Justin Cooper

Raspberry Pi as an Ad Blocking Access Point Created by Justin Cooper Last updated on 2018-08-22 03:37:13 PM UTC Guide Contents: Overview; Preparation; Install Software; Improving Performance (Pixelserv, Apache); FAQ (Does this work with SSL traffic? Will this work as a WiFi-to-WiFi bridge? (Or WiFi-to-Cellular) My resolv.conf gets overwritten on reboot). © Adafruit Industries https://learn.adafruit.com/raspberry-pi-as-an-ad-blocking-access-point Overview This tutorial will show you how to use your Raspberry Pi as a WiFi access point that blocks ads by default for any devices using it. This is really neat in that it works for your Android or iOS device, your Xbox 360, TiVo, laptop, and more, without needing to customize any of those devices other than to use your Raspberry Pi as the access point for WiFi. Using an ad-blocker can be useful for conserving bandwidth, helping out low-power devices, or for keeping your sanity while browsing the web! I do suggest whitelisting your favorite ad-supported sites so that you can continue to support them (this tutorial shows how to do this as well). It's not easy keeping up with those wily ad serving companies, so we'll also set up a way to keep your ad blocking lists up to date as part of this tutorial. -

Nuclear Materials and Waste Management – RSRL Harwell Credible & Preferred Options (Gates A & B)

Exotic Fuels, Nuclear Materials and Waste Management – RSRL Harwell Credible & Preferred Options (Gates A & B) August 2011 Issue 1 Doc Ref: SMS/TS/C3-EF/RSRL/001/A-B Contents: Executive Summary; 1 Background; 1.1 Project Objective; 1.2 Harwell Fuel, Nuclear Materials and Wastes; 1.4 What is Low-Enriched Uranium (LEU)?; 1.5 What are concrete-lined drums?; 1.6 What are contact-handled Intermediate Level Waste (CHILW) drums?; 1.7 Hazard Potential and Reduction?; 2 Strategic Case; 2.1 Existing Situation -

Mounting Bracket for Streaming Boxes and Media Players

Mounting Bracket for Streaming Boxes and Media Players Hides a Streaming Device (Apple TV®, Roku®, Xiaomi® or Raspberry Pi® and More) up to 5 kg (11 lbs.) Behind Your TV, Attaches to TV/TV Mount/Wall, Steel, Black Part No.: 462082 EAN-13: 0766623462082 | UPC: 766623462082 Behind-the-TV Stream Box / Media Player Mounting Bracket – hide a streaming device and cables on the back of the TV For a clean, organized look in your home theater, you have to hide the cables and equipment. The Manhattan Mounting Bracket for Streaming Boxes and Media Players helps you easily secure and conceal your Apple TV®, Roku®, Xiaomi®, Raspberry Pi®, TiVo® or other streaming box / media player and its cables behind your TV. It supports a weight capacity up to 5 kg (11 lbs.) and can mount to your television’s VESA pattern. Two included safety straps offer extra security. If your TV is installed on the wall using a VESA mount, you can attach the bracket to the same wall mount. The Mounting Bracket is also great for hiding a router, repeater, slim gaming console or other similarly sized device. Installation is as simple as it sounds with all materials included. Features: Hides a streaming device (Apple TV®, Roku®, Xiaomi®, Raspberry Pi®, TiVo® and more) up to 5 kg (11 lbs.) behind your TV Attaches to your TV's VESA mounting holes, your TV mount or your wall For more information on Manhattan products, consult your local dealer or visit www.manhattan-products.com. All names of products or services mentioned herein are trademarks or registered trademarks of their respective owners. -

R:\TEMP\Bobbi\RDD-8 3-16-04 Reprint.Wpd

OFFICIAL USE ONLY RESTRICTED DATA DECLASSIFICATION DECISIONS 1946 TO THE PRESENT (RDD-8) January 1, 2002 U.S. Department of Energy Office of Health, Safety and Security Office of Classification Contains information which may be exempt from public release under the Freedom of Information Act (5 U.S.C. 552), exemption number(s) 2. Approval by the Department of Energy prior to public release is required. Reviewed by: Richard J. Lyons Date: 3/20/2002 NOTICE This document provides historical perspective on the sequence of declassification actions performed by the Department of Energy and its predecessor agencies. It is meant to convey the amount and types of information declassified over the years. Although the language of the original declassification authorities is cited verbatim as much as possible to preserve the historical intent of the declassification, THIS DOCUMENT IS NOT TO BE USED AS THE BASIS FOR DECLASSIFYING DOCUMENTS AND MATERIALS without specific authorization from the Director, Information Classification and Control Policy. Classification guides designed for that specific purpose must be used. FOREWORD This document supersedes Restricted Data Declassification Decisions - 1946 To The Present (RDD-7), January 1, 2001. This is the eighth edition of a document first published in June 1994. This latest edition includes editorial corrections to RDD-7, all declassification actions that have been made since the January 1, 2001, publication date of RDD-7 and any additional declassification actions which were subsequently discovered or confirmed. Note that the terms “declassification” or “declassification action,” as used in this document, refer to changes in classification policy which result in a specific fact or concept that was classified in the past being now unclassified. -

Set up Your TiVo® Mini in 5 Easy Steps

Legal Notices Safety Instructions ® This product contains software copyright © 2013 TiVo Inc. All rights reserved. Save These Safety Instructions. Before you begin Set up your TiVo Mini in 5 easy steps. Your use of this product is subject to TiVo's Service Agreement and Privacy Policy, visit www.tivo.com/policies for the latest versions. TiVo and the TiVo logo are trademarks or registered trademarks of TiVo Inc. or its subsidiaries, 2160 Gold Street, P.O. Box 2160, Alviso, CA Make sure all your equipment is turned off and the TiVo Mini is unplugged. 95002-2160. The Mini is not designed to support any load. To ensure proper function, make sure that the air vents are not blocked, do not put your TiVo Mini in Patented. U.S. patent numbers at www.tivo.com/patents. an enclosed space, and do not place anything on top of the TiVo Mini. CableCARD is a trademark of Cable Television Laboratories, Inc. This product is for use only with listed ITE (Information Technology Equipment). Important! HDMI is a trademark or registered trademark of HDMI Licensing LLC in the United States and other countries. Safety information MoCA is a registered trademark of Multimedia over Coax Alliance in the U.S. and other countries. This product was designed and manufactured to meet strict quality and safety standards. There are, however, some installation and operation Please note that the TiVo Mini requires connection to a 4-tuner whole-home TiVo DVR such as a TiVo Premiere 4 or TiVo Premiere XL4/Elite This product contains open source software. -

Wall Mount for Streaming Boxes and Media Players

Wall Mount for Streaming Boxes and Media Players Holds a Streaming Device (Apple TV®, Roku®, Xiaomi® or Raspberry Pi® and More) up to 5 kg (11 lbs.), Extendable 47 - 76 mm (1.85 - 2.99 in.), Steel, Black Part No.: 462075 EAN-13: 0766623462075 | UPC: 766623462075 Stream Box / Media Player Wall Mount – declutter your home theater for a clean, organized look When it comes to the look of your home theater, less is more. If you aim for a clean and organized approach, you want to move your media accessories off your media furniture or, especially, stop them from dangling from your mounted TV. You can now put them safely on the wall with the Manhattan Wall Mount for Streaming Boxes and Media Players like Apple TV®, Roku®, Xiaomi, Raspberry Pi, TiVo® and more. With a weight capacity of 5 kg (11 lbs.), it easily mounts to walls, shelves and other surfaces with all the installation hardware you need in the package contents, including a nylon tie to always keep your device in place. The shelf extends for a usable depth of 39 - 68 mm (1.53 - 2.68 in.) with an open design for easy access to ports, buttons and cables on either side. If you want to declutter not just your home theater setup, this mount is also suitable for routers, repeaters, Mini PCs, slim gaming consoles and more. Features: Holds a streaming device (Apple TV®, Roku®, Xiaomi, Raspberry Pi, TiVo® and For more information on Manhattan products, consult your local dealer or visit www.manhattan-products.com. -

A Short History of Reactors: The First Generation

A Short History of Reactors: The First Generation. Bertrand BARRÉ, Scientific Advisor AREVA, Pr. Emeritus INSTN. Nuclear Power – B. Barré - Garching – February 2010. Oklo, Gabon: Generation 0. Nuclear reactor « Generations » (timeline, 1950 to 2090): Generation I, pioneer facilities; Generation II, operating reactors; Generation III, advanced reactors; Generation IV, future nuclear systems. Fission 1938 - 1942. 1938: Fermi plays with neutrons & U. Hahn-Meitner say « fission! » 1939: Joliot et al. « chain reaction ». 1942: Stagg Field, December 2, 1942. Prospects & Prerequisites to Renaissance – B. Barré Gassummit 2009. The 50s: Nuclear Electricity. 1956: Inauguration of Calder Hall by Elizabeth II. 1951: EBR-1 lights 4 bulbs. 1954: Obninsk, 5 MWe. Obninsk 5 MWe NPP. Calder Hall: from Opening to Decommissioning. Paleontology. Many Possible Combinations. Fissile material: U235, Pu, U233. Fertile material: U238, Th232. Moderator: D2O, graphite, H2O, or none. Fuel composition: metal, oxide, carbide, nitride, salt, solid, liquid, suspension. Fuel geometry: cylinder, rod, pin, sphere, particle. Coolant: air, H2O, D2O, CO2, He, Na, Pb. Cycle: direct, indirect.