Quantitative Approaches to Understanding Song Lyrics and Their Interpretations

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Excess Karaoke Master by Artist

XS Master by ARTIST Artist Song Title Artist Song Title (hed) Planet Earth Bartender TOOTIMETOOTIMETOOTIM ? & The Mysterians 96 Tears E 10 Years Beautiful UGH! Wasteland 1999 Man United Squad Lift It High (All About 10,000 Maniacs Candy Everybody Wants Belief) More Than This 2 Chainz Bigger Than You (feat. Drake & Quavo) [clean] Trouble Me I'm Different 100 Proof Aged In Soul Somebody's Been Sleeping I'm Different (explicit) 10cc Donna 2 Chainz & Chris Brown Countdown Dreadlock Holiday 2 Chainz & Kendrick Fuckin' Problems I'm Mandy Fly Me Lamar I'm Not In Love 2 Chainz & Pharrell Feds Watching (explicit) Rubber Bullets 2 Chainz feat Drake No Lie (explicit) Things We Do For Love, 2 Chainz feat Kanye West Birthday Song (explicit) The 2 Evisa Oh La La La Wall Street Shuffle 2 Live Crew Do Wah Diddy Diddy 112 Dance With Me Me So Horny It's Over Now We Want Some Pussy Peaches & Cream 2 Pac California Love U Already Know Changes 112 feat Mase Puff Daddy Only You & Notorious B.I.G. Dear Mama 12 Gauge Dunkie Butt I Get Around 12 Stones We Are One Thugz Mansion 1910 Fruitgum Co. Simon Says Until The End Of Time 1975, The Chocolate 2 Pistols & Ray J You Know Me City, The 2 Pistols & T-Pain & Tay She Got It Dizm Girls (clean) 2 Unlimited No Limits If You're Too Shy (Let Me Know) 20 Fingers Short Dick Man If You're Too Shy (Let Me 21 Savage & Offset &Metro Ghostface Killers Know) Boomin & Travis Scott It's Not Living (If It's Not 21st Century Girls 21st Century Girls With You 2am Club Too Fucked Up To Call It's Not Living (If It's Not 2AM Club Not -

MUSIC NOTES: Exploring Music Listening Data As a Visual Representation of Self

MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall A thesis submitted in partial fulfillment of the requirements for the degree of: Master of Design University of Washington 2016 Committee: Kristine Matthews Karen Cheng Linda Norlen Program Authorized to Offer Degree: Art ©Copyright 2016 Chad Philip Hall University of Washington Abstract MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall Co-Chairs of the Supervisory Committee: Kristine Matthews, Associate Professor + Chair Division of Design, Visual Communication Design School of Art + Art History + Design Karen Cheng, Professor Division of Design, Visual Communication Design School of Art + Art History + Design Shelves of vinyl records and cassette tapes spark thoughts and memories at a quick glance. In the shift to digital formats, we lost physical artifacts but gained data as a rich, but often hidden artifact of our music listening. This project tracked and visualized the music listening habits of eight people over 30 days to explore how this data can serve as a visual representation of self and present new opportunities for reflection. 1 exploring music listening data as MUSIC NOTES a visual representation of self CHAD PHILIP HALL 2 A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF: master of design university of washington 2016 COMMITTEE: kristine matthews karen cheng linda norlen PROGRAM AUTHORIZED TO OFFER DEGREE: school of art + art history + design, division -

Cruz's Cell Phone Content and Internet Searches

11/8/2018 Cruz’s Cell Phone Content and Internet Searches Special Agent Randy Camp Special Agent Chuck Massucci Sergeant John Suess 1 Cruz’s Cell Phone Content and Internet Searches • Phone contents prior to February 01, 2018 • Phone contents from February 01 – 14 • Phone content and Cruz’s travels on February 14 • Nikolas Cruz’s weapons • Excerpts from interview with Snead family • James - Father • Kimberly - Mother • Jameson “JT” – friend of Cruz 2 1 11/8/2018 Nikolas Cruz’s Cell Phone Data without a timestamp or prior to February 01, 2018 3 Cruz’s Cell Phone – Searches • November 14, 2017: • “shooting people massacre” • November 15, 2017: • “rape caught on video” • “armed robber gets shot buy every customer” 4 2 11/8/2018 Cruz’s Cell Phone – Notes December 30, 2017 at 10:27 pm “Control your breathing and trigger pull. Your the one who sights in the rifle for yourself.adjust the scope to your shooting ability. Keep that adjustment every time.you have to shoot to yourself only to one self.my trigger squeeze is my ones ability.samething every time.” 5 Cruz’s Cell Phone – Notes January 20, 2018 at 5:58 pm “basketball court full of Targets still thinking of ways to kill people” 6 3 11/8/2018 Cruz’s Cell Phone – Notes January 21, 2018 at 3:35 pm “My life is a mess idk what to do anymore. Everyday I get even more agitated at everyone cause my life is unfair. Everything and everyone is happy except for me I want to kill people but I don't know how I can do it. -

Eyez on Me 2PAC – Changes 2PAC - Dear Mama 2PAC - I Ain't Mad at Cha 2PAC Feat

2 UNLIMITED- No Limit 2PAC - All Eyez On Me 2PAC – Changes 2PAC - Dear Mama 2PAC - I Ain't Mad At Cha 2PAC Feat. Dr DRE & ROGER TROUTMAN - California Love 311 - Amber 311 - Beautiful Disaster 311 - Down 3 DOORS DOWN - Away From The Sun 3 DOORS DOWN – Be Like That 3 DOORS DOWN - Behind Those Eyes 3 DOORS DOWN - Dangerous Game 3 DOORS DOWN – Duck An Run 3 DOORS DOWN – Here By Me 3 DOORS DOWN - Here Without You 3 DOORS DOWN - Kryptonite 3 DOORS DOWN - Landing In London 3 DOORS DOWN – Let Me Go 3 DOORS DOWN - Live For Today 3 DOORS DOWN – Loser 3 DOORS DOWN – So I Need You 3 DOORS DOWN – The Better Life 3 DOORS DOWN – The Road I'm On 3 DOORS DOWN - When I'm Gone 4 NON BLONDES - Spaceman 4 NON BLONDES - What's Up 4 NON BLONDES - What's Up ( Acoustative Version ) 4 THE CAUSE - Ain't No Sunshine 4 THE CAUSE - Stand By Me 5 SECONDS OF SUMMER - Amnesia 5 SECONDS OF SUMMER - Don't Stop 5 SECONDS OF SUMMER – Good Girls 5 SECONDS OF SUMMER - Jet Black Heart 5 SECONDS OF SUMMER – Lie To Me 5 SECONDS OF SUMMER - She Looks So Perfect 5 SECONDS OF SUMMER - Teeth 5 SECONDS OF SUMMER - What I Like About You 5 SECONDS OF SUMMER - Youngblood 10CC - Donna 10CC - Dreadlock Holiday 10CC - I'm Mandy ( Fly Me ) 10CC - I'm Mandy Fly Me 10CC - I'm Not In Love 10CC - Life Is A Minestrone 10CC - Rubber Bullets 10CC - The Things We Do For Love 10CC - The Wall Street Shuffle 30 SECONDS TO MARS - Closer To The Edge 30 SECONDS TO MARS - From Yesterday 30 SECONDS TO MARS - Kings and Queens 30 SECONDS TO MARS - Teeth 30 SECONDS TO MARS - The Kill (Bury Me) 30 SECONDS TO MARS - Up In The Air 30 SECONDS TO MARS - Walk On Water 50 CENT - Candy Shop 50 CENT - Disco Inferno 50 CENT - In Da Club 50 CENT - Just A Lil' Bit 50 CENT - Wanksta 50 CENT Feat. -

Stage Hypnosis in the Shadow of Svengali: Historical Influences, Public Perceptions, and Contemporary Practices

STAGE HYPNOSIS IN THE SHADOW OF SVENGALI: HISTORICAL INFLUENCES, PUBLIC PERCEPTIONS, AND CONTEMPORARY PRACTICES Cynthia Stroud A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2013 Committee: Dr. Lesa Lockford, Advisor Dr. Richard Anderson Graduate Faculty Representative Dr. Scott Magelssen Dr. Ronald E. Shields © 2013 Cynthia Stroud All Rights Reserved iii ABSTRACT Dr. Lesa Lockford, Advisor This dissertation examines stage hypnosis as a contemporary popular entertainment form and investigates the relationship between public perceptions of stage hypnosis and the ways in which it is experienced and practiced. Heretofore, little scholarly attention has been paid to stage hypnosis as a performance phenomenon; most existing scholarship provides psychological or historical perspectives. In this investigation, I employ qualitative research methodologies including close reading, personal interviews, and participant-observation, in order to explore three questions. First, what is stage hypnosis? To answer this, I use examples from performances and from guidebooks for stage hypnotists to describe structural and performance conventions of stage hypnosis shows and to identify some similarities with shortform improvisational comedy. Second, what are some common public perceptions about stage hypnosis? To answer this, I analyze historical narratives, literary and dramatic works, film, television, and digital media. I identify nine -

Freestyle Rap Practices in Experimental Creative Writing and Composition Pedagogy

Illinois State University ISU ReD: Research and eData Theses and Dissertations 3-2-2017 On My Grind: Freestyle Rap Practices in Experimental Creative Writing and Composition Pedagogy Evan Nave Illinois State University, [email protected] Follow this and additional works at: https://ir.library.illinoisstate.edu/etd Part of the African American Studies Commons, Creative Writing Commons, Curriculum and Instruction Commons, and the Educational Methods Commons Recommended Citation Nave, Evan, "On My Grind: Freestyle Rap Practices in Experimental Creative Writing and Composition Pedagogy" (2017). Theses and Dissertations. 697. https://ir.library.illinoisstate.edu/etd/697 This Dissertation is brought to you for free and open access by ISU ReD: Research and eData. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of ISU ReD: Research and eData. For more information, please contact [email protected]. ON MY GRIND: FREESTYLE RAP PRACTICES IN EXPERIMENTAL CREATIVE WRITING AND COMPOSITION PEDAGOGY Evan Nave 312 Pages My work is always necessarily two-headed. Double-voiced. Call-and-response at once. Paranoid self-talk as dichotomous monologue to move the crowd. Part of this has to do with the deep cuts and scratches in my mind. Recorded and remixed across DNA double helixes. Structurally split. Generationally divided. A style and family history built on breaking down. Evidence of how ill I am. And then there’s the matter of skin. The material concerns of cultural cross-fertilization. Itching to plant seeds where the grass is always greener. Color collaborations and appropriations. Writing white/out with black art ink. Distinctions dangerously hidden behind backbeats or shamelessly displayed front and center for familiar-feeling consumption. -

Physical Therapy Dry Needling Sunrise Page 25 DRAFT DRAFT

Appendix A Request from Legislature and Draft Bill DRAFT Physical Therapy Dry Needling Sunrise Page 25 DRAFT DRAFT Physical Therapy Dry Needling Sunrise Page 26 DRAFT S-3546.1 SENATE BILL 6374 State of Washington 64th Legislature 2016 Regular Session By Senators Dammeier, Becker, Cleveland, Warnick, and Jayapal Read first time 01/18/16. Referred to Committee on Health Care. 1 AN ACT Relating to allowing physical therapists to perform dry 2 needling; reenacting and amending RCW 18.74.010; and adding a new 3 section to chapter 18.74 RCW. 4 BE IT ENACTED BY THE LEGISLATURE OF THE STATE OF WASHINGTON: 5 Sec. 1. RCW 18.74.010 and 2014 c 116 s 3 are each reenacted and 6 amended to read as follows: 7 The definitions in this section apply throughout this chapter 8 unless the context clearly requires otherwise. 9 (1) "Authorized health care practitioner" means and includes 10 licensed physicians, osteopathic physicians, chiropractors, 11 naturopaths, podiatric physicians and surgeons, dentists, and 12 advanced registered nurse practitioners: PROVIDED, HOWEVER, That 13 nothing herein shall be construed as altering the scope of practice 14 of such practitioners as defined in their respective licensure laws. 15 (2) "Board" means the board of physical therapy created by RCW 16 18.74.020. 17 (3) "Close supervision" means that the supervisor has personally 18 diagnosed the condition to be treated and has personally authorized 19 the procedures to be performed. The supervisor is continuously on- 20 site and physically present in the operatory while the procedures are SB 6374 Physical Therapy Dry Needling Sunrise Page 27 DRAFT • 1 performed and capable of responding immediately in the event of an 2 emergency. -

Characteristics of Fame-Seeking Individuals Who Completed Or Attempted Mass Murder in the United States Angelica Wills Walden University

Walden University ScholarWorks Walden Dissertations and Doctoral Studies Walden Dissertations and Doctoral Studies Collection 2019 Characteristics of Fame-Seeking Individuals Who Completed or Attempted Mass Murder in the United States Angelica Wills Walden University Follow this and additional works at: https://scholarworks.waldenu.edu/dissertations Part of the Personality and Social Contexts Commons This Dissertation is brought to you for free and open access by the Walden Dissertations and Doctoral Studies Collection at ScholarWorks. It has been accepted for inclusion in Walden Dissertations and Doctoral Studies by an authorized administrator of ScholarWorks. For more information, please contact [email protected]. Walden University College of Social and Behavioral Sciences This is to certify that the doctoral dissertation by Angelica Wills has been found to be complete and satisfactory in all respects, and that any and all revisions required by the review committee have been made. Review Committee Dr. Eric Hickey, Committee Chairperson, Psychology Faculty Dr. Christopher Bass, Committee Member, Psychology Faculty Dr. John Schmidt, University Reviewer, Psychology Faculty Chief Academic Officer Eric Riedel, Ph.D. Walden University 2019 Abstract Characteristics of Fame-Seeking Individuals Who Completed or Attempted Mass Murder in the United States by Angelica Wills MS, Walden University, 2016 BA, Stockton University, 2008 Dissertation Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy Clinical Psychology Walden University June 2019 Abstract Previous researchers have found mass murderers characterized as loners, victims of bullying, goths, and individuals who had a psychotic break. A gap in the literature that remained concerned the motive and mindset of mass murderers before their attack, particularly those who seek fame, and why they are motivated by such violent intentions. -

February 26 March 4

FEBRUARY 26 ISSUE MARCH 4 Orders Due January 29 5 Orders Due February 5 axis.wmg.com 2/23/16 AUDIO & VIDEO RECAP ARTIST TITLE LBL CNF UPC SEL # SRP ORDERS DUE Hawkwind Space Ritual (2LP 180 Gram Vinyl) PRH A 825646120758 407156 34.98 1/22/16 Jethro Tull Living In The Past (2LP 180 Gram Vinyl) PRH A 825646041930 551965 34.98 1/22/16 Morrissey Bona Drag (2LP) RRW A 825646775545 542347 34.98 1/22/16 Red Hot Chili Peppers I'm With You (2LP) WB A 093624924340 551828 29.98 1/22/16 Red Hot Chili Peppers Stadium Arcadium (4LP) WB A 093624439110 44391 39.98 1/22/16 Last Update: 01/05/16 For the latest up to date info on this release visit axis.wmg.com. ARTIST: Hawkwind TITLE: Space Ritual (2LP 180 Gram Vinyl) Label: PRH/Rhino/Parlophone Config & Selection #: A 407156 Street Date: 02/23/16 Order Due Date: 01/22/16 UPC: 825646120758 Box Count: 20 Unit Per Set: 2 SRP: $34.98 Alphabetize Under: H TRACKS Full Length Vinyl 1 Side A Side B 01 Earth Calling 01 Lord Of Light 02 Born To Go 02 Black Corridor 03 Down Through The 03 Space Is Deep Night 04 Electronic No 1 04 The Awakening Full Length Vinyl 2 Side A Side B 01 Orgone Accumulator 01 Seven By Seven 02 Upside Down 02 Sonic Attack 03 10 Seconds Of Forever 03 Time We Left This World 04 Brainstorm Today 04 Master Of The Universe 05 Welcome To The Future ALBUM FACTS Genre: Rock Description: Recorded live in December 1972 and released the following year, Space Ritual is an excellent document of Hawkwind's classic lineup, underscoring the group's status as space rock pioneers. -

Freddie Mercury – the Man Behind the Music

Freddie Mercury – The Man Behind the Music Submitted For: Music 1A: Psychology of Music (2016-17) Word Count: 1851 Introduction Ask any modern musician in the Western world and they are bound to know of Freddie Mercury – the man himself or any of the work he was involved in. As the frontman of the spectacular band Queen, Mercury spent much of his life in the limelight and under the scrutiny of the public eye and ear. However, upon first listening, one is ignorant of the meaning of his music and the wisdom in the words of his songs, leading us to ask: who was the man behind the music? There are a multitude of factors contributing to the formation of Mercury’s musical identity: his cultural heritage, education and even his sexuality. It is through exploration of these parts of the musician’s life that we can determine what took the young Farrokh Bulsara and shaped him into the musical hero that is Freddie Mercury. Childhood and Education On 5 September 1946, Farrokh Bulsara was born to parents Bomi and Jer, on the island of Zanzibar.1 From the age of eight, Farrokh attended the English boarding school St. Peter’s, near Bombay, and began to show an interest in music at the same time in his life. His mother recalls that her son would ‘stack the singles to play constantly’ on the family’s record player, with any type of music he could find (mostly Indian music, and the odd Western record when they became available).2 Prompted by a letter of recommendation written by the headmaster at St. Peter’s, the Bulsara family paid the extra fees to the school for Farrokh to receive piano lessons. -

Read Razorcake Issue #48 As a PDF

RIP THIS PAGE OUT If you wish to donate through the mail, please rip this page out and send it to: Razorcake/Gorsky Press, Inc., Attn: Nonprofit Manager, PO Box 42129, Los Angeles, CA 90042. Form fields: Name; Address; E-mail; Phone; Company name if giving a corporate donation; Name if giving on behalf of someone; Donation amount. Razorcake is a bona fide nonprofit music magazine dedicated to supporting independent music culture. All donations, subscriptions, and orders directly from us, regardless of amount, have been large components to our continued survival. Razorcake has been very fortunate. Why? By the mere fact that we still exist today. By the fact that we have over one hundred regular contributors, in addition to volunteers coming in every week day, at a time when our print media brethren are getting knocked down and out. What you may not realize is that the fanciest part about Razorcake is the zine you’re holding in your hands. We put everything we have into it. We pay attention to the pragmatic details that support our ideology. Our entire operations are run out of a 500 square foot basement. We don’t outsource any labor we can do ourselves (we don’t own a printing press or run the postal service). We’re self-reliant and take great pains to cover a booming form of music that flourishes outside of the music industry, that isn’t locked into a fair weather scene or subgenre. So here’s to another year of gritted teeth, high fives, and staring at disbelief at a brand new record spinning on a turntable, improving our quality of life with each spin. If you would like to give Razorcake some -

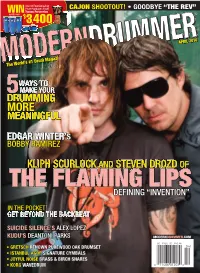

Drumming More Meaningful

WIN One Of Four Amazing Prize Packages From Tycoon Percussion worth over $3,400 • CAJON SHOOTOUT! • GOODBYE “THE REV” APRIL 2010 The World’s #1 Drum Magazine 5 WAYS TO MAKE YOUR DRUMMING MORE MEANINGFUL EDGAR WINTER’S BOBBY RAMIREZ KLIPH SCURLOCK AND STEVEN DROZD OF THE FLAMING LIPS DEFINING “INVENTION” IN THE POCKET GET BEYOND THE BACKBEAT SUICIDE SILENCE’S ALEX LOPEZ KUDU’S DEANTONI PARKS MODERNDRUMMER.COM • GRETSCH RENOWN PUREWOOD OAK DRUMSET • ISTANBUL AGOP SIGNATURE CYMBALS • JOYFUL NOISE BRASS & BIRCH SNARES • KORG WAVEDRUM CONTENTS Volume 34, Number 4 • Cover photo by Timothy Herzog Timothy Herzog Paul La Raia 58 46 42 FEATURES 42 SUICIDE SILENCE’S ALEX LOPEZ 14 UPDATE TESLA’S Over-the-top rock? Minimalist metal? Who cares what bag they put him in— TROY LUCCKETTA Suicide Silence’s drummer has chops, and he knows how to use ’em. ARK’S JOHN MACALUSO 46 THE FLAMING LIPS’ JACKYL’S CHRIS WORLEY 16 GIMME 10! KLIPH SCURLOCK AND STEVEN DROZD BOBBY SANABRIA The unfamiliar beats and tones of the Lips’ trailblazing new studio album, Embryonic, were born from double-drummer experiments conducted in an empty house. How the 36 SLIGHTLY OFFBEAT timekeepers in rock’s most exploratory band define invention. ERIC FISCHER 38 A DIFFERENT VIEW 58 KUDU’S DEANTONI PARKS PETER CASE Once known as a drum ’n’ bass pioneer, the rhythmic phenom is harder than ever to pigeonhole. But for sheer bluster, attitude, and uniqueness on the kit, look no further. 76 PORTRAITS MUTEMATH’S DARREN KING MD DIGITAL SUBSCRIBERS! When you see this icon, click on a shaded box on the MARK SHERMAN QUARTET’S TIM HORNER page to open the audio player.