The Role of Online Discussion Forums During a Public Health Emergency

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Songs by Artist

Reil Entertainment Songs by Artist Karaoke by Artist Title Title &, Caitlin Will 12 Gauge Address In The Stars Dunkie Butt 10 Cc 12 Stones Donna We Are One Dreadlock Holiday 19 Somethin' Im Mandy Fly Me Mark Wills I'm Not In Love 1910 Fruitgum Co Rubber Bullets 1, 2, 3 Redlight Things We Do For Love Simon Says Wall Street Shuffle 1910 Fruitgum Co. 10 Years 1,2,3 Redlight Through The Iris Simon Says Wasteland 1975 10, 000 Maniacs Chocolate These Are The Days City 10,000 Maniacs Love Me Because Of The Night Sex... Because The Night Sex.... More Than This Sound These Are The Days The Sound Trouble Me UGH! 10,000 Maniacs Wvocal 1975, The Because The Night Chocolate 100 Proof Aged In Soul Sex Somebody's Been Sleeping The City 10Cc 1Barenaked Ladies Dreadlock Holiday Be My Yoko Ono I'm Not In Love Brian Wilson (2000 Version) We Do For Love Call And Answer 11) Enid OS Get In Line (Duet Version) 112 Get In Line (Solo Version) Come See Me It's All Been Done Cupid Jane Dance With Me Never Is Enough It's Over Now Old Apartment, The Only You One Week Peaches & Cream Shoe Box Peaches And Cream Straw Hat U Already Know What A Good Boy Song List Generator® Printed 11/21/2017 Page 1 of 486 Licensed to Greg Reil Reil Entertainment Songs by Artist Karaoke by Artist Title Title 1Barenaked Ladies 20 Fingers When I Fall Short Dick Man 1Beatles, The 2AM Club Come Together Not Your Boyfriend Day Tripper 2Pac Good Day Sunshine California Love (Original Version) Help! 3 Degrees I Saw Her Standing There When Will I See You Again Love Me Do Woman In Love Nowhere Man 3 Dog Night P.S. -

The Spirit of the Living Creatures Was in the Wheels -- Ezekiel 1:21

The spirit of the living creatures was in the wheels -- Ezekiel 1:21 "Wheels Within Wheels deepens the musical experience created at Congregation Bet Haverim by lifting the veil between the ordinary and the sacred, so that our earthy expression of musical holiness connects with the celestial resonance of the universal. Like Ezekiel’s mystical vision, the spirals of harmonies, voices and instruments evoke contemplation, awe and celebration. At times Wheels Within Wheels will transport you to an intimate experience with your innermost self, and at other times it will convey a profound connection with the world around you." -- Rabbi Joshua Lesser THE MUSICIANS OF CONGREGATION BET HAVERIM Chorus Soprano: Nefesh Chaya, Sara Dardik, Julie Fishman, Nancy Gerber, Joy Goodman, Ellie McGraw, Theresa Prestwood, Rina Rosenberg, Faith Russler, Sandi Schein Alto: Jesse Harris Bathrick, Elke Davidson, Gayanne Geurin, Kim Goldsmith, Rebecca Green, Carrie Hausman, Alix Laing, Rebecca Leary Safon, AnnaLaura Scheer, Valerie Singer, Linda Weiskoff, Valerie Wolpe, McKenzie Wren Tenor: Ned Bridges, Brad Davidorf, Faye Dresner, Henry Farber, Alan Hymowitz, Lynne Norton Bass: Dan Arnold, Gregg Bedol, David Borthwick, Gary Falcon, Bill Laing, Bill Witherspoon, Howard Winer Band Will Robertson, guitar, keyboards; Natalie Stahl, clarinet, saxophone; Sarah Zaslaw, violin, viola; Reuben Haller, mandolin; Jordan Dayan, bass, electric bass; Mike Zimmerman, drum kit, percussion; Henry Farber and Gayanne Geurin, percussion; with Matthew Kaminski, accordion Strings Sarah Zaslaw and Benjamin Reiss, violin; David Borthwick, viola; Ruth Einstein, cello; Will Robertson, double bass Children’s Choir and CBH Community School Chorus director: Will Robertson Music director: Gayanne Geurin Thank You It takes a shul to raise a recording. -

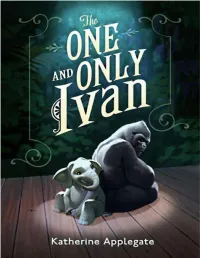

The ONE and ONLY Ivan

KATHERINE APPLEGATE The ONE AND ONLY Ivan illustrations by Patricia Castelao Dedication for Julia Epigraph It is never too late to be what you might have been. —George Eliot Glossary chest beat: repeated slapping of the chest with one or both hands in order to generate a loud sound (sometimes used by gorillas as a threat display to intimidate an opponent) domain: territory the Grunt: snorting, piglike noise made by gorilla parents to express annoyance me-ball: dried excrement thrown at observers 9,855 days (example): While gorillas in the wild typically gauge the passing of time based on seasons or food availability, Ivan has adopted a tally of days. (9,855 days is equal to twenty-seven years.) Not-Tag: stuffed toy gorilla silverback (also, less frequently, grayboss): an adult male over twelve years old with an area of silver hair on his back. The silverback is a figure of authority, responsible for protecting his family. slimy chimp (slang; offensive): a human (refers to sweat on hairless skin) vining: casual play (a reference to vine swinging) Contents Cover Title Page Dedication Epigraph Glossary hello names patience how I look the exit 8 big top mall and video arcade the littlest big top on earth gone artists shapes in clouds imagination the loneliest gorilla in the world tv the nature show stella stella’s trunk a plan bob wild picasso three visitors my visitors return sorry julia drawing bob bob and julia mack not sleepy the beetle change guessing jambo lucky arrival stella helps old news tricks introductions stella and ruby home -

WHAT USE IS MUSIC in an OCEAN of SOUND? Towards an Object-Orientated Arts Practice

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Oxford Brookes University: RADAR WHAT USE IS MUSIC IN AN OCEAN OF SOUND? Towards an object-orientated arts practice AUSTIN SHERLAW-JOHNSON OXFORD BROOKES UNIVERSITY Submitted for PhD DECEMBER 2016 Contents Declaration 5 Abstract 7 Preface 9 1 Running South in as Straight a Line as Possible 12 2.1 Running is Better than Walking 18 2.2 What You See Is What You Get 22 3 Filling (and Emptying) Musical Spaces 28 4.1 On the Superficial Reading of Art Objects 36 4.2 Exhibiting Boxes 40 5 Making Sounds Happen is More Important than Careful Listening 48 6.1 Little or No Input 59 6.2 What Use is Art if it is No Different from Life? 63 7 A Short Ride in a Fast Machine 72 Conclusion 79 Chronological List of Selected Works 82 Bibliography 84 Picture Credits 91 Declaration I declare that the work contained in this thesis has not been submitted for any other award and that it is all my own work. Name: Austin Sherlaw-Johnson Signature: Date: 23/01/18 Abstract What Use is Music in an Ocean of Sound? is a reflective statement upon a body of artistic work created over approximately five years. This work, which I will refer to as "object-orientated", was specifically carried out to find out how I might fill artistic spaces with art objects that do not rely upon expanded notions of art or music nor upon explanations as to their meaning undertaken after the fact of the moment of encounter with them. -

Songs by Title

Karaoke Song Book Songs by Title Title Artist Title Artist #1 Nelly 18 And Life Skid Row #1 Crush Garbage 18 'til I Die Adams, Bryan #Dream Lennon, John 18 Yellow Roses Darin, Bobby (doo Wop) That Thing Parody 19 2000 Gorillaz (I Hate) Everything About You Three Days Grace 19 2000 Gorrilaz (I Would Do) Anything For Love Meatloaf 19 Somethin' Mark Wills (If You're Not In It For Love) I'm Outta Here Twain, Shania 19 Somethin' Wills, Mark (I'm Not Your) Steppin' Stone Monkees, The 19 SOMETHING WILLS,MARK (Now & Then) There's A Fool Such As I Presley, Elvis 192000 Gorillaz (Our Love) Don't Throw It All Away Andy Gibb 1969 Stegall, Keith (Sitting On The) Dock Of The Bay Redding, Otis 1979 Smashing Pumpkins (Theme From) The Monkees Monkees, The 1982 Randy Travis (you Drive Me) Crazy Britney Spears 1982 Travis, Randy (Your Love Has Lifted Me) Higher And Higher Coolidge, Rita 1985 BOWLING FOR SOUP 03 Bonnie & Clyde Jay Z & Beyonce 1985 Bowling For Soup 03 Bonnie & Clyde Jay Z & Beyonce Knowles 1985 BOWLING FOR SOUP '03 Bonnie & Clyde Jay Z & Beyonce Knowles 1985 Bowling For Soup 03 Bonnie And Clyde Jay Z & Beyonce 1999 Prince 1 2 3 Estefan, Gloria 1999 Prince & Revolution 1 Thing Amerie 1999 Wilkinsons, The 1, 2, 3, 4, Sumpin' New Coolio 19Th Nervous Breakdown Rolling Stones, The 1,2 STEP CIARA & M. ELLIOTT 2 Become 1 Jewel 10 Days Late Third Eye Blind 2 Become 1 Spice Girls 10 Min Sorry We've Stopped Taking Requests 2 Become 1 Spice Girls, The 10 Min The Karaoke Show Is Over 2 Become One SPICE GIRLS 10 Min Welcome To Karaoke Show 2 Faced Louise 10 Out Of 10 Louchie Lou 2 Find U Jewel 10 Rounds With Jose Cuervo Byrd, Tracy 2 For The Show Trooper 10 Seconds Down Sugar Ray 2 Legit 2 Quit Hammer, M.C. -

Lolita" and Robert Frank's "The Americans"

W&M ScholarWorks Dissertations, Theses, and Masters Projects Theses, Dissertations, & Master Projects 1997 Going Nowhere Fast: The Car, the Highway and American Identity in Vladimir Nabokov's "Lolita" and Robert Frank's "The Americans" Scott Patrick Moyers College of William & Mary - Arts & Sciences Follow this and additional works at: https://scholarworks.wm.edu/etd Part of the American Literature Commons, and the History of Art, Architecture, and Archaeology Commons Recommended Citation Moyers, Scott Patrick, "Going Nowhere Fast: The Car, the Highway and American Identity in Vladimir Nabokov's "Lolita" and Robert Frank's "The Americans"" (1997). Dissertations, Theses, and Masters Projects. Paper 1539626114. https://dx.doi.org/doi:10.21220/s2-a0k8-dq26 This Thesis is brought to you for free and open access by the Theses, Dissertations, & Master Projects at W&M ScholarWorks. It has been accepted for inclusion in Dissertations, Theses, and Masters Projects by an authorized administrator of W&M ScholarWorks. For more information, please contact [email protected]. Going Nowhere Fast The Car, the Highway and American Identity in Vladimir Nabokov’s Lolita and Robert Frank’s The Americans A Thesis Presented to The Faculty of the Department of English The College of William and Mary in Virginia In Partial Fulfillment Of the Requirements for the Degree of Master of Arts by Scott Moyers 1997 APPROVAL SHEET This thesis is submitted in partial fulfillment of the requirements for the degree of Master of Arts Author Approved, July 1997 Colleen Kennedy -

The Marvel Universe: Origin Stories, a Novel on His Website, the Author Places It in the Public Domain

THE MARVEL UNIVERSE origin stories a NOVEL by BRUCE WAGNER Press Send Press 1 By releasing The Marvel Universe: Origin Stories, A Novel on his website, the author places it in the public domain. All or part of the work may be excerpted without the author’s permission. The same applies to any iteration or adaption of the novel in all media. It is the author’s wish that the original text remains unaltered. In any event, The Marvel Universe: Origin Stories, A Novel will live in its intended, unexpurgated form at brucewagner.la – those seeking veracity can find it there. 2 for Jamie Rose 3 Nothing exists; even if something does exist, nothing can be known about it; and even if something can be known about it, knowledge of it can't be communicated to others. —Gorgias 4 And you, you ridiculous people, you expect me to help you. —Denis Johnson 5 Book One The New Mutants be careless what you wish for 6 “Now must we sing and sing the best we can, But first you must be told our character: Convicted cowards all, by kindred slain “Or driven from home and left to die in fear.” They sang, but had nor human tunes nor words, Though all was done in common as before; They had changed their throats and had the throats of birds. —WB Yeats 7 some years ago 8 Metamorphosis 9 A L I N E L L Oh, Diary! My Insta followers jumped 23,000 the morning I posted an Avedon-inspired black-and-white selfie/mugshot with the caption: Okay, lovebugs, here’s the thing—I have ALS, but it doesn’t have me (not just yet). -

Moloch's Children: Monstrous Techno-Capitalism in North American Popular Fiction

Western University Scholarship@Western Electronic Thesis and Dissertation Repository 10-17-2019 2:00 PM Moloch's Children: Monstrous Techno-Capitalism in North American Popular Fiction Alexandre Desbiens-Brassard The University of Western Ontario Supervisor Randall, Marilyn The University of Western Ontario Graduate Program in Comparative Literature A thesis submitted in partial fulfillment of the requirements for the degree in Doctor of Philosophy © Alexandre Desbiens-Brassard 2019 Follow this and additional works at: https://ir.lib.uwo.ca/etd Part of the Comparative Literature Commons Recommended Citation Desbiens-Brassard, Alexandre, "Moloch's Children: Monstrous Techno-Capitalism in North American Popular Fiction" (2019). Electronic Thesis and Dissertation Repository. 6581. https://ir.lib.uwo.ca/etd/6581 This Dissertation/Thesis is brought to you for free and open access by Scholarship@Western. It has been accepted for inclusion in Electronic Thesis and Dissertation Repository by an authorized administrator of Scholarship@Western. For more information, please contact [email protected]. Abstract Deriving from the Latin monere (to warn), monsters are at their very core warnings against the horrors that lurk in the shadows of our present and the mists of our future – in this case, the horrors of techno-capitalism (i.e., the conjunction of scientific modes of research and capitalist modes of production). This thesis reveals the ideological mechanisms that animate “techno-capitalist” monster narratives through close readings of 7 novels and 3 films from Canada and the United States in both English and French and released between 1979 and 2016. All texts are linked by shared themes, narrative tropes, and a North American origin. -

Viral Spiral Also by David Bollier

VIRAL SPIRAL ALSO BY DAVID BOLLIER Brand Name Bullies Silent Theft Aiming Higher Sophisticated Sabotage (with co-authors Thomas O. McGarity and Sidney Shapiro) The Great Hartford Circus Fire (with co-author Henry S. Cohn) Freedom from Harm (with co-author Joan Claybrook) VIRAL SPIRAL How the Commoners Built a Digital Republic of Their Own David Bollier To Norman Lear, dear friend and intrepid explorer of the frontiers of democratic practice © 2008 by David Bollier All rights reserved. No part of this book may be reproduced, in any form, without written permission from the publisher. The author has made an online version of the book available under a Creative Commons Attribution-NonCommercial license. It can be accessed at http://www.viralspiral.cc and http://www.onthecommons.org. Requests for permission to reproduce selections from this book should be mailed to: Permissions Department, The New Press, 38 Greene Street, New York, NY 10013. Published in the United States by The New Press, New York, 2008 Distributed by W. W. Norton & Company, Inc., New York ISBN 978-1-59558-396-3 (hc.) CIP data available The New Press was established in 1990 as a not-for-profit alternative to the large, commercial publishing houses currently dominating the book publishing industry. The New Press operates in the public interest rather than for private gain, and is committed to publishing, in innovative ways, works of educational, cultural, and community value that are often deemed insufficiently profitable. www.thenewpress.com A Caravan book. For more information, visit www.caravanbooks.org. Composition by dix! This book was set in Bembo Printed in the United States of America 10 9 8 7 6 5 4 3 2 1 CONTENTS Acknowledgments vii Introduction 1 Part I: Harbingers of the Sharing Economy 21 1. -

BILLBOARD COUNTRY UPDATE [email protected]

Country Update BILLBOARD.COM/NEWSLETTERS SEPTEMBER 23, 2019 | PAGE 1 OF 23 INSIDE BILLBOARD COUNTRY UPDATE [email protected] Bentley “Living” It Up On Chart Cody Johnson, “God’s Country” And The >page 4 Evolution Of The Classic Country Song Chuck Dauphin Remembered When the Nashville Songwriters Association International his second Warner Music Nashville album, Durango — owned >page 10 (NSAI) gave out its annual awards on Sept. 17, songwriter of by Howie Edelman — decided that throwing a party for some the year Josh Osborne (“Hotel Key,” “Kiss Somebody”) and of the old guard might familiarize them with Johnson’s music the trio that penned song of the year “Break Up in the End” — and inspire them to dig into their catalogs for the gems that Chase McGill, Jessie Jo Dillon and Jon Nite — claimed their never quite got cut. Brantley Gilbert’s trophies on the stage of the historic Ryman Auditorium. Durango A&R executive Scott Gunter specifically asked Tootsie’s Role But when Durango Management held a Sept. 18 event to for songs that were written before 2000, and by the next day, >page 12 find songs for untapped music Cody Johnson, and thankful those current notes were hitmakers were already rolling in Luke Bryan Switches not on the invite from the likes of It Up list. Instead, the Allen Shamblin >page 12 aptly named ( “ T h e H o u s e back-alley That Built Me”), h a n g o u t O l d Steve Bogard Makin’ Tracks: Glory filled up (“Carried Away”) Chris Young’s with writers such and Kerry Kurt as Mike Reid Phillips ( “ I “Drowning” STRAIT JOHNSON OSBORNE >page 17 (“Stranger in Don’t Need Your My House”), Jim Rockin’ Chair”), McBride (“Chattahoochee”), Michael P. -

View / Open Final Thesis-Zatarain.Pdf

SEEING CHANGE: Techniques of Transition in the Three-Dimensional Space of Recorded Popular Song by MAXWELL CHRISTIAN BORTS ZATARAIN A THESIS Presented to the School of Music and Dance and the Robert D. Clark Honors College in partial fulfillment of the requirements for the degree of Bachelor of Music November 2015 An Abstract of the Thesis of Maxwell Christian Borts Zatarain for the degree of Bachelor of Arts in the Department of Music to be taken December, 2015 Title: SEEING CHANGE: Techniques of Transition in the Arrangement of Recorded Popular Song Loren Kajikawa This thesis presents a study of the recorded form of popular music through a visual lens. It seeks to show how we conceive of music visually through spatio-visual language such as high-pitch and low-pitch, and how these spatio-visual conceptual metaphors point towards the recorded form as a spatio-visual space. Second, it seeks to define the parameters of this three-dimensional spatio-visual space through the three axes of height, width, and depth. Third, it seeks to identify and define techniques of transition used by producers and musicians seeking to navigate the moment to moment transitions that give music its vitality. 11 Copyright Page If you are assigning a different than standard copyright, such as a Creative Commons license, include that information here. For more info, see pages 15-16 of the Style Manual, provided by the Graduate School here: http://gradschool.uoregon.edu/etd (disregard the information about Proquest/UMI). If you plan to go with standard copyright, delete this page and the associated page break. -

Innocentney, Relationshipney, Uncontentney & Unfitney

REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY REPORTNEY 1/4 2/4 3/4 4/4 1/4 2/4 3/4 4/4 1/4 2/4 3/4 4/4 Innocentney, 1/4 2/4 3/4 4/4 1/4 Relationshipney, 2/4 3/4 4/4 1/4 Uncontentney 2/4 3/4 4/4 1/4 & Unfitney 2/4 3/4 4/4 1/4 2/4 3/4 4/4 1/4 2/4 3/4 4/4 CAN YOU HANDLE MY TRUTH? CAN YOU HANDLE MY TRUTH? CAN YOU HANDLE MY TRUTH? CAN YOU HANDLE MY TRUTH? CAN YOU HANDLE MY TRUTH? CAN YOU HANDLE MY TRUTH? REPORTNEY CAN YOU HANDLE MY TRUTH? This is a graduation project report from the visual communication department of the Iceland Academy of Arts. By Gréta Þorkelsdóttir. 2016. REPORTNEY 1 Bobby Brown & Gene Griffin & Teddy Riley. My Prerogative. Britney Spears. © 2004 by Jive/Zomba. MP3. you handle mine? every question People cantake take awayyour they cannever from truth. Butthe thing away you, but is: Can 1 CAN YOU HANDLE MY TRUTH? Yeah yeah yeah yeah yeah. Yeah yeah yeah yeah yeah yeah. I think I did it again. again. I think it I did yeah. yeah yeah yeah yeah Yeah yeah. yeah yeah yeah Yeah 6 1 I REPORTNEY like a crush, seem might It baby. Oh friends. than just more we’re believe you made 1 INTRODUCTION 7 INTRODUCTION CAN YOU HANDLE MY TRUTH? INTRODUCTION - When I was 6-years-old I heard … Baby One More Time for the first time.