Packet Loss Correlation in the Mbone Multicast Network Maya Yajnik University of Massachusetts - Amherst

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Remote Lecturing System Using Multicast Addressing

MTEACH: A REMOTE LECTURING SYSTEM USING MULTICAST ADDRESSING A Thesis Submitted to the Faculty of Purdue University by Vladimir Kljaji In Partial Fulfillment of the Requirements for the Degree of Master of Science in Electrical Engineering August 1997 - ii - For my family, friends and May. Thank you for your support, help and your love. - iii - ACKNOWLEDGMENTS I would like to express my gratitude to Professor Edward Delp for being my major advisor, for his guidance throughout the course of this work and especially for his time. This work could not have been done without his support. I would also like to thank Pro- fessor Edward Coyle and Professor Michael Zoltowski for their time and for being on my advisory committee. - iv - TABLE OF CONTENTS Page LIST OF TABLES .......................................................................................................viii LIST OF FIGURES........................................................................................................ix LIST OF ABBREVIATIONS.........................................................................................xi ABSTRACT.................................................................................................................xiii 1. INTRODUCTION...................................................................................................1 1.1 Review of Teleconferencing and Remote Lecturing Systems ...............................1 1.2 Motivation ...........................................................................................................2 -

SIP Architecture

383_NTRL_VoIP_08.qxd 7/31/06 4:34 PM Page 345 Chapter 8 SIP Architecture Solutions in this chapter: ■ Understanding SIP ■ SIP Functions and Features ■ SIP Architecture ■ Instant Messaging and SIMPLE Summary Solutions Fast Track Frequently Asked Questions 345 383_NTRL_VoIP_08.qxd 7/31/06 4:34 PM Page 346 346 Chapter 8 • SIP Architecture Introduction As the Internet became more popular in the 1990s, network programs that allowed communication with other Internet users also became more common. Over the years, a need was seen for a standard protocol that could allow participants in a chat, videoconference, interactive gaming, or other media to initiate user sessions with one another. In other words, a standard set of rules and services was needed that defined how computers would connect to one another so that they could share media and communicate.The Session Initiation Protocol (SIP) was developed to set up, maintain, and tear down these sessions between computers. By working in conjunction with a variety of other protocols and special- ized servers, SIP provides a number of important functions that are necessary in allowing communications between participants. SIP provides methods of sharing the location and availability of users and explains the capabilities of the software or device being used. SIP then makes it possible to set up and manage the session between the parties. Without these tasks being performed, communication over a large network like the Internet would be impossible. It would be like a message in a bottle being thrown in the ocean; you would have no way of knowing how to reach someone directly or whether the person even could receive the message. -

Replication Strategies for Streaming Media

“replication-strategies” — 2007/4/24 — 10:56 — page 1 — #1 Research Report No. 2007:03 Replication Strategies for Streaming Media David Erman Department of Telecommunication Systems, School of Engineering, Blekinge Institute of Technology, S–371 79 Karlskrona, Sweden “replication-strategies” — 2007/4/24 — 10:56 — page 2 — #2 °c 2007 by David Erman. All rights reserved. Blekinge Institute of Technology Research Report No. 2007:03 ISSN 1103-1581 Published 2007. Printed by Kaserntryckeriet AB. Karlskrona 2007, Sweden. This publication was typeset using LATEX. “replication-strategies” — 2007/4/24 — 10:56 — page i — #3 Abstract Large-scale, real-time multimedia distribution over the Internet has been the subject of research for a substantial amount of time. A large number of mechanisms, policies, methods and schemes have been proposed for media coding, scheduling and distribution. Internet Protocol (IP) multicast was expected to be the primary transport mechanism for this, though it was never deployed to the expected extent. Recent developments in overlay networks has reactualized the research on multicast, with the consequence that many of the previous mechanisms and schemes are being re-evaluated. This report provides a brief overview of several important techniques for media broad- casting and stream merging, as well as a discussion of traditional IP multicast and overlay multicast. Additionally, we present a proposal for a new distribution system, based on the broadcast and stream merging algorithms in the BitTorrent distribution and repli- cation system. “replication-strategies” — 2007/4/24 — 10:56 — page ii — #4 ii “replication-strategies” — 2007/4/24 — 10:56 — page iii — #5 CONTENTS Contents 1 Introduction 1 1.1 Motivation . -

The Dos and Don'ts of Videoconferencing in Higher

The Dos and Don’ts of Videoconferencing in Higher Education HUSAT Research Institute Loughborough University of Technology Lindsey Butters Anne Clarke Tim Hewson Sue Pomfrett Contents Acknowledgements .................................................................................................................1 Introduction .............................................................................................................................3 How to use this report ..............................................................................................................3 Chapter 1 Videoconferencing in Higher Education — How to get it right ...................................5 Structure of this chapter ...............................................................................................5 Part 1 — Subject sections ............................................................................................6 Uses of videoconferencing, videoconferencing systems, the environment, funding, management Part 2 — Where are you now? ......................................................................................17 Guidance to individual users or service providers Chapter 2 Videoconferencing Services — What is Available .....................................................30 Structure of this chapter ...............................................................................................30 Overview of currently available services .......................................................................30 Broadcasting -

Mbone Provides Audio and Video Across the Internet

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Calhoun, Institutional Archive of the Naval Postgraduate School Calhoun: The NPS Institutional Archive Faculty and Researcher Publications Faculty and Researcher Publications 1994-04 MBone Provides Audio and Video Across the Internet Macedonia, Michael R. http://hdl.handle.net/10945/40136 MBone Provides Audio and Video Across the Internet Michael R. Macedonia and Donald P. Brutzman Naval Postgraduate School he joy of science is in the discovery. In March 1993, our group at the Naval Postgraduate School heard that the Jason Project, an underwater explo ration and educational program supported by Woods Hole Oceanographic IIInstitution in Massachusetts, was showing live video over the Internet from an under water robot in waters off Baja, Mexico. We worked furiously to figure out how to re ceive that video signal, laboring diligently to gather the right equipment, contact the appropriate network managers, and obtain hardware permissions from local bu reaucrats. After several days of effort, we learned that a satellite antenna uplink ca ble on the Jason support ship had become flooded with seawater a few hours before we became operational. Despite this disappointment, we remained enthusiastic because, during our ef forts, we discovered how to use the Internet's most unique network, MBone. Short for Multicast Backbone, 1 MBone is a virtual network that has been in existence since early 1992. It was named by Steve Casner1 of the University of Southern California Information Sciences Institute and originated from an effort to multicast audio and video from meetings of the Internet Engineering Task Force. -

Multicast Redux: a First Look at Enterprise Multicast Traffic

Multicast Redux: A First Look at Enterprise Multicast Traffic Elliott Karpilovsky Lee Breslau Princeton University AT&T Labs – Research [email protected] [email protected] Alexandre Gerber Subhabrata Sen AT&T Labs – Research AT&T Labs – Research [email protected] [email protected] ABSTRACT 1. INTRODUCTION IP multicast, after spending much of the last 20 years as IP multicast [1, 2], which provides an e±cient mechanism the subject of research papers, protocol design e®orts and for the delivery of the same data from a single source to limited experimental usage, is ¯nally seeing signi¯cant de- multiple receivers, was ¯rst proposed more than two decades ployment in production networks. The e±ciency a®orded ago. It was deployed experimentally on the MBone [3] in the by one-to-many network layer distribution is well-suited to early 1990s and was the subject of a signi¯cant amount of re- such emerging applications as IPTV, ¯le distribution, con- search into scalable and robust intra- and inter-domain rout- ferencing, and the dissemination of ¯nancial trading infor- ing protocols [4, 5, 6]. The MBone, an overlay used primarily mation. However, it is important to understand the behavior to support audio and video conferencing between multicast- of these applications in order to see if network protocols are capable networks around the Internet, grew rapidly. How- appropriately supporting them. In this paper we undertake ever, the initial enthusiasm for multicast did not translate a study of enterprise multicast tra±c as observed from the into widespread success. The MBone eventually declined, vantage point of a large VPN service provider. -

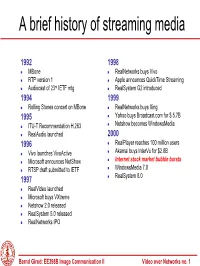

A Brief History of Streaming Media

A brief history of streaming media 1992 1998 MBone RealNetworks buys Vivo RTP version 1 Apple announces QuickTime Streaming rd Audiocast of 23 IETF mtg RealSystem G2 introduced 1994 1999 Rolling Stones concert on MBone RealNetworks buys Xing 1995 Yahoo buys Broadcast.com for $ 5.7B Netshow becomes WindowsMedia ITU-T Recommendation H.263 RealAudio launched 2000 1996 RealPlayer reaches 100 million users Akamai buys InterVu for $2.8B Vivo launches VivoActive Internet stock market bubble bursts Microsoft announces NetShow WindowsMedia 7.0 RTSP draft submitted to IETF 1997 RealSystem 8.0 RealVideo launched Microsoft buys VXtreme Netshow 2.0 released RealSystem 5.0 released RealNetworks IPO Bernd Girod: EE398B Image Communication II Video over Networks no. 1 Desktop Computer CPU Power CPU Power SPECint92 2000 CIF MPEG 1 decoder 200 QCIF H.320/H.324 codec Intel Pentium 20 Motorola PowerPC MIPS R4400 DEC Alpha 2 Intel 486DX2/66 1980 1990 2000 Year [Girod 94] Bernd Girod: EE398B Image Communication II Video over Networks no. 2 Internet Media Streaming Streaming client Media Server DSL Internet 56K modem wireless Best-effort network ChallengesChallenges • low bit-rate ••compressioncompression • variable throughput ••raterate scalability scalability • variable loss ••errorerror resiliency resiliency • variable delay ••lowlow latency latency Bernd Girod: EE398B Image Communication II Video over Networks no. 3 On-demand vs. live streaming Client 1000s simultaneous Media Server streams DSL Internet 56K modem wireless „Producer“ Bernd Girod: EE398B Image Communication II Video over Networks no. 4 Live streaming to large audiences „Producer“ Content-delivery network Relay servers . “Pseudo-multicasting” by stream replication Bernd Girod: EE398B Image Communication II Video over Networks no. -

Internetworking : Multicast and ATM Network Prerequisites for Distance Learning

Calhoun: The NPS Institutional Archive Theses and Dissertations Thesis Collection 1996-09 Internetworking : multicast and ATM network prerequisites for distance learning Tamer, Murat Tevfink Monterey, California. Naval Postgraduate School http://hdl.handle.net/10945/32280 NAVAL POSTGRADUATE SCHOOL Monterey, California THESIS INTERNETWORKING: MULTICAST AND ATM NETWORK PREREQUISITES FOR DISTANCE LEARNING by Murat Tevfik Tamer September 1996 Thesis Advisors: Don Brutzman Michael Zyda Approved for public release; distribution is unlimited. 19970220 056 .------------------------------------------------- Form Approved REPORT DOCUMENTATION PAGE OMB No. 0704-0188 Public reporting burden for this collection of information is estimated to average 1 hour per response, including the time reviewing instructions, searching existing data sources gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden to Washington Headquarters Services, Directorate for Information Operations and Reports, 1215 Jefferson Davis Highway, Su~e 1204, Arfington, VA 22202-4302, and to the Office of Management and Budget, Paperwork R_eduction Project (0704-0188), Washington, DC 20503. 1. AGENCY USE ONLY CLeave Blank) 12. REPORT DATE 3. REPORT TYPE AND DATES COVERED September 1996 Master's Thesis 4. TITLE AND SUBTITLE 5. FUNDING NUMBERS Internetworking: Multicast and ATM Network Prerequisites for Distance Learning 6.AUTHOR Tamer, Murat Tevfik 7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES) 8. PERFORMING ORGANIZATION Naval Postgraduate School REPORT NUMBER Monterey, CA 93943-5000 9. SPONSORING/ MONITORING AGENCY NAME(S) AND ADDRESS(ES) 10. SPONSORING/ MONITORING AGENCY REPORT NUMBER 11. SUPPLEMENTARY NOTES The views expressed in this thesis are those of the author and do not reflect the official policy or position of the Department of Defense or the United States Government. -

Scalable Multimedia Communication with Internet Multicast, Light-Weight Sessions, and the Mbone

Scalable Multimedia Communication with Internet Multicast, Light-weight Sessions, and the MBone Steven McCanne University of California, Berkeley Abstract multicast requires new routing protocols and forwarding algorithms but not all routers can be upgraded simulta- In this survey article we describe the roots of IP Multi- neously, requisite infrastructure has been incrementally cast in the Internet, the evolution of the Internet Multi- put in place as a virtual multicast network embedded cast Backbone or “MBone,” and the technologies that within the traditional unicast-only Internet called the In- have risen around the MBone to support large-scale ternet Multicast Backbone, or MBone. Internet-based multimedia conferencing. We develop In addition to offering an efficient multipoint deliv- the technical rationale for the design decisions that un- ery mechanism, IP Multicast has a number of other at- derly the MBone tools, describe the evolution of this tractive properties that make it especially well suited for work from early prototypes into Internet standards, and large-scale applications of multimedia communication. outline the open challenges that remain and must be First, IP Multicast is both efficient (in terms of network overcome to realize a ubiquitous multicast infrastruc- utilization) and scalable (in terms of control overhead). ture. There are no centralized points of failure and protocol We 1 and others in the MBone research community control messages are distributed across receivers. Group have implemented our protocols and methods in “real” maintenance messages flow from the leaves toward the applications and have deployed a fully operational sys- source and are coalesced within the network to facilitate tem on a very large scale over the MBone. -

Overview of Overlay Multicast Protocols Dennis M

Overview of Overlay Multicast Protocols Dennis M. Moen C3I Center George Mason University [email protected] Introduction Multicasting remains a critical element in the deployment of scalable networked virtual simulation environments. Multicast provides an efficient mechanism for a source of information to reach many recipients. Traditional multicast protocols such as those defined by rfc 1075 [1] and rfc 2362 [2], provide mechanisms to support single source to a large number of destinations typically associated with streaming media or distribution of large volume data information distribution. In real-time collaborative and virtual simulation environments, the requirement is for many senders to send to the same destination group(s) simultaneously. This is commonly referred to as many-to-many multicast [3]. Even though IP multicasting was introduced more than 20 years ago, it still is not widely available as an open Internet service even for one-to-many multicast [4]. The most widely used multicast capability is the MBone [5]. The MBone provides a circuit overlay inter-network that connects IP multicast capable islands by using unicast tunnel connections and is commonly used in university and research environments. Only recently have public carriers started to introduce multicast services, but then only as a private network offering where all interested parties obtain service from the same carrier [6]. These new services are based on traditional multicast services providing one-to-many multicast. Because open multicast services generally have not been available, there has been a shift to the idea of an end-host service to provide similar capabilities. By organizing end hosts into an overlay to act as relay agents, multicast can be achieved through message forwarding among the members of the overlay using unicast across the underlying network or Internet. -

Introduction to IP Multicast Routing

Introduction to IP Multicast Routing by Chuck Semeria and Tom Maufer Abstract The first part of this paper describes the benefits of multicasting, the Multicast Backbone (MBONE), Class D addressing, and the operation of the Internet Group Management Protocol (IGMP). The second section explores a number of different algorithms that may potentially be employed by multicast routing protocols: - Flooding - Spanning Trees - Reverse Path Broadcasting (RPB) - Truncated Reverse Path Broadcasting (TRPB) - Reverse Path Multicasting (RPM) - Core-Based Trees The third part contains the main body of the paper. It describes how the previous algorithms are implemented in multicast routing protocols available today. - Distance Vector Multicast Routing Protocol (DVMRP) - Multicast OSPF (MOSPF) - Protocol-Independent Multicast (PIM) Introduction There are three fundamental types of IPv4 addresses: unicast, broadcast, and multicast. A unicast address is designed to transmit a packet to a single destination. A broadcast address is used to send a datagram to an entire subnetwork. A multicast address is designed to enable the delivery of datagrams to a set of hosts that have been configured as members of a multicast group in various scattered subnetworks. Multicasting is not connection oriented. A multicast datagram is delivered to destination group members with the same “best-effort” reliability as a standard unicast IP datagram. This means that a multicast datagram is not guaranteed to reach all members of the group, or arrive in the same order relative to the transmission of other packets. The only difference between a multicast IP packet and a unicast IP packet is the presence of a “group address” in the Destination Address field of the IP header. -

Internet Protocol Television (IPTV): the Killer Application for the Next-Generation Internet Yang Xiao

Rochester Institute of Technology RIT Scholar Works Articles 2007 Internet protocol television (IPTV): The Killer application for the next-generation internet Yang Xiao Xiaojiang Du Jingyuan Zhang Follow this and additional works at: http://scholarworks.rit.edu/article Recommended Citation IEEE Communications Magazine, November 2007 This Article is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Articles by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. XIAO LAYOUT 10/18/07 2:37 PM Page 126 TOPICS IN INTERNET TECHNOLOGY Internet Protocol Television (IPTV): The Killer Application for the Next-Generation Internet Yang Xiao, University of Alabama Xiaojiang Du, North Dakota State University Jingyuan Zhang, University of Alabama Fei Hu, Rochester Institute of Technology Sghaier Guizani, University of Quebec — Trois Rivières ABSTRACT able on the Internet and can be accessed freely with Internet-connected computers, iPods, and Internet Protocol Television (IPTV) will be cellular phones. The current quality of IPTV in the killer application for the next-generation the United States does not yet approach that of Internet and will provide exciting new revenue cable TV services, but the gap will shrink as opportunities for service providers. However, to bandwidth increases and video codecs improve deploy IPTV services with a full quality of ser- [2]. In 2005, there were about four million homes vice (QoS) guarantee, many underlying tech- in the world that already had IPTV. Minerva has nologies must be further studied. This article about 50 IPTV deployments that cover 150,000 serves as a survey of IPTV services and the users with VCR quality services [2].