Stereotyping Femininity in Disembodied Virtual Assistants Allison M

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


18734 Homework 3

18734 Homework 3 Released: October 7, 2016 Due: 12 noon Eastern, 9am Pacific, Oct 21, 2016 1 Legalease [20 points] In this question, you will convert a simple policy into the Legalease language. To review the Legalease language and its grammar, refer to the lecture slides from September 28. You may find it useful to go through a simple example provided in Section III.C in the paper Bootstrapping Privacy Compliance in Big Data Systems (http://www.andrew.cmu.edu/user/danupam/sen-guha-datta-oakland14.pdf). A credit company CreditX has the following information about its customers: • Name • Address • PhoneNumber • DateOfBirth • SSN All this information is organized under a common table: AccountInfo. Their privacy policy states the following: We will not share your SSN with any third party vendors, or use it for any form of advertising. Your phone number will not be used for any purpose other than notifying you about inconsistencies in your account. Your address will not be used, except by the Legal Team for audit purposes. Convert this statement into Legalease. You may use the attributes: DataType, UseForPurpose, AccessByRole. Relevant attribute-values for DataType are AccountInfo, Name, Address, PhoneNumber, DateOfBirth, and SSN. Useful attribute-values for UseForPurpose are ThirdPartySharing, Advertising, Notification and Audit. One attribute-value for AccessByRole is LegalTeam. 2 AdFisher [35=15+20 points] For this part of the homework, you will work with AdFisher. AdFisher is a tool written in Python to automate browser based experiments and run machine learning based statistical analyses on the collected data. 2.1 Installation AdFisher was developed around 2014 on MacOS. -

North Dakota Homeland Security Anti-Terrorism Summary

UNCLASSIFIED North Dakota Homeland Security Anti-Terrorism Summary The North Dakota Open Source Anti-Terrorism Summary is a product of the North Dakota State and Local Intelligence Center (NDSLIC). It provides open source news articles and information on terrorism, crime, and potential destructive or damaging acts of nature or unintentional acts. Articles are placed in the Anti-Terrorism Summary to provide situational awareness for local law enforcement, first responders, government officials, and private/public infrastructure owners. NDSLIC Disclaimer The Anti-Terrorism Summary is a non-commercial publication intended to educate and inform. Further reproduction or redistribution is subject to original copyright restrictions. NDSLIC provides no warranty of ownership of the copyright, or accuracy with respect to the original source material. Quick links: North Dakota; Regional; National; International; Banking and Finance Industry; Chemical and Hazardous Materials Sector; Commercial Facilities; Communications Sector; Critical Manufacturing; Defense Industrial Base Sector; Emergency Services; Energy; Food and Agriculture; Government Sector (including Schools and Universities); Information Technology and Telecommunications; National Monuments and Icons; Postal and Shipping; Public Health; Transportation; Water and Dams; North Dakota Homeland Security Contacts. North Dakota: Nothing Significant to Report. Regional (Minnesota): Hacker charged over siphoning off funds meant for software devs. An alleged hacker has been charged with breaking into the e-commerce systems of Digital River before redirecting more than $250,000 to an account under his control. The hacker, of Houston, Texas, 35, is charged with fraudulently obtaining more than $274K between December 2008 and October 2009 following an alleged hack against the network of SWReg Inc, a Digital River subsidiary. -

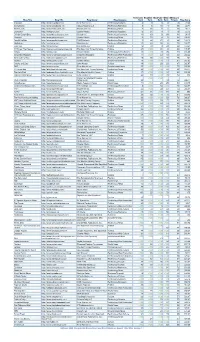

Blog Title Blog URL Blog Owner Blog Category Technorati Rank

| Blog Title | Blog URL | Blog Owner | Blog Category | Technorati Rank | Bloglines Rank | BlogPulse Rank | Wikio Rank | SEOmoz's Trifecta | Blog Score |
|---|---|---|---|---|---|---|---|---|---|
| Engadget | http://www.engadget.com | Time Warner Inc. | Technology/Gadgets | 4 | 3 | 6 | 2 | 78 | 19.23 |
| Boing Boing | http://www.boingboing.net | Happy Mutants LLC | Technology/Marketing | 5 | 6 | 15 | 4 | 89 | 33.71 |
| TechCrunch | http://www.techcrunch.com | TechCrunch Inc. | Technology/News | 2 | 27 | 2 | 1 | 76 | 42.11 |
| Lifehacker | http://lifehacker.com | Gawker Media | Technology/Gadgets | 6 | 21 | 9 | 7 | 78 | 55.13 |
| Official Google Blog | http://googleblog.blogspot.com | Google Inc. | Technology/Corporate | 14 | 10 | 3 | 38 | 94 | 69.15 |
| Gizmodo | http://www.gizmodo.com/ | Gawker Media | Technology/News | 3 | 79 | 4 | 3 | 65 | 136.92 |
| ReadWriteWeb | http://www.readwriteweb.com | RWW Network | Technology/Marketing | 9 | 56 | 21 | 5 | 64 | 142.19 |
| Mashable | http://mashable.com | Mashable Inc. | Technology/Marketing | 10 | 65 | 36 | 6 | 73 | 160.27 |
| Daily Kos | http://dailykos.com/ | Kos Media, LLC | Politics | 12 | 59 | 8 | 24 | 63 | 163.49 |
| NYTimes: The Caucus | http://thecaucus.blogs.nytimes.com | The New York Times Company | Politics | 27 | >100 | 31 | 8 | 93 | 179.57 |
| Kotaku | http://kotaku.com | Gawker Media | Technology/Video Games | 19 | >100 | 19 | 28 | 77 | 216.88 |
| Smashing Magazine | http://www.smashingmagazine.com | Smashing Magazine | Technology/Web Production | 11 | >100 | 40 | 18 | 60 | 283.33 |
| Seth Godin's Blog | http://sethgodin.typepad.com | Seth Godin | Technology/Marketing | 15 | 68 | >100 | 29 | 75 | 284 |
| Gawker | http://www.gawker.com/ | Gawker Media | Entertainment News | 16 | >100 | >100 | 15 | 81 | 287.65 |
| Crooks and Liars | http://www.crooksandliars.com | John Amato | Politics | 49 | >100 | 33 | 22 | 67 | 305.97 |

TMZ http://www.tmz.com Time Warner Inc. -

Modified Roomba Det

Robots: Modified Roomba Detects Stress, Runs Away When It Thinks Y... http://i.gizmodo.com/5170892/modified-roomba-detects-stress-runs-awa... ROBOTS Modified Roomba Detects Stress, Runs Away When It Thinks You Might Abuse It By Sean Fallon, 4:40 PM on Mon Mar 16 2009, 7,205 views The last thing you need when you get home is something making noise and hovering underfoot. This modified Roomba avoids users when it detects high levels of stress. Designed by researchers at the University of Calgary, this Roomba interacts with a commercial headband for gamers that detects muscle tension in the face. The more tension it detects, the farther away the Roomba will hover from the subject. The purpose of this rather simple device is to explore the potential of human and machine interaction on an emotional level. The researchers envision gadgets that approach you like a pet when they detect that you are lonely and in need of comfort. Conversely, the Roomba model could be taken a step further: imagine if it cowered under the bed when you came home drunk and angry. Then, fearing for its life, the Roomba calls 911 and gets you thrown in the slammer for appliance abuse. -

Ethical Guidelines 3.0 Association of Internet Researchers

Internet Research: Ethical Guidelines 3.0 Association of Internet Researchers Unanimously approved by the AoIR membership October 6, 2019 This work is licensed under a Creative Commons Attribution-NonCommercial-Share Alike 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/4.0/. aline shakti franzke (University of Duisburg-Essen), Co-Chair, Anja Bechmann (Aarhus University), Co-Chair, Michael Zimmer (Marquette University), Co-Chair, Charles M. Ess (University of Oslo), Co-Chair and Editor The AoIR IRE 3.0 Ethics Working Group, including: David J. Brake, Ane Kathrine Gammelby, Nele Heise, Anne Hove Henriksen, Soraj Hongladarom, Anna Jobin, Katharina Kinder-Kurlanda, Sun Sun Lim, Elisabetta Locatelli, Annette Markham, Paul J. Reilly, Katrin Tiidenberg and Carsten Wilhelm. (A complete list of the EWG members is provided in Appendix 7.2. Contributions from AoIR members are acknowledged in footnotes. Additional acknowledgements follow 4. Concluding Comments.) Cite as: franzke, aline shakti, Bechmann, Anja, Zimmer, Michael, Ess, Charles and the Association of Internet Researchers (2020). Internet Research: Ethical Guidelines 3.0. https://aoir.org/reports/ethics3.pdf Contents: 0. Preview: Suggested Approaches for Diverse Readers; 1. Summary; 2. Background and Introduction -

The Relationship Between Local Content, Internet Development and Access Prices

THE RELATIONSHIP BETWEEN LOCAL CONTENT, INTERNET DEVELOPMENT AND ACCESS PRICES This research is the result of collaboration in 2011 between the Internet Society (ISOC), the Organisation for Economic Co-operation and Development (OECD) and the United Nations Educational, Scientific and Cultural Organization (UNESCO). The first findings of the research were presented at the sixth annual meeting of the Internet Governance Forum (IGF) that was held in Nairobi, Kenya on 27-30 September 2011. The views expressed in this presentation are those of the authors and do not necessarily reflect the opinions of ISOC, the OECD or UNESCO, or their respective membership. FOREWORD This report was prepared by a team from the OECD's Information Economy Unit of the Information, Communications and Consumer Policy Division within the Directorate for Science, Technology and Industry. The contributing authors were Chris Bruegge, Kayoko Ido, Taylor Reynolds, Cristina Serra-Vallejo, Piotr Stryszowski and Rudolf Van Der Berg. The case studies were drafted by Laura Recuero Virto of the OECD Development Centre with editing by Elizabeth Nash and Vanda Legrandgerard. The work benefitted from significant guidance and constructive comments from ISOC and UNESCO. The authors would particularly like to thank Dawit Bekele, Constance Bommelaer, Bill Graham and Michuki Mwangi from ISOC and Jānis Kārkliņš, Boyan Radoykov and Irmgarda Kasinskaite-Buddeberg from UNESCO for their work and guidance on the project. The report relies heavily on data for many of its conclusions and the authors would like to thank Alex Kozak, Betsy Masiello and Derek Slater from Google, Geoff Huston from APNIC, Telegeography (Primetrica, Inc) and Karine Perset from the OECD for data that was used in the report. -

Newsletter CLUSIT - 31 Dicembre 2010

CLUSIT Newsletter - 31 December 2010 Contents 1. RENEWAL OF THE CLUSIT BOARD 2. TRAINING IN SCHOOLS 3. UPDATE TO THE PCI-DSS BOOKLET 4. CLUSIT 2011 SEMINAR CALENDAR 5. NEWS FROM THE BLOG 6. NEWS AND REPORTS FROM MEMBERS 1. RENEWAL OF THE CLUSIT BOARD At the general assembly held on 17 December, the new Steering Committee and its President were elected. The newly elected members are listed below with their roles and areas of responsibility. • Gigi TAGLIAPIETRA - President • Paolo GIUDICE - Secretary General • Giorgio GIUDICE - Treasurer and Webmaster • Luca BECHELLI - Security and Compliance • Raoul CHIESA - Critical Infrastructure Security, CyberWar • Mauro CICOGNINI - Head of Clusit Seminars • Gabriele FAGGIOLI - Legal Office • Mariangela FAGNANI - Ethical matters and internal regulations • Massimiliano MANZETTI - Relations with the healthcare sector • Marco MISITANO - Convergence of physical and logical security • Mattia MONGA - Relations with CERTs and universities • Alessio PENNASILICO - Security Summit, Hacking Film Festival • Stefano QUINTARELLI - Relations with telecom operators and ISPs • Claudio TELMON - Head of the initiatives "Rischio IT e PMI", "Premio Tesi", "Adotta una scuola", "video pillole di sicurezza" • Alessandro Vallega - Coordination of Working Groups 2. TRAINING IN SCHOOLS As discussed at the last assembly, we are launching the Adotta una scuola ("Adopt a School") initiative, in which members raise awareness of ICT security issues in schools. Once the initiative is fully up and running, members will be able to tell us which schools, presumably in their own area, they are willing to "adopt".
Following this request, Clusit will contact the head teacher with a letter that proposes the initiative and "accredits" the member, who, if the head teacher agrees, will then give one or more awareness talks on ICT security at that school. -

The Impact of Joining a Brand's Social Network on Marketing Outcomes

Does "Liking" Lead to Loving? The Impact of Joining a Brand's Social Network on Marketing Outcomes The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters Citation John, Leslie K., Oliver Emrich, Sunil Gupta, and Michael I. Norton. "Does 'Liking' Lead to Loving? The Impact of Joining a Brand's Social Network on Marketing Outcomes." Journal of Marketing Research (JMR) 54, no. 1 (February 2017): 144–155. Published Version http://dx.doi.org/10.1509/jmr.14.0237 Citable link http://nrs.harvard.edu/urn-3:HUL.InstRepos:32062564 Terms of Use This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Open Access Policy Articles, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#OAP Does “Liking” Lead to Loving? The Impact of Joining a Brand’s Social Network on Marketing Outcomes Forthcoming, Journal of Marketing Research Leslie K. John, Assistant Professor of Business Administration, Harvard Business School email: [email protected] Oliver Emrich, Professor of Marketing, Johannes Gutenberg University Mainz email: [email protected] Sunil Gupta, Professor of Business Administration, Harvard Business School email: [email protected] Michael I. Norton, Professor of Business Administration, Harvard Business School email: [email protected] Acknowledgements: The authors are grateful for Evan Robinson’s ingenious programming skills and for Marina Burke’s help with data collection. The authors thank the review team for constructive feedback throughout the review process. ABSTRACT Does “liking” a brand on Facebook cause a person to view it more favorably? Or is “liking” simply a symptom of being fond of a brand? 
We disentangle these possibilities and find evidence for the latter: brand attitudes and purchasing are predicted by consumers’ preexisting fondness for brands, and are the same regardless of when and whether consumers “like” brands. -

Development of Intelligent Virtual Assistant for Software Testing Team

2019 IEEE 19th International Conference on Software Quality, Reliability and Security Companion (QRS-C) Development of Intelligent Virtual Assistant for Software Testing Team Iosif Itkin (Exactpro Systems, London, EC4N 7AE, UK, [email protected]), Andrey Novikov (Higher School of Economics, Moscow, 101000, Russia, [email protected]), Rostislav Yavorskiy (Surgut State University and Exactpro Systems, Surgut, 628403, Russia, [email protected]) Abstract: This is a vision paper on incorporating of embodied virtual agents into everyday operations of software testing team. An important property of intelligent virtual agents is their capability to acquire information from their environment as well as from available data bases and information services. Research challenges and issues tied up with development of intelligent virtual assistant for software testing team are discussed. Index Terms: software testing, virtual assistant, artificial intelligence [second-column fragment] systems to be tested scale up, so do tools and algorithms for the data analysis, which collect tons of information about the system behavior in different environments, contexts and configurations. Then, all the collected knowledge is simplified to be presented in a visual form of a short table or a standard graphical chart. Interface of the analytic services with a human is becoming a bottleneck, which limits the capacity of the whole process, see fig. 2. That bottleneck is another reason behind the demand for new types of interfaces. I. INTRODUCTION Recent advances in microelectronics and artificial intelligence are turning smart machines and software applications into first class employees. Quite soon work teams will routinely unite humans, robots and artificial agents to work collaboratively on complex tasks, which require multiplex communication, effective coordination of efforts, and mutual trust built on long term history of relationships. -

How Likes and Followers Affect Users Perception and Leadership

University of Southern Maine USM Digital Commons All Theses & Dissertations Student Scholarship Spring 2019 Facebook: How Likes and Followers Affect Users Perception and Leadership Troy Johnston MA University of Southern Maine Follow this and additional works at: https://digitalcommons.usm.maine.edu/etd Recommended Citation Johnston, Troy MA, "Facebook: How Likes and Followers Affect Users Perception and Leadership" (2019). All Theses & Dissertations. 339. https://digitalcommons.usm.maine.edu/etd/339 This Open Access Thesis is brought to you for free and open access by the Student Scholarship at USM Digital Commons. It has been accepted for inclusion in All Theses & Dissertations by an authorized administrator of USM Digital Commons. For more information, please contact [email protected]. Running head: FACEBOOK: PERCEPTION OF LEADERSHIP Facebook: How Likes and Followers Affect Users Perception of Leadership By Troy Johnston A QUALITATIVE STUDY Presented to Dr. Sharon Timberlake in Partial Fulfillment for the Degree of Master’s in Leadership Studies Major: Master’s in Leadership Studies Class: LOS689 Master’s Capstone II Under the Supervision of Dr. Sharon Timberlake University of Southern Maine May 10, 2018 Acknowledgements I would like to thank a number of individuals who helped me successfully complete both this research and my master’s degree. There were a number of professors who challenged and guided me; they were an inspiration, and their kindness gave me the encouragement to work hard and stay on task. Dr. Dan Jenkins and Dr. Elizabeth Goryunova were always available and were model professors who offered me quality examples to emulate. -

Human-Computer Interaction 10Th International Conference Jointly With

Human-Computer Interaction 10th International Conference, jointly with: Symposium on Human Interface (Japan) 2003; 5th International Conference on Engineering Psychology and Cognitive Ergonomics; 2nd International Conference on Universal Access in Human-Computer Interaction 2003 Final Program, 22-27 June 2003 • Crete, Greece, Conference Centre, Creta Maris Hotel In cooperation with: Chinese Academy of Sciences; Japan Management Association; Japan Ergonomics Society; Human Interface Society (Japan); Swedish Interdisciplinary Interest Group for Human-Computer Interaction - STIMDI; Associación Interacción Persona Ordenador - AIPO (Spain); Gesellschaft für Informatik e.V. - GI (Germany); European Research Consortium for Information and Mathematics - ERCIM HCI International www.hcii2003.gr Under the auspices of a distinguished international board of 114 members from 24 countries Conference Sponsors: Foundation for Research and Technology - Hellas, Institute of Computer Science, FORTH Table of Contents: Welcome Note; Thematic Areas & Program Boards; Opening Plenary Session Conference Registration - Secretariat: Conference Registration takes place at the Conference Secretariat, located at the Olympus Hall, Conference Centre Level 0, during the following hours: Saturday, June 21 14:00 – 20:00 Contacts: HCI International 2003, Institute of Computer Science (ICS), Foundation for Research and Technology - Hellas (FORTH), Science and Technology Park of Crete, Heraklion, Crete, GR-71110, GREECE http://www.ics.forth.gr Tel.: -

Whatsapp-Ening with Mobile Instant Messaging?

Getting acquainted with social networks and apps: WhatsApp-ening with mobile instant messaging? Anderson, Katie Elson https://scholarship.libraries.rutgers.edu/discovery/delivery/01RUT_INST:ResearchRepository/12643391590004646?l#13643535980004646 Anderson, K. E. (2016). Getting acquainted with social networks and apps: WhatsApp-ening with mobile instant messaging? In Library Hi Tech News (Vol. 33, Issue 6, pp. 11–15). Rutgers University. https://doi.org/10.7282/T3HD7XVX This work is protected by copyright. You are free to use this resource, with proper attribution, for research and educational purposes. Other uses, such as reproduction or publication, may require the permission of the copyright holder. Downloaded On 2021/09/25 05:30:36 -0400 Getting acquainted with social networks and apps: WhatsApp-ening with Mobile Instant Messaging? The use of Mobile Messaging (MM) or Mobile Instant Messaging (MIM) has grown in the past few years at astonishing rates. This growth has prompted data gatherers and trend forecasters to look at the use of mobile messaging apps in different ways than in the past. In a 2015 survey, The Pew Research Center asked about use of mobile messaging apps separately from cell phone texting for the first time (Duggan, 2015). Digital marketing site eMarketer.com published its first ever worldwide forecast for mobile messaging in 2015. This forecast report shows 1.4 billion current users of mobile messaging apps, or 75% of smartphone users, accessing a mobile messaging app at least once a month. This is a 31.6% increase from the previous year. The forecast predicts that by 2018 there will be two billion users, representing 80% of smartphone users (eMarketer.com, 2015).