Principled Procedural Parsing Nicolas Laurent




Recommended publications


Derivatives of Parsing Expression Grammars

Aaron Moss, Cheriton School of Computer Science, University of Waterloo, Waterloo, Ontario, Canada

This paper introduces a new derivative parsing algorithm for recognition of parsing expression grammars. Derivative parsing is shown to have a polynomial worst-case time bound, an improvement on the exponential bound of the recursive descent algorithm. This work also introduces asymptotic analysis based on inputs with a constant bound on both grammar nesting depth and number of backtracking choices; derivative and recursive descent parsing are shown to run in linear time and constant space on this useful class of inputs, with both the theoretical bounds and the reasonability of the input class validated empirically. This common-case constant memory usage of derivative parsing is an improvement on the linear space required by the packrat algorithm.

1 Introduction

Parsing expression grammars (PEGs) are a parsing formalism introduced by Ford [6]. Any LR(k) language can be represented as a PEG [7], but there are some non-context-free languages that may also be represented as PEGs (e.g. a^n b^n c^n [7]). Unlike context-free grammars (CFGs), PEGs are unambiguous, admitting no more than one parse tree for any grammar and input. PEGs are a formalization of recursive descent parsers allowing limited backtracking and infinite lookahead; a string in the language of a PEG can be recognized in exponential time and linear space using a recursive descent algorithm, or linear time and space using the memoized packrat algorithm [6]. PEGs are formally defined and these algorithms outlined in Section 3.
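To make the remark about non-context-free languages concrete, here is a minimal recursive descent sketch in Python for a^n b^n c^n (n ≥ 1). It assumes one commonly cited PEG for this language, `S <- &(A 'c') 'a'+ B !.`, `A <- 'a' A? 'b'`, `B <- 'b' B? 'c'`; the excerpt itself does not give a grammar, and the function names `match_A`, `match_B`, and `recognize` are hypothetical.

```python
def match_A(s, i):
    """A <- 'a' A? 'b'. Return the position after the match, or None."""
    if i < len(s) and s[i] == "a":
        j = i + 1
        k = match_A(s, j)          # A? is greedy and never retried
        if k is not None:
            j = k
        if j < len(s) and s[j] == "b":
            return j + 1
    return None


def match_B(s, i):
    """B <- 'b' B? 'c'. Return the position after the match, or None."""
    if i < len(s) and s[i] == "b":
        j = i + 1
        k = match_B(s, j)
        if k is not None:
            j = k
        if j < len(s) and s[j] == "c":
            return j + 1
    return None


def recognize(s):
    """S <- &(A 'c') 'a'+ B !."""
    # And-predicate: look ahead for a^n b^n followed by 'c', consuming nothing.
    k = match_A(s, 0)
    if k is None or k >= len(s) or s[k] != "c":
        return False
    # 'a'+ : consume one or more 'a'.
    j = 0
    while j < len(s) and s[j] == "a":
        j += 1
    if j == 0:
        return False
    # B must match b^n c^n, and "!." requires that no input remains.
    return match_B(s, j) == len(s)
```

The lookahead predicate checks that the counts of `a` and `b` agree without consuming input, and `B` then checks that the counts of `b` and `c` agree; this pairing is what lets a PEG go beyond context-free languages here.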

Ece351 Lab Manual

Derek Rayside & ECE351 Staff, ECE351 Lab Manual, University of Waterloo

Copyright © 2014 Derek Rayside & ECE351 Staff. Compiled March 6, 2014.

Acknowledgements:
• Prof Paul Ward suggested that we look into something with VHDL to have synergy with ECE327.
• Prof Mark Aagaard, as the ECE327 instructor, consulted throughout the development of this material.
• Prof Patrick Lam generously shared his material from the last offering of ECE251.
• Zhengfang (Alex) Duanmu & Lingyun (Luke) Li [1b Elec] wrote solutions to most labs in TXL.
• Jiantong (David) Gao & Rui (Ray) Kong [3b Comp] wrote solutions to the VHDL labs in ANTLR.
• Aman Muthrej and Atulan Zaman [3a Comp] wrote solutions to the VHDL labs in Parboiled.
• TAs Jon Eyolfson, Vajih Montaghami, Alireza Mortezaei, Wenzhu Man, and Mohammed Hassan.
• TA Wallace Wu developed the VHDL labs.
• High school students Brian Engio and Tianyu Guo drew a number of diagrams for this manual, wrote Javadoc comments for the code, and provided helpful comments on the manual.

Licensed under Creative Commons Attribution-ShareAlike (CC BY-SA) version 2.5 or greater. http://creativecommons.org/licenses/by-sa/2.5/ca/ http://creativecommons.org/licenses/by-sa/3.0/

Contents
0 Overview (Compiler Concepts: call stack, heap. Programming Concepts: version control, push, pull, merge, SSH keys, IDE, debugger, objects, pointers)
0.1 How the Labs Fit Together
0.2 Learning Progressions
0.3 How this project compares to CS241, the text book, etc.
0.4 Student work load
0.5 How this course compares to MIT 6.035
0.6 Where do I learn more?

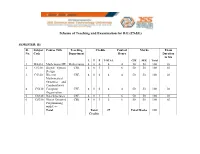

Scheme of Teaching and Examination for BE

Scheme of Teaching and Examination for B.E (CS&E), SEMESTER: III

| Sl. No. | Subject Code | Course Title | Teaching Department | Credits L | Credits T | Credits P | Credits Total | Contact Hours | Marks CIE | Marks SEE | Marks Total | Exam Duration (hrs) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | MA310 | Mathematics III | Mathematics | 4 | 0 | 0 | 4 | 4 | 50 | 50 | 100 | 03 |
| 2 | CS310 | Digital System Design | CSE | 4 | 0 | 1 | 5 | 6 | 50 | 50 | 100 | 03 |
| 3 | CS320 | Discrete Mathematical Structures and Combinatorics | CSE | 4 | 0 | 0 | 4 | 4 | 50 | 50 | 100 | 03 |
| 4 | CS330 | Computer Organization | CSE | 4 | 0 | 0 | 4 | 4 | 50 | 50 | 100 | 03 |
| 5 | CS340 | Data Structures | CSE | 4 | 0 | 1 | 5 | 6 | 50 | 50 | 100 | 03 |
| 6 | CS350 | Object Oriented Programming with C++ | CSE | 4 | 0 | 1 | 5 | 6 | 50 | 50 | 100 | 03 |

Total Credits: 27. Total Marks: 600.

Scheme of Teaching and Examination for B.E (CS&E), SEMESTER: IV

| Sl. No. | Subject Code | Course Title | Teaching Department | Credits L | Credits T | Credits P | Credits Total | Contact Hours | Marks CIE | Marks SEE | Marks Total | Exam Duration (hrs) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | MA410 | Probability, Statistics and Queuing | Mathematics | 4 | 0 | 0 | 4 | 4 | 50 | 50 | 100 | 03 |
| 2 | CS410 | Operating Systems | CSE | 4 | 0 | 1 | 5 | 6 | 50 | 50 | 100 | 03 |
| 3 | CS420 | Design and Analysis of Algorithms | CSE | 4 | 0 | 1 | 5 | 6 | 50 | 50 | 100 | 03 |
| 4 | CS430 | Theory of Computation | CSE | 4 | 0 | 0 | 4 | 4 | 50 | 50 | 100 | 03 |
| 5 | CS440 | Microprocessors | CSE | 4 | 0 | 1 | 5 | 6 | 50 | 50 | 100 | 03 |
| 6 | CS450 | Data Communication | CSE | 4 | 0 | 0 | 4 | 4 | 50 | 50 | 100 | 03 |

Total Credits: 27. Total Marks: 600.

Scheme of Teaching and Examination for B.E (CS&E), SEMESTER: V

Validating LR(1) Parsers

Jacques-Henri Jourdan (1, 2), François Pottier (2), and Xavier Leroy (2). (1) École Normale Supérieure, (2) INRIA Paris-Rocquencourt

Abstract. An LR(1) parser is a finite-state automaton, equipped with a stack, which uses a combination of its current state and one lookahead symbol in order to determine which action to perform next. We present a validator which, when applied to a context-free grammar G and an automaton A, checks that A and G agree. Validating the parser provides the correctness guarantees required by verified compilers and other high-assurance software that involves parsing. The validation process is independent of which technique was used to construct A. The validator is implemented and proved correct using the Coq proof assistant. As an application, we build a formally-verified parser for the C99 language.

1 Introduction

Parsing remains an essential component of compilers and other programs that input textual representations of structured data. Its theoretical foundations are well understood today, and mature technology, ranging from parser combinator libraries to sophisticated parser generators, is readily available to help implementing parsers. The issue we focus on in this paper is that of parser correctness: how to obtain formal evidence that a parser is correct with respect to its specification? Here, following established practice, we choose to specify parsers via context-free grammars enriched with semantic actions. One application area where the parser correctness issue naturally arises is formally-verified compilers such as the CompCert verified C compiler [14]. Indeed, in the current state of CompCert, the passes that have been formally verified start at abstract syntax trees (AST) for the CompCert C subset of C and extend to ASTs for three assembly languages.

LATE Ain't Earley: a Faster Parallel Earley Parser

Peter Ahrens, John Feser, Joseph Hui. July 18, 2018

Abstract

We present the LATE algorithm, an asynchronous variant of the Earley algorithm for parsing context-free grammars. The Earley algorithm is naturally task-based, but is difficult to parallelize because of dependencies between the tasks. We present the LATE algorithm, which uses additional data structures to maintain information about the state of the parse so that work items may be processed in any order. This property allows the LATE algorithm to be sped up using task parallelism. We show that the LATE algorithm can achieve a 120x speedup over the Earley algorithm on a natural language task.

1 Introduction

Improvements in the efficiency of parsers for context-free grammars (CFGs) have the potential to speed up applications in software development, computational linguistics, and human-computer interaction. The Earley parser has an asymptotic complexity that scales with the complexity of the CFG, a unique, desirable trait among parsers for arbitrary CFGs. However, while the more commonly used Cocke-Younger-Kasami (CYK) [2, 5, 12] parser has been successfully parallelized [1, 7], the Earley algorithm has seen relatively few attempts at parallelization. Our research objectives were to understand when there exists parallelism in the Earley algorithm, and to explore methods for exploiting this parallelism. We first tried to naively parallelize the Earley algorithm by processing the Earley items in each Earley set in parallel. We found that this approach does not produce any speedup, because the dependencies between Earley items force much of the work to be performed sequentially.

CS 432 Fall 2020 Top-Down (LL) Parsing

CS 432, Fall 2020. Mike Lam, Professor. Top-Down (LL) Parsing

Compilation

[Figure: compilation pipeline from source code through lexing (tokens) and parsing (syntax tree) to code generation and optimization (machine code), with "Front end", "Back end", and "Current focus" labels.]

Review
● Recognize regular languages with finite automata
– Described by regular expressions
– Rule-based transitions, no memory required
● Recognize context-free languages with pushdown automata
– Described by context-free grammars
– Rule-based transitions, MEMORY REQUIRED
● Add a stack!

Segue

KEY OBSERVATION: Allowing the translator to use memory to track parse state information enables a wider range of automated machine translation.

Chomsky Hierarchy of Languages

[Figure: nested language classes, from outermost to innermost: recursively enumerable, context-sensitive, context-free, regular. Annotation: "Most useful for PL".]

https://en.wikipedia.org/wiki/Chomsky_hierarchy

Parsing Approaches
● Top-down: begin with start symbol (root of parse tree), and gradually expand non-terminals
– Stack contains leaves that still need to be expanded
● Bottom-up: begin with terminals (leaves of parse tree), and gradually connect using non-terminals
– Stack contains roots of subtrees that still need to be connected

[Figure: parse tree for a = b + c, annotated with the top-down and bottom-up directions.]

Top-Down Parsing

Grammar:

    A → V = E
    V → a | b | c
    E → E + E
      | V

Algorithm:

    root = createNode(S)
    focus = root
    push(null)
    token = nextToken()
    loop:
        if (focus is non-terminal):
            B = chooseRuleAndExpand(focus)
            for each b in B.reverse():
                focus.addChild(createNode(b))
                push(b)
            focus = pop()
        else if (token

Total Parser Combinators

Nils Anders Danielsson, School of Computer Science, University of Nottingham, United Kingdom

Abstract

A monadic parser combinator library which guarantees termination of parsing, while still allowing many forms of left recursion, is described. The library's interface is similar to those of many other parser combinator libraries, with two important differences: one is that the interface clearly specifies which parts of the constructed parsers may be infinite, and which parts have to be finite, using dependent types and a combination of induction and coinduction; and the other is that the parser type is unusually informative. The library comes with a formal semantics, using which it is proved that the parser combinators are as expressive as possible. The implementation is supported by a machine-checked correctness proof.

Categories and Subject Descriptors: D.1.1 [Programming Techniques]: Applicative (Functional) Programming; E.1 [Data Structures]; F.3.1 [Logics and Meanings of Programs]: Specifying and Verifying and Reasoning about Programs; F.4.2 [Mathematical Logic and Formal Languages]: Grammars and Other Rewriting Systems (Grammar types, Parsing)

General Terms: Languages, theory, verification

Keywords: Dependent types, mixed induction and coinduction, parser combinators, productivity, termination

The library has an interface which is very similar to those of classical monadic parser combinator libraries. For instance, consider the following simple, left recursive, expression grammar:

    term   ::= factor | term '+' factor
    factor ::= atom | factor '*' atom
    atom   ::= number | '(' term ')'

We can define a parser which accepts strings from this grammar, and also computes the values of the resulting expressions, as follows (the combinators are described in Section 4):

    mutual
      term   = factor
             | ♯ term   >>= λ n₁ →
               tok '+'  >>= λ _  →
               factor   >>= λ n₂ →
               return (n₁ + n₂)
      factor = atom
             | ♯ factor >>= λ n₁ →
               tok '*'  >>= λ _  →
               atom     >>= λ n₂ →
               return (n₁ * n₂)
      atom   = number
             | tok '('  >>= λ _  →
               ♯ term   >>= λ n

CWI Scanprofile/PDF/300

Centrum voor Wiskunde en Informatica (Centre for Mathematics and Computer Science)

J. Heering, P. Klint, J.G. Rekers. Incremental generation of parsers. Computer Science/Department of Software Technology, Report CS-R8822, May

The Centre for Mathematics and Computer Science is a research institute of the Stichting Mathematisch Centrum, which was founded on February 11, 1946, as a nonprofit institution aiming at the promotion of mathematics, computer science, and their applications. It is sponsored by the Dutch Government through the Netherlands Organization for the Advancement of Pure Research (Z.W.O.).

Copyright © Stichting Mathematisch Centrum, Amsterdam

Incremental Generation of Parsers

J. Heering, Department of Software Technology, Centre for Mathematics and Computer Science, P.O. Box 4079, 1009 AB Amsterdam, The Netherlands

P. Klint, Department of Software Technology, Centre for Mathematics and Computer Science, P.O. Box 4079, 1009 AB Amsterdam, The Netherlands, and Programming Research Group, University of Amsterdam, P.O. Box 41882, 1009 DB Amsterdam, The Netherlands

J. Rekers, Department of Software Technology, Centre for Mathematics and Computer Science, P.O. Box 4079, 1009 AB Amsterdam, The Netherlands

A parser checks whether a text is a sentence in a language. Therefore, the parser is provided with the grammar of the language, and it usually generates a structure (parse tree) that represents the text according to that grammar. Most present-day parsers are not directly driven by the grammar but by a 'parse table', which is generated by a parse table generator. A table based parser works more efficiently than a grammar based parser does, and provided that the parser is used often enough, the cost of generating the parse table is outweighed by the gain in parsing efficiency.

Lecture 10: CYK and Earley Parsers (Building Parse Trees, CYK and Earley Algorithms, More Disambiguation)

Hack Your Language! CSE401 Winter 2016, Introduction to Compiler Construction

Lecture 10: CYK and Earley Parsers. Building Parse Trees, CYK and Earley algorithms, More Disambiguation

Ras Bodik, Alvin Cheung, Maaz Ahmad, Talia Ringer, Ben Tebbs

Announcements
• HW3 due Sunday
• Project proposals due tonight
– No late days
• Review session this Sunday 6-7pm EEB 115

Outline
• Last time we saw how to construct AST from parse tree
• We will now discuss algorithms for generating parse trees from input strings

Today
• CYK parser: builds the parse tree bottom up
• More Disambiguation: forcing the parser to select the desired parse tree
• Earley parser: solves CYK's inefficiency

CYK parser

Parser Motivation
• Given a grammar G and an input string s, we need an algorithm to:
– Decide whether s is in L(G)
– If so, generate a parse tree for s
• We will see two algorithms for doing this today
– Many others are available
– Each with different tradeoffs in time and space

CYK Algorithm
• Parsing algorithm for context-free grammars
• Invented by John Cocke, Daniel Younger, and Tadao Kasami
• Basic idea given string s with n tokens:
1. Find production rules that cover 1 token in s
2. Use 1. to find rules that cover 2 tokens in s
3. Use 2. to find rules that cover 3 tokens in s
4. …
N. Use N-1. to find rules that cover n tokens in s. If succeeds then s is in L(G), else it is not

A graphical way to visualize CYK
• Initial graph: the input (terminals)
• Repeat: add non-terminal edges until no more can be added.
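The "cover 1 token, then 2 tokens, ..., then n tokens" idea in the CYK excerpt above can be sketched as a small table-filling recognizer in Python. This is an illustrative sketch, not code from the lecture: it assumes the grammar is already in Chomsky normal form (the usual precondition for CYK, not stated in the excerpt), and the names `cyk_recognize`, `UNARY`, and `BINARY`, as well as the example grammar for a^n b^n, are hypothetical.

```python
from itertools import product

# Example grammar in Chomsky normal form for a^n b^n (n >= 1), an assumption
# made for illustration:
#   S -> A B | A X,  X -> S B,  A -> 'a',  B -> 'b'
UNARY = {"a": {"A"}, "b": {"B"}}                  # terminal -> nonterminals
BINARY = {("A", "B"): {"S"}, ("A", "X"): {"S"},   # (B, C) -> nonterminals A
          ("S", "B"): {"X"}}                      # such that A -> B C


def cyk_recognize(tokens, unary_rules, binary_rules, start):
    """Return True if `start` derives the whole token sequence."""
    n = len(tokens)
    if n == 0:
        return False
    # table[length][i] holds the nonterminals covering tokens[i:i + length].
    table = [[set() for _ in range(n)] for _ in range(n + 1)]
    # Step 1: rules that cover a single token.
    for i, tok in enumerate(tokens):
        table[1][i] = set(unary_rules.get(tok, ()))
    # Steps 2..n: combine two shorter covered spans into a longer one.
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            for split in range(1, length):
                left = table[split][i]
                right = table[length - split][i + split]
                for b, c in product(left, right):
                    table[length][i] |= binary_rules.get((b, c), set())
    # s is in L(G) exactly when the start symbol covers all n tokens.
    return start in table[n][0]
```

For example, `cyk_recognize(list("aabb"), UNARY, BINARY, "S")` fills the table bottom up and finds `S` covering all four tokens. The three nested loops over span length, start position, and split point are where the cubic running time of CYK comes from.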

Simple, Efficient, Sound-And-Complete Combinator Parsing for All Context

OCaml 2014 workshop, talk proposal. Tom Ridge, University of Leicester, UK

This proposal describes a parsing library that is based on current work due to be published as [3]. The talk will focus on the OCaml library and its usage.

1. Combinator parsers

Parsers for context-free grammars can be implemented directly and naturally in a functional style known as "combinator parsing", using recursion following the structure of the grammar rules. Traditionally parser combinators have struggled to handle all features of context-free grammars, such as left recursion.

2. Sound and complete combinator parsers

Previous work [2] introduced novel parser combinators that could be used to parse all context-free grammars. A parser generator built using these combinators was proved both sound and complete in the HOL4 theorem prover. This previous approach has all the traditional benefits of combi-

[...] grammar, the start symbol and the input to the back-end Earley parser, which parses the input and returns a parsing oracle. The oracle is then used to guide the action phase of the parser.

4. Real-world performance

In addition to O(n^3) theoretical performance, we also optimize our back-end Earley parser. Extensive performance measurements on small grammars (described in [3]) indicate that the performance is arguably better than the Happy parser generator [1].

5. Online distribution

This work has led to the P3 parsing library for OCaml, freely available online. The distribution includes extensive examples. Some of the examples are:
• Parsing and disambiguation of arithmetic expressions using precedence annotations.

Improving Upon Earley's Parsing Algorithm in Prolog

Matt Voss, Artificial Intelligence Center, University of Georgia, Athens, GA 30602. May 7, 2004

Abstract

This paper presents a modification of the Earley (1970) parsing algorithm in Prolog. The Earley algorithm presented here is based on an implementation in Covington (1994a). The modifications are meant to improve on that algorithm in several key ways. The parser features a predictor that works like a left-corner parser with links, thus decreasing the number of chart entries. It implements subsumption checking, and organizes chart entries to take advantage of first argument indexing for quick retrieval.

1 Overview

The Earley parsing algorithm is well known for its great efficiency in producing all possible parses of a sentence in relatively little time, without backtracking, and while handling left recursive rules correctly. It is also known for being considerably slower than most other parsing algorithms (see Covington (1994a) for one comparison of run times for a variety of algorithms). This paper outlines an attempt to overcome many of the pitfalls associated with implementations of Earley parsers. It uses the Earley parser in Covington (1994a) as a starting point. In particular the Earley top-down predictor is exchanged for a predictor that works like a left-corner predictor with links, following Leiss (1990). Chart entries store the positions of constituents in the input string, rather than lists containing the constituents themselves, and the arguments of chart entries are arranged to take advantage of first argument indexing. This means a small trade-off between easy-to-read code and efficiency of processing.

Staged Parser Combinators for Efficient Data Processing

Manohar Jonnalagedda*, Thierry Coppey‡, Sandro Stucki*, Tiark Rompf†*, Martin Odersky*. *LAMP, ‡DATA, EPFL: {first.last}@epfl.ch. †Oracle Labs: {first.last}@oracle.com

Abstract

Parsers are ubiquitous in computing, and many applications depend on their performance for decoding data efficiently. Parser combinators are an intuitive tool for writing parsers: tight integration with the host language enables grammar specifications to be interleaved with processing of parse results. Unfortunately, parser combinators are typically slow due to the high overhead of the host language abstraction mechanisms that enable composition.

We present a technique for eliminating such overhead. We use staging, a form of runtime code generation, to dissociate input parsing from parser composition, and eliminate intermediate data structures and computations associated with parser composition at staging time. A key challenge is to

[...] use the language's abstraction capabilities to enable composition. As a result, a parser written with such a library can look like formal grammar descriptions, and is also readily executable: by construction, it is well-structured, and easily maintainable. Moreover, since combinators are just functions in the host language, it is easy to combine them into larger, more powerful combinators.

However, parser combinators suffer from extremely poor performance (see Section 5) inherent to their implementation. There is a heavy penalty to be paid for the expressivity that they allow. A grammar description is, despite its declarative appearance, operationally interleaved with input handling, such that parts of the grammar description are rebuilt over and over again while input is processed.