Automatic Learning for the Classification of Chemical

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

(12) United States Patent (10) Patent No.: US 7,381,538 B2 Reardon Et Al

US007381538B2 (12) United States Patent (10) Patent No.: US 7,381,538 B2 Reardon et al. (45) Date of Patent: Jun. 3, 2008 (54) OPTICAL BIOSENSOR WITH ENHANCED 6,060,327 A * 5/2000 Keen ..................... 204,403.14 ACTIVITY RETENTION FOR DETECTION 6,159,681 A 12/2000 Zebala .......................... 435/4 OF HALOGENATED ORGANIC COMPOUNDS OTHER PUBLICATIONS Derek W. Campbell, entitled “The Development of Biosensors for (75) Inventors: Kenneth F. Reardon, Fort Collins, CO the Detection of Halogenated Groundwater Contaminants,” avail (US); Neema Das, Murgeshpalya (IN) able from Morgan Library at the CSU in Fort Collins, Colorado, having been submitted by Derek W. Campbell in fulfillment of the (73) Assignee: Colorado State University Research requirements for the Degree of Master of Science at Colorado State Foundation (CSURF), Fort Collins, University, Spring 1998 (labeled “Attachment B” of applicants' CO (US) provisional app. filed Jun. 1, 2001 as containing general background technical information}. (*) Notice: Subject to any disclaimer, the term of this inst k Eyle A. PS SEE s past l S.s I listed under 35 12,alogenated 1998 by Reardonflydrocarbons, and Campbell, U.S. Appl. 7 pages No. (labeled,89U, “Attachment Illed Sep. M YW- (b) by ayS. C” of applicants’pp pprovisional app.pp filed Jun. 1, 2001 as containin9. general background technical information}. (21) Appl. No.: 10/478,822 Cord Müller. F. Schubert. T. Scheper,p "Multicomponentp fiberopticalp biosensor for use in hemodialysis monitoring.” Proc. SPIE vol. (22) PCT Filed: Jun. 1, 2002 2131, 555-562 Biomedical Fiber Optic Instrumentation(Jul 1994) {referenced as “Cord Müller, et al.” in applicants’ provisional app. -

12) United States Patent (10

US007635572B2 (12) UnitedO States Patent (10) Patent No.: US 7,635,572 B2 Zhou et al. (45) Date of Patent: Dec. 22, 2009 (54) METHODS FOR CONDUCTING ASSAYS FOR 5,506,121 A 4/1996 Skerra et al. ENZYME ACTIVITY ON PROTEIN 5,510,270 A 4/1996 Fodor et al. MICROARRAYS 5,512,492 A 4/1996 Herron et al. 5,516,635 A 5/1996 Ekins et al. (75) Inventors: Fang X. Zhou, New Haven, CT (US); 5,532,128 A 7/1996 Eggers Barry Schweitzer, Cheshire, CT (US) 5,538,897 A 7/1996 Yates, III et al. s s 5,541,070 A 7/1996 Kauvar (73) Assignee: Life Technologies Corporation, .. S.E. al Carlsbad, CA (US) 5,585,069 A 12/1996 Zanzucchi et al. 5,585,639 A 12/1996 Dorsel et al. (*) Notice: Subject to any disclaimer, the term of this 5,593,838 A 1/1997 Zanzucchi et al. patent is extended or adjusted under 35 5,605,662 A 2f1997 Heller et al. U.S.C. 154(b) by 0 days. 5,620,850 A 4/1997 Bamdad et al. 5,624,711 A 4/1997 Sundberg et al. (21) Appl. No.: 10/865,431 5,627,369 A 5/1997 Vestal et al. 5,629,213 A 5/1997 Kornguth et al. (22) Filed: Jun. 9, 2004 (Continued) (65) Prior Publication Data FOREIGN PATENT DOCUMENTS US 2005/O118665 A1 Jun. 2, 2005 EP 596421 10, 1993 EP 0619321 12/1994 (51) Int. Cl. EP O664452 7, 1995 CI2O 1/50 (2006.01) EP O818467 1, 1998 (52) U.S. -

Covalent Tethering of Functional Groups to Proteins Kovalentes Anbinden Von Funktionsgruppen an Proteine Fixation Covalente De Groupes Fonctionnels À Des Protéines

(19) TZZ _T (11) EP 2 455 458 B1 (12) EUROPEAN PATENT SPECIFICATION (45) Date of publication and mention (51) Int Cl.: of the grant of the patent: C12N 9/14 (2006.01) C12N 9/86 (2006.01) 07.01.2015 Bulletin 2015/02 C12Q 1/34 (2006.01) G01N 33/50 (2006.01) G01N 33/58 (2006.01) (21) Application number: 11005045.7 (22) Date of filing: 30.01.2004 (54) Covalent tethering of functional groups to proteins Kovalentes Anbinden von Funktionsgruppen an Proteine Fixation covalente de groupes fonctionnels à des protéines (84) Designated Contracting States: (74) Representative: Potter Clarkson LLP AT BE BG CH CY CZ DE DK EE ES FI FR GB GR The Belgrave Centre HU IE IT LI LU MC NL PT RO SE SI SK TR Talbot Street Nottingham, NG1 5GG (GB) (30) Priority: 31.01.2003 US 444094 P 30.05.2003 US 474659 P (56) References cited: US-A- 4 777 269 (43) Date of publication of application: 23.05.2012 Bulletin 2012/21 • KEVIN KA LEUNG CHEUK ET AL: "SYNTHESIS OF OPTICALLY ACTIVE POLY (62) Document number(s) of the earlier application(s) in (PHENYLACETYLENES) CONTAINING AMINO accordance with Art. 76 EPC: ACID PENDANT GROUPS", POLYMERIC 04707032.1 / 1 594 962 MATERIALS SCIENCE AND ENGINEERING. PMSE PREPRINTS, AMERICAN CHEMICAL (73) Proprietor: PROMEGA CORPORATION SOCIETY, US, vol. 82, 1 January 2000 Madison, Wisconsin 53711 (US) (2000-01-01), page 56/57, XP009071552, ISSN: 0743-0515 (72) Inventors: • HYNKOVAK ET AL: "Identification of the catalytic • Wood, Keith, V. triad in the haloalkane dehalogenase from Madison, Sphingomonas paucimobilis UT26", FEBS Wisconsin 53711 (US) LETTERS,ELSEVIER, AMSTERDAM, NL, vol. -

Recherche Et Caracterisation D'activités

REPUBLIQUE ALGERIENNE DEMOCRATIQUE ET POPULAIRE MINISTERE DE L’ENSEIGNEMENT SUPERIEUR ET DE LA RECHERCHE SCIENTIFIQUE CENTRE UNIVERSITAIRE BELHADJ BOUCHAIB D’AIN-TEMOUCHENT Institut des Sciences Département des Sciences de la Nature et de la Vie Mémoire Pour l’obtention du Diplôme de Master en Sciences Biologiques Option : Biochimie Présenté par: Melle. BRAHIMI Ferdaous Melle. ABDELOUAHAB Ahlem RECHERCHE ET CARACTERISATION D’ACTIVITÉS AMYLASIQUES, LIPASIQUES ET CATALASIQUES CHEZ DES GOMMES DE L’AMANDIER ET DE L’ACACIA Encadrant M. Sofiane Mourad BENYAMINA Maitre de Conférences « B » au C.U.B.B.A.T Soutenu le 08/09/2020 Devant le jury composé de : Présidente : Dr. Nassima BRIXI GORMAT (MCB) C.U.B.B.A.T Examinateur : Dr. Farid BENNABI (MCB) C.U.B.B.A.T Encadrant : Dr. Sofiane Mourad BENYAMINA (MCB) C.U.B.B.A.T Remerciements Ce travail est l’aboutissement d’un dur labeur et de beaucoup de patience, nos remerciements vont d’abord au Créateur de l’univers qui nous a doté d’intelligence, nous a maintenu en santé, et de nous avoir donné le courage et la force pour réaliser ce modeste travail. On voudrait tout d’abord adresser toute nos gratitudes à l’encadrant de ce mémoire, Monsieur Sofiane Mourad BENYAMINA, pour son aide, son encouragement prodigués tout au long de ce travail de recherche, ainsi que pour son grande disponibilité, son rigueur scientifique, son enthousiasme et son précieux conseils qui nous ont permis de travailler dans les meilleures conditions. Nous les remercie aussi pour sa confiance, sa gentillesse ainsi que pour son regard avisé sur nos travaux. -

(12) Patent Application Publication (10) Pub. No.: US 2012/0266329 A1 Mathur Et Al

US 2012026.6329A1 (19) United States (12) Patent Application Publication (10) Pub. No.: US 2012/0266329 A1 Mathur et al. (43) Pub. Date: Oct. 18, 2012 (54) NUCLEICACIDS AND PROTEINS AND CI2N 9/10 (2006.01) METHODS FOR MAKING AND USING THEMI CI2N 9/24 (2006.01) CI2N 9/02 (2006.01) (75) Inventors: Eric J. Mathur, Carlsbad, CA CI2N 9/06 (2006.01) (US); Cathy Chang, San Marcos, CI2P 2L/02 (2006.01) CA (US) CI2O I/04 (2006.01) CI2N 9/96 (2006.01) (73) Assignee: BP Corporation North America CI2N 5/82 (2006.01) Inc., Houston, TX (US) CI2N 15/53 (2006.01) CI2N IS/54 (2006.01) CI2N 15/57 2006.O1 (22) Filed: Feb. 20, 2012 CI2N IS/60 308: Related U.S. Application Data EN f :08: (62) Division of application No. 1 1/817,403, filed on May AOIH 5/00 (2006.01) 7, 2008, now Pat. No. 8,119,385, filed as application AOIH 5/10 (2006.01) No. PCT/US2006/007642 on Mar. 3, 2006. C07K I4/00 (2006.01) CI2N IS/II (2006.01) (60) Provisional application No. 60/658,984, filed on Mar. AOIH I/06 (2006.01) 4, 2005. CI2N 15/63 (2006.01) Publication Classification (52) U.S. Cl. ................... 800/293; 435/320.1; 435/252.3: 435/325; 435/254.11: 435/254.2:435/348; (51) Int. Cl. 435/419; 435/195; 435/196; 435/198: 435/233; CI2N 15/52 (2006.01) 435/201:435/232; 435/208; 435/227; 435/193; CI2N 15/85 (2006.01) 435/200; 435/189: 435/191: 435/69.1; 435/34; CI2N 5/86 (2006.01) 435/188:536/23.2; 435/468; 800/298; 800/320; CI2N 15/867 (2006.01) 800/317.2: 800/317.4: 800/320.3: 800/306; CI2N 5/864 (2006.01) 800/312 800/320.2: 800/317.3; 800/322; CI2N 5/8 (2006.01) 800/320.1; 530/350, 536/23.1: 800/278; 800/294 CI2N I/2 (2006.01) CI2N 5/10 (2006.01) (57) ABSTRACT CI2N L/15 (2006.01) CI2N I/19 (2006.01) The invention provides polypeptides, including enzymes, CI2N 9/14 (2006.01) structural proteins and binding proteins, polynucleotides CI2N 9/16 (2006.01) encoding these polypeptides, and methods of making and CI2N 9/20 (2006.01) using these polynucleotides and polypeptides. -

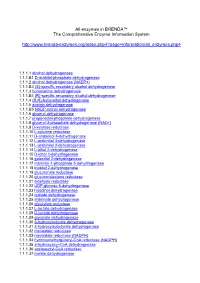

All Enzymes in BRENDA™ the Comprehensive Enzyme Information System

All enzymes in BRENDA™ The Comprehensive Enzyme Information System http://www.brenda-enzymes.org/index.php4?page=information/all_enzymes.php4 1.1.1.1 alcohol dehydrogenase 1.1.1.B1 D-arabitol-phosphate dehydrogenase 1.1.1.2 alcohol dehydrogenase (NADP+) 1.1.1.B3 (S)-specific secondary alcohol dehydrogenase 1.1.1.3 homoserine dehydrogenase 1.1.1.B4 (R)-specific secondary alcohol dehydrogenase 1.1.1.4 (R,R)-butanediol dehydrogenase 1.1.1.5 acetoin dehydrogenase 1.1.1.B5 NADP-retinol dehydrogenase 1.1.1.6 glycerol dehydrogenase 1.1.1.7 propanediol-phosphate dehydrogenase 1.1.1.8 glycerol-3-phosphate dehydrogenase (NAD+) 1.1.1.9 D-xylulose reductase 1.1.1.10 L-xylulose reductase 1.1.1.11 D-arabinitol 4-dehydrogenase 1.1.1.12 L-arabinitol 4-dehydrogenase 1.1.1.13 L-arabinitol 2-dehydrogenase 1.1.1.14 L-iditol 2-dehydrogenase 1.1.1.15 D-iditol 2-dehydrogenase 1.1.1.16 galactitol 2-dehydrogenase 1.1.1.17 mannitol-1-phosphate 5-dehydrogenase 1.1.1.18 inositol 2-dehydrogenase 1.1.1.19 glucuronate reductase 1.1.1.20 glucuronolactone reductase 1.1.1.21 aldehyde reductase 1.1.1.22 UDP-glucose 6-dehydrogenase 1.1.1.23 histidinol dehydrogenase 1.1.1.24 quinate dehydrogenase 1.1.1.25 shikimate dehydrogenase 1.1.1.26 glyoxylate reductase 1.1.1.27 L-lactate dehydrogenase 1.1.1.28 D-lactate dehydrogenase 1.1.1.29 glycerate dehydrogenase 1.1.1.30 3-hydroxybutyrate dehydrogenase 1.1.1.31 3-hydroxyisobutyrate dehydrogenase 1.1.1.32 mevaldate reductase 1.1.1.33 mevaldate reductase (NADPH) 1.1.1.34 hydroxymethylglutaryl-CoA reductase (NADPH) 1.1.1.35 3-hydroxyacyl-CoA -

Développement D'une Nouvelle Méthode De Docking Basée Sur Les

D´eveloppement d'une nouvelle m´ethode de docking bas´eesur les m´ecanismesenzymatiques et guid´eepar des groupes prosth´etiques Fran¸coisMartz To cite this version: Fran¸coisMartz. D´eveloppement d'une nouvelle m´ethode de docking bas´eesur les m´ecanismes enzymatiques et guid´eepar des groupes prosth´etiques.Chemo-informatique. Universit´eParis Sud - Paris XI, 2014. Fran¸cais. <NNT : 2014PA112326>. <tel-01168482> HAL Id: tel-01168482 https://tel.archives-ouvertes.fr/tel-01168482 Submitted on 25 Jun 2015 HAL is a multi-disciplinary open access L'archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destin´eeau d´ep^otet `ala diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publi´esou non, lished or not. The documents may come from ´emanant des ´etablissements d'enseignement et de teaching and research institutions in France or recherche fran¸caisou ´etrangers,des laboratoires abroad, or from public or private research centers. publics ou priv´es. THESE DE DOCTORAT DE L’UNIVERSITE PARIS SUD Spécialité : Chimie École doctorale : ED470 Présentée par François Martz Pour obtenir le titre de DOCTEUR de L’UNIVERSITÉ PARIS SUD Sujet de la thèse : Développement d’une nouvelle méthode de docking basée sur les mécanismes enzymatiques et guidée par des groupes prosthétiques Soutenue le 24 novembre 2014 devant le jury composé de : Présidente: Pr. Isabelle Demachy Université Paris Sud, Laboratoire de Chimie Physique, Orsay Rapporteurs: Dr Thérèse Malliavin -

(12) Patent Application Publication (10) Pub. No.: US 2015/0240226A1 Mathur Et Al

US 20150240226A1 (19) United States (12) Patent Application Publication (10) Pub. No.: US 2015/0240226A1 Mathur et al. (43) Pub. Date: Aug. 27, 2015 (54) NUCLEICACIDS AND PROTEINS AND CI2N 9/16 (2006.01) METHODS FOR MAKING AND USING THEMI CI2N 9/02 (2006.01) CI2N 9/78 (2006.01) (71) Applicant: BP Corporation North America Inc., CI2N 9/12 (2006.01) Naperville, IL (US) CI2N 9/24 (2006.01) CI2O 1/02 (2006.01) (72) Inventors: Eric J. Mathur, San Diego, CA (US); CI2N 9/42 (2006.01) Cathy Chang, San Marcos, CA (US) (52) U.S. Cl. CPC. CI2N 9/88 (2013.01); C12O 1/02 (2013.01); (21) Appl. No.: 14/630,006 CI2O I/04 (2013.01): CI2N 9/80 (2013.01); CI2N 9/241.1 (2013.01); C12N 9/0065 (22) Filed: Feb. 24, 2015 (2013.01); C12N 9/2437 (2013.01); C12N 9/14 Related U.S. Application Data (2013.01); C12N 9/16 (2013.01); C12N 9/0061 (2013.01); C12N 9/78 (2013.01); C12N 9/0071 (62) Division of application No. 13/400,365, filed on Feb. (2013.01); C12N 9/1241 (2013.01): CI2N 20, 2012, now Pat. No. 8,962,800, which is a division 9/2482 (2013.01); C07K 2/00 (2013.01); C12Y of application No. 1 1/817,403, filed on May 7, 2008, 305/01004 (2013.01); C12Y 1 1 1/01016 now Pat. No. 8,119,385, filed as application No. PCT/ (2013.01); C12Y302/01004 (2013.01); C12Y US2006/007642 on Mar. 3, 2006. -

Generate Metabolic Map Poster

Authors: Pallavi Subhraveti Ron Caspi Quang Ong Peter D Karp An online version of this diagram is available at BioCyc.org. Biosynthetic pathways are positioned in the left of the cytoplasm, degradative pathways on the right, and reactions not assigned to any pathway are in the far right of the cytoplasm. Transporters and membrane proteins are shown on the membrane. Ingrid Keseler Periplasmic (where appropriate) and extracellular reactions and proteins may also be shown. Pathways are colored according to their cellular function. Rbal243090Cyc: Rhodopirellula baltica 1 Cellular Overview Connections between pathways are omitted for legibility. -

Springer Handbook of Enzymes

Dietmar Schomburg Ida Schomburg (Eds.) Springer Handbook of Enzymes Alphabetical Name Index 1 23 © Springer-Verlag Berlin Heidelberg New York 2010 This work is subject to copyright. All rights reserved, whether in whole or part of the material con- cerned, specifically the right of translation, printing and reprinting, reproduction and storage in data- bases. The publisher cannot assume any legal responsibility for given data. Commercial distribution is only permitted with the publishers written consent. Springer Handbook of Enzymes, Vols. 1–39 + Supplements 1–7, Name Index 2.4.1.60 abequosyltransferase, Vol. 31, p. 468 2.7.1.157 N-acetylgalactosamine kinase, Vol. S2, p. 268 4.2.3.18 abietadiene synthase, Vol. S7,p.276 3.1.6.12 N-acetylgalactosamine-4-sulfatase, Vol. 11, p. 300 1.14.13.93 (+)-abscisic acid 8’-hydroxylase, Vol. S1, p. 602 3.1.6.4 N-acetylgalactosamine-6-sulfatase, Vol. 11, p. 267 1.2.3.14 abscisic-aldehyde oxidase, Vol. S1, p. 176 3.2.1.49 a-N-acetylgalactosaminidase, Vol. 13,p.10 1.2.1.10 acetaldehyde dehydrogenase (acetylating), Vol. 20, 3.2.1.53 b-N-acetylgalactosaminidase, Vol. 13,p.91 p. 115 2.4.99.3 a-N-acetylgalactosaminide a-2,6-sialyltransferase, 3.5.1.63 4-acetamidobutyrate deacetylase, Vol. 14,p.528 Vol. 33,p.335 3.5.1.51 4-acetamidobutyryl-CoA deacetylase, Vol. 14, 2.4.1.147 acetylgalactosaminyl-O-glycosyl-glycoprotein b- p. 482 1,3-N-acetylglucosaminyltransferase, Vol. 32, 3.5.1.29 2-(acetamidomethylene)succinate hydrolase, p. 287 Vol. -

Dependent Monooxygenases

The evolution of the flavin-dependent monooxygenases Chopping and Changing: the Evolution of the Flavin- dependent Monooxygenases Maria Laura Mascotti1*, Maximiliano Juri Ayub1, Nicholas Furnham2, Janet M. Thornton3, Roman A Laskowski3* 1IMIBIO-SL CONICET, Facultad de Química Bioquímica y Farmacia, Universidad Nacional de San Luis, Ejército de los Andes 950, San Luis, D5700HHW, Argentina 2Department of Pathogen Molecular Biology, London School of Hygiene and Tropical Medicine, Keppel Street, London, WC1E 7HT, UK 3EMBL-EBI, Wellcome Trust Genome Campus, Hinxton, Cambridge, CB10 1SD, UK *corresponding authors: Maria Laura Mascotti ([email protected]), Roman A. Laskowski ([email protected]) Supplementary Data Page nº Table S1 …………………….. 2-7 Dataset S1 ………………………. 8 Table S2 ………………………. 9 Figure S1 ……………………... 10 Figure S2 ……………………... 11 References ..……………………. 12 1 The evolution of the flavin-dependent monooxygenases Table S1 Classification of enzymes included in the EC sub-subclasses 1.13.12, 1.14.13 and 1.14.14. Data collected from the enzyme database BRENDA (http://www.brenda-enzymes.org/, last accessed on 8th June 2016). The classes containing flavin- dependent monooxygenases are identified by FMO in the final column and their class (A-H). EC PDBs Enzyme Name Classification* 1.13.12.1 - arginine 2-monooxygenase FMO G 1.13.12.2 - lysine 2-monooxygenase FMO G 1.13.12.3 2 tryptophan 2-monooxygenase FMO G 1.13.12.4 30 lactate 2-monooxygenase FMO H 1.13.12.5 11 Renilla-luciferin 2-monooxygenase Luc 1.13.12.6 - Cypridina-luciferin 2-monooxygenase -

Functional and Structural Characterization of a Flavoprotein

ARTICLE https://doi.org/10.1038/s41467-021-21200-9 OPEN Functional and structural characterization of a flavoprotein monooxygenase essential for biogenesis of tryptophylquinone cofactor Toshinori Oozeki1,3, Tadashi Nakai 1,2,3, Kazuki Kozakai1, Kazuki Okamoto1, Shun’ichi Kuroda1, ✉ Kazuo Kobayashi1, Katsuyuki Tanizawa1 & Toshihide Okajima 1 1234567890():,; Bioconversion of peptidyl amino acids into enzyme cofactors is an important post- translational modification. Here, we report a flavoprotein, essential for biosynthesis of a protein-derived quinone cofactor, cysteine tryptophylquinone, contained in a widely dis- tributed bacterial enzyme, quinohemoprotein amine dehydrogenase. The purified flavoprotein catalyzes the single-turnover dihydroxylation of the tryptophylquinone-precursor, tryptophan, in the protein substrate containing triple intra-peptidyl crosslinks that are pre-formed by a radical S-adenosylmethionine enzyme within the ternary complex of these proteins. Crystal structure of the peptidyl tryptophan dihydroxylase reveals a large pocket that may dock the protein substrate with the bound flavin adenine dinucleotide situated close to the precursor tryptophan. Based on the enzyme-protein substrate docking model, we propose a chemical reaction mechanism of peptidyl tryptophan dihydroxylation catalyzed by the flavoprotein monooxygenase. The diversity of the tryptophylquinone-generating systems suggests con- vergent evolution of the peptidyl tryptophan-derived cofactors in different proteins. 1 Institute of Scientific and Industrial Research, Osaka University, Osaka, Japan. 2 Faculty of Life Sciences, Hiroshima Institute of Technology, Hiroshima, Japan. ✉ 3These authors contributed equally: Toshinori Oozeki, Tadashi Nakai. email: [email protected] NATURE COMMUNICATIONS | (2021) 12:933 | https://doi.org/10.1038/s41467-021-21200-9 | www.nature.com/naturecommunications 1 ARTICLE NATURE COMMUNICATIONS | https://doi.org/10.1038/s41467-021-21200-9 osttranslational protein modifications expand the chemical (γ-subunit)13,14.