Intelligence & WMD: Reports, Politics, and Intelligence Failures: the Case


Recommended publications

Iraq's WMD Capability

BRITISH AMERICAN SECURITY INFORMATION COUNCIL BASIC SPECIAL REPORT Unravelling the Known Unknowns: Why no Weapons of Mass Destruction have been found in Iraq By David Isenberg and Ian Davis BASIC Special Report 2004.1 January 2004 1 The British American Security Information Council The British American Security Information Council (BASIC) is an independent research organization that analyzes international security issues. BASIC works to promote awareness of security issues among the public, policy makers and the media in order to foster informed debate on both sides of the Atlantic. BASIC in the U.K. is a registered charity no. 1001081 BASIC in the U.S. is a non-profit organization constituted under Section 501(c)(3) of the U.S. Internal Revenue Service Code David Isenberg, Senior Analyst David Isenberg joined BASIC's Washington office in November 2002. He has a wide background in arms control and national security issues, and brings close to 20 years of experience in this field, including three years as a member of DynMeridian's Arms Control & Threat Reduction Division, and nine years as Senior Analyst at the Center for Defense Information. Ian Davis, Director Dr. Ian Davis is Executive Director of BASIC and has a rich background in government, academia, and the non-governmental organization (NGO) sector. He received both his Ph.D. and B.A. in Peace Studies from the University of Bradford. He was formerly Program Manager at Saferworld before being appointed as the new Executive Director of BASIC in October 2001. He has published widely on British defense and foreign policy, European security, the international arms trade, arms export controls, small arms and light weapons and defense diversification. -

Chapter 22. Case Study

CHAPTER 22 Case Study: A Tale of Two NIEs

The two cases discussed in this chapter provide contrasting insights into the process of preparing intelligence estimates in the United States. They are presented here as the basis for a capstone set of critical thinking questions that are tied to the material discussed throughout this text.

In 1990, the National Intelligence Council produced a national intelligence estimate (NIE), the most authoritative intelligence assessment produced by the intelligence community, on Yugoslavia. Twelve years later, the National Intelligence Council produced an NIE on Iraq’s WMD program.

The Yugoslavia NIE
• Used a sound prediction methodology
• Got it right
• Presented conclusions that were anathema to U.S. policymakers
• Had zero effect on U.S. policy

The Iraqi WMD NIE, in contrast,
• Used a flawed prediction methodology
• Got it wrong
• Presented conclusions that were exactly what U.S. policymakers wanted to hear
• Provided support to a predetermined U.S. policy

This case highlights the differences in analytic approaches used in the two NIEs. Both NIEs now are available online. The full text of the “Yugoslavia Transformed” NIE is available at https://www.cia.gov/library/readingroom/docs/1990-10-01.pdf. In 2014, the CIA released the most complete copy (with previously redacted material) of the original “Iraq’s Continuing Programs for Weapons of Mass Destruction” NIE. It is available at https://www.cia.gov/library/reports/general-reports-1/iraq_wmd/Iraq_Oct_2002.htm.

The Yugoslavia NIE

The opening statements of the 1990 Yugoslavia NIE contain four conclusions that were remarkably prescient: Copyright ©2020 by SAGE Publications, Inc. -

Nieman Reports

NIEMAN REPORTS. The Nieman Foundation for Journalism at Harvard University, One Francis Avenue, Cambridge, Massachusetts 02138. Vol. 62 No. 1, Spring 2008. 21st Century Muckrakers: Who Are They? How Do They Do Their Work? Words & Reflections: Secrets, Sources and Silencing Watchdogs. Journalism 2.0. End Note.

‘to promote and elevate the standards of journalism’ Agnes Wahl Nieman

went to the Carnegie Endowment in New York but found times to return to Cambridge (like many, I had “withdrawal symptoms” after my Harvard year) and would meet with Tenney. She came to my wedding in Toronto in 1984, and we tried to keep in touch regularly. Several of our class, Peggy Simpson, Peggy Engel, Kat Harting, and Nancy Day visited Tenney in her assisted living facility in Cambridge some years ago, during a Nieman reunion. She cared little about her own problems and was always interested in others. Curator Jim Thomson was the public and intellectual face of

of the Oakland Tribune, and Maynard was throwing out questions fast and furiously about my civil rights coverage. I realized my interview was lasting longer than most, and I wondered, “Is he trying to knock me out of competition?” Then I happened to glance over at Tenney and got the only smile from the group, and a warm, welcoming one it was. I felt calmer. Finally, when the interview ended, I am happy to say, Maynard leaped out of his chair and hugged me. Tenney was a unique woman, and I thoroughly enjoyed her friendship. -

NSA/CSS Policy 2-4: Handling of Requests for Release of U.S. Identities

NATIONAL SECURITY AGENCY CENTRAL SECURITY SERVICE NSA/CSS POLICY 2-4 Issue Date: 10 May 2019 Revised: HANDLING OF REQUESTS FOR RELEASE OF U.S. IDENTITIES PURPOSE AND SCOPE This policy, developed in consultation with the Director of National Intelligence (DNI), the Attorney General, and the Secretary of Defense, implements Intelligence Community Policy Guidance 107.1, "Requests for Identities of U.S. Persons in Disseminated Intelligence Reports" (Reference a), and prescribes the policy, procedures, and responsibilities for responding to a requesting entity, other than NSA/CSS, for post-publication release and dissemination of masked U.S. person identity information in disseminated serialized NSA/CSS reporting. This policy applies exclusively to requests from a requesting entity, other than NSA/CSS, for post-publication release and dissemination of nonpublic U.S. person identity information that was masked in a disseminated serialized NSA/CSS report. This policy does not apply in circumstances where a U.S. person has consented to the dissemination of communications to, from, or about the U.S. person. This policy applies to all NSA/CSS personnel and to all U.S. Cryptologic System Government personnel performing an NSA/CSS mission. This policy does not affect any minimization procedures established pursuant to the Foreign Intelligence Surveillance Act of 1978 (Reference b), Executive Order 12333 (Reference c), or other provisions of law. This policy does not affect the requirements established in Annex A, "Dissemination of Congressional Identity Information," of Intelligence Community Directive 112, "Congressional Notification" (Reference d). General, U.S. Army, Director, NSA/Chief, CSS. Endorsed by: Chief, Policy, Information, Performance, and Exports. NSA/CSS Policy 2-4 is approved for public release. -

Curveball Saga

Bob DROGIN & John GOETZ: The Curveball Saga Los Angeles Times 2005, November 20 THE CURVEBALL SAGA How U.S. Fell Under the Spell of 'Curveball' The Iraqi informant's German handlers say they had told U.S. officials that his information was 'not proven,' and were shocked when President Bush and Colin L. Powell used it in key prewar speeches. By Bob Drogin and John Goetz, Special to The Times The German intelligence officials responsible for one of the most important informants on Saddam Hussein's suspected weapons of mass destruction say that the Bush administration and the CIA repeatedly exaggerated his claims during the run-up to the war in Iraq. Five senior officials from Germany's Federal Intelligence Service, or BND, said in interviews with The Times that they warned U.S. intelligence authorities that the source, an Iraqi defector code-named Curveball, never claimed to produce germ weapons and never saw anyone else do so. According to the Germans, President Bush mischaracterized Curveball's information when he warned before the war that Iraq had at least seven mobile factories brewing biological poisons. Then-Secretary of State Colin L. Powell also misstated Curveball's accounts in his prewar presentation to the United Nations on Feb. 5, 2003, the Germans said. Curveball's German handlers for the last six years said his information was often vague, mostly secondhand and impossible to confirm. "This was not substantial evidence," said a senior German intelligence official. "We made clear we could not verify the things he said." The German authorities, speaking about the case for the first time, also said that their informant suffered from emotional and mental problems. -

Nsa-Spybases-Expansi

National Security Agency Military Construction, Defense-Wide FY 2008 Budget Estimates ($ in thousands)

| State/Installation/Project | Authorization Request | Approp. Request | New/Current Mission | Page No. |
| --- | --- | --- | --- | --- |
| Georgia, Augusta: Regional Security Operation Center Inc. III | - | 100,000 | C | 202 |
| Hawaii, Kunia, Naval Security Group Activity: Regional Security Operation Center Inc. III | - | 136,318 | C | 207 |
| Maryland, Fort Meade: NSAW Utility Management System | 7,901 | 7,901 | C | 213 |
| Maryland, Fort Meade: OPS1 South Stair Tower | 4,000 | 4,000 | C | 217 |
| Total | 11,901 | 248,219 | | |

FY 2008 MILITARY CONSTRUCTION PROGRAM

1. COMPONENT: NSA/CSS DEFENSE
2. DATE: February 2007
3. INSTALLATION AND LOCATION: FORT GORDON, GEORGIA
4. COMMAND: NSA/CSS
5. AREA CONSTRUCTION COST INDEX: 0.84
6. PERSONNEL STRENGTH (PERMANENT, STUDENTS, SUPPORTED, TOTAL; each OFF, ENL, CIV): Army Installation. a. AS OF x; b. END FY: CLASSIFIED
7. INVENTORY DATA ($000)
A. TOTAL ACREAGE
B. INVENTORY TOTAL AS OF
C. AUTHORIZED NOT YET IN INVENTORY: 340,854
D. AUTHORIZATION REQUESTED IN THIS PROGRAM: 0
E. AUTHORIZATION INCLUDED IN FOLLOWING PROGRAM: 0
F. PLANNED IN NEXT THREE YEARS: 0
G. REMAINING DEFICIENCY: 0
H. GRAND TOTAL: 340,854
8. PROJECTS REQUESTED IN THIS PROGRAM: CATEGORY CODE 141; PROJECT NUMBER 50080; PROJECT TITLE Georgia Regional Security Operations Center (FY08) (3rd Increment) (NSA/CSS Georgia); COST ($000) 100,000; DESIGN START Jan 06; DESIGN COMPLETE May 06
9. FUTURE PROJECTS:
a. INCLUDED IN FOLLOWING PROGRAM: CATEGORY CODE 141; PROJECT TITLE Georgia Regional Security Operations Center (FY09) (4th Increment) (NSA/CSS Georgia); COST ($000) 86,550
b. PLANNED IN NEXT THREE YEARS: CATEGORY CODE; PROJECT TITLE; COST ($000)
10. -

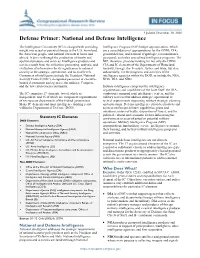

Defense Primer: National and Defense Intelligence

Updated December 30, 2020. Defense Primer: National and Defense Intelligence

The Intelligence Community (IC) is charged with providing insight into actual or potential threats to the U.S. homeland, the American people, and national interests at home and abroad. It does so through the production of timely and apolitical products and services. Intelligence products and services result from the collection, processing, analysis, and evaluation of information for its significance to national security at the strategic, operational, and tactical levels. Consumers of intelligence include the President, National Security Council (NSC), designated personnel in executive branch departments and agencies, the military, Congress, and the law enforcement community.

The IC comprises 17 elements, two of which are independent, and 15 of which are component organizations of six separate departments of the federal government. Many IC elements and most intelligence funding reside within the Department of Defense (DOD).

Intelligence Program (NIP) budget appropriations, which are a consolidation of appropriations for the ODNI; CIA; general defense; and national cryptologic, reconnaissance, geospatial, and other specialized intelligence programs. The NIP, therefore, provides funding for not only the ODNI, CIA and IC elements of the Departments of Homeland Security, Energy, the Treasury, Justice and State, but also, substantially, for the programs and activities of the intelligence agencies within the DOD, to include the NSA, NGA, DIA, and NRO.

Defense intelligence comprises the intelligence organizations and capabilities of the Joint Staff, the DIA, combatant command joint intelligence centers, and the military services that address strategic, operational or tactical requirements supporting military strategy, planning, and operations. Defense intelligence provides products and -

Ministerial Appointments, July 2018

Ministerial appointments, July 2018

Department; Secretary of State; Permanent Secretary

PM: The Rt Hon Theresa May MP; The Rt Hon Brandon Lewis MP (Party Chairman); James Cleverly MP (Deputy Party Chairman); Gavin Barwell (Chief of Staff)

Cabinet Office: The Rt Hon David Lidington CBE MP (Chancellor of the Duchy of Lancaster and Minister for the Cabinet Office); The Rt Hon Andrea Leadsom MP (Lord President of the Council and Leader of the HoC); The Rt Hon Brandon Lewis MP (Minister without portfolio); Oliver Dowden CBE MP (Parliamentary Secretary, Minister for Implementation); Chloe Smith MP (Parliamentary Secretary, Minister for the Constitution); John Manzoni (Chief Exec of the Civil Service); Sir Jeremy Heywood (Head of the Civil Service, Cabinet Secretary)

Treasury (HMT): The Rt Hon Philip Hammond MP; The Rt Hon Elizabeth Truss MP (Chief Secretary to the Treasury); The Rt Hon Mel Stride MP (Financial Secretary to the Treasury); John Glen MP (Economic Secretary to the Treasury); Robert Jenrick MP (Exchequer Secretary to the Treasury); Tom Scholar

Ministry of Housing, Communities & Local Government (MHCLG): The Rt Hon James Brokenshire MP; Kit Malthouse MP (Minister of State for Housing); Jake Berry MP (Parliamentary Under Secretary of State and Minister for the Northern Powerhouse and Local Growth); Rishi Sunak (Parliamentary Under Secretary of State, Minister for Local Government); Heather Wheeler MP (Parliamentary Under Secretary of State, Minister for Housing and Homelessness); Lord Bourne of Aberystwyth (Parliamentary Under Secretary of State and Minister for Faith) (Jointly with Wales Office); Nigel Adams (Parliamentary Under Secretary of State); Melanie Dawes CB

Business, Energy & Industrial Strategy (BEIS): The Rt Hon Greg Clark MP; The Rt Hon Claire Perry MP (Minister of State for Energy and Clean Growth); Sam Gyimah (Minister of State for Universities, Science, Research and Innovation); Andrew Griffiths MP (Parliamentary Under Secretary ...); Richard Harrington MP (Parliamentary Under Secretary ...); The Rt Hon Lord Henley (Parliamentary Under Secretary ...); Alex Chisholm -

Fostering Global Security: Nonviolent Resistance and US Foreign Policy

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2010 Fostering Global Security: Nonviolent Resistance and US Foreign Policy Amentahru Wahlrab University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Communication Commons, and the International Relations Commons Recommended Citation Wahlrab, Amentahru, "Fostering Global Security: Nonviolent Resistance and US Foreign Policy" (2010). Electronic Theses and Dissertations. 680. https://digitalcommons.du.edu/etd/680 This Dissertation is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. Fostering Global Security: Nonviolent Resistance and US Foreign Policy. A Dissertation presented to The Faculty of the Joseph Korbel School of International Studies, University of Denver. In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy. By Amentahru Wahlrab, November 2010. Advisor: Jack Donnelly. © Copyright by Amentahru Wahlrab, 2010. All Rights Reserved. Author: Amentahru Wahlrab Title: Fostering Global Security: Nonviolent Resistance and US Foreign Policy Advisor: Jack Donnelly Degree Date: November 2010 ABSTRACT This dissertation comprehensively evaluates, for the first time, nonviolence and its relationship to International Relations (IR) theory and US foreign policy along the categories of principled, strategic, and regulative nonviolence. The current debate within nonviolence studies is between principled and strategic nonviolence as relevant categories for theorizing nonviolent resistance. Principled nonviolence, while retaining the primacy of ethics, is often not practical. -

A Reference Guide to Selected Historical Documents Relating to the National Security Agency/Central Security Service (NSA/CSS) 1931-1985

Description of document: A Reference Guide to Selected Historical Documents Relating to the National Security Agency/Central Security Service (NSA/CSS) 1931-1985 Requested date: 15-June-2009 Released date: 03-February-2010 Posted date: 15-February-2010 Source of document: National Security Agency Attn: FOIA/PA Office (DJP4) 9800 Savage Road, Suite 6248 Ft. George G. Meade, MD 20755-6248 Fax: 443-479-3612 Online form: Here The governmentattic.org web site (“the site”) is noncommercial and free to the public. The site and materials made available on the site, such as this file, are for reference only. The governmentattic.org web site and its principals have made every effort to make this information as complete and as accurate as possible; however, there may be mistakes and omissions, both typographical and in content. The governmentattic.org web site and its principals shall have neither liability nor responsibility to any person or entity with respect to any loss or damage caused, or alleged to have been caused, directly or indirectly, by the information provided on the governmentattic.org web site or in this file. The public records published on the site were obtained from government agencies using proper legal channels. Each document is identified as to the source. Any concerns about the contents of the site should be directed to the agency originating the document in question. GovernmentAttic.org is not responsible for the contents of documents published on the website. A REFERENCE GUIDE TO SELECTED HISTORICAL DOCUMENTS RELATING TO THE NATIONAL SECURITY AGENCY/CENTRAL SECURITY SERVICE 1931-1985 (U) SOURCE DOCUMENTS IN CRYPTOLOGIC HISTORY Compiled by: Gerald K. -

The United Nations at 70 Witness Seminar 3

The United Nations at 70, witness seminar 3. The UN and international peace and security: navigating a divided world? British perspectives and experiences. Wednesday 13 January 2016, Hoare Memorial Hall, Church House, Westminster. INTRODUCTION TO SEMINAR SERIES To mark the UN’s 70th anniversary this year, the British Association of Former UN Civil Servants (BAFUNCS) and United Nations Association – UK (UNA-UK) are organising three witness seminars to draw on the experience of British citizens who have worked for the UN over the past seven decades. The purpose of the seminar series is to provide recommendations for UK action to increase the effectiveness of the UN, at a time when the need for the UN is more urgent but the international system is under increasing strain. Our partners for the seminar series are: All Souls College, Oxford; the Bodleian Library, Oxford; Institute of Development Studies, Sussex; King’s College London; and the Overseas Development Institute. The UK Foreign & Commonwealth Office (FCO) and Department for International Development (DFID) have generously provided funding for the series. The events take the form of ‘witness seminars’ that will capture the experiences of senior individuals, in particular British citizens, who have worked for or with the UN system at the headquarters and country level. They will serve as a record of testimony, providing a long-term perspective on UN issues and contributing to knowledge and understanding of the UK’s evolving role within the Organisation. UN scholars, practitioners, policy-makers, civil servants and students will be invited to attend and contribute. The first two seminars have already taken place: Witness seminar 1: The UK and the UN in development cooperation, held at the Institute of Development Studies (IDS), University of Sussex, on 13-14 May 2015; Witness seminar 2: The UK and the UN in humanitarian action, held at the Weston (Bodleian) Library, University of Oxford, on 16 October 2015.