SGI Altix Applications Development and Optimization

Recommended publications

Ebook - Informations About Operating Systems Version: August 15, 2006 | Download

eBook - Informations about Operating Systems Version: August 15, 2006 | Download: www.operating-system.org AIX, AmigaOS, AtheOS, BeIA, BeOS, BSDi, CP/M, Darwin, EPOC, FreeBSD, HP-UX, Hurd, Inferno, IRIX, JavaOS, LFS, Linspire, Linux, MacOS, Minix, MorphOS, MS-DOS, MVS, NetBSD, NetWare, Newdeal, NEXTSTEP, OpenBSD, OS/2, Further operating systems, PalmOS, Plan9, QNX, RiscOS, Solaris, SuSE Linux, Unicos, Unix, Unixware, Windows 2000, Windows 3.11, Windows 95, Windows 98, Windows CE, Windows Family, Windows ME, Windows NT 3.1, Windows NT 4.0, Windows Server 2003, Windows Vista, Windows XP, Apple - Company, AT&T - Company, Be Inc. - Company, BSD Family, Cray Inc. -

SGI® L1 and L2 Controller Software User's Guide

SGI® L1 and L2 Controller Software User’s Guide 007-3938-004 CONTRIBUTORS Written by Linda Rae Sande Revised by Francisco Razo and Terry Schultz Illustrated by Dan Young Production by Terry Schultz Engineering contributions by Don Adams, Michael T. Brown, Dick Brownell, Jason Chang, Steve Hein, Jill Heitpas, Nancy Heller, Matt Hoy, Hao Pham, Craig Schultz, and Lisa Steinmetz. COPYRIGHT © 2002, 2003, 2004, 2005, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The software described in this document is “commercial computer software” provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, Altix, Onyx, and Origin are registered trademarks and Fuel, NUMAflex, NUMAlink, Prism, and SGIconsole are trademarks of Silicon Graphics, Inc., in the U.S. and/or other countries worldwide. All other trademarks mentioned herein are the property of their respective owners. New Features in This Guide This manual has been updated with information to support the SGI Altix 3700 Bx2 system. Major Documentation Changes The following sections were revised for this release: • Added information about the Silicon Graphics Prism Visualization System in the Introduction, Chapter 1, and Chapter 2. -

Application of General Purpose HPC Systems in HPEC David Alexander

Application of General Purpose HPC Systems in HPEC David Alexander Silicon Graphics, Inc. Phone: (650) 933-1073 Fax: (650) 932-0663 Email: [email protected] Areas that this paper/presentation will address: * Reconfigurable Computing for Embedded Systems * High-Speed Interconnect Technologies Abstract: High performance embedded computing (HPEC) has traditionally been performed by systems designed specifically for the task. Recent years have seen the increasing application of general-purpose high performance computing (HPC) systems in embedded applications. General purpose HPC systems typically have a large user base which results in broad application SW and device driver availability, robust development and debugging tools, and revenue streams which support significant R&D funding of technologies to enhance HPC system performance and reliability. Various factors have prevented wider adoption of general purpose HPC systems in the embedded space...factors such as lack of dense, ruggedized packaging suitable for embedded applications, lack of real-time capabilities in general purpose operating systems [1], and performance/watt and performance/unit volume advantages that specialized systems have traditionally had over general purpose HPC systems. This presentation details plans for addressing these shortcomings through the deployment of a heterogeneous computing architecture which incorporates FPGA-based reconfigurable computing and I/O elements, system interconnect advancements leveraged from HPC system development, microprocessor and system advancements developed under DARPA's HPCS program, and the mapping of the system into packaging suitable for HPEC applications. Introduction and System Architectural Review SGI's ccNUMA (cache coherent non-uniform memory architecture) global shared memory system architecture is the basis of our general-purpose Origin [2] and Altix [3] HPC systems. -

SGI® Altix® 330 Self-Paced Training

SGI Multi-Paradigm Architecture Michael Woodacre Chief Engineer, Server Platform Group [email protected] A History of Innovation in HPC [Timeline figure, years marked 1982, 1984, 1988, 1994, 1995, 1996, 1997, 1998, 2001, 2003, 2004; milestones shown: Jim Clark founded SGI on the vision of Computer Visualization • IRIS® Workstations become first integrated 3D graphics systems • Power Series™ multi-processing systems provide compute power for high-end graphics applications • SGI introduces its first 64-bit operating system • Challenge® XL media server fuels Steven Spielberg’s Shoah project to document Holocaust survivor stories • Dockside engineering analysis on Origin® 2000 and Indigo2™ helps Team New Zealand win America’s Cup • First generation NUMA System: Origin® 2000 • First systems deployed in Stephen Hawking’s COSMOS system • DOE deploys 6144p Origin 2000 to monitor and simulate nuclear stockpile • Introduced modular NUMAflex™ architecture with Origin® 3000 • Altix®, first scalable 64-bit Linux® Server • First 512p Altix cluster drives ocean research at NASA Ames (+10000p upgrade!) • NASA Ames and Altix® set world record for STREAMS benchmark] Images courtesy of Team New Zealand and the University of Cambridge SGI Proprietary Over Time, Problems Get More Complex, Data Sets Exploding [Figure labels: Bumper, hood, engine, wheels; Entire car; E-crash dummy; Organ damage] This Trend Continues Across SGI's Markets: Improve design & manufacturing; Improve patient safety; Improve oil exploration; Improve hurricane prediction. First Row Images: EAI, Lana Rushing, Engineering Animation, Inc, Volvo Car Corporation, Images courtesy of the SCI, Second Row Images: The MacNeal-Schwendler Corp, Manchester Visualization Center and University Department of Surgery, Paradigm Geophysical, the Laboratory for Atmospheres, NASA Goddard Space Flight Center. -

SGI® Altix® 4700 Servers and Supercomputers

SGI® Altix® 4700 Servers and Supercomputers Revolutionary Platform Delivers New Levels of Performance and Flexibility with a Functional Blade Design System Highlights • Unique modular blade design for superior performance density and ‘plug and solve’ flexibility • Designed for future upgrade, expansion and integration of next-generation technologies • Scalable system size for simplified programming, administration and sustained performance • Standards-based platform reduces cost while delivering uncompromised performance on Linux® Modular Blade Design for Superior Performance Density and ‘Plug and Solve’ Flexibility SGI® Altix® 4700 platform is comprised of modular blades - interchangeable compute, memory, I/O and special purpose blades for ‘plug and solve’ configuration flexibility. The innovative blade-to-NUMAlink™ architecture enables users to mix and match a variety of standardized blade choices, for perfect system right-sizing. The compact blade packaging of the Altix 4700 rack also provides excellent performance density, delivering over one teraflop per tall rack. SGI Altix 4700 is offered with two alternate compute blades; one optimized for maximum performance with top memory bandwidth and another optimized for cost-effective compute density. Designed for Future Upgrade, Expansion and Integration of Next-Generation Technologies SGI Altix 4700 supports dual-core Intel® Itanium® Series 9000 CPUs and offers easy upgrade or expansion of memory, I/O or other capabilities. This flexible growth path makes it possible for customers to adjust system configurations to meet current and changing requirements easily and cost-effectively; minimum risk for maximum productivity. Altix 4700 also integrates SGI’s Peer I/O technology which enables high-speed access to SGI’s large shared memory for all system components. -

SGI® Altix® Architecture Considerations for Linux® Device Drivers

SGI® Altix® Architecture Considerations for Linux® Device Drivers 007–4763–001 COPYRIGHT © 2005, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The software described in this document is "commercial computer software" provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, Altix, IRIX, and Origin are registered trademarks and NUMAlink is a trademark of Silicon Graphics, Inc., in the United States and/or other countries worldwide. Intel and Itanium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. Linux is a registered trademark of Linus Torvalds. Motorola is a registered trademark of Motorola, Inc. All other trademarks mentioned herein are the property of their respective owners. The information in Chapter 2, “Memory Operation Ordering on SGI Altix Systems,” was originally authored by Jesse Barnes. Record of Revision: Version 001, August 2005, Original publication. Contents: About This Guide; Related Resources; Developer Program . -

SGI® Altix® Systems Dual-Port Gigabit Ethernet Board User's Guide

SGI® Altix® Systems Dual-Port Gigabit Ethernet Board User’s Guide 007-4326-001 CONTRIBUTORS Written by Terry Schultz Illustrated by Dan Young and Chrystie Danzer Production by Karen Jacobson Engineering contributions by Jim Hunter and Steve Modica COPYRIGHT © 2004, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the US government or any contractor thereto, it is acquired as “commercial computer software” subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is Silicon Graphics, Inc., 1500 Crittenden Lane, Mountain View, CA 94043-1351. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, Altix, IRIS, IRIX, Octane, Onyx, Onyx2, and Origin are registered trademarks, and Octane2, Silicon Graphics Fuel, and Silicon Graphics Tezro are trademarks of Silicon Graphics, Inc., in the United States and/or other countries worldwide. FCC WARNING This equipment has been tested and found compliant with the limits for a Class A digital device, pursuant to Part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial environment. -

SGI® Altix® XE320 System User's Guide

SGI® Altix® XE320 System User’s Guide 007-5466-002 COPYRIGHT © 2008 SGI. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of SGI. LIMITED RIGHTS LEGEND The software described in this document is “commercial computer software” provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the USA government or any contractor thereto, it is acquired as “commercial computer software” subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is SGI, 1140 E. Arques Avenue, Sunnyvale, CA 94085. TRADEMARKS AND ATTRIBUTIONS Altix, SGI, and the SGI logo are registered trademarks and SGI ProPack is a trademark of SGI in the United States and/or other countries worldwide. InfiniBand is a registered trademark of the InfiniBand Trade Association. Intel, Itanium, and Xeon are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. -

Porting IRIX® Applications to SGI® Altix® Platforms: SGI Propack™ for Linux®

Porting IRIX® Applications to SGI® Altix® Platforms: SGI ProPack™ for Linux® 007-4674-001 CONTRIBUTORS Written by Steven Levine Illustrated by Chrystie Danzer Production by Karen Jacobson Engineering contributions by George Pirocanac COPYRIGHT © 2004, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The software described in this document is “commercial computer software” provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, Altix, IRIX, Origin, Onyx, Onyx2, and XFS are registered trademarks and CaseVision, NUMAflex, NUMAlink, OpenMP, Performance Co-Pilot, ProDev, SGI Advanced Linux, SGI ProPack, SGIconsole, and SHMEM are trademarks of Silicon Graphics, Inc., in the United States and/or other countries worldwide. SGI Advanced Linux Environment 3.0 is based on Red Hat Enterprise Linux AS 3.0, but is not sponsored by or endorsed by Red Hat, Inc. in any way. Cray is a registered trademark of Cray, Inc. FLEXlm is a registered trademark of Macrovision Corporation. Java is a registered trademark of Sun Microsystems, Inc. in the United States and/or other countries. -

High-End HPC Architectures

High-end HPC architectures Mithuna Thottethodi School of Electrical and Computer Engineering Purdue University What makes a Supercomputer a Supercomputer • Top 500 (www.top500.org) – Processor family: Intel/AMD/Power families • 96% of top 500 – Operating systems • Linux/Unix/BSD dominate – Scale • Range from 128-128K processors – Interconnect • Also varies significantly • Is this really surprising? • Better interconnect can scale to more CPUs #1 and #500 over time The Pyramid [Figure axes: Number of Systems, Cost/Performance] • 3 of top 5 and 13 of top 50 - BlueGene solutions • MPPs (60% of top 50 vs 21% of top 500) • Clusters (~75%) – with High-performance interconnect (#8, #9 on top 10) – with Gigabit Ethernet (41% of top 500, only 1 in top 50) Outline • Interconnects? – Connectivity: MPP vs. cluster – Latency, Bandwidth, Bisection • When is computer A faster than computer B? – Algorithm Scaling, Concurrency, Communication • Other issues – Storage I/O, Failures, Power • Case Studies Connectivity • How fast/slow can the processor get information on/off the network – How far is the on-ramp/the exit from the source/destination? – Can limit performance even if network is fast Massively Parallel Processing (MPP) • Network interface typically close to processor – Memory bus: • locked to specific processor architecture/bus protocol – Registers/cache: • only in research machines • Time-to-market is long – processor already available or work closely with processor designers [Diagram labels: Processor, Cache, Network Interface, Memory Bus, I/O Bridge, Network, I/O Bus, Main Memory, Disk] -

New Dual-Core SGI Altix Systems Push Leading Applications to Unprecedented Heights/2

FOR IMMEDIATE RELEASE NEW DUAL-CORE SGI ALTIX SYSTEMS PUSH LEADING APPLICATIONS TO UNPRECEDENTED HEIGHTS World-Record Performance on MD Nastran, SPECjbb2005 and More Reveals How Altix Continues to Shorten Time to Results for HPC Users [Sidebar: 1200 Crittenden Lane, Mountain View, CA 94043; Telephone 650.960.1980; sgi.com; MEDIA CONTACT Lisa Pistacchio, [email protected], 650.933.5683; SGI PR HOTLINE 650.933.7777; SGI PR FACSIMILE 650.933.0317] MOUNTAIN VIEW, Calif. (July 18, 2006) - Silicon Graphics, Inc. (OTC: SGID) today announced that its new dual-core SGI® Altix® systems are pushing key applications to levels of performance never seen before. The servers are based on the new Dual-Core Intel® Itanium® 2 processor 9000 series (formerly codenamed Montecito). SGI’s dual-core Altix® 4700 and Altix® 450 servers deliver double the performance of today’s award-winning Altix systems at the same or lower hardware cost, while drawing less power and offering more density and flexibility for demanding, data-intensive applications. In all of SGI’s major markets – including engineering, earth and life sciences, government and defense, and data management – the new servers derive blistering performance from real-world applications to shorten time to results. Engineering: MD Nastran In tests of the popular MD Nastran computational structural mechanics application, a dual-core SGI Altix system was 2.6 times faster than a dual-core HP server. The tested Altix 4700 system was based on two 1.6GHz dual-core Intel Itanium 2 processor 9000 series CPUs, and the HP server was powered by two dual-core 2.6GHz Opteron processors [1]. -

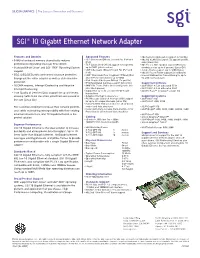

SGI 10 Gigabit Ethernet Network Adapter

SGI® 10 Gigabit Ethernet Network Adapter Advanced Features • TCP Checksum Offload (Tx and Rx; IPv4 and IPv6) • TCP Large Send Offload support for segments up to 64KB (plus headers) • UDP Checksum offload (Tx and Rx; IPv4 and IPv6) • UDP “Checksum Over Fragment” Offload (IPv4 and IPv6) for transactions up to 64KB • IPv4 Header Checksum Offload (Tx and Rx) • 64MB Rx Frame Buffer (host-configurable into prioritized queues) • Support for up to 32 concurrent PCI-X split transactions • Adaptive Interrupt Coalescence • 802.3ad Link Aggregation support (Linux OS) • 802.1Q VLAN tag support (Tx append and Rx strip) (Linux OS) • 802.1D (ex. 802.1p) QoS support (Rx frame steering across up to 8 queues) (Linux OS) • Jumbo Frame support (up to 9600 Bytes) • 802.3X Pause Frame support (in hardware) • Unicast/Multicast Rx frame filtering for up to 64 address/mask pairs • IP Differentiated Services support (Linux OS) Supported Software • SGI® IRIX® 6.5.23 with patch 5516 • SGI® IRIX® 6.5.24 with patch 5587 • SGI ProPack™ for Linux® version 3.0 Supported Systems Features and Benefits • 64MB of on-board memory dramatically reduces performance degrading link-layer flow control • Standard 64-bit Linux® and SGI® IRIX® Operating System Support • ECC (SEC/DED) data and control structure protection throughout the entire adapter as well as state machine protection • 16 DMA engines, Interrupt Coalescing and Adaptive Interrupt Coalescing • True Quality of Service (QoS) support for up to 8 levels, allowing traffic to be classified, prioritized and queued at line