Evaluation of the CCCCO Financial Literacy Pilot Interim Report

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


External Evaluation Report

EXTERNAL EVALUATION REPORT. Yuba College, 2088 North Beale Road, Marysville, CA 95901. This report represents the findings of the evaluation team that visited Yuba Community College District, October 8-11, 2018. Linda D. Rose, Ed.D., Team Chair. Contents: Team Roster; Summary of Evaluation Report; Team Commendations; Team Recommendations; Introduction; Eligibility Requirements; Checklist for Evaluating Compliance with Federal Regulations and Related Commission Policies; Public Notification of an Evaluation Team Visit and Third Party Comment; Standards and Performance with Respect to Student Achievement; Credits, Program Length, and Tuition -

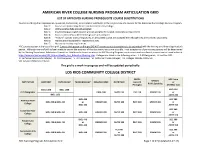

ARC Nursing Program Articulation Grid Page 1 of 27 (Updated 6-27-17) CALIFORNIA COMMUNITY COLLEGES

AMERICAN RIVER COLLEGE NURSING PROGRAM ARTICULATION GRID LIST OF APPROVED NURSING PREREQUISITE COURSE SUBSTITUTIONS Courses on this grid are approved as equivalent, comparable, or acceptable substitutes to the pre/co-requisite courses for the American River College Nursing Program. Note 1: Course acceptance may differ from Sacramento City College. Note 2: Online science labs are not accepted. Note 3: ESL/Multilingual English courses are not accepted for English Composition requirement. Note 4: Courses with comparable C-ID designators are accepted. Note 5: “Honors” courses that correspond to an articulated course are accepted even though they are not listed separately. Note 6: Highest grade accepted for repeated courses. Note 7: No course recency requirement. ARC courses appear at the top of this grid. Courses that appear on this grid DO NOT require course descriptions to be submitted with the Nursing enrollment (application) packet. Although every effort has been made to ensure the accuracy of this document, errors are possible. Final acceptance of pre-nursing courses will be determined by the Nursing Department Admissions Committee. Additional information about the ARC Nursing Program requirements and enrollment process can be found online at http://www.arc.losrios.edu/ARC_Majors/Health_And_Education/Nursing.htm. Colleges are listed in the following order: I. C-ID Designator; II. Los Rios CCD; III. California Community Colleges; IV. CSU Campuses; V. UC Campuses; VI. California Private Colleges; VII. Colleges Outside California; VIII. -

Yuba College Presidential Forums

Yuba College Presidential Forums - May 11, 2020. Students, faculty, staff and the community are invited to meet the four Yuba College President Candidates at the Community Forum on Monday, May 11, 2020 (candidate forum time listed below biography).

Dr. Sonja Lolland: Dr. Lolland has 20 years of experience in the California community system and is currently serving as the Interim Vice Chancellor of Education and Planning at Yuba Community College District in Northern California. Dr. Lolland also has three years of experience serving as a Vice President. In 2016, she joined Yuba College as the Vice President of Academics and Student -

Dr. Tawny Dotson: Dr. Tawny M. Dotson currently serves as the Interim Vice President for Instruction and Executive Director of the Foundation at Clover Park Technical College, Pierce County, Wash. CPTC serves more than 6,500 students annually, in 7 schools, 44 program areas (BAS, AAS-T, AAT, Certificate), 496 employees, four bargaining units, two campuses, two satellite locations, and a $36 million -

Dr. James Todd: Dr. James Todd has over 13 years of experience in instruction and student services in California community colleges. He has held a variety of positions, including Professor, Academic Senate President, Accreditation Liaison Officer, Chief Student Services Officer, and Chief Instructional Officer. He has also served as an elected faculty representative on -

Dr. Torence Powell: Dr. Torence Powell attributes his enduring passion for education in part to the transformative experiences he had as a first-generation community college student in South Sacramento. After completing his senior year of high school at Cosumnes River College as an advanced education student, Torence transferred to Cal State, Los Angeles where he completed a Bachelor’s degree in Social Science, with a minor in History. -

Survey of Entering Student Engagement

Survey of Entering Student Engagement - Riverside City College (2018 Administration). 2018 Frequency Distributions - Guided Pathways Module. Comparison Group: CA Colleges*. Entering Students Only [Weighted].

| Item | Variable | Response | Your College Count | Your College Percent | CA Colleges Count | CA Colleges Percent | Module Participants Count | Module Participants Percent |
|---|---|---|---|---|---|---|---|---|
| 1. Were you required to meet (in person or online) with an academic advisor before registering for classes this academic term at this college? | COLLQ879 | Yes | 199 | 35.2 | 5,104 | 58.0 | 26,959 | 67.3 |
| | | No | 366 | 64.8 | 3,698 | 42.0 | 13,119 | 32.7 |
| | | Total | 565 | 100.0 | 8,802 | 100.0 | 40,078 | 100.0 |
| 2. During this academic term at this college, how many times have you met (in person or online) with an academic advisor? | COLLQ880 | None | 295 | 51.6 | 3,075 | 34.8 | 11,354 | 28.2 |
| | | 1 time | 193 | 33.8 | 3,597 | 40.7 | 15,699 | 39.0 |
| | | 2 times | 60 | 10.6 | 1,399 | 15.8 | 7,887 | 19.6 |
| | | More than 2 times | 23 | 4.1 | 770 | 8.7 | 5,348 | 13.3 |
| | | Total | 571 | 100.0 | 8,841 | 100.0 | 40,287 | 100.0 |
| 3. Prior to registering for classes your first academic term at this college, had you decided on a job or career that you wanted to pursue? | COLLQ881 | Yes | 405 | 71.9 | 6,084 | 69.2 | 29,453 | 73.5 |
| | | I am not attending this college to pursue a job or career | 35 | 6.2 | 483 | 5.5 | 2,122 | 5.3 |
| | | No | 123 | 21.9 | 2,221 | 25.3 | 8,501 | 21.2 |
| | | Total | 563 | 100.0 | 8,788 | 100.0 | 40,076 | 100.0 |

4. -

2017-2018 Catalog: District Calendar

Yuba College: A Public Community College in its Ninetieth Year of Service. Accredited by the Western Association of Schools and Colleges, and recognized as a fully accredited two-year Community College by the University of California, the California State University, the California Community Colleges, the United States Office of Education, the Veterans Administration, and the American Association of Collegiate Registrars and Admissions Officers. Yuba College, 2088 North Beale Road, Marysville, California 95901, (530) 741-6700, yc.yccd.edu. Sutter County Center, 3301 E. Onstott Road, Yuba City, California 95993, (530) 751-5600. Beale AFB Outreach Services, Base Education Building, 17849 16th Street, Beale AFB, California 95903, (530) 788-0973. This catalog is in effect from August 2017 - July 2018. Information about course offerings, fees, refunds, and college policies published in this Catalog is subject to change without prior notice. Any updates to courses and programs can be found in the Catalog Addendum. Any updates to policy, enrollment, or fee information can be found in the online Semester Class Schedule prior to registration each term (Fall 2017 and Spring 2018). 2017-18 Catalog Table of Contents: District Map; District Calendar; President’s Welcome; District Organization; District Information -

Mini-Corps Sites

CALIFORNIA MINI-CORPS PROJECT SITES Del CSU CHICO Norte 400 W. 1st. St. Tehama 101 Siskiyou Modoc Chico, CA 95929 (530) 898-426828 YUBA COLLEGE 2088 N Beale Road, EOP & S Dept, RM 114 Marysville, CA 95901 Shasta Lassen (707) 995-4166 Trinity CSU SACRAMENTO Humboldt 6000 J Street, Alpine Hall 129 Sacramento, CA 95819 (916) 278-6729 Tehama DELTA COLLEGE Plumas 5151 Pacific Avenue Budd Bldg. 316 Mendocino Stockton, CA 95207 Glenn Butte Sierra (209) 954-5279 Nevada CSU STANISLAUS Yuba One University Circle Lake Colusa Placer Turlock, CA 95382 (209) 667-3259 Sutter MERCED COLLEGE Yolo El Dorado 3600 M Street Sonoma Napa Tri-College 3 Sacra- Alpine Solano Amador Merced, CA 95348 mento (209) 384-6046 MENDOCINO COLLEGE Calaveras 1000 Hensley Creek Rd. Marin CSU FRESNO Suite 6500 Tuolumne 5005 N. Maple Ave. M/S Ed 1 Contra San Mono Ukiah, CA 95482 Costa Joaquin Fresno, CA 93740 (707) 468-3040 (559) 278-0360 San Francisco Alameda Stanislaus Mariposa COLLEGE OF THE SEQUOIAS CSU SONOMA San Mateo 915 S. Mooney Blvd. 1801 E. Cotati Avenue Sequoias Bldg. Room 109 Salazar Hall 2030 Santa Merced Madera Visalia, CA 93277 Rohnert Park, CA 94928 Clara (559) 730-3958 (707) 664-3473 PORTERVILLE COLLEGE Santa Cruz SAN JOSE STATE 100 E. College Avenue San Inyo Porterville, CA 93257 One Washington Square Fresno Sweeney Hall 106 Benito (559) 791-2300 San Jose, CA 95192 CSU BAKERSFIELD (408) 924-3631 Tulare 9001 Stockdale Highway 3025 CABRILLO Bakersfield, CA 93311 Monterey 6500 Soquel Dr. RM 801 C Kings (661) 654-2429 Aptos, CA 95003 (831) 477-3560 San Bernardino HARTNELL COLLEGE San Luis 156 Homestead Ave. -

Black History Month State of the Region

REGION II FEBRUARY NEWSLETTER. Table of Contents: Black History Month; College Highlight; Student Resources; Pick-up lines; State of the Region; Regional Meeting. Welcome Aboard! Hello everybody, Happy Valentine's Day! I am Kelvin Chan, your new Communications Officer. I was the Solano College delegate. I cannot wait to showcase my creativity and some of my works. I am very excited to work with you all. Black History Month: Black History Month presents an opportunity to intentionally learn about the often-ignored accomplishments of Black Americans. One day, I hope that Black History Month will no longer be necessary. My hope is that Black history is integrated to be a significant and meaningful part of American history. From observations over my lifetime, mainstream media and American history classes tend to focus on “Black trauma.” We are inundated with images of slavery, suggestions that slavery was a job, descriptions of how Black people are underperforming in education, the workforce and health, and the fight for civil rights, just to name a few examples. All of these examples highlight and reinforce the Black struggle in America. For now, Black History Month is 28 days to bring awareness to the contributions to the many facets of American society including politics, education, technology, science, entertainment and athletics. It is a time to highlight African American contributions to history beyond the overly simplified versions taught in history classes. I look forward to Black History Month every year because I know that I will learn more about the Black experience in our collective history. -

Approved Prerequisite Course List

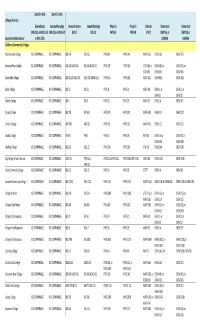

Upper Div Only Upper Div Only College/University Biomechanics Exercise Physiology Human Anatomy Human Physiology Physics A Physics B Statistics Chemistry A Chemistry B KINS 151, or KINS 151A, KINS 152, or KINS 150 BIO 22 BIO 131 PHYS 5A PHYS 5B STAT 1 CHEM 1A, or CHEM 1B, or Sacramento State Courses: or KINS 151D CHEM 6A CHEM 6B California Community Colleges Allan Hancock College NO COMPARABLE NO COMPARABLE BIO 124 BIO 125 PHYS 141 PHYS 142 MATH 123 CHEM 150 CHEM 151 American River College NO COMPARABLE NO COMPARABLE BIO 430 & BIO 431 BIO 430 & BIO 431 PHYS 350 PHYS 360 STAT 300, or CHEM 400, or CHEM 401, or STAT 305 CHEM 305 CHEM 306 Bakersfield College NO COMPARABLE NO COMPARABLE BIOL B32 & BIOL B33 BIOL B32 & BIOL B33 PHYS B2A PHYS B2B MATH B22 CHEM B1A CHEM B1B Butte College NO COMPARABLE NO COMPARABLE BIO 20 BIO 21 PHYS 21 PHYS 22 MATH 18 CHEM 1, or CHEM 2, or CHEM 51 CHEM 52 Cabrillo College NO COMPARABLE NO COMPARABLE BIO 4 BIO 5 PHYS 2A PHYS 2B MATH 12 CHEM 1A CHEM 1B Canada College NO COMPARABLE NO COMPARABLE BIO 250 BIO 260 PHYS 210 PHYS 220 MATH 200 CHEM 210 CHEM 220 Cerritos College NO COMPARABLE NO COMPARABLE A&P 200 A&P 201 PHYS 101 PHYS 102 MATH 112 CHEM 111 CHEM 112 Chabot College NO COMPARABLE NO COMPARABLE ANAT 1 PHSI 1 PHYS 3A PHYS 3B MTH 43 CHEM 1A, or CHEM 1B, or CHEM 30A CHEM 30B Chaffey College NO COMPARABLE NO COMPARABLE BIOL 20 BIOL 22 PHYS 20A PHYS 20B STAT 10 CHEM 24A CHEM 24B City College of San Francisco NO COMPARABLE NO COMPARABLE ANAT 25 PHYS 1, or PHYSC 2A & PHYC 2AL PHYSC 2B & PHYC 2BL MATH 80 CHEM 101A -

Area Map and College Listing

Member Senate and Map North South AREA A AREA B AREA C AREA D American River College Alameda, College of Allan Hancock College Barstow College Bakersfield College Berkeley City College Antelope Valley College Chaffey College Butte College Cabrillo College Canyons, College of the Coastline College Cerro Coso College Cañada College Cerritos College Copper Mountain College Clovis College Chabot College Citrus College Crafton Hills College Columbia College Contra Costa College Compton College Cuyamaca College Cosumnes River College Contra Costa CC District Cuesta College Cypress College Feather River College De Anza College East Los Angeles College Desert, College of the Folsom Lake College Diablo Valley College El Camino College Fullerton College Glendale College Fresno City College Evergreen Valley College Golden West College Los Angeles City College Lake Tahoe College Foothill College Grossmont College Los Angeles CC District Lassen College Foothill - De Anza CC District Imperial Valley College Los Angeles Harbor College Los Rios CC District Gavilan College Irvine Valley College Los Angeles Mission College Madera Community College Hartnell College Long Beach City College Los Angeles Pierce College Merced College Laney College MiraCosta College Los Angeles Southwest College Modesto Junior College Las Positas College Moreno Valley College Los Angeles Trade-Technical Porterville College Los Medanos College Mt. San Jacinto College College Redwoods, College of the Marin, College of Norco College Los Angeles Valley College Reedley College Mendocino -

AB 86 Consortial

2014 Community College Directory 1 Allan Hancock Joint 13 Copper Mountain 24 Lake Tahoe 44 Redwoods 2 Antelope Valley 14 Desert 25 Lassen 45 Rio Hondo 3 Barstow 15 El Camino 26 Long Beach 46 Riverside 4 Butte - Glenn 16 Feather River 27 Los Angeles 47 San Bernardino 5 Cabrillo 17 Foothill - DeAnza East LA College Crafton Hills College 6 Cerritos DeAnza College LA City College San Bernardino Vly College 7 Chabot - Las Positas Foothill College LA Harbor College 48 San Diego Chabot College 18 Gavilan LA Mission College San Diego City College Las Positas College 19 Glendale LA Pierce College San Diego Mesa College 8 Chaffey 20 Grossmont - Cuyamaca LA Southwest College San Diego Miramar College 9 Citrus Cuyamaca College LA Trade Technical College 49 San Francisco 10 Coast Grossmont College LA Valley College City College of S.F. Coastline CC 21 Hartnell West LA College 50 San Joaquin Delta Golden West College 22 Imperial 28 Los Rios 51 San Jose/Evergreen Orange Coast College 23 Kern American River College Evergreen Valley College 11 Compton Bakersfield College Cosumnes River College San Jose City College 12 Contra Costa Cerro Coso College Folsom Lake College 52 San Luis Obispo County Contra Costa College Porterville College Sacramento City College Cuesta College Diablo Valley College 29 Marin 53 San Mateo Los Medanos College 30 Mendocino - Lake Canada College 31 Merced College of San Mateo 32 MiraCosta Skyline College 60 33 Monterey Peninsula 54 Santa Barbara 34 Mt. San Antonio 55 Santa Clarita 35 Mt. San Jacinto College of the Canyons -

Area Map and List

Member Senate and Map North South AREA A AREA B AREA C AREA D American River College Alameda, College of Allan Hancock College Barstow College Bakersfield College Berkeley City College Antelope Valley College Chaffey College Butte College Cabrillo College Canyons, College of the Coastline College Cerro Coso College Cañada College Cerritos College Copper Mountain College Clovis College Chabot College Citrus College Crafton Hills College El Camino College Compton Columbia College Contra Costa College Cuyamaca College Center Cosumnes River College Contra Costa CC District Cuesta College Cypress College Feather River College De Anza College East Los Angeles College Desert, College of the Folsom Lake College Diablo Valley College El Camino College Fullerton College Fresno City College Evergreen Valley College Glendale College Golden West College Lake Tahoe College Foothill College Los Angeles City College Grossmont College Lassen College Foothill - De Anza CC District Los Angeles CC District Imperial Valley College Los Rios CC District Gavilan College Los Angeles Harbor College Irvine Valley College Merced College Hartnell College Los Angeles Mission College Long Beach City College Modesto Junior College Laney College Los Angeles Pierce College MiraCosta College Porterville College Las Positas College Los Angeles Southwest College Moreno Valley College Los Angeles Trade-Technical Redwoods, College of the Los Medanos College Mt. San Jacinto College College Reedley College Marin, College of Los Angeles Valley College Norco College Sacramento -

Institutional Self-Evaluation Report of Educational Quality

2018 Institutional Self-Evaluation Report of Educational Quality and Institutional Effectiveness in Support of Reaffirmation of Accreditation. Yuba College Institutional Self-Evaluation Report in Support of Reaffirmation of Accreditation. Submitted by: Yuba College, 2088 North Beale Rd, Marysville, CA 95901. Submitted to: Accrediting Commission for Community and Junior Colleges, Western Association of Schools and Colleges. Submitted August 2018. INSTITUTIONAL SELF-EVALUATION REPORT – CERTIFICATION. To: Accrediting Commission for Community and Junior Colleges, Western Association of Schools and Colleges. From: Dr. G. H. Javaheripour, President, Yuba College, 2088 North Beale Rd, Marysville, CA 95901. This Institutional Self-Evaluation Report is submitted to the Accrediting Commission for Community and Junior Colleges for assisting in the determination of the institution’s accreditation status. I certify there was effective participation by the campus community and I believe the Institutional Self-Evaluation Report accurately reflects the nature and substance of this institution. Signatures: Dr. G. H. Javaheripour, President; Michael Pasquale, Governing Board President; Dr. Sonja Lolland, Accreditation Liaison Officer