Deliverable 4.2

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Show Crypto Ace Redundancy Through Show Cts Sxp

show crypto ace redundancy through show cts sxp • show crypto ace redundancy, on page 4 • show crypto ca certificates, on page 6 • show crypto ca crls, on page 9 • show crypto ca roots, on page 10 • show crypto ca timers, on page 11 • show crypto ca trustpoints, on page 12 • show crypto call admission statistics, on page 13 • show crypto ctcp, on page 15 • show crypto datapath, on page 17 • show crypto debug-condition, on page 20 • show crypto dynamic-map, on page 23 • show crypto eli, on page 24 • show crypto eng qos, on page 26 • show crypto engine, on page 27 • show crypto engine accelerator sa-database, on page 31 • show crypto engine accelerator ring, on page 32 • show crypto engine accelerator logs, on page 34 • show crypto engine accelerator statistic, on page 36 • show crypto gdoi, on page 52 • show crypto ha, on page 81 • show crypto identity, on page 82 • show crypto ikev2 cluster, on page 83 • show crypto ikev2 diagnose error, on page 85 • show crypto ikev2 policy, on page 86 • show crypto ikev2 profile, on page 88 • show crypto ikev2 proposal, on page 90 • show crypto ikev2 sa, on page 92 • show crypto ikev2 session, on page 95 • show crypto ikev2 stats, on page 98 • show crypto ipsec client ezvpn, on page 105 • show crypto ipsec transform-set default, on page 108 • show crypto ipsec sa, on page 110 • show crypto ipsec security-association idle-time, on page 120 • show crypto ipsec security-association lifetime, on page 121 • show -

Where to Download Quake 1 Full Version Pc Quake Windows 10

where to download quake 1 full version pc Quake windows 10. Quake II is the next major follow up after Quake I. Shortly after landing on an alien surface . Similar choice. Programs for query ″quake windows 10″ XenArmor All-In-One Key Finder Pro. All-In-One Key Finder Pro is the enterprise software to recover license keys of Windows, Office & 1300+ popular softwares/games. of Windows from XP to 10 . NFS, AOE, Quake , Star Wars . starting from Windows XP to Windows 10. Windows Product Key Finder. Windows Product Key Finder is the all-in-one software to recover license keys of Windows & 500+ popular softwares/games. of Empires, Quake , The . or periodically Windows Product . from Windows XP to Windows 10. Quake Live Mozilla Plugin. QUAKE LIVE delivers the excitement and energy of first-person multiplayer action to a broader audience than ever before. QUAKE LIVE delivers . "web game," QUAKE LIVE offers . QUAKE 2007. Quake Virtual DJ is ground breaking in concept (hence the name “Quake”). Quake Virtual DJ . the name “ Quake ”). It is . environment. With Quake , the days . Quake Mate Seeker. QMS is a simple utility that allows you to find players on Quake 3 servers. players on Quake 3 servers. Unlike . and customizable Quake 3 server list . Quake Video Maker. Here is Quake Video Maker. A simple tool to create AVI files with both video and audio. Here is Quake Video Maker. A . Quake2xp. Quake 2 is a based engine with glsl per pixel lighting effects and more. Quake 2 is a based . QuakeMap. QuakeMap is a powerful GPS/mapping program for your Windows computer. -

Guidelines on Firewalls and Firewall Policy

NIST Special Publication 800-41 Revision 1. Guidelines on Firewalls and Firewall Policy: Recommendations of the National Institute of Standards and Technology. Karen Scarfone, Paul Hoffman. COMPUTER SECURITY. Computer Security Division, Information Technology Laboratory, National Institute of Standards and Technology, Gaithersburg, MD 20899-8930. September 2009. U.S. Department of Commerce: Gary Locke, Secretary. National Institute of Standards and Technology: Patrick D. Gallagher, Deputy Director. Reports on Computer Systems Technology. The Information Technology Laboratory (ITL) at the National Institute of Standards and Technology (NIST) promotes the U.S. economy and public welfare by providing technical leadership for the nation’s measurement and standards infrastructure. ITL develops tests, test methods, reference data, proof of concept implementations, and technical analysis to advance the development and productive use of information technology. ITL’s responsibilities include the development of technical, physical, administrative, and management standards and guidelines for the cost-effective security and privacy of sensitive unclassified information in Federal computer systems. This Special Publication 800-series reports on ITL’s research, guidance, and outreach efforts in computer security and its collaborative activities with industry, government, and academic organizations. National Institute of Standards and Technology Special Publication 800-41 Revision 1, Natl. Inst. Stand. Technol. Spec. Publ. 800-41 rev1, 48 pages (Sep. 2009). Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. -

Automated Malware Analysis Report for ORDER 5211009876.Exe

ID: 386799 Sample Name: ORDER 5211009876.exe Cookbook: default.jbs Time: 20:09:05 Date: 14/04/2021 Version: 31.0.0 Emerald Table of Contents Table of Contents 2 Analysis Report ORDER 5211009876.exe 4 Overview 4 General Information 4 Detection 4 Signatures 4 Classification 4 Startup 4 Malware Configuration 4 Threatname: Agenttesla 4 Yara Overview 4 Memory Dumps 4 Unpacked PEs 5 Sigma Overview 5 System Summary: 5 Signature Overview 5 AV Detection: 5 System Summary: 5 Boot Survival: 5 Malware Analysis System Evasion: 6 HIPS / PFW / Operating System Protection Evasion: 6 Stealing of Sensitive Information: 6 Remote Access Functionality: 6 Mitre Att&ck Matrix 6 Behavior Graph 7 Screenshots 7 Thumbnails 7 Antivirus, Machine Learning and Genetic Malware Detection 8 Initial Sample 8 Dropped Files 8 Unpacked PE Files 8 Domains 8 URLs 9 Domains and IPs 10 Contacted Domains 10 URLs from Memory and Binaries 10 Contacted IPs 13 Public 14 General Information 14 Simulations 15 Behavior and APIs 15 Joe Sandbox View / Context 15 IPs 15 Domains 16 ASN 16 JA3 Fingerprints 16 Dropped Files 17 Created / dropped Files 17 Static File Info 18 General 18 File Icon 18 Static PE Info 18 General 19 Entrypoint Preview 19 Data Directories 20 Sections 21 Copyright Joe Security LLC 2021 Page 2 of 30 Resources 21 Imports 21 Version Infos 21 Network Behavior 21 Network Port Distribution 22 TCP Packets 22 UDP Packets 23 DNS Queries 23 DNS Answers 23 SMTP Packets 23 Code Manipulations 24 Statistics 24 Behavior 24 System Behavior 24 Analysis Process: ORDER 5211009876.exe PID: -

Multiplayer Game Programming: Architecting Networked Games

Multiplayer Game Programming. The Addison-Wesley Game Design and Development Series. Visit informit.com/series/gamedesign for a complete list of available publications. Essential References for Game Designers and Developers. These practical guides, written by distinguished professors and industry gurus, cover basic tenets of game design and development using a straightforward, common-sense approach. The books encourage readers to try things on their own and think for themselves, making it easier for anyone to learn how to design and develop digital games for both computers and mobile devices. Make sure to connect with us! informit.com/socialconnect Multiplayer Game Programming: Architecting Networked Games. Joshua Glazer, Sanjay Madhav. New York • Boston • Indianapolis • San Francisco • Toronto • Montreal • London • Munich • Paris • Madrid • Cape Town • Sydney • Tokyo • Singapore • Mexico City. Editor-in-Chief: Mark Taub. Acquisitions Editor: Laura Lewin. Development Editor: Michael Thurston. Managing Editor: Kristy Hart. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein.
For information about buying this title in bulk quantities, or for special sales opportunities (which may include electronic versions; custom cover designs; and content particular to your business, training goals, marketing focus, or branding interests), please contact our corporate sales department at [email protected] or (800) 382-3419. Project Editor -

Quake 2 Xp Mod Download Quake II - Quakeiixp V.1.26.9.1 - Game Mod - Download

quake 2 xp mod download Quake II - QuakeIIxp v.1.26.9.1 - Game mod - Download. The file QuakeIIxp v.1.26.9.1 is a modification for Quake II, an action game. Download for free. File type: Game mod. File size: 2241.4 MB. Last update: Sunday, July 5, 2020. Report problems with download to [email protected] QuakeIIxp is a mod for Quake II, created by Quake2xp Team. Description: QuakeIIxp is a multi-platform (Windows, Linux and FreeBSD (experimental)) graphics port of the game Quake II developed by id Software. Completely updated rendering takes full advantage of the latest graphics cards to get the perfect picture, preserving the original style of the game. Real-time per-pixel lighting and shadowing (like Doom 3) with high-quality parallax mapping (relief mapping technology). Oren-Nayar Diffuse with GGX Specular BRDF, Phong, Lambert and Sub-Surface Scattering lighting models. Cubemaps and caustic light filters. Editable GLSL shaders. 2D lighting for HUD digits and menu tags. In-game light editor. Advanced decal and particle system (infinity decals and soft particles). Reflection (screen space local reflection) and refraction surfaces. Radiosity normal mapping with high resolution lightmaps. Postprocessing effects: FXAA 3.11, Bloom, Depth of Field, Radial Blur, Thermal Vision, Film Grain, Brightness/Contrast/Saturation, Motion Blur, SSAO, Scanline and median cinematic filters. Raw mouse input (Windows only). OpenAL 3D audio engine with EFX (high-quality environmental audio sound effects on both Windows and Linux). Playing OGG and WAV music tracks from the HDD. Native support for the Reckoning mission pack and 3zb2 bot. All other addons and mods supported in compatibility mode. -

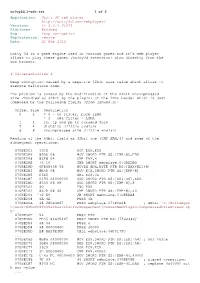

Application: Unity 3D Web Player

Application: Unity 3D web player http://unity3d.com/webplayer/
Versions: <= 3.2.0.61061
Platforms: Windows
Bug: heap corruption
Exploitation: remote
Date: 21 Feb 2012

Unity 3d is a game engine used in various games, and its web player allows these games (unity3d extension) to be played directly from the web browser.

# Vulnerabilities #

Heap corruption caused by a negative 32bit size value, which allows execution of malicious code. The problem is caused by modifying the 64bit uncompressed size (handled as 32bit by the plugin) in the lzma header, which is composed of just the following fields (from lzma86.h):

    Offset Size Description
    0      1    = 0 - no filter, pure LZMA
                = 1 - x86 filter + LZMA
    1      1    lc, lp and pb in encoded form
    2      4    dictSize (little endian)
    6      8    uncompressed size (little endian)

Reading of the 64bit field as a 32bit one (CMP EAX,4) and some of the subsequent operations:

    070BEDA3 33C0 XOR EAX,EAX
    070BEDA5 895D 08 MOV DWORD PTR SS:[EBP+8],EBX
    070BEDA8 83F8 04 CMP EAX,4
    070BEDAB 73 10 JNB SHORT webplaye.070BEDBD
    070BEDAD 0FB65438 05 MOVZX EDX,BYTE PTR DS:[EAX+EDI+5]
    070BEDB2 8B4D 08 MOV ECX,DWORD PTR SS:[EBP+8]
    070BEDB5 D3E2 SHL EDX,CL
    070BEDB7 0196 A4000000 ADD DWORD PTR DS:[ESI+A4],EDX
    070BEDBD 8345 08 08 ADD DWORD PTR SS:[EBP+8],8
    070BEDC1 40 INC EAX
    070BEDC2 837D 08 40 CMP DWORD PTR SS:[EBP+8],40
    070BEDC6 ^72 E0 JB SHORT webplaye.070BEDA8
    070BEDC8 6A 4A PUSH 4A
    070BEDCA 68 280A4B07 PUSH webplaye.074B0A28 ; ASCII "C:/BuildAgent/work/b0bcff80449a48aa/PlatformDependent/CommonWebPlugin/CompressedFileStream.cpp"
    070BEDCF 53 PUSH EBX
    070BEDD0 FF35 84635407 PUSH DWORD PTR DS:[7546384]
    070BEDD6 6A 04 PUSH 4
    070BEDD8 68 00000400 PUSH 40000
    070BEDDD E8 BA29E4FF CALL webplaye.06F0179C
    .. -
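
The header layout quoted in the advisory excerpt above can be illustrated with a short Python sketch. It is not taken from the advisory or from the plugin: the function names `parse_lzma86_header`, `as_signed_32`, and `size_is_safe` are hypothetical, and the size limit passed to `size_is_safe` is an arbitrary assumption. The sketch only shows how a 64-bit uncompressed size, once truncated to 32 bits and treated as signed, can become negative.

```python
import struct

# Field layout as quoted from lzma86.h: 1 + 1 + 4 + 8 = 14 bytes, little endian.
LZMA86_HEADER = struct.Struct("<BBIQ")


def parse_lzma86_header(data: bytes) -> dict:
    """Decode the 14-byte header into its fields (hypothetical helper)."""
    if len(data) < LZMA86_HEADER.size:
        raise ValueError("need at least 14 header bytes")
    filter_id, props, dict_size, unpacked_size = LZMA86_HEADER.unpack_from(data)
    # Standard LZMA packing of the properties byte: props = (pb * 5 + lp) * 9 + lc
    return {
        "filter": filter_id,
        "lc": props % 9,
        "lp": (props // 9) % 5,
        "pb": props // 45,
        "dict_size": dict_size,
        "unpacked_size": unpacked_size,
    }


def as_signed_32(value: int) -> int:
    """Keep only the low 32 bits and reinterpret them as a signed integer."""
    low = value & 0xFFFFFFFF
    return low - 0x1_0000_0000 if low & 0x8000_0000 else low


def size_is_safe(unpacked_size: int, limit: int) -> bool:
    """Validate the full 64-bit value against a caller-chosen limit (an assumption)."""
    return 0 <= unpacked_size <= limit


if __name__ == "__main__":
    # Crafted header: x86 filter, common props byte 0x5D, 64 KiB dictionary,
    # and an uncompressed size whose low 32 bits have the sign bit set.
    header = LZMA86_HEADER.pack(1, 0x5D, 1 << 16, 0xFFFFFFF0)
    fields = parse_lzma86_header(header)
    print(fields)
    print("as signed 32-bit:", as_signed_32(fields["unpacked_size"]))
    print("passes size check:", size_is_safe(fields["unpacked_size"], 64 << 20))
```

Checking the full 64-bit field against an explicit limit before any allocation, as `size_is_safe` does, is one way to reject such a header; whether the vendor's fix took that form is not stated in the excerpt.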

Winroute Lite 4.2

User's Guide WinRoute Lite 4.2, Kerio Technologies Inc. Contents. Before Installing WinRoute: System Requirements • Choosing the right computer for sharing • Configure TCP/IP • Multi-operating systems environment (Linux, AS400, Apple). Connecting the network to the Internet: WinRoute Lite installation and maintenance • Basic Configuration • Dial-up or ISDN connection • About DHCP server • About DNS proxy • About Port Mapping. Inside WinRoute Lite: The Architecture • How NAT works • Logs and packet analysis -

List of TCP and UDP Port Numbers

List of TCP and UDP port numbers. From Wikipedia, the free encyclopedia. In computer networking, the protocols of the Transport Layer of the Internet Protocol Suite, most notably the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP), but also other protocols, use a numerical identifier for the data structures of the endpoints for host-to-host communications. Such an endpoint is known as a port and the identifier is the port number. The Internet Assigned Numbers Authority (IANA) is responsible for maintaining the official assignments of port numbers for specific uses.[1]

Contents: 1 Table legend • 2 Well-known ports: 0–1023 • 3 Registered ports: 1024–49151 • 4 Dynamic, private or ephemeral ports: 49152–65535 • 5 See also • 6 References • 7 External links

Table legend (color coding of table entries): Official: port/application combination is registered with IANA. Unofficial: port/application combination is not registered with IANA. Conflict: port is in use for multiple applications.

Well-known ports: 0–1023. According to IANA, "The Well Known Ports are assigned by the IANA and on most systems can only be used by system (or root) processes or by programs executed by privileged users. Ports are used in the TCP [RFC793] to name the ends of logical connections which carry long term conversations. For the purpose of providing services to unknown callers, a service contact port is defined. This list specifies the port used by the server process as its contact port. The contact port is sometimes called the well-known port."[1]

| Port | TCP | UDP | Description | Status |
|------|-----|-----|-------------|--------|
| 0 | | UDP | Reserved | Official |
| 0 | TCP | UDP | When running a server on port 0, the system will run it on a random port from 1-65535 or 1024-65535 depending on the privileges of the user. | Official |
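
The port ranges and the port 0 behavior described in the excerpt above can be tried out with a small Python sketch. The helper names `classify_port` and `bind_to_os_chosen_port` are hypothetical, and the range the operating system actually draws from when asked for port 0 is platform-dependent, so the sketch only checks that some valid port comes back.

```python
import socket


def classify_port(port: int) -> str:
    """Map a port number onto the three ranges named in the excerpt."""
    if not 0 <= port <= 65535:
        raise ValueError(f"not a valid port number: {port}")
    if port <= 1023:
        return "well-known"
    if port <= 49151:
        return "registered"
    return "dynamic/private/ephemeral"


def bind_to_os_chosen_port() -> int:
    """Bind a TCP socket to port 0 and return the port the OS picked instead."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("127.0.0.1", 0))  # port 0 asks the OS to choose a free port
        return sock.getsockname()[1]


if __name__ == "__main__":
    for port in (22, 8080, 50000):
        print(port, "->", classify_port(port))
    chosen = bind_to_os_chosen_port()
    print("OS assigned port", chosen, "->", classify_port(chosen))
```

Binding to port 0 and then reading the result with `getsockname()` is a common way for a test server to obtain a free port without hard-coding one.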

Quake Shareware Windows 10 Download Quake on Windows 10 in High Resolution

quake shareware windows 10 download Quake on Windows 10 in High Resolution. Quake: another all time classic, although this DOS game looks like it was never really finished properly (which is true). Poorly designed weaponry. No gun-changing animation. Cartoonish characters. But it was an instant classic FPS anyway, with true 3D level design and polygonal characters, as well as TCP/IP network support. With the DarkPlaces quake engine you still can play Quake on a computer with a modern operating system! The DarkPlaces Quake engine is the best source port we've encountered so far. Other Quake source ports we've tested: ezQuake. So, what do you need to get Quake running with DarkPlaces on Windows 10, Windows 8 and Windows 7? Installation of Quake. If you have an original Quake CD with a DOS version, install the game with DOSBox. Instructions on how to install a game from CD in DOSBox are here. The game files are in the ID1 folder of the Quake installation. If you have an original Quake CD with a Windows version, you don't have to install the game. The game files are in the ID1 folder on the CD. You don't have the original Quake game? Download Quake (including Mission Pack 1 and 2)! Installation of the DarkPlaces Quake engine. the latest stable/official release of the DarkPlaces Quake engine files: Windows 32 bits: DarkPlaces engine Windows OpenGL build 20140513 Windows 64 bits: DarkPlaces engine Windows 64 OpenGL build 20140513. Quake CD soundtrack. The music of Quake on the original installation CD consists of CD audio tracks (starting with track 2), which are not copied to your hard disk when you install the game. -

1 SEC-310 2979 05 2001 C1

SEC-310 2979_05_2001_c1 © 2001, Cisco Systems, Inc. All rights reserved.

Troubleshooting the Implementation of IPSec VPNs. Session SEC-310.

Virtual Private Network (VPN) Defined: “A Virtual Private Network carries private traffic over public network.”

The Complete VPN: [diagram of enterprise, partner, and remote sites connected through service providers]

What Is IPSec? • IPSec stands for IP Security • Standard for privacy, integrity and authenticity for networked commerce • Implemented transparently in the network infrastructure • End-to-end security solution including routers, firewalls, PCs, and servers

Agenda • Router IPSec VPNs • PIX IPSec VPNs • Cisco VPN 3000 IPSec VPNs • CA Server Issues • NAT with IPSec • Firewalling and IPSec • MTU Issues • GRE over IPSec • Loss of Connectivity to IPSec Peers

Layout: two routers, 172.21.114.123 and 172.21.114.68, exchanging encrypted traffic across the Internet.

Normal Router Configurations

    Router#
    !
    crypto isakmp policy 10
     authentication pre-share
    crypto isakmp key gwock address 172.21.114.68
    !
    crypto IPSec transform-set t1 esp-des esp-md5-hmac
    !
    crypto map multi-peer 10 IPSec-isakmp
     set peer 172.21.114.68
     set transform-set t1
     match address 151
    -

This Is the Original Ubuntuguide. You Are Free to Copy This Guide but Not to Sell It Or Any Derivative of It. Copyright Of

* This is the original Ubuntuguide. You are free to copy this guide but not to sell it or any derivative of it. Copyright of the names Ubuntuguide and Ubuntu Guide reside solely with this site. This guide is neither sold nor distributed in any other medium. Beware of copies that are for sale or are similarly named; they are neither endorsed nor sanctioned by this guide. Ubuntuguide is not associated with Canonical Ltd nor with any commercial enterprise.
* Ubuntu allows a user to accomplish tasks from either a menu-driven Graphical User Interface (GUI) or from a text-based command-line interface (CLI). In Ubuntu, the command-line-interface terminal is called Terminal, which is started: Applications -> Accessories -> Terminal. Text inside the grey dotted box like this should be put into the command-line Terminal.
* Many changes to the operating system can only be done by a User with Administrative privileges. 'sudo' elevates a User's privileges to the Administrator level temporarily (e.g. when installing programs or making changes to the system). Example: sudo bash
* 'gksudo' should be used instead of 'sudo' when opening a Graphical Application through the "Run Command" dialog box. Example: gksudo gedit /etc/apt/sources.list
* The "man" command can be used to find the help manual for a command. For example, "man sudo" will display the manual page for the "sudo" command: man sudo
* While "apt-get" and "aptitude" are fast ways of installing programs/packages, you can also use the Synaptic Package Manager, a GUI method for installing programs/packages. Most (but not all) programs/packages available with apt-get install will also be available from the Synaptic Package Manager.