3rd Dimension, Veritas et Visus, May 2010, Vol 5 No 5/6

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Program BMIM 2012

PROGRAM 2012 (May 24, 2012 – Pakhuis de Zwijger, Amsterdam)

- Music and Audio Branding for Games
- Case Study: Stars of Football
- Composing for Advertising, Film and TV: Getting Started
- Listening to Chanel No.5: How Music Maps the Sense of Smell
- BMIM Talent Award: Van God Los Score Competition (Kick-Off Session)
- Composing for Kids and Teens
- The Challenges of Composing for Games
- BMIM Talent Award: Van God Los Score Competition (Fine Tuning Session)
- DIY – Making Money with Your Music Copyright
- Music in Advertising – Joined at the Hip?
- From Band to Screen
- BMIM New Talent Award: Van God Los -

Uila Supported Apps

Uila Supported Applications and Protocols, updated Oct 2020

| Application/Protocol Name | Full Description |
| --- | --- |
| 01net.com | 01net website, a French high-tech news site. |
| 050 plus | 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing. |
| 0zz0.com | 0zz0 is an online solution to store, send and share files. |
| 10050.net | China Railcom group web portal. |
| 10086.cn | This protocol plug-in classifies the http traffic to the host 10086.cn. It also classifies the ssl traffic to the Common Name 10086.cn. |
| 104.com | Web site dedicated to job research. |
| 1111.com.tw | Website dedicated to job research in Taiwan. |
| 114la.com | Chinese web portal operated by YLMF Computer Technology Co. |
| 115.com | Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co. |
| 118114.cn | Chinese booking and reservation portal. |
| 11st.co.kr | Korean shopping website 11st. It is operated by SK Planet Co. |
| 1337x.org | Bittorrent tracker search engine. |
| 139mail | 139mail is a Chinese webmail powered by China Mobile. |
| 15min.lt | Lithuanian news portal. |
| 163.com | Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China. |
| 17173.com | Website distributing Chinese games. |
| 17u.com | Chinese online travel booking website. |
| 20minutes | 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites. |
| 24h.com.vn | Vietnamese news portal. |
| 24ora.com | Aruban news portal. |
| 24sata.hr | Croatian news portal. |
| 24SevenOffice | 24SevenOffice is a web-based Enterprise resource planning (ERP) system. |
| 24ur.com | Slovenian news portal. |
| 2ch.net | Japanese adult videos web site. |
| 2Shared | 2shared is an online space for sharing and storage. |

-

PhysX as a Middleware for Dynamic Simulations in the Container Loading Problem

Proceedings of the 2018 Winter Simulation Conference. M. Rabe, A.A. Juan, N. Mustafee, A. Skoogh, S. Jain, and B. Johansson, eds.

PHYSX AS A MIDDLEWARE FOR DYNAMIC SIMULATIONS IN THE CONTAINER LOADING PROBLEM

Juan C. Martínez-Franco, Department of Mechanical Engineering, Universidad de Los Andes, Carrera 1 Este No. 19A – 40, Bogotá, 11711, COLOMBIA

David Álvarez-Martínez, Department of Industrial Engineering, Universidad de Los Andes, Carrera 1 Este No. 19A – 40, Bogotá, 11711, COLOMBIA

ABSTRACT

The Container Loading Problem (CLP) is an optimization challenge where the constraint of dynamic stability plays a significant role. The evaluation of dynamic stability requires the use of dynamic simulations that are carried out either with dedicated simulation software that produces very small errors at the expense of simulation speed, or real-time physics engines that complete simulations in a very short time at the cost of repeatability. One such engine, PhysX, is evaluated to determine the feasibility of its integration with the open source application PackageCargo. A simulation tool based on PhysX is proposed and compared with the dynamic simulation environment of Autodesk Inventor to verify its reliability. The simulation tool presents a dynamically accurate representation of the physical phenomena experienced by cargo during transportation, making it a viable option for the evaluation of dynamic stability in solutions to the CLP.

1 INTRODUCTION

The Container Loading Problem (CLP) consists of the geometric arrangement of small rectangular items (cargo) into a larger rectangular space (container), but it is not a simple geometry problem. The arrangements of cargo must maximize volume utilization while complying with certain constraints such as cargo fragility, delivery order, etc. -

The Style of Video Games Graphics: Analyzing the Functions of Visual Styles in Storytelling and Gameplay in Video Games

The Style of Video Games Graphics: Analyzing the Functions of Visual Styles in Storytelling and Gameplay in Video Games

by Yin Wu, B.A. (New Media Arts, SIAT), Simon Fraser University, 2008

Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts in the School of Interactive Arts and Technology, Faculty of Communication, Art and Technology

Yin Wu 2012, SIMON FRASER UNIVERSITY, Fall 2012

Approval

Name: Yin Wu

Degree: Master of Arts

Title of Thesis: The Style of Video Games Graphics: Analyzing the Functions of Visual Styles in Storytelling and Gameplay in Video Games

Examining Committee:

- Chair: Carman Neustaedter, Assistant Professor, School of Interactive Arts & Technology, Simon Fraser University
- Jim Bizzocchi, Senior Supervisor, Associate Professor, School of Interactive Arts & Technology, Simon Fraser University
- Steve DiPaola, Supervisor, Associate Professor, School of Interactive Arts & Technology, Simon Fraser University
- Thecla Schiphorst, External Examiner, Associate Professor, School of Interactive Arts & Technology, Simon Fraser University

Date Defended/Approved: October 09, 2012

Partial Copyright Licence

Abstract

Every video game has a distinct visual style; however, the functions of visual style in game graphics have rarely been investigated in terms of medium-specific design decisions. This thesis suggests that visual style in a video game shapes players’ gaming experience in terms of three salient dimensions: narrative pleasure, ludic challenge, and aesthetic reward. The thesis first develops a context based on the fields of aesthetics, art history, visual psychology, narrative studies and new media studies. Next it builds an analytical framework with two visual style categories containing six separate modes. This research uses examples drawn from 29 games to illustrate and to instantiate the categories and the modes. -

Developer Deck Draft

WiiWare Business Overview

Dan Adelman, Business Development, Nintendo of America

What is WiiWare All About?

- Developer freedom
- Lowering barriers
- Opportunity for large and small developers

Business Model Recap

- 65:35 (Content Provider:Nintendo) revenue share from unit 1 for titles that cross the Performance Threshold
- Developer provides suggested price; Nintendo sets final price

| | The Americas | Europe + Oceania |
| --- | --- | --- |
| Performance Threshold (>16MB) | 6,000 units | 3,000 units |
| Performance Threshold (<16MB) | 4,000 units | 2,000 units |
| Royalties paid by | NOA | NOE |

Payments and Reporting

- Payments made 30 days after the close of each calendar quarter
- Unit sales status reports available online
  - Ability to break down by time frame and country/region
  - Link to your status report will be provided when your title is released

Ground Rules

- Game size must be < 40MB
  - < 16MB strongly encouraged!
  - The manual is viewable online and does not count against this limit
- No hardware emulation
- No advergames, product placement, or collection of user data
- Must be a complete game
  - The game cannot require the purchase of add-on content or a separate title

Minimum Localization Requirements

| | The Americas | Europe + Oceania |
| --- | --- | --- |
| In-game language | English* | English* |
| Online manual | English, French, Spanish | EFIGS + Dutch |
| Wii Shop Channel catalog info | English, French, Spanish | EFIGS + Dutch |

\* Support for additional languages is strongly encouraged!

Some Issues to Consider...

Taxes!

- Royalties paid by NOA/NOE to a foreign company may be subject to a source withholding tax. In the US, -

Xbox 360 Total Size (GB) 0 # of Items 0

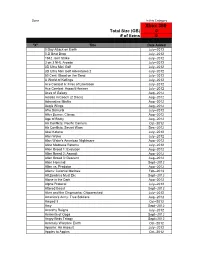

In this Category: Xbox 360. Total Size (GB): 0. # of items: 0.

| Done ("X") | Title | Date Added |
| --- | --- | --- |
| | 0 Day Attack on Earth | July 2012 |
| | 0-D Beat Drop | July 2012 |
| | 1942 Joint Strike | July 2012 |
| | 3 on 3 NHL Arcade | July 2012 |
| | 3D Ultra Mini Golf | July 2012 |
| | 3D Ultra Mini Golf Adventures 2 | July 2012 |
| | 50 Cent: Blood on the Sand | July 2012 |
| | A World of Keflings | July 2012 |
| | Ace Combat 6: Fires of Liberation | July 2012 |
| | Ace Combat: Assault Horizon | July 2012 |
| | Aces of Galaxy | Aug 2012 |
| | Adidas miCoach (2 Discs) | Aug 2012 |
| | Adrenaline Misfits | Aug 2012 |
| | Aegis Wings | Aug 2012 |
| | Afro Samurai | July 2012 |
| | After Burner: Climax | Aug 2012 |
| | Age of Booty | Aug 2012 |
| | Air Conflicts: Pacific Carriers | Oct 2012 |
| | Air Conflicts: Secret Wars | Dec 2012 |
| | Akai Katana | July 2012 |
| | Alan Wake | July 2012 |
| | Alan Wake's American Nightmare | Aug 2012 |
| | Alice Madness Returns | July 2012 |
| | Alien Breed 1: Evolution | Aug 2012 |
| | Alien Breed 2: Assault | Aug 2012 |
| | Alien Breed 3: Descent | Aug 2012 |
| | Alien Hominid | Sept 2012 |
| | Alien vs. Predator | Aug 2012 |
| | Aliens: Colonial Marines | Feb 2013 |
| | All Zombies Must Die | Sept 2012 |
| | Alone in the Dark | Aug 2012 |
| | Alpha Protocol | July 2012 |
| | Altered Beast | Sept 2012 |
| | Alvin and the Chipmunks: Chipwrecked | July 2012 |
| | America's Army: True Soldiers | Aug 2012 |
| | Amped 3 | Oct 2012 |
| | Amy | Sept 2012 |
| | Anarchy Reigns | July 2012 |
| | Ancients of Ooga | Sept 2012 |
| | Angry Birds Trilogy | Sept 2012 |
| | Anomaly Warzone Earth | Oct 2012 |
| | Apache: Air Assault | July 2012 |
| | Apples to Apples | Oct 2012 |
| | Aqua | Oct 2012 |
| | Arcana Heart 3 | July 2012 |
| | Arcania Gothica | July 2012 |
| | Are You Smarter than a 5th Grader | July 2012 |
| | Arkadian Warriors | Oct 2012 |

Arkanoid Live -

The Growing Importance of Ray Tracing Due to GPUs

NVIDIA Application Acceleration Engines: advancing interactive realism & development speed. July 2010

NVIDIA Application Acceleration Engines

A family of highly optimized software modules, enabling software developers to supercharge applications with high performance capabilities that exploit NVIDIA GPUs.

- Easy to acquire, license and deploy (most being free)
- Valuable features and superior performance can be quickly added
- Apps stay pace with GPU advancements (via API abstraction)

NVIDIA Application Acceleration Engines

- PhysX physics & dynamics engine: breathing life into real-time 3D; Apex enabling 3D animators
- CgFX programmable shading engine: enhancing realism across platforms and hardware
- SceniX scene management engine: the basis of a real-time 3D system
- CompleX scene scaling engine: giving a broader/faster view on massive data
- OptiX ray tracing engine: making ray tracing ultra fast to execute and develop
- iray physically correct, photorealistic renderer, from mental images: making photorealism easy to add and produce

Application Acceleration Engines

Engines: PhysX (physics & dynamics), CgFX (programmable shading), SceniX (scene management), CompleX (scene scaling), OptiX (ray tracing), iray (photoreal rendering).

- Streamlines the adoption of latest GPU capabilities, getting cutting-edge features into applications ASAP, exploiting the full power of larger and multiple GPUs
- Gaining adoption by key ISVs in major markets:
  - Oil & Gas: Statoil, Open Inventor
  - Design: Autodesk, Dassault Systems
  - Styling: Autodesk, Bunkspeed, RTT, ICIDO
  - Digital Content Creation: Autodesk
  - Medical Imaging: N.I.H

Accelerating Application Development

App Example: Auto Styling

1. Establish the Scene = SceniX
2. Maximize interactive quality + CgFX + OptiX

App Example: Seismic Interpretation

1. Establish the Scene = SceniX
2. Maximize data visualization + quad buffered stereo + volume rendering + ambient occlusion

3. -

NVIDIA PhysX SDK EULA

NVIDIA CORPORATION NVIDIA® PHYSX® SDK END USER LICENSE AGREEMENT

Welcome to the new world of reality gaming brought to you by PhysX® acceleration from NVIDIA®. NVIDIA Corporation (“NVIDIA”) is willing to license the PHYSX SDK and the accompanying documentation to you only on the condition that you accept all the terms in this License Agreement (“Agreement”).

IMPORTANT: READ THE FOLLOWING TERMS AND CONDITIONS BEFORE USING THE ACCOMPANYING NVIDIA PHYSX SDK. IF YOU DO NOT AGREE TO THE TERMS OF THIS AGREEMENT, NVIDIA IS NOT WILLING TO LICENSE THE PHYSX SDK TO YOU. IF YOU DO NOT AGREE TO THESE TERMS, YOU SHALL DESTROY THIS ENTIRE PRODUCT AND PROVIDE EMAIL VERIFICATION TO [email protected] OF DELETION OF ALL COPIES OF THE ENTIRE PRODUCT. NVIDIA MAY MODIFY THE TERMS OF THIS AGREEMENT FROM TIME TO TIME. ANY USE OF THE PHYSX SDK WILL BE SUBJECT TO SUCH UPDATED TERMS. A CURRENT VERSION OF THIS AGREEMENT IS POSTED ON NVIDIA’S DEVELOPER WEBSITE: www.developer.nvidia.com/object/physx_eula.html

1. Definitions.

“Licensed Platforms” means the following:

- Any PC or Apple Mac computer with a NVIDIA CUDA-enabled processor executing NVIDIA PhysX;
- Any PC or Apple Mac computer running NVIDIA PhysX software executing on the primary central processing unit of the PC only;
- Any PC utilizing an AGEIA PhysX processor executing NVIDIA PhysX code;
- Microsoft XBOX 360;
- Nintendo Wii; and/or
- Sony Playstation 3

“Physics Application” means a software application designed for use and fully compatible with the PhysX SDK and/or NVIDIA Graphics processor products, including but not limited to, a video game, visual simulation, movie, or other product. -

The Creators of Resogun and Nex Machina Will Not Make Another Arcade Shooter

The creators of Resogun and Nex Machina will not make another arcade shooter. Over the past decade, the name Housemarque has become synonymous with arcade-style games. From Super Stardust HD, released on PSN in the early days of the PS3, to Resogun, released in the first months of the Playstation 4's life, the Finnish studio has produced many critically acclaimed games. In recent years, however, the good reviews have not been matched by comparable sales. For example, the recent Nex Machina, a colorful vertically scrolling arcade shooter, perfectly represents the hallmarks of Housemarque games: created in collaboration with arcade legend Eugene Jarvis, it received much praise from the gaming press and an average of 88 on Metacritic, making it the eighth-best game in the Playstation 4 ranking. Unfortunately, the developer has confirmed that it sold fewer than 100,000 copies on Playstation 4 and PC, and so made a loss. The same fate befell Matterfall, another arcade-style game, which was released last August and was not a big seller. Ilari Kuttinen, CEO of Housemarque, said: "We are really proud of the work we did with Nex Machina. It was a labor full of passion and love, not least because we worked with our hero, Eugene Jarvis. We would have liked to continue down this path, but unfortunately that is no longer possible. The next game will not be a horizontally or vertically scrolling shooter and will not be focused on leaderboards. It will be something different from the games that made the studio famous." The head of publishing, Mikael Haveri, spoke of the desire to move toward more popular genres and to include multiplayer features, but declined to be more specific. -

Zoom Player Documentation

Table of Contents

- Part I Introduction
  - 1 Feature Chart
  - 2 Features in detail
  - 3 Options & Settings
    - Advanced Options
      - Interface
        - Control Bar
          - Buttons
          - Timeline Area
          - Display
        - Keyboard

-

Getting Started

Getting started

Note: This Help file explains the features available in RecordNow! and RecordNow! Deluxe. Some of the features and projects detailed in the Help are only available in RecordNow! Deluxe. Click here to connect to a Web site where you can learn more about upgrading to RecordNow! Deluxe.

Welcome to RecordNow! by Sonic, your gateway to the world of digital music, video, and data recording. With RecordNow! you can make perfect copies of your CDs and DVDs, transfer music from your CD collection to your computer, create personalized audio CDs containing all of your favorite songs, and much more.

In addition, a full suite of data and video recording programs by Sonic can be started from within RecordNow! to back up your computer, create drag-and-drop discs, watch movies, edit digital video, and create your own DVDs. Some of these programs may already be installed on your computer. Others are available for purchase.

This Help file is divided into the following sections to help you quickly find the information you need:

- Getting started: Learn about System requirements, Getting help, Accessibility, and Removing RecordNow!.
- Things you should know: Useful information for newcomers to digital recording.
- Exploring RecordNow!: Learn to use RecordNow! and find out more about associated programs and upgrade options.
- Audio projects: Step-by-step instructions for every type of audio project.
- Data projects: Step-by-step instructions for every type of data project.
- Backup projects: Step-by-step instructions for backup projects.
- Video projects: Step-by-step instructions for video projects.
- Utilities: Instructions on how to erase and finalize a disc, how to display detailed information about your discs and drives, and how to create disc labels.

-

CinePlayer Datasheet

CinePlayer SDK: Comprehensive Media Player Engine

Formats: DVD-V, VCD, SVCD, -VR, +VR, BDAV

FEATURES AND BENEFITS

The CinePlayer CE Navigator SDK is a software development kit that allows developers for both PC and consumer electronics (CE) platforms to quickly and easily incorporate comprehensive, reliable playback of BD-ROM, DVD, Super Video CD, Video CD, Audio CD and compressed file formats.

- Blu-Ray: Complete support for all profiles and content types including BDAV and BD-Live advanced content.
- DVD Formats: Support for DVD-V, +VR, -VR and Divx including software solutions for CSS and CPRM decryption.
- CD Formats: Support for SVCD, VCD, CD-DA, MP3 and JPEG CDs.

CINEPLAYER

The CinePlayer SDK is Sonic's powerful, comprehensive solution for playback of BD-ROM, DVD-Video, VCD, SVCD, and CDDA formatted discs as well as compressed audio and picture file formats. The SDK makes it simple and fast for OEMs and third-party developers to integrate rich playback functionality into host applications running in embedded environments. The SDK interprets all the details of supported formats so that in-depth knowledge of specifications is not required to enable full-featured, high-quality playback of digital media content.

The family of CinePlayer SDKs is designed to be cross platform to support any embedded environment, with well-defined APIs that enable quick, easy, and flexible integration of media playback capabilities into existing systems.

Powerful and efficient, the CinePlayer supports an impressive feature set with minimal demand on system resources.

Sonic’s 20+ years of engineering experience with optical disc formats provide the highest level of compatibility and qual-