Evaluation of Contactless Versus Contact Fingerprint Data, Phase 2 (Version 1.1)

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Biometrically Enabled Smartphones: The Platform for Mobile-ID

Biometrically Enabled Smartphones: The Platform for mobile-ID. Jonas Andersson, Director Mobile Payment, Mobile ID, Fingerprint Cards AB. WWW.FINGERPRINTS.COM. Fingerprint Sensor Main New iPhone 5S Feature. iPhone 5s and 5c Sales Statistics: total number of iPhone 5s units sold 9,750,000; total number of iPhone 5c units sold 5,800,000. iPhone First Day Sales Statistics: number of iPhone 5s sales in the first 24 hours 2,750,000; number of iPhone 5c pre-orders in the first 24 hours 1,320,000. Statistic Verification: Source: Apple, AT&T, Sprint, Verizon. Research Date: 11.12.2013. Another huge launch for Apple as they release their latest iPhone, the 5s and 5c. Early reports show that the iPhone 5s will break the iPhone 5 first day sales records. The 5c hasn’t fared as well with die-hard Apple fans. A surveyor from Philip Elmer-DeWitt interviewed a number of people camping at the Apple Store and none were there for the 5c. 2014-02-01 WWW.FINGERPRINTS.COM Confidential. Mobile • 1st phone launched to the market in July (Japan) • Most demanding operator (DoCoMo) • Most experienced finger sensor phone OEM (Fujitsu) • 1st Korean player (Pantech) launched in August • 1st Chinese manufacturer (Konka) launched in September, first launch with 1080A • Several Chinese manufacturers soon to follow • Finger sensor highlighted as key selling point by OEMs • FPC selected in several platform reference designs • Repeat customers are proof of market success. Mobile • Happy and satisfied customers give fast follow-up projects • Fujitsu announced another -

Fingerprint Cards Commissioned Research 160718

Commissioned research, 16 July 2018. Fingerprint Cards AB. Electronic Equipment, Instruments & Components | Sweden. Looking past the past. A new dawn: Fingerprint Cards (Fingerprints) today is not the same company as it was during its early phase, and it certainly does not have the same market expectations as it did during the stock price peak in 2015. Nevertheless, we think there are still a few tricks up its sleeve that could chart a path to innovation and growth. The decline of the previously quite profitable market of capacitive fingerprint sensors for smartphone implementation has prompted many investors to give up on the company, but we do not believe it is time to pull the plug just yet. Innovate or retreat: Fingerprints is sitting between a rock and a hard place, as it needs to expand and dominate new and adjacent segments (ie in-display fingerprint sensors and biometric smartcards), while the capacitive fingerprint sensor market is facing price pressure from low-cost competitors. We think the smartcard market, while not fully compensating for the decline of capacitive fingerprint sensors, could help stabilise revenues and gross margins if the product can gain any interest on the market. The biggest risk for the company would be if its new product lines fail to capitalise on the upcoming market window, which is why we base our estimate forecasts on product releases as planned, but with a higher WACC to account for the apparent development risks. KEY DATA: Country Sweden; Bloomberg FING.SS; Reuters FINGb.ST; Share price 6.25; Free float 93%; Market cap (m) SEK 1,916; Website www.fingerprints.com; Next report date 2018/07/19. ABSOLUTE & RELATIVE PERFORMANCE (Fingerprint Cards vs OMX Stockholm PI, jul-17 to apr-18), for -1M, -6M, -12M and YTD: Absolute 1%, -62%, -79%, -61%; Relative 2%, -61%, -84%, -62%. Source: Thomson Reuters and Bloomberg. -

Highlights of the Quarter Third Quarter of 2020

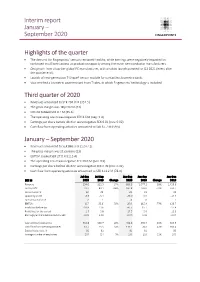

Interim report January – September 2020

Highlights of the quarter:

- The demand for Fingerprints’ sensors remained healthy, while earnings were negatively impacted by continued insufficient access to production capacity among the major semiconductor manufacturers.
- Design win from a top-tier global PC manufacturer, with product launch planned for Q1 2021 (event after the quarter end).
- Launch of next-generation T-Shape® sensor module for contactless biometric cards.
- Visa certified a biometric payment card from Thales, in which Fingerprints’ technology is included.

Third quarter of 2020:

- Revenues amounted to SEK 294.0 M (352.5)
- The gross margin was 18 percent (23)
- EBITDA totaled SEK 8.7 M (35.6)
- The operating result was negative SEK 4.6 M (neg: 3.4)
- Earnings per share before dilution were negative SEK 0.01 (pos: 0.02)
- Cash flow from operating activities amounted to SEK 67.7 M (59.5)

January – September 2020:

- Revenues amounted to SEK 886.3 M (1,077.2)
- The gross margin was 21 percent (23)
- EBITDA totaled SEK 27.0 M (115.4)
- The operating result was negative SEK 39.0 M (pos: 0.9)
- Earnings per share before dilution were negative SEK 0.09 (pos: 0.02)
- Cash flow from operating activities amounted to SEK 111.2 M (78.0)

| SEK M | Jul-Sep 2020 | Jul-Sep 2019 | Change | Jan-Sep 2020 | Jan-Sep 2019 | Change | Jan-Dec 2019 |
|---|---|---|---|---|---|---|---|
| Revenue | 294.0 | 352.5 | -17% | 886.3 | 1,077.2 | -18% | 1,458.6 |
| Gross profit | 53.2 | 80.1 | -34% | 187.8 | 243.0 | -23% | 331.1 |
| Gross margin % | 18 | 23 | | 21 | 23 | | 23 |
| Operating profit | -4.6 | -3.4 | | -39.0 | 0.9 | | -14.4 |
| Operating margin % | -2 | -1 | | -4 | 0 | | -1 |

EBITDA 8.7 35.6 -

Capacitive Fingerprint Sensors

Technology and Patent Infringement Risk Analysis – November 2015. Capacitive Fingerprint Sensors: TMDR92 (AuthenTec-Apple), FPC1020 (Fingerprint Cards), VAL004A8-T (Synaptics) and B1202A0-01 (Synaptics). Who risks being deprived of its imprint in future infringement lawsuits? WILL APPLE AND SAMSUNG CONFRONT EACH OTHER ON THE FINGERPRINT SENSOR FIELD? Fingerprint sensors using capacitive technology represent a fast growing market, especially in smartphones. The fingerprint sensor vendor Idex forecasts an increase of 360% of the number of fingerprint sensor units in mobile devices and of the fingerprint sensor market between 2014 and 2017 (source: N+1 Singer, Idex, 2014). A new generation of capacitive fingerprint sensors has emerged in the last few years and has been incorporated in the last generations of smartphones of companies like Apple, Samsung or Huawei. The market growth for fingerprint sensors is also supported by a new phase of IP development, revealed by an increase in the number of new patents related to capacitive fingerprint sensors published since 2012 (source: Knowmade, ‘Capacitive Fingerprint Sensors Patent Landscape’, 2015). Fingerprint sensors are greatly appreciated by smartphone users. Indeed, this biometric authentication mode is easier and quicker than a password for accessing the device. It also makes it possible to give each user of the device selected access to applications. REPORT OUTLINE • Technology and patent infringement risk analysis of fingerprint sensors TMDR92 (AuthenTec), FPC1020 (Fingerprint Cards), VAL004A8-T (Synaptics) and B1202A0-01 (Synaptics) • November 2015 • PDF (110+ slides) & Excel file • €5,990 (corporate license). KEY FEATURES OF THE REPORT • Deep insight on technology data and manufacturing processes • Comparative studies of product features (similarities & differences) • Key patents related to the target -

Mobile Biometrics Market Report

Mobile Biometric Applications. Rawlson O’Neil King, Lead Researcher, Biometrics Research Group. Chris Burt, Contributing Editor, BiometricUpdate.com. All information, analysis, forecasts and data provided by Biometrics Research Group, Inc. is for the exclusive use of subscribing persons and organizations (including those using the service on a trial basis). All such content is copyrighted in the name of Biometric Research Group, Inc., and as such no part of this content may be reproduced, repackaged, copied or redistributed without the express consent of Biometrics Research Group, Inc. All content, including forecasts, analysis and opinion, has been based on information and sources believed to be accurate and reliable at the time of publishing. Biometrics Research Group, Inc. makes no representation of/or warranty of any kind as to the accuracy or completeness of any information provided, and accepts no liability whatsoever for any loss or damage resulting from opinion, errors, inaccuracies or omissions affecting any part of the content. © 2017, Biometrics Research Group, Inc. About the Biometrics Research Group: Biometrics Research Group, Inc. provides proprietary research, consumer and business data, custom consulting, and industry intelligence to help companies make informed business decisions. We provide news, research and analysis to companies ranging from Fortune 500 to small start-ups through market reports, primary studies, consumer research, custom research, consultation, workshops, executive conferences and our […] particular focus on the law enforcement and national security sectors. Our portfolio of white papers and full research reports is based upon high-quality quantitative analysis, allowing our clients to gain deeper understanding of the marketplace. We customize our research design, data collection, and statistical reporting using proprietary micro- and macroeconomic modeling and regression analysis. -

Fingerprint Sensor Market Demands Driven by Smartcards and IoT

Fingerprint Sensor Market Demands Driven by Smartcards and IoT. Report submitted by Amalnath Sathyan, S3EC, Roll No: 12. Key Topics Covered: Overview; Fingerprint Processing; Fingerprint Sensors; Market Dynamics; Company Profiles; Conclusion. Overview: The technological advancements of the 21st century have given people access to top-notch security tools, such as smartphones, for their privacy. Nowadays, one can use biometric scans such as a fingerprint or even a retina scan as the key to access their personal information. These characteristics of a person act as his/her personal identity card because of one distinct quality: no two persons can have identical fingerprints or even retina patterns. Fingerprint sensing is one of the primary biometric technologies that has been employed on a large scale. Fingerprint processing involves three primary steps: enrollment, searching and verification. The report “Global Fingerprint Sensor Market by Application (Smartphones, Smartcards, PC & Tablet) Industry Analysis & Outlook (2016-2020)” analyzes the development of this market, with focus on the North American and European markets. The major trends, growth drivers as well as issues being faced by the market are discussed in detail in this report. The four major players, Synaptics Incorporated, Fingerprint Cards, NEXT Biometrics ASA and IDEX ASA, are profiled along with their key financials and strategies for growth. -

Fingerprint Cards AB Annual General Meeting

FINGERPRINT CARDS AB ANNUAL GENERAL MEETING. CHRISTIAN FREDRIKSON, CEO. GOTHENBURG, SWEDEN, APRIL 20 2017. Agenda: Introduction; Short-term challenges; 2016 in perspective; Long-term opportunities. INTRODUCTION: We are highly competitive and fight for our market shares. OEMs implement dual sourcing, which fuels competition. Our products are competitive and so are our cost levels. Market share from 55-60% in 2016 to around 50% in 2017. Unique system knowledge: biometric algorithms, ASIC/silicon chip development, software development, packaging expertise. SHORT TERM CHALLENGES: Short term congestion in our value chain. We are part of a long value chain. [Figure: value chain from Fingerprint Cards AB through Distributor (~5), Mod House (~10) and OEM (>20) to Distr&Retail (100.000s), carrying wafers, modules and smartphones; labels: Fingerprints ”Sell-In”, Fingerprints revenue recognition, Fingerprints market share measurement, Fingerprints ”Sell-Through”, Packaging, SW; approximately 4-6 months lead time. Note: Illustrative. Applies to mobile devices.] Inventory levels are a short term challenge. Inventory levels are expected to normalize during Q2. Consumer demand remains as expected and fingerprint sensor penetration is still increasing. 2016 IN PERSPECTIVE: Fingerprints value creation: 55-60% market share; 579 MSEK in taxes*; #1 Innovative Swedish Company; employee sat 80%; >10 billion touches per day; 186 136 new talents in Fingerprints Devices launched in 2016; 20 sensor models. *From Income Statement. 498 MSEK cash-flow wise. Value creation, key financials: Revenues of SEK 6,639 M (2,900), up 129% YoY. Gross Margin 48% -

Fingerprint Cards AB – FPC1268

Fingerprint Cards AB – FPC1268: Capacitive Fingerprint Under Glass from Huawei Mate 9 pro. MEMS report by Stéphane ELISABETH, March 2016. 21 rue la Noue Bras de Fer, 44200 NANTES - FRANCE, +33 2 40 18 09 16, www.systemplus.fr. ©2016 System Plus Consulting. Table of Contents: Overview / Introduction (Executive Summary; Reverse Costing Methodology). Company Profile (FingerPrint Cards; Huawei Mate 9 pro Teardown). Physical Analysis (Synthesis of the Physical Analysis; Physical Analysis Methodology; Package: Package Views & Dimensions, Package Opening, Fingerprint Package Views & Dimensions, Fingerprint Package Opening, Package Cross-Section: Metal Parts, Glass Cover, Fingerprint Package Cross-Section: ASIC, ASIC Copper Pillar, Sensor Bumps, Sensor TSV; Sensor Die: View, Dimensions & Marking, Active area, Density, Pixels & Pads, Delayering …). Comparison Analysis (Comparison with FPC1025; Comparison with Qualcomm Ultrasonic Sensor). Manufacturing Process Flow (Global Overview; Sensor & ASIC Front-End Process; Sensor & ASIC Wafer Fabrication Unit; Sensor Back-End Process Flow; Sensor Back-End Fabrication Unit; ASIC Back-End & LGA Package Assembly Unit; Assembly Process Flow; Glass & Final Assembly Unit). Cost Analysis (Synthesis of the cost analysis; Yields Explanation & Hypotheses; Sensor & ASIC Die: Sensor & ASIC Front-End Cost, Sensor Back-End Cost, Sensor Back-End Cost per process steps, Sensor & ASIC Back-End 0: Probe Test & Dicing, Sensor & ASIC Wafer & Die Cost) -

Fingerprint Cards Sweden

Commissioned Equity Research • 16 May 2019. Telecom Equipment and IT. Fingerprint Cards, Sweden. Solid report, hard to justify share price rally: Fingerprint's Q1 sales were SEK 343m, up 18% y/y, marking the first quarter of yearly revenue growth since 2016. The gross margin came in at 23.0%, 2.2 pp below consensus, albeit with a more promising outlook given. We increase our estimates for 2019 revenue thanks to stabilising market conditions in capacitive sensors. We also see improved gross profit from the higher share of high-margin sensor FPC1511, but we reduce our long-term estimates for in-display sensors due to its late entrance to the market and need to play catch-up. We trim our EBIT estimates by 3.3%, 2.7% and 2.5% for 2020-22, respectively, and our new DCF-derived fair value range lands at SEK 8.1-15.5 (8.3-16.0). The stock was shorted by around 8% of its market cap ahead of the report, leading to a short squeeze. However, our fundamental view has not changed materially and justifying a higher price would require a brighter outlook than we assume either for the in-display sensor or the smartcard business. Increased confidence in short-term profitability: With 18% revenue growth y/y and shipment volumes for Fingerprint's core customers declining less than the market, we see stronger conviction in the company's ability to increase market share in capacitive sensors for 2019E. KEY DATA: Stock country Sweden; Bloomberg FINGB SS; Reuters FINGb.ST; Share price (close) SEK 18.54; Free Float 100%; Market cap. (bn) EUR 0.54/SEK 5.82; Website www.fingerprints.com; Next report date 15 Aug 2019. PERFORMANCE: Fingerprint Cards vs Sweden OMX Stockholm All-Share (Rebased), May16 to May19. Source: Thomson Reuters. -

Record Sales and Pole Position in a Multi-Billion Market, Which Is Just Opening

Press release Gothenburg, October 17, 2013 Record sales and pole position in a multi-billion market, which is just opening Fingerprint Cards AB (publ), Corp. Reg. No. 556154-2381, (FPC), Third quarter 2013: Sales totaled SEK 31.6 M (2.6). Gross profit amounted to SEK 15.5 M (loss: 0.9). Profit after financial items amounted to a loss of SEK 1.3 M (loss: 9.7). Gross margin was 49% (neg: 34). The loss per share was SEK 0.03 (loss: 0.22). Cash and cash equivalents at the end of the period amounted to SEK 142.3 M (25.9). The order backlog at the end of the period was SEK 38.9 M (0.0). FPC published a new forecast for 2013 on September 23 as well as forecasts for 2014 and 2015. Fujitsu, in cooperation with DoCoMo, launched the first mobile phone incorporating FPC’s fingerprint technology. Konka launched its first smartphone with the FPC1080A fingerprint sensor in China. WWTT launched FingerQ products with FPC’s 1080A sensor. Pantech in South Korea launched a new mobile phone incorporating FPC’s fingerprint technology. FPC secured a new DW* from a major Tier 2 OEM in China. FPC, in partnership with a leading US operator, won a smartphone DW* from an existing OEM customer in Asia. FPC secured a new major DW* for three new smartphones from a prominent existing customer in Asia. FPC won new DWs* for smartphones and tablet devices from existing Tier 2 customers in Asia. FPC secured a Chinese order for area sensors valued at SEK 24 M. -

Biometric Payment Cards

A SECURE TECHNOLOGY ALLIANCE PAYMENTS COUNCIL WHITE PAPER. Biometric Payment Cards. Version 1.0, March 2019. Secure Technology Alliance ©2019. About the Secure Technology Alliance: The Secure Technology Alliance is a not-for-profit, multi-industry association working to stimulate the understanding, adoption and widespread application of secure solutions, including smart cards, embedded chip technology, and related hardware and software across a variety of markets including authentication, commerce and Internet of Things (IoT). The Secure Technology Alliance, formerly known as the Smart Card Alliance, invests heavily in education on the appropriate uses of secure technologies to enable privacy and data protection. The Secure Technology Alliance delivers on its mission through training, research, publications, industry outreach and open forums for end users and industry stakeholders in payments, mobile, healthcare, identity and access, transportation, and the IoT in the U.S. and Latin America. For additional information, please visit www.securetechalliance.org. Copyright © 2019 Secure Technology Alliance. All rights reserved. Reproduction or distribution of this publication in any form is forbidden without prior permission from the Secure Technology Alliance. The Secure Technology Alliance has used best efforts to ensure, but cannot guarantee, that the information described in this report is accurate as of the publication date. The Secure Technology Alliance disclaims all warranties as to the accuracy, completeness or adequacy of information in this report. This white paper does not endorse any specific product or service. Product or service references are provided to illustrate the points being made. Table of Contents: 1 Introduction; 2 Overview -

Fingerprint Cards AB (Publ.)

Fingerprint Cards AB (Publ.) Year-end report 2015. Fourth quarter 2015: • Revenues amounted to SEK 1,351.5 M (105.0), up 1,187% compared with fourth quarter of 2014. • Gross margin was 46% (32). • Operating profit amounted to SEK 517.6 M (loss: 30.0). • Earnings per share increased to SEK 6.39 (loss: 0.49). • Cash flow from operating activities was SEK 537.4 M (neg: 74.7). January to December 2015: • Revenues amounted to SEK 2,900.5 M (233.6), up 1,142% compared with 2014. • Gross margin was 43% (31). • Operating profit amounted to SEK 910.3 M (loss: 145.2). • Earnings per share increased to SEK 12.62 (loss: 2.47). • Cash flow from operating activities was SEK 908.1 M (neg: 174.3). Outlook: • The 2016 revenue guidance is updated to SEK 7,000-8,500 M, compared with the previous guidance of SEK 6,500-8,500 M. • As previously announced, it is estimated that the operating margin for 2016 will exceed the operating margin for the second half of 2015. The operating margin for the second half of 2015 was 37%. The Board’s proposal to the Annual General Meeting 2016: • The Board proposes that no dividend be paid for fiscal year 2015 • The Board proposes a stock split of 5:1 • The Board proposes a mandate to buy back and transfer FING B shares. CEO’s comments: Strong closing of a record year. I am very proud to report that Fingerprint Cards (FPC) closed 2015 in line with the strong performance achieved in the first three quarters of the year.