Exercise 3: Asynchronous I/O and Caching

Recommended publications

-

Linux Pocket Guide.Pdf

3rd Edition Linux Pocket Guide ESSENTIAL COMMANDS Daniel J. Barrett 3RD EDITION Linux Pocket Guide Daniel J. Barrett Linux Pocket Guide by Daniel J. Barrett Copyright © 2016 Daniel Barrett. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebasto‐ pol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promo‐ tional use. Online editions are also available for most titles (http://safaribook‐ sonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Editor: Nan Barber Production Editor: Nicholas Adams Copyeditor: Jasmine Kwityn Proofreader: Susan Moritz Indexer: Daniel Barrett Interior Designer: David Futato Cover Designer: Karen Montgomery Illustrator: Rebecca Demarest June 2016: Third Edition Revision History for the Third Edition 2016-05-27: First Release See http://oreilly.com/catalog/errata.csp?isbn=9781491927571 for release details. The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Linux Pocket Guide, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc. While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellec‐ tual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights. -

Traceroute Is One of the Key Tools Used for Network Troubleshooting and Scouting

1 Introduction Traceroute is one of the key tools used for network troubleshooting and scouting. It has been available since networking's early days. Because traceroute is based on TTL header modification, it crafts its own network packets. The goal of this assignment is to re-implement a traceroute with cutting edge techniques designed to improve its scouting capabilities and bypass SPI firewalls. In this assignment you will learn about packet injection and sniffing. The assignment also teaches about more subtle topics such as network latency, packet filtering, and Q.O.S. 1.1 Instructions This project has to be completed sequentially as each part depends on the previous ones. The required coding language is C. You should consider using libnet [1] and libpcap [2], however using raw sockets is permitted as well. Note that the exercises become progressively more difficult, with Exercise 5 likely to consume the greatest amount of effort. 1.2 Submission and Grading To compile your project, the script will use the command make, executed in the project directory. Then it will launch our tests, using an executable name "traceng" you have to make sure your make file results in this executable name, in the same project directory. Make sure that the command line options and the output format are correctly implemented as they will be used by our script during the grading process. Specific examples of how we are going to test your executable are provided later in this document, for each exercise. Your project directory should also contain a file called README which is text file describing the highlights of your solution to each exercise anything special that you did, information required in the specific exercise, what is the most interesting thing you learned in the exercise, and anything else we need to take into account. -

The Bioinformatics Lab Linux Proficiency Terminal-Based Text Editors Version Control Systems

The Bioinformatics Lab Linux proficiency terminal-based text editors version control systems Jonas Reeb 30.04.2013 “What makes you proficient on the command line?” - General ideas I Use CLIs in the first place I Use each tool for what it does best I Chain tools for more complex tasks I Use power of shell for small scripting jobs I Automate repeating tasks I Knowledge of regular expression 1 / 22 Standard tools I man I ls/cd/mkdir/rm/touch/cp/mv/chmod/cat... I grep, sort, uniq I find I wget/curl I scp/ssh I top(/htop/iftop/iotop) I bg/fg 2 / 22 Input-Output RedirectionI By default three streams (“files”) open Name Descriptor stdin 0 stdout 1 stderr 2 Any program can check for its file descriptors’ redirection! (isatty) 3 / 22 Input-Output RedirectionII Output I M>f Redirect file descriptor M to file f, e.g. 1>f I Use >> for appending I &>f Redirect stdout and stderr to f I M>&N Redirect fd M to fd N Input I 0<f Read from file f 4 / 22 Pipes I Forward output of one program to input of another I Essential for Unix philosophy of specialized tools I grep -P -v "^>" *.fa | sort -u > seqs I Input and arguments are different things. Use xargs for arguments: ls *.fa | xargs rm 5 / 22 Scripting I Quick way to get basic programs running I Basic layout: #!/bin/bash if test"$1" then count=$1 else count=0 fi for i in {1..10} do echo $((i+count)) let"count +=1" done 6 / 22 Motivation - “What makes a good text editor” I Fast execution, little system load I Little bandwidth needed I Available for all (your) major platforms –> Familiar environment I Fully controllable via keyboard I Extensible and customizable I Auto-indent, Auto-complete, Syntax highlighting, Folding, .. -

Ongoing Software Development Projects Under Linux/QNX

Ongoing Software Development Projects under Linux/QNX 1. CCD Image acquistion software under Linux i) Gtk version( 2.0 ) ii) IDL version (6.3) -V.Arumugam, A.V.Ananth 2. Software development under QNX REAL-TIME O.S. Although our efforts are for CONTROL applications specifically for HAGAR, as an initial step we have tried CCD image acquistion software development in QNX to familiarise with various features like Photon Application Builder(PhAB), Resource Manager, Process Manager etc. -V.Arumugam, A.V.Ananth 3. Distributed Telescope control system for 30” telescope at VainuBappu Observatory.The approach we have planned is similar to that of 2 Mtr. Telescope.The advantage of this is scheme is its enoromous flexibility in implementation in the sense ,that different sub-systems could be modified/implemented at different points of time without substantially modify software related to other sub-systems.Also back-ends can also communicate with the telescope and other sub-systems if required to tap information. -V.Arumugam, A.V.Ananth, KavithaPathak, Faseehana, Anbazhagan CCD Image acquisition software under Linux i) Gtk version (2.0) ii) IDL version (6.3) MODEL : It is a Client/Server Design where the Server can be local or at remote site and client accesses the server on LAN/WAN. Applicability : This software intended for 2Kx2K TEK chip and 2Kx4K Marconi chip. Can be used with a little modification for any other sensor in future if appropriate Hardware modifications are made for the existing controller. Drawbacks : As linux and gtk/genome are freely available software and are continuously being modified their version keep changing and hence maintenance of camera software is a hectic task. -

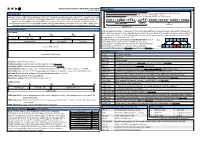

Ipv6 Cheat Sheet

Internet Protocol Version 6 (IPv6) Basics Cheat Sheet IPv6 Addresses by Jens Roesen /64 – lan segment, 18,446,744,073,709,551,616 v6 IPs IPv6 quick facts /48 – subscriber site, 65536 /64 lan segments successor of IPv4 • 128-bit long addresses • that's 296 times the IPv4 address space • that's 2128 or 3.4x1038 or over 340 /32 – minimum allocation size, 65536 /48 subscriber sites, allocated to ISPs undecillion IPs overall • customer usually gets a /64 subnet, which yields 4 billion times the Ipv4 address space • no need for network address translation (NAT) any more • no broadcasts any more • no ARP • stateless address 2001:0db8:0f61:a1ff:0000:0000:0000:0080 configuration without DHCP • improved multicast • easy IP renumbering • minimum MTU size 1280 • mobile IPv6 • global routing prefix subnet ID interface ID mandatory IPsec support • fixed IPv6 header size of 40 bytes • extension headers • jumbograms up to 4 GiB subnet prefix /64 IPv6 & ICMPv6 Headers IPv6 addresses are written in hexadecimal and divided into eight pairs of two byte blocks, each containing four hex IPv6 header digits. Addresses can be shortened by skipping leading zeros in each block. This would shorten our example address to 0 8 16 24 32 2001:db8:f61:a1ff:0:0:0:80. Additionally, once per IPv6 IP, we can replace consecutive blocks of zeros with a version traffic class flow label double colon: 2001:db8:f61:a1ff::80. The 64-bit interface ID can/should be in modified EUI-64 format. A MAC 00 03 ba 24 a9 6c payload length next header hop limit 48-bit MAC can be transformed to an 64-bit interface ID by inverting the 7th (universal) bit and inserting a ff and fe byte after rd source IPv6 address the 3 byte. -

DDOS Detection and Denial Using Third Party Application in SDN

International Conference on Energy, Communication, Data Analytics and Soft Computing (ICECDS-2017) DDOS Detection and Denial using Third Party Application in SDN Roshni Mary Thomas Divya James Dept. of Information Technology Dept. of Information Technology Rajagiri School of Engineering & Technology Rajagiri School of Engineering & Technology Ernakulam, India Ernakulam, India [email protected] [email protected] Abstract— Software Defined Networking(SDN) is a developing introduced i.e, Software Defined Networking (SDN). Software area where network managers can manage the network behavior Defined Networking (SDN) is a developing area where it programmatically such as modify, control etc. Using this feature extract the limitations of traditional network which make we can empower, facilitate or e network related security networking more uncomplicated. In SDN we can develop or applications due to the its capacity to reprogram the data plane change the network functions or behavior program. To make at any time. DoS/DDoS attacks are attempt to make controller the decision where the traffic needs to send with the updated functions such as online services or web applications unavailable to clients by exhausting computing or memory resources of feature SDN decouple the network planes into two. servers using multiple attackers. A DDoS attacker could produce 1. Control Plane enormous flooding traffic in a short time to a server so that the 2. Data Plane services provided by the server get degraded. This will lose of In control plane we can add update or add new features customer support, brand trust etc. to improve the network programmatically also we can change To detect this DDoS attack we use a traffic monitoring method the traffic according to our decision and in updated traffic are iftop in the server as third party application and check the traffic applied in data plane. -

High Performance Linux Shell Programming Reference 2015 Edition

Extensive, example-based Linux shell programming reference includes an English-to-shell dictionary, a tutorial and handbook, and many tables of information useful to programmers. Besides listing more than 2000 shell one- liners, it explains the principles and techniques of how to increase performance (execution speed, reliability, and efficiency), which apply to many other programming languages beyond shell. High Performance Linux Shell Programming Reference 2015 Edition Order the complete book from Booklocker.com http://www.booklocker.com/p/books/7831.html?s=pdf or from your favorite neighborhood or online bookstore. Your free excerpt appears below. Enjoy! High Performance Linux Shell Programming Reference 2015 Edition High Performance Linux Shell Programming Reference, 2015 Edition Copyright © 2015 by Edward J. Smeltz ISBN 978-1-63263-401-6 All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, recording or otherwise, without the prior written permission of the author. Printed on acid-free paper All information herein is believed to be accurate and correct, but the author and Booklocker.com, Inc assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained in this book. Manufacturers and sellers often use specific designations for their products to distinguish them in the marketplace. Where such designations appear in this book, and E. J. Smeltz was aware of a trademark claim, the designations have been printed in all caps or in initial caps. All trademarks are the property of their respective owners. -

Flexible Internet Router for Linux

fli4l – flexible internet router for linux Version 3.10.18 The fli4l-Team email: [email protected] September 15, 2019 Contents 1. Documentation of the base package 10 1.1. Introduction...................................... 10 2. Setup and Configuration 13 2.1. Unpacking the archives................................ 13 2.2. Configuration..................................... 14 2.2.1. Editing the configuration files........................ 14 2.2.2. Configuration via a special configuration file................ 15 2.2.3. Variables................................... 15 2.3. Setup flavours..................................... 15 2.3.1. Router on a USB-Stick............................ 16 2.3.2. Router on a CD, or network boot...................... 16 2.3.3. Type A: Router on hard disk—only one FAT partition.......... 16 2.3.4. Type B: Router on hard disk—one FAT and one ext3 partition..... 16 3. Base configuration 18 3.1. Example file...................................... 19 3.2. General settings.................................... 25 3.3. Console settings.................................... 30 3.4. Hints To Identify Problems And Errors...................... 31 3.5. Usage of a customized /etc/inittab......................... 32 3.6. Localized keyboard layouts............................. 32 3.7. Ethernet network adapter drivers.......................... 33 3.8. Networks....................................... 42 3.9. Additional routes (optional)............................. 44 3.10. The Packet Filter................................... 44 3.10.1. Packet Filter -

SECUMOBI SERVER Technical Description

SECUMOBI SERVER Technical Description Contents SIP Server 3 Media Relay 10 Dimensioning of the Hardware 18 SIP server 18 Media Proxy 18 Page 2 of 18 SIP Server Operatingsystem: Debian https://www.debian.org/ Application: openSIPS http://www.opensips.org/ OpenSIPS is built and installed from source code. The operating system is installed with the following packages: Package Description acpi displays information on ACPI devices acpi-support-base scripts for handling base ACPI events such as the power button acpid Advanced Configuration and Power Interface event daemon adduser add and remove users and groups anthy-common input method for Japanese - common files and dictionary apt Advanced front-end for dpkg apt -utils APT utility programs aptitude terminal-based package manager (terminal interface only) autopoint The autopoint program from GNU gettext backup -manager command -line backup tool base-files Debian base system miscellaneous files base-passwd Debian base system master password and group files bash The GNU Bourne Again SHell bc The GNU bc arbitrary precision calculator language binutils The GNU assembler, linker and binary utilities bison A parser generator that is compatible with YACC bsdmainutils collection of more utilities from FreeBSD bsdutils Basic utilities from 4.4BSD-Lite build -essential Informational list of build -essential packages busybox Tiny utilities for small and embedded systems bzip2 high-quality block-sorting file compressor - utilities ca-certificates Common CA certificates console-setup console font and keymap -

Linux Commands Cheat Sheet

LINUX COMMANDS CHEAT SHEET by Gokhan Kosem, www.ipcisco.com System Information Commands User Information Commands shows user&group ids of the current uname -a shows Linux system info id user uname -r shows kernel release info last shows the last users logged on cat /etc/redhat-release shows installed redhat version whoami shows who you are logged in as uptime displays system running/life time who shows who is logged into the system shows who is logged in and what hostname shows system host name w they do hostname -I shows ip addresses of the host groupadd test creates group “test” creates “Gokhan” account with last reboot displays system reboot history useradd -c “GK” -m Gokhan comment “GK” date displays current date and time userdel Gokhan deletes account “Gokhan” usermod -aG Networkers adds account “Gokhan” to the cal displays monthly calendar Gokhan “Networkers” group mount shows mounted filesy-stems File Permission changes ownership of a File Commands chown user file/directory shows file type and access changes user and group for a file or ls -l chown user:group filename permission directory ls -a lists also hidden files r (read) permission, 4 ls -al lists files and directories detailly w (write) permission, 2 File Permissions pwd shows present directory x (execute) permission, 1 mkdir directory creates a directory -= no permission rm xyz deletes file xyz File Owner owner/group/everyone deletes directory /xyz and its 777 | Owner, Group, Everyone has rm -r /xyz contents recursively rwx permissions forcefully deletes abc file without -

A Standardized and Flexible Ipv6 Architecture for Field Area Networks

White Paper A Standardized and Flexible IPv6 Architecture for Field Area Networks: Smart-Grid Last-Mile Infrastructure Last update: January 2014 This paper is intended to provide a synthetic and holistic view of open-standards-based Internet Protocol Version 6 (IPv6) architecture for smart-grid last-mile infrastructures in support of a number of advanced smart-grid applications (meter readout, demand-response, telemetry, and grid monitoring and automation) and its benefit as a true multiservice platform. In this paper, we show how the various building blocks of IPv6 networking infrastructure can provide an efficient, flexible, highly secure, and multiservice network based on open standards. This paper does not address transition paths for electric utilities that deal with such issues as legacy devices, network and application integration, and the operation of hybrid network structures during transitional rollouts. Figure 1. The Telecom Network Architecture Viewed as a Hierarchy of Interrelated Networks Page 1 of 23 1. Introduction Last-mile networks have gained considerable momentum over the past few years because of their prominent role in the smart-grid infrastructure. These networks, referred to as neighborhood-area networks (NANs) in this document, support a variety of applications including not only electricity usage measurement and management, but also advanced applications such as demand/response (DR), which gives users the opportunity to optimize their energy usage based on real-time electricity pricing information; distribution automation (DA), which allows distribution monitoring and control; and automatic fault detection, isolation and management. NANs also serve as a foundation for future virtual power plants, which comprise distributed power generation, residential energy storage (for example, in combination with electric vehicle (EV) charging), and small-scale trading communities. -

Section 4 Network Programming and Administration Exercises

Lab Manual SECTION 4 NETWORK PROGRAMMING AND ADMINISTRATION EXERCISES Structure Page Nos. 4.0 Introduction 48 4.1 Objectives 48 4.2 Lab Sessions 49 4.3 List of Unix Commands 52 4.4 List of TCP/IP Ports 54 3.5 Summary 58 3.6 Further Readings 58 4.0 INTRODUCTION In the earlier sections, you studied the Unix, Linux and C language basics. This section contains more practical information to help you know best about Socket programming, it contains different lab exercises based on Unix and C language. We hope these exercises will provide you practice for socket programming. Towards the end of this section, we have given the list of Unix commands frequently required by the Unix users, further we have given a list of port numbers to indicate the TCP/IP services which will be helpful to you during socket programming. To successfully complete this section, the learner should have the following knowledge and skills prior to starting the section. S/he must have: • Studied the corresponding course material of BCS-052 and completed the assignments. • Proficiency to work with Unix and Linux and C interface. • Knowledge of networking concepts, including network operating system, client- server relationship, and local area network (LAN). Also, to successfully complete this section, the learner should adhere to the following: • Before attending the lab session, the learner must already have written steps/algorithms in his/her lab record. This activity should be treated as home- work that is to be done before attending the lab session. • The learner must have already thoroughly studied the corresponding units of the course material (BCS-052) before attempting to write steps/algorithms for the problems given in a particular lab session.