Visual Apollo: a Graphical Exploration of Computer-Human Relationships Yanni Alexander Loukissas, David Mindell

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Bills Paid by Payee Second Quarter Fiscal Year 20/21

BILLS PAID BY PAYEE SECOND QUARTER FISCAL YEAR 20/21 A complete detailed record is available at the Elko County Comptroller's Office. Office Hours: Monday-Friday 8:00 A.M. until 5:00 P.M. 540 Court St. Suite 101, Elko, NV 89801. Office Phone (775) 753-7073. *Disclaimer: The original and any duplicate or copy of each receipt, bill, check, warrant, voucher or other similar document that supports a transaction, the amount of which is shown in the total of this report, along with the name of the person to whom such allowance is made and the purpose of the allowance, is a public record that is available for inspection and copying by any person pursuant to the provisions of chapter 239 of NRS.

VENDOR CHECK ARGO COMPANY, INC 821.98 A ARNOLD BECK CONSTRUCTION 1,599.40 AT&T 3,697.06 5TH GEAR POWER SPORTS 41.74 AT&T MOBILITY 42.24 A PLUS URGENT CARE 766 AUTO GRAPHICS 400 A PLUS URGENT CARE ELKO 846.33 B A-1 RADIATOR REPAIR INC. 1,079.00 BARBARA J HOFHEINS 121.9 ACKERMAN MATTHEW 1388.93 BARBARA JO MAPLE 762.5 ADVANCE AUTO CARE 629.89 BARRY RENTAL 86.03 ADVANCED RADIOLOGY 1195.5 BEI CAPELI 5,000.00 AIRGAS 541.3 BERTOLINI VALVES INC 1,859.12 AIRPORT SHELL 9 BETTY HICKS 177.56 ALCOHOL MONITORING SYSTEMS INC 791 BLAINE ROBINSON & NIKIERA CAST 1,132.00 ALERTUS TECHNOLOGIES LLC 4,950.00 BLOHM JEWELERS INC 5,000.00 ALICIA GUAMAN 58 BLUE 360 MEDIA LLC 70.75 ALLIED UNIVERAL SECURITY SERVI 37,229.50 BOARD OF REGENTS 14300.04 ALLUSIVE IMAGES 5,000.00 BOB BARKER COMPANY 4418.55 ALYSSA K DANN 120 BONANZA PRODUCE 320.23 AMANDA JEAN GIRMAUDO 160 BOSS TANKS 5,024.00 AMBER DAWN -

March 21–25, 2016

FORTY-SEVENTH LUNAR AND PLANETARY SCIENCE CONFERENCE PROGRAM OF TECHNICAL SESSIONS MARCH 21–25, 2016 The Woodlands Waterway Marriott Hotel and Convention Center, The Woodlands, Texas

INSTITUTIONAL SUPPORT: Universities Space Research Association; Lunar and Planetary Institute; National Aeronautics and Space Administration

CONFERENCE CO-CHAIRS: Stephen Mackwell, Lunar and Planetary Institute; Eileen Stansbery, NASA Johnson Space Center

PROGRAM COMMITTEE CHAIRS: David Draper, NASA Johnson Space Center; Walter Kiefer, Lunar and Planetary Institute

PROGRAM COMMITTEE: P. Doug Archer, NASA Johnson Space Center; Katherine Bermingham, University of Maryland; Janice Bishop, SETI and NASA Ames Research Center; Jeremy Boyce, University of California, Los Angeles; Lisa Danielson, NASA Johnson Space Center; Deepak Dhingra, University of Idaho; Stephen Elardo, Carnegie Institution of Washington; Marc Fries, NASA Johnson Space Center; Cyrena Goodrich, Lunar and Planetary Institute; John Gruener, NASA Johnson Space Center; Justin Hagerty, U.S. Geological Survey; Lindsay Hays, Jet Propulsion Laboratory; Nicolas LeCorvec, Lunar and Planetary Institute; Yo Matsubara, Smithsonian Institute; Francis McCubbin, NASA Johnson Space Center; Andrew Needham, Carnegie Institution of Washington; Lan-Anh Nguyen, NASA Johnson Space Center; Paul Niles, NASA Johnson Space Center; Dorothy Oehler, NASA Johnson Space Center; D. Alex Patthoff, Jet Propulsion Laboratory; Elizabeth Rampe, Aerodyne Industries, Jacobs JETS at NASA Johnson Space Center; Carol Raymond, Jet Propulsion Laboratory; Paul Schenk, -

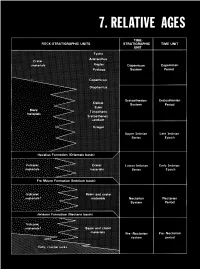

Relative Ages

CONTENTS: Introduction; Stratigraphic nomenclature; Superpositions; Mare-crater relations; Crater-crater relations; Basin-crater relations; Mapping conventions; Crater dating; General principles; Size-frequency relations; Morphology of large craters; Morphology of small craters, by Newell J. Trask; D_L method; Summary

INTRODUCTION The goals of both terrestrial and lunar stratigraphy are to integrate geologic units into a stratigraphic column applicable over the whole planet and to calibrate this column with absolute ages. The first step in reconstructing

table 7.1). The first three of these sequences, which are older than the visible mare materials, are also dominated internally by the deposits of basins. The fourth (youngest) sequence consists of mare and crater materials. This chapter explains the general methods of stratigraphic analysis that are employed in the next six chapters -

Glossary of Lunar Terminology

Glossary of Lunar Terminology

albedo: A measure of the reflectivity of the Moon's visible surface. The Moon's albedo averages 0.07, which means that its surface reflects, on average, 7% of the light falling on it.

anorthosite: A coarse-grained rock, largely composed of calcium feldspar, common on the Moon.

basalt: A type of fine-grained volcanic rock containing the minerals pyroxene and plagioclase (calcium feldspar). Mare basalts are rich in iron and titanium, while highland basalts are high in aluminum.

breccia: A rock composed of a matrix of larger, angular stony fragments and a finer, binding component.

caldera: A type of volcanic crater formed primarily by a sinking of its floor rather than by the ejection of lava.

gabbro: A coarse crystalline rock, often found in the lunar highlands, containing plagioclase and pyroxene. Anorthositic gabbros contain 65-78% calcium feldspar.

gardening: The process by which the Moon's surface is mixed with deeper layers, mainly as a result of meteoritic bombardment.

ghost crater (ruined crater): The faint outline that remains of a lunar crater that has been largely erased by some later action, usually lava flooding.

glacis: A gently sloping bank; an old term for the outer slope of a crater's walls.

graben: A sunken area between faults.

highlands: The Moon's lighter-colored regions, which are higher than their surroundings and thus not covered by dark lavas. Most highland features are the rims or central peaks of impact sites.

central peak: A mountainous landform at or near the center of certain lunar craters, possibly formed by an -

California State University Fullerton Emeriti Directory 2018

California State University Fullerton Emeriti Directory 2018

Excerpts from the Emeriti Bylaws: The purpose of the Emeriti of California State University, Fullerton shall be to promote the welfare of California State University, Fullerton; to enhance the continuing professionalism of the emeriti; and to provide for the fellowship of the members. Those eligible for membership shall include all persons awarded emeritus status by the President of California State University. Those eligible for associate membership shall be the spouse of any deceased Emeritus.

California State University Emeritus and Retired Faculty Association: The Emeriti of California State University, Fullerton are affiliated with the California State University Emeritus and Retired Faculty Association (CSU-ERFA). CSU-ERFA is the statewide, non-profit organization that works to protect and advance the interests of retired faculty, academic administrators and staff of the CSU at the state and national level. Membership is open to all members of the Emeriti of CSUF including emeriti staff. CSU-ERFA monthly dues are very modest and are related to the amount of your 15% rebate of dues collected from CSUF members for use by our local emeriti group. We encourage all Fullerton emeriti to consider joining CSU-ERFA. For more information go to http://csuerfa.org or send email to [email protected].

CSUF Emeriti Directory August 2018: Emeriti Officers; Current Faculty and Staff Emeriti; Deceased Emeriti; Emeriti by Department; Emeriti Associates

Emeriti Officers

Emeriti Board: President Jack Bedell [email protected]; Vice President Paul Miller [email protected]; Secretary George Giacumakis [email protected]

Local Representatives to CSU-ERFA: Vince Buck [email protected]; Diana Guerin [email protected]

Directory Information: Please send changes of address and contact information to [email protected]

Emeriti Parking and Benefits Treasurer Rachel Robbins, Asst. -

GRAIL-Identified Gravity Anomalies in Oceanus Procellarum: Insight Into Subsurface Impact and Magmatic Structures on the Moon

GRAIL-identified gravity anomalies in Oceanus Procellarum: Insight into subsurface impact and magmatic structures on the Moon

Ariel N. Deutsch (a), Gregory A. Neumann (b), James W. Head (a), Lionel Wilson (a, c)

(a) Department of Earth, Environmental and Planetary Sciences, Brown University, Providence, RI 02912, USA
(b) NASA Goddard Space Flight Center, Greenbelt, MD 20771, USA
(c) Lancaster Environment Centre, Lancaster University, Lancaster LA1 4YQ, UK

Corresponding author: Ariel N. Deutsch
Corresponding email: [email protected]

Date of re-submission: 5 April 2019
Re-submitted to: Icarus
Manuscript number: ICARUS_2018_549

Highlights:
• Four positive Bouguer gravity anomalies are analyzed on the Moon's nearside.
• The amplitudes of the anomalies require a deep density contrast.
• One 190-km anomaly with crater-related topography is suggestive of mantle uplift.
• Marius Hills anomalies are consistent with intruded dike swarms.
• An anomaly south of Aristarchus has a crater rim and possibly magmatic intrusions.

Key words: Moon; gravity; impact cratering; volcanism

Abstract

Four quasi-circular, positive Bouguer gravity anomalies (PBGAs) that are similar in diameter (~90–190 km) and gravitational amplitude (>140 mGal contrast) are identified within the central Oceanus Procellarum region of the Moon. These spatially associated PBGAs are located south of Aristarchus Plateau, north of Flamsteed crater, and two are within the Marius Hills volcanic complex (north and south). Each is characterized by distinct surface geologic features suggestive of ancient impact craters and/or volcanic/plutonic activity. -

Lunar Sample Compendium, 63335.Pdf

63335 Impact Melt Breccia 65.4 grams

Introduction: 63335 is a sample chipped off of Shadow Rock (Ulrich 1973). It was collected as several fragments (figure 1) along with 60017 and 63355, also from Shadow Rock; see transcript in 60017. A few zap pits are found on the surface. The age is not determined.

Petrography: Kridelbaugh et al. (1973) described 63335 as a dark grey microbreccia, cut by a light vein of devitrified glass. Clasts include anorthosite, plagioclase and gabbroic anorthosite, but there is more matrix than clasts. Ryder and Norman (1981) describe 63335 as a complex mix of melt-breccia and anorthositic clasts. The vein has a spherulitic texture (figure 3) while the matrix is a mesostasis-olivine-plagioclase melt rock with devitrified glass (figure 4). Misra and Taylor (1975) and Hunter and Taylor (1981) reported the characteristics of metal particles in 63335 (meteoritic).

Significant Clast: Plagioclase Clast. Obvious in figures of 63335,6. Not studied.

Chemistry: Hubbard et al. (1974) and Laul et al. (1974) obtained similar data for 63335, which is also similar to that of

Figure 1: PET photo of 63335 right out of the bag. Cube is 1 cm. S72-37808.

Figure 2: 63335,6. Cube is 1 cm. S75-33389.

Figure 3: Shadow Rock near North Ray Crater, Apollo 16. S16-106-17392, 17394. Boulder is 5 m across.

Figure 4: Carbon content of Apollo 16 samples showing 63335 (data from Moore and Lewis 1976).

Lunar Sample Compendium, C Meyer 2009 -

Interpreting Cultural Resources at Craters of the Moon National Monument & Preserve

National Park Service, U.S. Department of the Interior

Interpreting Cultural Resources at Craters of the Moon National Monument & Preserve

Table of Contents: Introduction; Interpretive Themes; Protecting Cultural Resources; Native Americans; Fur Trade and Early Exploration; Emigrants; Surveys, Mining, and Ranching; Robert Limbert; Park Structures; The Apollo Astronauts; Monument Expansion; Appendix I: Timeline of Events; Appendix II: Projectile Point Chronology; Sources

Introduction

Volcanic geology is what makes Craters of the Moon a national monument. Every year visitors are awed, inspired, and inquisitive about the vast volumes of blackened lava that cover hundreds of square miles of Idaho’s high desert.

The emphasis on the area’s volcanism as the reason for designating the area a monument is clear in Calvin Coolidge’s 1924 proclamation, citing the “remarkable fissure eruption together with its associated volcanic cones, craters, rifts, lava flows, caves, natural bridges, and other phenomena…of unusual scientific value and general interest.”

sites once used by the Shoshone on their travels through the area. Since then, most travelers have done everything they could to avoid the uninviting lava fields.

However limited, the cultural record of the monument boasts an amazing range of groups and individuals that have alternately shunned and embraced the harsh terrain over thousands of years, and for a variety of reasons. It is this range of uses and emotions evoked by the terrain that makes understanding the cultural past of Craters of the Moon so important in relating a seemingly-dead environment to visitors today. -

Tossup: Roentgen, Archimedes, Gauss, Balboa and Vasco Da

Tossup

Roentgen, Archimedes, Gauss, Balboa and Vasco Da Gama all have one bigger than Einstein's. Rutherford's is inside of Clavius'. The three largest are Hertzsprung, Korolev and Apollo. For 10 points, what are these objects, seven of which will be named for the astronauts who ... shuttle disaster? Lunar Craters

This Athenian was an admirer of Socrates and ... him such works as the Symposium and the Apology of Socrates. ... best known, however, as an historian, the author of the Hellenica and the Anabasis, an account of a mercenary expedition into Asia Minor. For 10 points, name ... century B.C. author. Xenophon

"The Seizure of $300 from a Pig Woman in Beverly Hills," "Savage Lucy ... 'Teeth Like Baseballs, Eyes Like Jellied Fire,'" and "A Terrible Experience with Extremely Dangerous Drugs." These are chapters from, for 10 points, what book? Fear and Loathing in Las Vegas (by Hunter S. Thompson)

Lazlo Toth attacked her with a hammer, screaming "I am Jesus Christ". He severed her left arm, smashed her nose and disfigured her left eye. That was in 1972, and today she and her son ... behind a glass wall in St. Peter's Basilica, the scars barely visible. For 10 points, who are they? The Pietà. Accept: Christ and the Virgin Mary

In 1649 she invited Rene Descartes to her court to teach her philosophy. She had her lessons at 5 in the morning; Descartes, more used to rising at noon, caught a ... and died. For 10 points, who was this Swedish queen, the daughter of Gustavus ... Christina

Tossup

Its biological name Ailuropod -

Summary of Sexual Abuse Claims in Chapter 11 Cases of Boy Scouts of America

Summary of Sexual Abuse Claims in Chapter 11 Cases of Boy Scouts of America

There are approximately 101,135 sexual abuse claims filed. Of those claims, the Tort Claimants’ Committee estimates that there are approximately 83,807 unique claims if the amended and superseded claims and multiple claims filed on account of the same survivor are removed. The summary of sexual abuse claims below uses that set of 83,807 claims.

The Tort Claimants’ Committee has broken down the sexual abuse claims into various categories for the purpose of disclosing where and when the sexual abuse claims arose and the identity of certain of the parties that are implicated in the alleged sexual abuse.

Attached hereto as Exhibit 1 is a chart that shows the sexual abuse claims broken down by the year in which they first arose. Please note that approximately 10,500 claims did not provide a date for when the sexual abuse occurred. As a result, those claims have not been assigned a year in which the abuse first arose.

Attached hereto as Exhibit 2 is a chart that shows the claims broken down by the state or jurisdiction in which they arose. Please note that approximately 7,186 claims did not provide a location of abuse. Those claims are reflected by YY or ZZ in the codes used to identify the applicable state or jurisdiction. Those claims have not been assigned a state or other jurisdiction.

Attached hereto as Exhibit 3 is a chart that shows the claims broken down by the Local Council implicated in the sexual abuse. -

Identification of Buried Lunar Impact Craters from GRAIL Data And

Geophysical Research Letters, RESEARCH LETTER, 10.1002/2015GL067394

Identification of buried lunar impact craters from GRAIL data and implications for the nearside maria

Alexander J. Evans (1,2,3), Jason M. Soderblom (1), Jeffrey C. Andrews-Hanna (4), Sean C. Solomon (2,5), and Maria T. Zuber (1)

(1) Department of Earth, Atmospheric and Planetary Sciences, Massachusetts Institute of Technology, Cambridge, Massachusetts, USA
(2) Lamont-Doherty Earth Observatory, Columbia University, Palisades, New York, USA
(3) Now at Planetary Science Directorate, Southwest Research Institute, Boulder, Colorado, USA
(4) Planetary Science Directorate, Southwest Research Institute, Boulder, Colorado, USA
(5) Department of Terrestrial Magnetism, Carnegie Institution of Washington, Washington, District of Columbia, USA

Key Points:
• One-hundred four buried crater candidates on the lunar nearside are identified from gravity anomalies
• The density, thickness, and volume of infilling deposits are determined

Supporting Information:
• Figures S1–S7 and Table S1 and S2 Caption
• Table S1
• Table S2

Correspondence to: A. J. Evans, [email protected]

Abstract: Gravity observations from the dual Gravity Recovery and Interior Laboratory (GRAIL) spacecraft have revealed more than 100 quasi-circular mass anomalies, 26–300 km in diameter, on the lunar nearside. These anomalies are interpreted to be impact craters filled primarily by mare deposits, and their characteristics are consistent with those of impact structures that formed prior to, and during, intervals of flooding of feldspathic terrane by mare basalt lavas. We determine that mare deposits have an average density contrast of $850^{+300}_{-200}$ kg m$^{-3}$ relative to the surrounding crust. The presence of a large population of volcanically buried craters with minimal topographic expression and diameters up to 300 km requires an average nearside mare

Citation: Evans, A. J., J. M. Soderblom, J. C. Andrews-Hanna, S. -

Studies of the Chesapeake Bay Impact Structure— Introduction and Discussion

Studies of the Chesapeake Bay Impact Structure—Introduction and Discussion

By J. Wright Horton, Jr., David S. Powars, and Gregory S. Gohn

Chapter A of Studies of the Chesapeake Bay Impact Structure—The USGS-NASA Langley Corehole, Hampton, Virginia, and Related Coreholes and Geophysical Surveys. Edited by J. Wright Horton, Jr., David S. Powars, and Gregory S. Gohn

Prepared in cooperation with the Hampton Roads Planning District Commission, Virginia Department of Environmental Quality, and National Aeronautics and Space Administration Langley Research Center

Professional Paper 1688. U.S. Department of the Interior, U.S. Geological Survey

Contents: Abstract; Introduction; Previous Work; The Chesapeake Bay Impact Structure; Form and Structure; Character of the Target; Land Surface Features; The USGS-NASA Langley Core; Significant Results; Crystalline Basement Rocks; Impact-Modified and Impact-Generated Sediments; Postimpact Sediments; Water Depths—Impact and Postimpact; Dating the Impact Event; Structural Interpretation of Seismic Data; Interpretation of Audio-Magnetotelluric (AMT) Soundings; Hydrologic Effects and Water-Resources Implications; Conceptual Model; Acknowledgments; References Cited; Appendix A1. Abstracts of Research on the Chesapeake Bay Impact Structure, 2001–2003

Figures

A1. Regional map showing the location of the Chesapeake Bay impact structure, the USGS-NASA Langley corehole at Hampton, Va., and some other coreholes in southeastern Virginia.

A2. Map of southeastern Virginia showing locations of recently completed coreholes and geophysical surveys in relation to the Chesapeake Bay impact structure.

A3. Satellite image of Chesapeake Bay showing location of the buried impact structure and nearby Mesozoic to Cenozoic tectonic features.