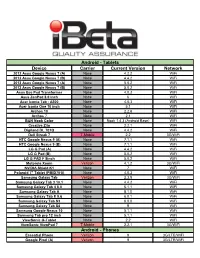

Device Carrier Current Version Network Android

File type: PDF, 16 pages, 1020 KB


Recommended publications


The Technology That Brings Together All Things Mobile

NFC: The Technology That Brings Together All Things Mobile. Philippe Benitez, Wednesday, June 4th, 2014. NFC enables fast, secure, mobile contactless services (Card Emulation Mode, Reader Mode, P2P Mode) for both payment and non-payment services: hospitality (hotel room keys), mass transit (passes and limited-use tickets), education (student badges), airlines (frequent flyer cards and boarding passes), enterprise and government (employee badges), automotive (car sharing, car rental, fleet management), residential (access), payment (secure mobile payments), events (access to stadiums and large venues), and loyalty and rewards (an enhanced consumer experience).

We are benefiting from previous experiences (timeline: 1996, 2001, 2003, 2005, 2007, 2014). Barriers to adoption are disappearing: NFC handsets have become mainstream, terminalization is being driven by ecosystem upgrades, and TSM provisioning infrastructure has been deployed. 256 handset models are now in market worldwide, including Gionee Elife E7, LG G Pro 2, Nokia Lumia 1020, Samsung Galaxy Note, Sony Xperia P, Acer E320 Liquid Express, Google Nexus 10, LG G2, Nokia Lumia 1520, Samsung Galaxy Note 3, Sony Xperia S, Acer Liquid Glow, Google Nexus 5, LG Mach, Nokia Lumia 2520, Samsung Galaxy Note II, Sony Xperia Sola, Adlink IMX-2000, Google Nexus 7 (2013), LG Optimus 3D Max, Nokia Lumia 610 NFC, Samsung…

Flash Yellow Powered by the Nationwide Sprint 4G LTE Network Table of Contents

How to Build a Successful Business with Flash Yellow, powered by the Nationwide Sprint 4G LTE Network. This playbook contains everything you need to build a successful business with Flash Wireless!

Table of Contents
1. Understand your customer's needs (page 3)
2. Recommend Flash Yellow in strong service areas (page 4)
   a. Strong service map (page 5)
3. Help your customer decide on a service plan (page 6)
4. Ensure your customer is on the right device (page 8)
   a. If they are buying a new device (page 9)
   b. If they are bringing their own device (page 15)
5. Device Appendix: Is your customer's device compatible? (page 28)

Step 1. Understand your customer's needs
• Mostly voice and text: the Flash Yellow network is a good fit in most metropolitan areas. For suburban and rural areas, check whether the customer lives in a strong Flash Yellow service area.
• Average to heavy data user: check whether the customer lives in a strong Flash Yellow service area (see page 5). If not, guide them to the Flash Green or Flash Purple network so they get the best customer experience.
Remember, a good customer experience is the key to keeping a long-term customer!

Step 2. Recommend Flash Yellow in strong areas
• Review Flash Yellow's strong service areas (see page 5). Focus your Flash Yellow customer acquisition efforts on these areas to ensure high customer satisfaction and retention.
  ◦ This is a top 24 list; the network is strong in other areas too.

Polycom® RealPresence® Mobile for Android® Release Notes

RELEASE NOTES | Version 3.9 | February 2018 | 3725-82877-021A1
Polycom® RealPresence® Mobile for Android®

Contents: What's New in Release 3.9 (p. 1); Release History (p. 3); Security Updates (p. 4); Hardware and Software Requirements (p. 4); Products Tested with This Release (p. 5); Install and Uninstall RealPresence Mobile (p. 7); System Constraints and Limitations (p. 7); Resolved Issues (p. 10); Known Issues (p. 10); Interoperability Issues (p. 12); Enterprise Scalable Video Coding (SVC) Solution (p. 13); Access Media Statistics (p. 15); Prepare Your Device for Mutual Transport Layer Security (p. 17); Get Help (p. 19); Copyright and Trademark Information (p. 19).

What's New in Release 3.9
Polycom® RealPresence® Mobile version 3.9 includes the features and functionality of previous releases, plus the following new features.

Dropped Support for Automatic Detection of Polycom® SmartPairing™
From this version on, the RealPresence Mobile video collaboration software drops support for the automatic detection feature of Polycom SmartPairing. Nearby Polycom HDX or RealPresence Group Series systems are no longer listed by IP address automatically. You must enter their IP addresses manually to pair with them.

New Device and OS Support
Refer to Hardware and Software Requirements for more information.

Release History
The following table lists the release history of the Polycom RealPresence Mobile application.
Release History: Release…

A Survey of Enabling Technologies in Successful Consumer Digital Imaging Products

R06. R. Fontaine, TechInsights

Abstract: The image quality and customized functionality of small-pixel mobile camera systems continue to bring true product differentiation to smartphones. Recently, phase detection autofocus (PDAF) pixels, pixel isolation structures, chip stacking and other technology elements have contributed to a remarkable increase in mobile camera performance. Other imaging applications continue to benefit from small-pixel development efforts as foundries and IDMs transfer leading edge technology to active pixel arrays for emerging imaging and sensing applications.

Index Terms: CMOS image sensor, through silicon via, Cu-Cu hybrid bonding, direct bonding interconnect, homogeneous wafer-to-wafer bonding (oxide bonding), stacked chip, phase detection autofocus, image signal processor.

I. INTRODUCTION …

The early trend for both types of masked PDAF pixels was to deploy them within a relatively small central region of the active pixel array, or as linear arrays (periodic rows of PDAF pixel pairs). Today, masked pixel PDAF arrays in marquee products occupy more than 90% of the host active pixel arrays and display a trend of increasing density generation-over-generation.

A. Masked PDAF in Front-Illuminated CCDs
Hybrid PDAF systems were introduced to the camera market by a FujiFilm press release in July 2010. The FinePix Z800EXR featured a hybrid AF system with a stated AF performance of 0.158 s [1]. This front-illuminated charge-coupled device (CCD) imager, fabricated by Toshiba, employed metal half-masks in two of 32 pixels within a rectangular PDAF subarray occupying the central ~7% of the active pixel array [2].
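A rough worked estimate puts the FujiFilm/Toshiba figures in proportion. It assumes that the two-in-32 masking pattern repeats uniformly across the whole subarray, which the excerpt does not state. Under that assumption, masked PDAF pixels made up well under one percent of the active pixel array:

$$
f_{\text{masked}} \approx \frac{2}{32} \times 0.07 = 0.0625 \times 0.07 \approx 0.0044 \quad (\approx 0.44\%)
$$

By area, that early subarray covered about 7% of the array. The PDAF arrays in today's marquee products cover more than 90%.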

EchoLock: Towards Low Effort Mobile User Identification

Yilin Yang (Rutgers University, yy450@scarletmail.rutgers.edu), Chen Wang (Louisiana State University, [email protected]), Yingying Chen (Rutgers University, yingche@scarletmail.rutgers.edu), Yan Wang (Binghamton University, [email protected])

ABSTRACT
User identification plays a pivotal role in how we interact with our mobile devices. Many existing authentication approaches require active input from the user or specialized sensing hardware, and studies on mobile device usage show significant interest in less inconvenient procedures. In this paper, we propose EchoLock, a low effort identification scheme that validates the user by sensing hand geometry via commodity microphones and speakers. These acoustic signals produce distinct structure-borne sound reflections when contacting the user's hand, which can be used to differentiate between people based on how they hold their mobile devices. We process these reflections to derive unique acoustic features in both the time and frequency domains, which can effectively represent physiological and behavioral traits such as hand contours, finger sizes, holding strength, and gesture. Furthermore, learning-based algorithms are developed to robustly identify the user under various environments and conditions. We conduct extensive experiments with 20 participants using different hardware setups in key use case scenarios and study various attack models to demonstrate…

Figure 1: Capture of hand biometric information embedded in structure-borne sound using commodity microphones and speakers.

… convenient practices [37]. Techniques such as facial recognition or fingerprinting provide fast validation without requiring considerable effort from the user, but demand dedicated hardware components that may not be available on all devices. This is of particular importance in markets of developing countries, where devices such as the Huawei IDEOS must forgo multiple utilities in order to maintain affordable price points (e.g. …
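The abstract says EchoLock derives acoustic features from the hand reflections in both the time and frequency domains, but this excerpt does not list them. The following is a minimal Python/NumPy sketch of that kind of per-frame feature extraction. The specific features (RMS energy, peak level, zero-crossing rate, spectral centroid, band-energy shares) and the function name `echo_features` are hypothetical stand-ins, not the paper's feature set.

```python
import numpy as np


def echo_features(frame: np.ndarray, fs: float, n_bands: int = 4) -> dict:
    """Generic time- and frequency-domain features of one recorded echo frame.

    Illustrative stand-in features only; EchoLock's actual feature set is not
    described in this excerpt.
    """
    x = np.asarray(frame, dtype=float)
    if x.size < 2 or not np.any(x):
        raise ValueError("need a non-silent frame of at least two samples")

    # Time domain: overall energy, peak level, and how often the signal changes sign.
    rms = float(np.sqrt(np.mean(x ** 2)))
    peak = float(np.max(np.abs(x)))
    negative = np.signbit(x)
    zcr = float(np.count_nonzero(negative[1:] != negative[:-1]) / (x.size - 1))

    # Frequency domain: one-sided magnitude spectrum of the frame.
    magnitude = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    centroid_hz = float(np.sum(freqs * magnitude) / np.sum(magnitude))

    # Share of spectral energy in n_bands roughly equal-width bands from 0 Hz to fs/2.
    power = magnitude ** 2
    band_energy = [float(band.sum() / power.sum()) for band in np.array_split(power, n_bands)]

    return {"rms": rms, "peak": peak, "zcr": zcr,
            "centroid_hz": centroid_hz, "band_energy": band_energy}


# Example: a synthetic 1 kHz tone standing in for a recorded reflection.
fs = 8000.0
n = np.arange(800)
tone = np.sin(2 * np.pi * 1000 * n / fs + 0.1)
feats = echo_features(tone, fs)
print(round(feats["centroid_hz"]), round(feats["rms"], 3))  # 1000 0.707
```

In a scheme like the one the abstract describes, feature vectors of this kind would feed a trained classifier. The paper's learning-based models are not reproduced here.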

Factory Model Device Model

| Factory Model | Device Model |
|---|---|
| Acer A1-713 | acer_aprilia |
| Acer A1-811 | mango |
| Acer A1-830 | ducati |
| Acer A3-A10 | G1EA3 |
| Acer A3-A10 | mtk6589_e_lca |
| Acer A3-A10 | zara |
| Acer A3-A20 | acer_harley |
| Acer A3-A20FHD | acer_harleyfhd |
| Acer Acer E320-orange | C6 |
| Acer Aspire A3 | V7 |
| Acer AT390 | T2 |
| Acer B1-723 | oban |
| Acer B1-730 | EverFancy D40 |
| Acer B1-730 | vespatn |
| Acer CloudMobile S500 | a9 |
| Acer DA220HQL | lenovo72_we_jb3 |
| Acer DA222HQL | N451 |
| Acer DA222HQLA | A66 |
| Acer DA222HQLA | Flare S3 Power |
| Acer DA226HQ | tianyu72_w_hz_kk |
| Acer E330 | C7 |
| Acer E330 | GT-N7105T |
| Acer E330 | STUDIO XL |
| Acer E350 | C8n |
| Acer E350 | wiko |
| Acer G100W | maya |
| Acer G1-715 | A510s |
| Acer G1-715 | e1808_v75_hjy1_5640_maxwest |
| Acer Icona One 7 | vespa |
| Acer Iconia One 7 | AT1G* |
| Acer Iconia One 7 | G1-725 |
| Acer Iconia One 7 | m72_emmc_s6_pcb22_1024_8g1g_fuyin |
| Acer Iconia One 7 | vespa2 |
| Acer Iconia One 8 | vespa8 |
| Acer Iconia Tab 7 | acer_apriliahd |
| Acer Iconia Tab 8 | ducati2fhd |
| Acer Iconia Tab 8 | ducati2hd |
| Acer Iconia Tab 8 | ducati2hd3g |
| Acer Iconia Tab 8 | Modelo II - Professor |
| Acer Iconia Tab A100 (VanGogh) | vangogh |
| Acer Iconia Tab A200 | s7503 |
| Acer Iconia Tab A200 | SM-N9006 |
| Acer Iconia Tab A501 | ELUGA_Mark |
| Acer Iconia Tab A501 | picasso |
| Acer Iconia Tab A510 | myPhone |
| Acer Iconia Tab A510 | picasso_m |
| Acer Iconia Tab A510 | ZUUM_M50 |
| Acer Iconia Tab A701 | picasso_mf |
| Acer Iconia Tab A701 | Revo_HD2 |
| Acer Iconia TalkTab 7 | acer_a1_724 |
| Acer Iconia TalkTab 7 | AG CHROME ULTRA |
| Acer Liquid | a1 |
| Acer Liquid C1 | I1 |
| Acer Liquid C1 | l3365 |
| Acer Liquid E1 | C10 |
| Acer Liquid E2 | C11 |
| Acer Liquid E3 | acer_e3 |
| Acer Liquid E3 | acer_e3n |
| Acer Liquid E3 | LS900 |
| Acer Liquid E3 | Quasar |
| Acer Liquid E600 | e600 |
| Acer Liquid… | … |

Ibeta-Device-Invento

Android - Tablets

| Device | Carrier | Current Version | Network |
|---|---|---|---|
| 2012 Asus Google Nexus 7 (A) | None | 4.2.2 | WiFi |
| 2012 Asus Google Nexus 7 (B) | None | 4.4.2 | WiFi |
| 2013 Asus Google Nexus 7 (A) | None | 5.0.2 | WiFi |
| 2013 Asus Google Nexus 7 (B) | None | 5.0.2 | WiFi |
| Asus Eee Pad Transformer | None | 4.0.3 | WiFi |
| Asus ZenPad 8.0 inch | None | 6 | WiFi |
| Acer Iconia Tab - A500 | None | 4.0.3 | WiFi |
| Acer Iconia One 10 inch | None | 5.1 | WiFi |
| Archos 10 | None | 2.2.6 | WiFi |
| Archos 7 | None | 2.1 | WiFi |
| B&N Nook Color | None | Nook 1.4.3 (Android Base) | WiFi |
| Creative Ziio | None | 2.2.1 | WiFi |
| Digiland DL 701Q | None | 4.4.2 | WiFi |
| Dell Streak 7 | T-Mobile | 2.2 | 3G/WiFi |
| HTC Google Nexus 9 (A) | None | 7.1.1 | WiFi |
| HTC Google Nexus 9 (B) | None | 7.1.1 | WiFi |
| LG G Pad (A) | None | 4.4.2 | WiFi |
| LG G Pad (B) | None | 5.0.2 | WiFi |
| LG G PAD F 8inch | None | 5.0.2 | WiFi |
| Motorola Xoom | Verizon | 4.1.2 | 3G/WiFi |
| NVIDIA Shield K1 | None | 7 | WiFi |
| Polaroid 7" Tablet (PMID701i) | None | 4.0.3 | WiFi |
| Samsung Galaxy Tab | Verizon | 2.3.5 | 3G/WiFi |
| Samsung Galaxy Tab 3 10.1 | None | 4.4.2 | WiFi |
| Samsung Galaxy Tab 4 8.0 | None | 5.1.1 | WiFi |
| Samsung Galaxy Tab A | None | 8.1.0 | WiFi |
| Samsung Galaxy Tab E 9.6 | None | 6.0.1 | WiFi |
| Samsung Galaxy Tab S3 | None | 8.0.0 | WiFi |
| Samsung Galaxy Tab S4 | None | 9 | WiFi |
| Samsung Google Nexus 10 | None | 5.1.1 | WiFi |
| Samsung Tab pro 12 inch | None | 5.1.1 | WiFi |
| ViewSonic G-Tablet | None | 2.2 | WiFi |
| ViewSonic ViewPad 7 | T-Mobile | 2.2.1 | 3G/WiFi |

Android - Phones

| Device | Carrier | Current Version | Network |
|---|---|---|---|
| Essential Phone | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (A) | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (B) | Verizon | 8.1 | 3G/LTE/WiFi |
| Google Pixel (C) | Factory Unlocked | 9 | 3G/LTE/WiFi |
| Google Pixel 2 | Verizon | 8.1 | 3G/LTE/WiFi |
| Google Pixel… | … | … | … |

SECOND AMENDED COMPLAINT 3:14-Cv-582-JD

Case 3:14-cv-00582-JD Document 51 Filed 11/10/14 Page 1 of 19

EDUARDO G. ROY (Bar No. 146316)
DANIEL C. QUINTERO (Bar No. 196492)
JOHN R. HURLEY (Bar No. 203641)
PROMETHEUS PARTNERS L.L.P.
220 Montgomery Street, Suite 1094, San Francisco, CA 94104
Telephone: 415.527.0255
Attorneys for Plaintiff DANIEL NORCIA

UNITED STATES DISTRICT COURT
NORTHERN DISTRICT OF CALIFORNIA

DANIEL NORCIA, on his own behalf and on behalf of all others similarly situated, Plaintiffs, v. SAMSUNG TELECOMMUNICATIONS AMERICA, LLC, a New York Corporation, and SAMSUNG ELECTRONICS AMERICA, INC., a New Jersey Corporation, Defendants.

Case No.: 3:14-cv-582-JD
SECOND AMENDED CLASS ACTION COMPLAINT FOR:
1. VIOLATION OF CALIFORNIA CONSUMERS LEGAL REMEDIES ACT, CIVIL CODE §1750, et seq.
2. UNLAWFUL AND UNFAIR BUSINESS PRACTICES, CALIFORNIA BUS. & PROF. CODE §17200, et seq.
3. FALSE ADVERTISING, CALIFORNIA BUS. & PROF. CODE §17500, et seq.
4. FRAUD
JURY TRIAL DEMANDED

Plaintiff DANIEL NORCIA, having not previously amended as a matter of course pursuant to Fed.R.Civ.P. 15(a)(1)(B), hereby exercises that right by amending within 21 days of service of Defendants’ Motion to Dismiss filed October 20, 2014 (ECF 45). Individually and on behalf of all others similarly situated, Daniel Norcia complains and alleges, by and through his attorneys, upon personal knowledge and information and belief, as follows:

NATURE OF THE ACTION
1. …

In the United States District Court for the Eastern District of Texas Marshall Division

Case 2:18-cv-00343-JRG Document 24 Filed 12/12/18 Page 1 of 32 PageID #: 142

IN THE UNITED STATES DISTRICT COURT FOR THE EASTERN DISTRICT OF TEXAS, MARSHALL DIVISION

EVS CODEC TECHNOLOGIES, LLC and SAINT LAWRENCE COMMUNICATIONS, LLC, Plaintiffs, v. LG ELECTRONICS, INC., LG ELECTRONICS U.S.A., INC., and LG ELECTRONICS MOBILECOMM U.S.A., INC., Defendants.
Case No. 2:18-cv-00343-JRG
JURY TRIAL DEMANDED

FIRST AMENDED COMPLAINT FOR PATENT INFRINGEMENT

EVS Codec Technologies, LLC (“ECT”) and Saint Lawrence Communications, LLC (“SLC”) (collectively “Plaintiffs”) hereby submit this First Amended Complaint for patent infringement against Defendants LG Electronics, Inc. (“LGE”), LG Electronics U.S.A., Inc. (“LGUSA”), and LG Electronics Mobilecomm U.S.A., Inc. (“LGEM”) (collectively “LG” or “Defendants”) and state as follows:

THE PARTIES
1. ECT is a Texas limited liability company with a principal place of business at 2323 S. Shepherd, 14th floor, Houston, Texas 77019-7024.
2. SLC is a Texas limited liability company, having a principal place of business at 6136 Frisco Square Blvd., Suite 400, Frisco, Texas 75034.
3. On information and belief, Defendant LGE is a Korean corporation with a principal place of business at LG Twin Towers, 128 Yeoui-daero, Yeongdungpo-gu, Seoul, 07366, South Korea. On information and belief, LGE is the entity that manufactures the LG-branded products sold in the United States, including the accused products in this case. On information and belief, in addition to making the products, LGE is responsible for research and development, product design, and sourcing of components.

February 2010 AdMob Mobile Metrics Report

AdMob Mobile Metrics Report, February 2010

AdMob serves ads for more than 15,000 mobile Web sites and applications around the world. AdMob stores and analyzes the data from every ad request, impression, and click and uses this to optimize ad matching in its network. This monthly report offers a snapshot of its data to provide insight into trends in the mobile ecosystem. Find archived reports and sign up for future report notifications at metrics.admob.com.

New and Noteworthy
For this month's report, we separate the traffic in our network into three categories (smartphones, feature phones, and mobile Internet devices). We examine the growth rate of each over the past year and look at the traffic share of smartphone operating systems and of feature phone manufacturers.

* In February 2010, smartphones accounted for 48% of AdMob's worldwide traffic, up from 35% in February 2009. The strong growth of iPhone and Android traffic, fueled by heavy application usage, was primarily responsible for the increase. In absolute terms, smartphone traffic increased 193% over the last year.
* Feature phones declined from 58% to 35% of AdMob's total traffic as users began switching to smartphones. The feature phone category lost share, but its absolute traffic still grew 31% year-over-year.
* The mobile Internet devices category experienced the strongest growth of the three, rising to 17% of traffic in AdMob's network in February 2010. The iPod touch is responsible for 93% of this traffic; other devices include the Sony PSP and Nintendo DSi.
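The shares and growth rates above can be cross-checked with a little arithmetic. Because the three categories partition all traffic, the February 2009 share of mobile Internet devices (MIDs) must be the remainder, 100 − 35 − 58 = 7%. Each category's share and absolute growth then implies a growth factor for the network as a whole. The minimal Python sketch below works through this. The variable and function names are illustrative only.

```python
# Category shares of AdMob's worldwide traffic, in percent, as given in the report.
SHARE_FEB_2009 = {"smartphones": 35, "feature_phones": 58}
SHARE_FEB_2010 = {"smartphones": 48, "feature_phones": 35, "mobile_internet_devices": 17}

# The three categories partition all traffic, so the 2009 MID share is the remainder.
SHARE_FEB_2009["mobile_internet_devices"] = 100 - sum(SHARE_FEB_2009.values())

# Reported absolute year-over-year growth, as multipliers (193% -> 2.93, 31% -> 1.31).
ABSOLUTE_GROWTH = {"smartphones": 2.93, "feature_phones": 1.31}


def implied_total_growth(category: str) -> float:
    """Network-wide traffic multiplier implied by one category's shares and growth.

    category_2010 = growth * category_2009 and category_year = share_year * total_year,
    so total_2010 / total_2009 = share_2009 * growth / share_2010.
    """
    return SHARE_FEB_2009[category] * ABSOLUTE_GROWTH[category] / SHARE_FEB_2010[category]


def implied_mid_growth(total_growth: float) -> float:
    """MID traffic multiplier, given a network-wide traffic multiplier."""
    share_ratio = SHARE_FEB_2010["mobile_internet_devices"] / SHARE_FEB_2009["mobile_internet_devices"]
    return share_ratio * total_growth


for cat in ABSOLUTE_GROWTH:
    total = implied_total_growth(cat)
    print(f"{cat}: total traffic x{total:.2f}, MID traffic x{implied_mid_growth(total):.2f}")
# smartphones: total traffic x2.14, MID traffic x5.19
# feature_phones: total traffic x2.17, MID traffic x5.27
```

Both categories imply that total network traffic grew roughly 2.1 to 2.2 times. That would put MID traffic at about 5.2 times its February 2009 level, consistent with the report's statement that MIDs grew fastest. The published shares are rounded to whole percentages, so these derived figures are approximate.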

T-Mobile® Service Fees/Deductibles

The service fees and deductibles below apply to the following programs: JUMP! Plus™ and Premium Device Protection Plus.

| | Tier 1 | Tier 2 | Tier 3 |
|---|---|---|---|
| Service fee per claim (accidental damage) | $20 | $20 | $20 |
| Deductible per claim (loss and theft) | $20 | $50 | $100 |
| Unrecovered equipment charge | up to $200 | up to $300 | up to $500 |

Devices listed for Tiers 1–3: ALCATEL A30, ALCATEL ONETOUCH Fierce XL, LG G Stylo, ALCATEL Aspire, ALCATEL ONETOUCH POP 7, Samsung Galaxy Tab A 8.0, ALCATEL GO FLIP, HTC Desire 530, Samsung Gear S2, ALCATEL LinkZone Hotspot, HTC Desire 626s, ALCATEL ONETOUCH POP Astro, LG G Pad F, Coolpad Catalyst, LG G Pad X 8.0, Coolpad Rogue, LG K10, Kyocera Rally, LG K20, LG 450, LG Stylo 3 Plus, LG Aristo, LG Stylo Plus, LG K7, Kyocera Hydro Wave C6740, LG Leon LTE, Microsoft Lumia 640, Microsoft Lumia 435, Samsung Galaxy Core Prime, T-Mobile LineLink, Samsung Galaxy Grand Prime, ZTE Avid Plus, Samsung Galaxy J3 Prime, ZTE Avid Trio, Samsung Galaxy J7, ZTE Cymbal, Samsung Galaxy Tab E, ZTE Falcon Z-917 Hotspot, ZTE SyncUp DRIVE, ZTE Obsidian, ZTE Quartz, ZTE Zmax Pro.

| | Tier 4 | Tier 5 |
|---|---|---|
| Service fee per claim (accidental damage) | $99 | $99 |
| Deductible per claim (loss and theft) | $150 | $175 |

…

Device Support for Beacon Transmission with Android 5+

The list below identifies the Android device builds that are able to transmit as beacons. The ability to transmit as a beacon requires Bluetooth LE advertisement capability, which may or may not be supported by a device's firmware.

| Manufacturer | Model | Build | Android version | Transmits as beacon |
|---|---|---|---|---|
| Acer | T01 | LMY47V | 5.1.1 | yes |
| Amazon | KFFOWI | LVY48F | 5.1.1 | yes |
| archos | Archos 80d Xenon | LMY47I | 5.1 | yes |
| asus | ASUS_T00N | MMB29P | 6.0.1 | yes |
| asus | ASUS_X008D | MRA58K | 6.0 | yes |
| asus | ASUS_Z008D | LRX21V | 5.0 | yes |
| asus | ASUS_Z00AD | LRX21V | 5.0 | yes |
| asus | ASUS_Z00AD | MMB29P | 6.0.1 | yes |
| asus | ASUS_Z00ED | LRX22G | 5.0.2 | yes |
| asus | ASUS_Z00ED | MMB29P | 6.0.1 | yes |
| asus | ASUS_Z00LD | LRX22G | 5.0.2 | yes |
| asus | ASUS_Z00LD | MMB29P | 6.0.1 | yes |
| asus | ASUS_Z00UD | MMB29P | 6.0.1 | yes |
| asus | ASUS_Z00VD | LMY47I | 5.1 | yes |
| asus | ASUS_Z010D | MMB29P | 6.0.1 | yes |
| asus | ASUS_Z011D | LRX22G | 5.0.2 | yes |
| asus | ASUS_Z016D | MXB48T | 6.0.1 | yes |
| asus | ASUS_Z017DA | MMB29P | 6.0.1 | yes |
| asus | ASUS_Z017DA | NRD90M | 7.0 | yes |
| asus | ASUS_Z017DB | MMB29P | 6.0.1 | yes |
| asus | ASUS_Z017D | MMB29P | 6.0.1 | yes |
| asus | P008 | MMB29M | 6.0.1 | yes |
| asus | P024 | LRX22G | 5.0.2 | yes |
| blackberry | STV100-3 | MMB29M | 6.0.1 | yes |
| BLU | BLU STUDIO ONE | LMY47D | 5.1 | yes |
| BLUBOO | XFire | LMY47D | 5.1 | yes |
| BLUBOO | Xtouch | LMY47D | 5.1 | yes |
| bq | Aquaris E5 HD | LRX21M | 5.0 | yes |
| Coolpad | C106-7 | ZBXCNCU5801712 291S | 6.0.1 | yes |
| Coolpad | Coolpad 3320A | LMY47V | 5.1.1 | yes |
| Coolpad | Coolpad 3622A | LMY47V | 5.1.1 | yes |
| CQ | CQ-BOX | 2.1.0-d158f31 | 5.1.1 | yes |
| CQ | CQ-BOX | 2.1.0-f9c6a47 | 5.1.1 | yes |
| DANY TECHNOLOGIES HK LTD | Genius Talk T460 | LMY47I | 5.1 | yes |
| DOOGEE | F5 | LMY47D | 5.1 | yes |
| DOOGEE | X5 | LMY47I | 5.1 | yes |
| DOOGEE | X5max | MRA58K | 6.0 | yes |
| elephone | Elephone P7000 | LRX21M | 5.0 | yes |
| … | Elephone P8000 | … | … | … |
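The list's columns appear to correspond to the values Android exposes as `android.os.Build.MANUFACTURER`, `Build.MODEL`, `Build.ID` and `Build.VERSION.RELEASE`. This is an inference from values such as `ASUS_Z00AD` / `LRX21V`; the list does not say so. The minimal Python sketch below shows how a backend might check whether a reported build appears in the list as beacon-capable. It assumes the rows have been transcribed into records. The record type `BeaconBuild` and the helpers `version_tuple` and `listed_as_transmitter` are hypothetical. The lookup matches on the build ID as well as the model because the same model appears with several builds.

```python
from typing import NamedTuple, Tuple


class BeaconBuild(NamedTuple):
    """One row of the beacon-capability list (field names are hypothetical)."""
    manufacturer: str
    model: str
    build_id: str
    android_version: str
    can_transmit: bool


# A few rows transcribed from the list; a real service would load all of them.
KNOWN_BUILDS = [
    BeaconBuild("asus", "ASUS_Z00AD", "LRX21V", "5.0", True),
    BeaconBuild("asus", "ASUS_Z00AD", "MMB29P", "6.0.1", True),
    BeaconBuild("DOOGEE", "X5max", "MRA58K", "6.0", True),
    BeaconBuild("Coolpad", "Coolpad 3622A", "LMY47V", "5.1.1", True),
]


def version_tuple(version: str) -> Tuple[int, ...]:
    """Turn a dotted Android release string such as '5.1.1' into (5, 1, 1)."""
    return tuple(int(part) for part in version.split("."))


def listed_as_transmitter(manufacturer: str, model: str, build_id: str) -> bool:
    """True if this exact build is in the list, marked 'yes', and on Android 5.0+."""
    key = (manufacturer.lower(), model, build_id)
    for row in KNOWN_BUILDS:
        if (row.manufacturer.lower(), row.model, row.build_id) == key:
            # The BLE advertising APIs first appeared in Android 5.0 (API level 21).
            return row.can_transmit and version_tuple(row.android_version) >= (5, 0)
    # Builds missing from the list are treated as unknown, not as capable.
    return False


print(listed_as_transmitter("ASUS", "ASUS_Z00AD", "MMB29P"))  # True
print(listed_as_transmitter("asus", "ASUS_Z00AD", "AAAAAA"))  # False
```

A static list only records what was observed for particular builds. On the device itself, the runtime check is `BluetoothAdapter.isMultipleAdvertisementSupported()`, and `BluetoothAdapter.getBluetoothLeAdvertiser()` returns null when Bluetooth is off or LE advertising is not supported.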