Echolock: Towards Low Effort Mobile User Identification

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Factory Model Device Model

Factory Model Device Model Acer A1-713 acer_aprilia Acer A1-811 mango Acer A1-830 ducati Acer A3-A10 G1EA3 Acer A3-A10 mtk6589_e_lca Acer A3-A10 zara Acer A3-A20 acer_harley Acer A3-A20FHD acer_harleyfhd Acer Acer E320-orange C6 Acer Aspire A3 V7 Acer AT390 T2 Acer B1-723 oban Acer B1-730 EverFancy D40 Acer B1-730 vespatn Acer CloudMobile S500 a9 Acer DA220HQL lenovo72_we_jb3 Acer DA222HQL N451 Acer DA222HQLA A66 Acer DA222HQLA Flare S3 Power Acer DA226HQ tianyu72_w_hz_kk Acer E330 C7 Acer E330 GT-N7105T Acer E330 STUDIO XL Acer E350 C8n Acer E350 wiko Acer G100W maya Acer G1-715 A510s Acer G1-715 e1808_v75_hjy1_5640_maxwest Acer Icona One 7 vespa Acer Iconia One 7 AT1G* Acer Iconia One 7 G1-725 Acer Iconia One 7 m72_emmc_s6_pcb22_1024_8g1g_fuyin Acer Iconia One 7 vespa2 Acer Iconia One 8 vespa8 Acer Iconia Tab 7 acer_apriliahd Acer Iconia Tab 8 ducati2fhd Acer Iconia Tab 8 ducati2hd Acer Iconia Tab 8 ducati2hd3g Acer Iconia Tab 8 Modelo II - Professor Acer Iconia Tab A100 (VanGogh) vangogh Acer Iconia Tab A200 s7503 Acer Iconia Tab A200 SM-N9006 Acer Iconia Tab A501 ELUGA_Mark Acer Iconia Tab A501 picasso Acer Iconia Tab A510 myPhone Acer Iconia Tab A510 picasso_m Acer Iconia Tab A510 ZUUM_M50 Acer Iconia Tab A701 picasso_mf Acer Iconia Tab A701 Revo_HD2 Acer Iconia TalkTab 7 acer_a1_724 Acer Iconia TalkTab 7 AG CHROME ULTRA Acer Liquid a1 Acer Liquid C1 I1 Acer Liquid C1 l3365 Acer Liquid E1 C10 Acer Liquid E2 C11 Acer Liquid E3 acer_e3 Acer Liquid E3 acer_e3n Acer Liquid E3 LS900 Acer Liquid E3 Quasar Acer Liquid E600 e600 Acer Liquid -

Accelerating Development Using the Web: Empowering Poor and Marginalized Populations George Sadowsky, Ed

George Sadowsky, ed.

George Sadowsky, Najeeb Al-Shorbaji, Richard Duncombe, Torbjörn Fredriksson, Alan Greenberg, Nancy Hafkin, Michael Jensen, Shalini Kala, Barbara J. Mack, Nnenna Nwakanma, Daniel Pimienta, Tim Unwin, Cynthia Waddell, Raul Zambrano

Cover image: Paul Butler http://paulbutler.org/archives/visualizing-facebook-friends/ Creative Commons License Attribution-ShareAlike 3.0

This work, with the exception of Chapter 7 (Health), is licensed under the Creative Commons Attribution 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by/3.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Chapter 7 (Health) © World Health Organization [2012]. All rights reserved. The World Health Organization has granted the Publisher permission for the reproduction of this chapter.

Foreword from the Rockefeller Foundation

For almost 100 years, the Rockefeller Foundation has been at the forefront of new ideas and innovations related to emerging areas of technology. In its early years, the Foundation advanced new technologies to eradicate hookworm and develop a vaccine for yellow fever, creating a lasting legacy of strengthening the application of new technologies to improve the lives of the world’s poor and vulnerable. By the middle of the 20th century, this approach led the Foundation to the precursor to the modern-day computer. At the dawn of the digital era in 1956, the Foundation helped launch the field of artificial intelligence through its support for the work of John McCarthy, the computing visionary who coined the term.

Māori Language Interfaces

10 Māori language resources & Māori initiatives for teaching & learning te reo Māori

Te Taka Keegan

Introduction

The decisions around the language of a software interface lie with the software engineer and their development team. The decisions are, or should be, based primarily on the likely target audience and how they will most easily be able to use the interface. That is to say, language decisions should be based on the usability of the interface. If this were applied in all cases, it is unlikely that we would see interfaces in te reo Māori.

From a computational perspective the language of an interface does not need to be in English; it can just as easily be programmed to display in Spanish, in French, in Māori or any other of the 1000 languages that are covered by the Unicode scripts. Ultimately the computer itself does not think, or compute, in a spoken language; all of its operations are undertaken in machine code based on the binary number system, which is then compiled onto various layers of assembly and high level programming languages which ultimately display human language messages in text boxes. What is displayed in those text boxes is quite arbitrary to running the computer, but it is everything to the usability of the interface, and subsequently, the usefulness of the software.

This paper considers the usability of the interface and the ramifications for te reo Māori if the interface language, the messages displayed in those text boxes, is written in te reo Māori. An initial question that may arise is 'Why would we want to create software that has an interface in te reo Māori?' Studies have shown (see examples in this paper) that interfaces are more difficult to use in te reo Māori.

Huawei IDEOS S7 Slim Manual

IDEOS S7 Slim User Guide

Contents

- 1 Getting started
  - 1.1 About your Tablet
  - 1.2 Getting to know your Tablet
  - 1.3 Installation
    - 1.3.1 To remove the back cover
    - 1.3.2 To install or remove the battery
    - 1.3.3 To install the back cover
  - 1.4 Turning your Tablet on or off
    - 1.4.1 To turn your Tablet on
    - 1.4.2 To turn your Tablet off
  - 1.5 Charging the battery
  - 1.6 Working with the keys
  - 1.7 Using the touchscreen
  - 1.8 Home screen

Fnac Reprise (Fnac Trade-In)

FNAC REPRISE: list of smartphones eligible for the trade-in program as of 19/08/2016

ACER LIQUID Z4 APPLE IPHONE 5 BLACK 64GB ACER INCORPORATED LIQUID Z530S APPLE IPHONE 5 WHITE 16GB ACER INCORPORATED LIQUID Z630S APPLE IPHONE 5 WHITE 32GB ALBA ALBA 4.5INCH 5MP 4G 8GB APPLE IPHONE 5 WHITE 64GB ALBA DUAL SIM APPLE IPHONE 5C ALCATEL IDOL 3 8GB APPLE IPHONE 5C BLUE 16GB ALCATEL ONE TOUCH 228 APPLE IPHONE 5C BLUE 32GB ALCATEL ONE TOUCH 903 APPLE IPHONE 5C BLUE 8GB ALCATEL ONE TOUCH 903X APPLE IPHONE 5C GREEN 16GB ALCATEL ONE TOUCH IDOL 2 MINI S APPLE IPHONE 5C GREEN 32GB ALCATEL ONE TOUCH TPOP APPLE IPHONE 5C GREEN 8GB ALCATEL ONETOUCH POP C3 APPLE IPHONE 5C PINK 16GB AMAZON FIRE PHONE APPLE IPHONE 5C PINK 32GB APPLE APPLE WATCH EDITION 42MM APPLE IPHONE 5C PINK 8GB APPLE IPHONE 3G APPLE IPHONE 5C WHITE 16GB APPLE IPHONE 3G BLACK 16GB APPLE IPHONE 5C WHITE 32GB APPLE IPHONE 3G BLACK 8GB APPLE IPHONE 5C WHITE 8GB APPLE IPHONE 3G WHITE 16GB APPLE IPHONE 5C YELLOW 16GB APPLE IPHONE 3GS APPLE IPHONE 5C YELLOW 32GB APPLE IPHONE 3GS 8GB APPLE IPHONE 5C YELLOW 8GB APPLE IPHONE 3GS BLACK 16GB APPLE IPHONE 5S APPLE IPHONE 3GS BLACK 32GB APPLE IPHONE 5S BLACK 16GB APPLE IPHONE 3GS WHITE 16GB APPLE IPHONE 5S BLACK 32GB APPLE IPHONE 3GS WHITE 32GB APPLE IPHONE 5S BLACK 64GB APPLE IPHONE 4 APPLE IPHONE 5S GOLD 16GB APPLE IPHONE 4 BLACK 16GB APPLE IPHONE 5S GOLD 32GB APPLE IPHONE 4 BLACK 32GB APPLE IPHONE 5S GOLD 64GB APPLE IPHONE 4 BLACK 8GB APPLE IPHONE 5S WHITE 16GB APPLE IPHONE 4 WHITE 16GB APPLE IPHONE 5S WHITE 32GB APPLE IPHONE 4 WHITE 32GB APPLE IPHONE

Devices for Which We Currently Recommend Opera Mini 7.0 Number of Device Models

Number of device models: 625

Platforms included: JME, BlackBerry, Android, S60 and iOS

List generated date: 2012-05-30

au by KDDI IS03 by Sharp BlackBerry 9900 Bold Acer beTouch E110 au by KDDI REGZA Phone BlackBerry Curve 3G 9300 IS04 by Fujitsu-Toshiba Acer beTouch E130 Dell Aero au by KDDI Sirius IS06 by Acer Iconia Tab A500 Pantech Dell Streak Acer Liquid E Ezze S1 Beyond B818 Acer Liquid mt Fly MC160 BlackBerry 8520 Curve Acer Liquid S100 Garmin-Asus nüvifone A10 BlackBerry 8530 Curve Acer Stream Google Android Dev Phone BlackBerry 8800 1 G1 Alcatel One Touch OT-890D BlackBerry 8820 Google Nexus One Alfatel H200 BlackBerry 8830 Google Nexus S i9023 Amoi WP-S1 Skypephone BlackBerry 8900 Curve HTC A6277 Apple iPad BlackBerry 9000 Bold HTC Aria A6366 Apple iPhone BlackBerry 9105 Pearl HTC ChaCha / Status / Apple iPhone 3G BlackBerry 9300 Curve A810e Apple iPhone 3GS BlackBerry 9500 Storm HTC Desire Apple iPhone 4 BlackBerry 9530 Storm HTC Desire HD Apple iPod Touch BlackBerry 9550 Storm2 HTC Desire S Archos 101 Internet Tablet BlackBerry 9630 Tour HTC Desire Z Archos 32 Internet Tablet BlackBerry 9700 Bold HTC Dream Archos 70 Internet Tablet BlackBerry 9800 Torch HTC Droid Eris Asus EeePad Transformer BlackBerry 9860 Torch HTC Droid Incredible TF101 ADR6300 HTC EVO 3D X515 INQ INQ1 LG GU230 HTC EVO 4G Karbonn K25 LG GW300 Etna 2 / Gossip HTC Explorer

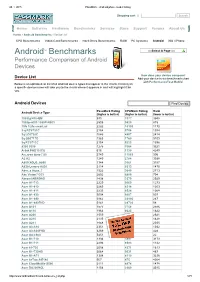

Android™ Benchmarks

PassMark Android phone model listing, 28. 1. 2015 (http://www.androidbenchmark.net/device_list.php)

Android Benchmarks: Performance Comparison of Android Devices. Below is an alphabetical list of all Android device types that appear in the charts.

| Android Device Type | PassMark Rating (higher is better) | CPUMark Rating (higher is better) | Rank (lower is better) |
|---|---|---|---|
| 1005tg N10QM | 935 | 3377 | 3948 |
| 1080pn003 1080PN003 | 2505 | 9820 | 815 |
| 1life 1Life.smart.air | 2282 | 10103 | 1170 |
| 3q RC9731C | 2154 | 5756 | 1394 |
| 3q LC0720C | 1646 | 4897 | 2414 |
| 3q QS0717D | 1363 | 1760 | 3109 |
| 3q RC9712C | 2154 | 5223 | 1396 |
| 9300 9300 | 1275 | 3364 | 3321 |
| Alink PAD10 ICS | 616 | 1130 | 4249 |
| A.c.ryan dyno 7.85 | 2749 | 11065 | 596 |
| A2 A2 | 1240 | 2784 | 3388 |
| A800 XOLO_A800 | 1344 | 3661 | 3157 |
| A830 Lenovo A830 | 2114 | 8313 | 1470 |
| Abs_a Aqua_7 | 1522 | 3640 | 2713 |
| Abc Vision7DCI | 2602 | 6880 | 704 |
| Abroad ABROAD | 1438 | 3379 | 2929 |
| Acer A1713 | 2229 | 9069 | 1273 |
| Acer A1810 | 2265 | 8314 | 1203 |
| Acer A1811 | 2233 | 8524 | 1268 |
| Acer A1830 | 3004 | 9207 | 507 |
| Acer A1840 | 3962 | 23996 | 267 |
| Acer A1840FHD | 5141 | 28720 | 58 |
| Acer A101 | 1577 | 3758 | 2586 |
| Acer A110 | 1964 | 8623 | 1822 |
| Acer A200 | 1559 | 3822 | 2621 |
| Acer A210 | 2135 | 8315 | 1428 |
| Acer A211 | 1848 | 8130 | 2035 |
| Acer A3A10 | 2351 | 8128 | 1032 |
| Acer A3A20FHD | 3269 | 11265 | 428 |
| Acer AA3600 | 5451 | 22392 | 22 |
| Acer B1710 | 1336 | 3897 | 3173 |
| Acer B1711 | 2293 | 8583 | 1142 |
| Acer b1720 | 2058 | 4371 | 1613 |
| Acer B1730HD | 3064 | 9031 | 487 |
| Acer B1A71 | 1308 | 4119 | 3236 |
| Acer beTouch E140 | 567 | 475 | 4264 |
| Acer CloudMobile S500 | 2111 | 4874 | 1478 |
| Acer DA220HQL | 1156 | 2960 | 3545 |

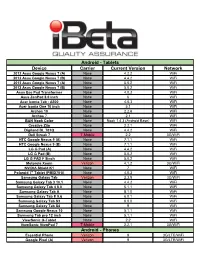

Device Carrier Current Version Network Android

Android - Tablets

| Device | Carrier | Current Version | Network |
|---|---|---|---|
| 2012 Asus Google Nexus 7 (A) | None | 4.2.2 | WiFi |
| 2012 Asus Google Nexus 7 (B) | None | 4.4.2 | WiFi |
| 2013 Asus Google Nexus 7 (A) | None | 5.0.2 | WiFi |
| 2013 Asus Google Nexus 7 (B) | None | 5.0.2 | WiFi |
| Asus Eee Pad Transformer | None | 4.0.3 | WiFi |
| Asus ZenPad 8.0 inch | None | 6 | WiFi |
| Acer Iconia Tab - A500 | None | 4.0.3 | WiFi |
| Acer Iconia One 10 inch | None | 5.1 | WiFi |
| Archos 10 | None | 2.2.6 | WiFi |
| Archos 7 | None | 2.1 | WiFi |
| B&N Nook Color | None | Nook 1.4.3 (Android Base) | WiFi |
| Creative Ziio | None | 2.2.1 | WiFi |
| Digiland DL 701Q | None | 4.4.2 | WiFi |
| Dell Streak 7 | T-Mobile | 2.2 | 3G/WiFi |
| HTC Google Nexus 9 (A) | None | 7.1.1 | WiFi |
| HTC Google Nexus 9 (B) | None | 7.1.1 | WiFi |
| LG G Pad (A) | None | 4.4.2 | WiFi |
| LG G Pad (B) | None | 5.0.2 | WiFi |
| LG G PAD F 8inch | None | 5.0.2 | WiFi |
| Motorola Xoom | Verizon | 4.1.2 | 3G/WiFi |
| NVIDIA Shield K1 | None | 7 | WiFi |
| Polaroid 7" Tablet (PMID701i) | None | 4.0.3 | WiFi |
| Samsung Galaxy Tab | Verizon | 2.3.5 | 3G/WiFi |
| Samsung Galaxy Tab 3 10.1 | None | 4.4.2 | WiFi |
| Samsung Galaxy Tab 4 8.0 | None | 5.1.1 | WiFi |
| Samsung Galaxy Tab A | None | 8.1.0 | WiFi |
| Samsung Galaxy Tab E 9.6 | None | 6.0.1 | WiFi |
| Samsung Galaxy Tab S3 | None | 8.0.0 | WiFi |
| Samsung Galaxy Tab S4 | None | 9 | WiFi |
| Samsung Google Nexus 10 | None | 5.1.1 | WiFi |
| Samsung Tab pro 12 inch | None | 5.1.1 | WiFi |
| ViewSonic G-Tablet | None | 2.2 | WiFi |
| ViewSonic ViewPad 7 | T-Mobile | 2.2.1 | 3G/WiFi |

Android - Phones

| Device | Carrier | Current Version | Network |
|---|---|---|---|
| Essential Phone | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (A) | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (B) | Verizon | 8.1 | 3G/LTE/WiFi |
| Google Pixel 2 | Verizon | 8.1 | 3G/LTE/WiFi |
| Google Pixel 3 XL | None | 9 | 3G/LTE/WiFi |

HTC Desire (International)

Huawei Launches IDEOS Together with Google

Huawei launches IDEOS™ together with Google: the world's first affordable smartphone

Berlin, 2 September 2010: Today Huawei presented IDEOS™ at a press conference ahead of the IFA trade show in Berlin. Huawei IDEOS is Huawei's latest smartphone, which comes preinstalled with Android™ 2.2 and fully compatible hardware and software. Huawei IDEOS will be launched in a number of countries in Europe, Asia-Pacific, North America and South America.

IDEOS offers users download speeds of over 7.2 Mb/s and dual network support via WCDMA and WiFi, as well as a large number of mobile broadband services. IDEOS also works as a WiFi router for up to eight devices simultaneously. In addition to the latest version of Android, IDEOS comes equipped with features such as voice dialing, voice navigation and the ability to run applications from the SD card. With more than 70,000 applications available via Android Market, IDEOS enables a range of functions for communication, entertainment, the office and finance. Huawei IDEOS launches in black, yellow, blue and purple and will cost between USD 100 and USD 200, depending on the market.

"The popularity of the smartphone is one of the most important tools for showing users the 'golden era of mobile broadband', something that is directly linked to Google's strategy for the mobile internet," says Kevin Tao, CEO of Huawei Device.

"IDEOS is an affordable alternative that simplifies access to the internet via mobile phone. We are proud to have already achieved our goal for 2010: to develop a smartphone with a fantastic user experience in the USD 150 class," says Kevin Tao, Huawei Device.

Huawei launched a further three Android-based smartphones: the Huawei U8800, Huawei U8500 and Huawei U8300.

Huawei U8800

The U8800 runs Android 2.2 and comes equipped with HSPA+, supporting speeds of up to 14 Mb/s.

Huawei Chooses Synaptics ClearPad™ 7010 to Power Multi-Touch on IDEOS S7 Slim Tablet

ClearPad™ 7010 Single-Chip Touch Solution Provides Industry-Leading Accuracy for a Superior User Experience

SANTA CLARA, Calif., March 29, 2011 /PRNewswire/ -- Synaptics Incorporated (Nasdaq: SYNA), a leading developer of human interface solutions for mobile computing, communications, and entertainment devices, today announced that Huawei has selected its ClearPad™ 7010 single-chip solution for the IDEOS S7 Slim tablet.

The Huawei IDEOS S7 Slim features a 7" capacitive touchscreen, giving users an intuitive touch interface that is extremely responsive and accurate. The IDEOS S7 Slim's design and interface are optimized for portability and easy single-handed use. The tablet is thin and light, measuring only 12.5 mm thick and weighing only 400 grams; a 16x9 aspect ratio makes it easy to hold. The IDEOS S7 Slim runs Android 2.2, supports HD media with 720p HD playback and multi-tasking, and sports a 3.2 megapixel rear-facing camera. Huawei plans to launch the tablet globally in April 2011.

The 7010, part of Synaptics Series 7 product portfolio for large touchscreen applications, is an excellent touch interface for low power and small component area footprint, supporting up to 8.2" diagonal touchscreens and multi-touch for up to five fingers. Compared to other single-chip solutions, ClearPad 7010 offers:

- Lower power consumption featuring 8-12 mW
- Industry-leading touch sensing accuracy and linearity
- Faster finger tracking: 60Hz report rate for up to five fingers

"Huawei's IDEOS S7 Slim tablet has a sleek, high-performance touchscreen that's the ideal companion device for users on the go," said Kevin Barber, senior vice president and general manager of the Handheld Division at Synaptics.

A Sensor Integration Framework for Android at the Application-Level

Open Data Kit Sensors: A Sensor Integration Framework for Android at the Application-Level

Waylon Brunette, Rita Sodt, Rohit Chaudhri, Mayank Goel, Michael Falcone, Jaylen VanOrden, Gaetano Borriello

Department of Computer Science & Engineering, University of Washington, Box 352350, Seattle, WA 98195-2350

{wrb, rsodt, rohitc, mayank, mfalcone, dutchsct, gaetano}@cse.uw.edu

ABSTRACT

Smartphones can now connect to a variety of external sensors over wired and wireless channels. However, ensuring proper device interaction can be burdensome, especially when a single application needs to integrate with a number of sensors using different communication channels and data formats. This paper presents a framework to simplify the interface between a variety of external sensors and consumer Android devices. The framework simplifies both application and driver development with abstractions that separate responsibilities between the user application, sensor framework, and device driver. These abstractions facilitate a componentized framework that allows developers to focus on writing minimal pieces of sensor-specific code enabling an ecosystem of reusable sensor drivers.

1. INTRODUCTION

Market penetration of smartphones as a computing and communications platform has increased significantly in recent years. Basic feature phones are gradually being replaced by relatively inexpensive smartphones in developing countries. For example, in Kenya the Android based Huawei Ideos is sold for approximately USD 80[27]. Researchers and practitioners in the information and communication technologies for development (ICTD) community are increasingly leveraging smartphones to improve information management in under-resourced environments. Our work is motivated by the platform shift from traditional PCs and standalone sensing appliances to mobile devices (e.g., smartphones, tablets) coupled with cloud services to

Das Kompendium (The Compendium)

Android: The Compendium. All current Android topics in 178 pages. Putting concentrated knowledge to efficient use.

Extras: Android antivirus package, 6 months free (www.ctspecial.de); e-book "Android for Beginners"; promotion: Bluetooth headset.

Tests and advice: smartphones from budget to premium; Android tablets vs. iPad; optimal mobile tariffs; update chaos under the microscope.

Expert know-how: anti-crook apps; child protection on the phone; programming apps; rooting your way to superuser.

Holidays and outdoors: accessories for cyclists; off-road and car navigation; low-cost mobile internet abroad; geocaching fun.

Big app guide: essential apps for smartphone and tablet; games, creative apps, office; music from the cloud; free apps instead of expensive SMS.

Editorial

Dear reader,

Android is taking off. Within a year, the smartphone operating system more than doubled its market share in this country and expanded it to 40 percent: market leader! The little green robot is at work in roughly every second smartphone currently sold over the counter. Consumers can choose from almost 200 smartphones and dozens of tablets; the Play Store offers more than 360,000 apps. But this great success does not have only positive sides.

For beginners, we suggest basic software setups for tablets and smartphones. Those who want the full detail can use extensive tests in a whole range of categories to pick out the right apps for their purposes, including off-road and car navigation, music streaming services, RSS readers, geocaching, PDF, child protection and anti-theft apps. Numerous practical articles help you get the most out of your