A LINE-BASED LOSSLESS BACKWARD CODING of WAVELET TREES (BCWT) and BCWT IMPROVEMENTS for APPLICATION, by Bian Li, B.S.E.E., M.S.E.E.



Recommended publications

-

2018-07-11 and for Information to the Iso Member Bodies and to the Tmb Members

Sergio Mujica Secretary-General TO THE CHAIRS AND SECRETARIES OF ISO COMMITTEES 2018-07-11 AND FOR INFORMATION TO THE ISO MEMBER BODIES AND TO THE TMB MEMBERS ISO/IEC/ITU coordination – New work items Dear Sir or Madam, Please find attached the lists of IEC, ITU and ISO new work items issued in June 2018. If you wish more information about IEC technical committees and subcommittees, please access: http://www.iec.ch/. Click on the last option to the right: Advanced Search and then click on: Documents / Projects / Work Programme. In case of need, a copy of an actual IEC new work item may be obtained by contacting [email protected]. Please note for your information that in the annexed table from IEC the "document reference" 22F/188/NP means a new work item from IEC Committee 22, Subcommittee F. If you wish to look at the ISO new work items, please access: http://isotc.iso.org/pp/. On the ISO Project Portal you can find all information about the ISO projects, by committee, document number or project ID, or choose the option "Stages search" and select "Search" to obtain the annexed list of ISO new work items. Yours sincerely, Sergio Mujica Secretary-General Enclosures ISO New work items 1 of 8 2018-07-11 Alert Detailed alert Timeframe Reference Document title Developing committee VA Registration dCurrent stage Stage date Guidance for multiple organizations implementing a common Warning Warning – NP decision SDT 36 ISO/NP 50009 (ISO50001) EnMS ISO/TC 301 - - 10.60 2018-06-10 Warning Warning – NP decision SDT 36 ISO/NP 31050 Guidance for managing -

(L3) - Audio/Picture Coding

Committee: (L3) - Audio/Picture Coding National Designation Title (Click here to purchase standards) ISO/IEC Document L3 INCITS/ISO/IEC 9281-1:1990:[R2013] Information technology - Picture Coding Methods - Part 1: Identification IS 9281-1:1990 INCITS/ISO/IEC 9281-2:1990:[R2013] Information technology - Picture Coding Methods - Part 2: Procedure for Registration IS 9281-2:1990 INCITS/ISO/IEC 9282-1:1988:[R2013] Information technology - Coded Representation of Computer Graphics Images - Part IS 9282-1:1988 1: Encoding principles for picture representation in a 7-bit or 8-bit environment :[] Information technology - Coding of Multimedia and Hypermedia Information - Part 7: IS 13522-7:2001 Interoperability and conformance testing for ISO/IEC 13522-5 (MHEG-7) :[] Information technology - Coding of Multimedia and Hypermedia Information - Part 5: IS 13522-5:1997 Support for Base-Level Interactive Applications (MHEG-5) :[] Information technology - Coding of Multimedia and Hypermedia Information - Part 3: IS 13522-3:1997 MHEG script interchange representation (MHEG-3) :[] Information technology - Coding of Multimedia and Hypermedia Information - Part 6: IS 13522-6:1998 Support for enhanced interactive applications (MHEG-6) :[] Information technology - Coding of Multimedia and Hypermedia Information - Part 8: IS 13522-8:2001 XML notation for ISO/IEC 13522-5 (MHEG-8) Created: 11/16/2014 Page 1 of 44 Committee: (L3) - Audio/Picture Coding National Designation Title (Click here to purchase standards) ISO/IEC Document :[] Information technology - Coding -

Lossy Image Compression Based on Prediction Error and Vector Quantisation Mohamed Uvaze Ahamed Ayoobkhan* , Eswaran Chikkannan and Kannan Ramakrishnan

Ayoobkhan et al. EURASIP Journal on Image and Video Processing (2017) 2017:35 EURASIP Journal on Image DOI 10.1186/s13640-017-0184-3 and Video Processing RESEARCH Open Access Lossy image compression based on prediction error and vector quantisation Mohamed Uvaze Ahamed Ayoobkhan* , Eswaran Chikkannan and Kannan Ramakrishnan Abstract Lossy image compression has been gaining importance in recent years due to the enormous increase in the volume of image data employed for Internet and other applications. In a lossy compression, it is essential to ensure that the compression process does not affect the quality of the image adversely. The performance of a lossy compression algorithm is evaluated based on two conflicting parameters, namely, compression ratio and image quality which is usually measured by PSNR values. In this paper, a new lossy compression method denoted as PE-VQ method is proposed which employs prediction error and vector quantization (VQ) concepts. An optimum codebook is generated by using a combination of two algorithms, namely, artificial bee colony and genetic algorithms. The performance of the proposed PE-VQ method is evaluated in terms of compression ratio (CR) and PSNR values using three different types of databases, namely, CLEF med 2009, Corel 1 k and standard images (Lena, Barbara etc.). Experiments are conducted for different codebook sizes and for different CR values. The results show that for a given CR, the proposed PE-VQ technique yields higher PSNR value compared to the existing algorithms. It is also shown that higher PSNR values can be obtained by applying VQ on prediction errors rather than on the original image pixels. -

JPEG Image Compression.Pdf

JPEG image compression FAQ, part 1/2 2/18/05 5:03 PM Part1 - Part2 - MultiPage JPEG image compression FAQ, part 1/2 There are reader questions on this topic! Help others by sharing your knowledge Newsgroups: comp.graphics.misc, comp.infosystems.www.authoring.images From: [email protected] (Tom Lane) Subject: JPEG image compression FAQ, part 1/2 Message-ID: <[email protected]> Summary: General questions and answers about JPEG Keywords: JPEG, image compression, FAQ, JPG, JFIF Reply-To: [email protected] Date: Mon, 29 Mar 1999 02:24:27 GMT Sender: [email protected] Archive-name: jpeg-faq/part1 Posting-Frequency: every 14 days Last-modified: 28 March 1999 This article answers Frequently Asked Questions about JPEG image compression. This is part 1, covering general questions and answers about JPEG. Part 2 gives system-specific hints and program recommendations. As always, suggestions for improvement of this FAQ are welcome. New since version of 14 March 1999: * Expanded item 10 to discuss lossless rotation and cropping of JPEGs. This article includes the following sections: Basic questions: [1] What is JPEG? [2] Why use JPEG? [3] When should I use JPEG, and when should I stick with GIF? [4] How well does JPEG compress images? [5] What are good "quality" settings for JPEG? [6] Where can I get JPEG software? [7] How do I view JPEG images posted on Usenet? More advanced questions: [8] What is color quantization? [9] What are some rules of thumb for converting GIF images to JPEG? [10] Does loss accumulate with repeated compression/decompression? -

Vue PACS 12.2 DICOM Conformance Statement

Clinical Collaboration Platform Vue PACS 12.2 DICOM Conformance Statement Part # AD0536 2017-11-23 Vue PACS 12.2 DICOM Conformance Statement AD0536 A Uncontrolled unless otherwise indicated Confidential Vue PACS 12.2 - DICOM Conformance Statement - AD0536.docx PAGE 1 of 155 Table of Contents 1 Introduction ............................................................................................................................................ 3 1.1 Terms and Definitions ...................................................................................................................... 3 1.2 About This Document ....................................................................................................................... 4 1.3 Important Remarks ........................................................................................................................... 4 2 Implementation Model............................................................................................................................ 5 2.1 Application Data Flow Diagram ........................................................................................................ 5 2.2 Functional Definitions of AEs ......................................................................................................... 11 2.3 Sequencing of Real World Activities .............................................................................................. 12 3 AE Specifications ................................................................................................................................ -

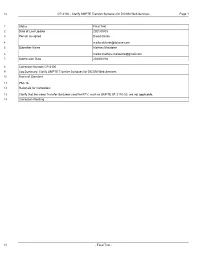

CP2100 Clarify SMPTE Transfer Syntaxes for DICOM Web Services

16 CP-2100 - Clarify SMPTE Transfer Syntaxes for DICOM Web Services Page 1 1 Status Final Text 2 Date of Last Update 2021/09/03 3 Person Assigned David Clunie 4 mailto:[email protected] 5 Submitter Name Mathieu Malaterre 6 mailto:[email protected] 7 Submission Date 2020/04/16 8 Correction Number CP-2100 9 Log Summary: Clarify SMPTE Transfer Syntaxes for DICOM Web Services 10 Name of Standard 11 PS3.18 12 Rationale for Correction: 13 Clarify that the video Transfer Syntaxes used for RTV, such as SMPTE ST 2110-20, are not applicable. 14 Correction Wording: 15 - Final Text - 48 CP-2100 - Clarify SMPTE Transfer Syntaxes for DICOM Web Services Page 2 1 Amend DICOM PS3.18 as follows (changes to existing text are bold and underlined for additions and struckthrough for removals): 2 8.7.3.1 Instance Media Types 3 The application/dicom media type specifies a representation of Instances encoded in the DICOM File Format specified in Section 7 4 “DICOM File Format” in PS3.10. 5 Note 6 The origin server may populate the PS3.10 File Meta Information with the identification of the Source, Sending and Receiving 7 AE Titles and Presentation Addresses as described in Section 7.1 in PS3.10, or these Attributes may have been left unaltered 8 from when the origin server received the objects. The user agent storing the objects received in the response may populate 9 or coerce these Attributes based on its own knowledge of the endpoints involved in the transaction, so that they accurately 10 identify the most recent -

Forcepoint DLP Supported File Formats and Size Limits

Forcepoint DLP Supported File Formats and Size Limits Supported File Formats and Size Limits | Forcepoint DLP | v8.8.1 This article provides a list of the file formats that can be analyzed by Forcepoint DLP, file formats from which content and meta data can be extracted, and the file size limits for network, endpoint, and discovery functions. See: ● Supported File Formats ● File Size Limits © 2021 Forcepoint LLC Supported File Formats Supported File Formats and Size Limits | Forcepoint DLP | v8.8.1 The following tables lists the file formats supported by Forcepoint DLP. File formats are in alphabetical order by format group. ● Archive For mats, page 3 ● Backup Formats, page 7 ● Business Intelligence (BI) and Analysis Formats, page 8 ● Computer-Aided Design Formats, page 9 ● Cryptography Formats, page 12 ● Database Formats, page 14 ● Desktop publishing formats, page 16 ● eBook/Audio book formats, page 17 ● Executable formats, page 18 ● Font formats, page 20 ● Graphics formats - general, page 21 ● Graphics formats - vector graphics, page 26 ● Library formats, page 29 ● Log formats, page 30 ● Mail formats, page 31 ● Multimedia formats, page 32 ● Object formats, page 37 ● Presentation formats, page 38 ● Project management formats, page 40 ● Spreadsheet formats, page 41 ● Text and markup formats, page 43 ● Word processing formats, page 45 ● Miscellaneous formats, page 53 Supported file formats are added and updated frequently. Key to support tables Symbol Description Y The format is supported N The format is not supported P Partial metadata -

Metadefender Core V4.17.3

MetaDefender Core v4.17.3 © 2020 OPSWAT, Inc. All rights reserved. OPSWAT®, MetadefenderTM and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners. Table of Contents About This Guide 13 Key Features of MetaDefender Core 14 1. Quick Start with MetaDefender Core 15 1.1. Installation 15 Operating system invariant initial steps 15 Basic setup 16 1.1.1. Configuration wizard 16 1.2. License Activation 21 1.3. Process Files with MetaDefender Core 21 2. Installing or Upgrading MetaDefender Core 22 2.1. Recommended System Configuration 22 Microsoft Windows Deployments 22 Unix Based Deployments 24 Data Retention 26 Custom Engines 27 Browser Requirements for the Metadefender Core Management Console 27 2.2. Installing MetaDefender 27 Installation 27 Installation notes 27 2.2.1. Installing Metadefender Core using command line 28 2.2.2. Installing Metadefender Core using the Install Wizard 31 2.3. Upgrading MetaDefender Core 31 Upgrading from MetaDefender Core 3.x 31 Upgrading from MetaDefender Core 4.x 31 2.4. MetaDefender Core Licensing 32 2.4.1. Activating Metadefender Licenses 32 2.4.2. Checking Your Metadefender Core License 37 2.5. Performance and Load Estimation 38 What to know before reading the results: Some factors that affect performance 38 How test results are calculated 39 Test Reports 39 Performance Report - Multi-Scanning On Linux 39 Performance Report - Multi-Scanning On Windows 43 2.6. Special installation options 46 Use RAMDISK for the tempdirectory 46 3. -

Digital Recording of Performing Arts: Formats and Conversion

detailed approach also when the transfer of rights forms part of an employment contract between the producer of Digital recording of performing the recording and the live crew. arts: formats and conversion • Since the area of activity most probably qualifies as Stijn Notebaert, Jan De Cock, Sam Coppens, Erik Mannens, part of the ‘cultural sector’, separate remuneration for Rik Van de Walle (IBBT-MMLab-UGent) each method of exploitation should be stipulated in the Marc Jacobs, Joeri Barbarien, Peter Schelkens (IBBT-ETRO-VUB) contract. If no separate remuneration system has been set up, right holders might at any time invoke the legal default mechanism. This default mechanism grants a proportionate part of the gross revenue linked to a specific method of exploitation to the right holders. The producer In today’s digital era, the cultural sector is confronted with a may also be obliged to provide an annual overview of the growing demand for making digital recordings – audio, video and gross revenue per way of exploitation. This clause is crucial still images – of stage performances available over a multitude of in order to avoid unforeseen financial and administrative channels, including digital television and the internet. Essentially, burdens in a later phase. this can be accomplished in two different ways. A single entity can act as a content aggregator, collecting digital recordings from • Determine geographical scope and, if necessary, the several cultural partners and making this content available to duration of the transfer for each way of exploitation. content distributors or each individual partner can distribute its own • Include future methods of exploitation in the contract. -

Application of Reversible Denoising and Lifting Steps to DWT in Lossless JPEG 2000 for Improved Bitrates

Application of reversible denoising and lifting steps to DWT in lossless JPEG 2000 for improved bitrates Roman Starosolski Institute of Informatics, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland, e-mail: [email protected], [email protected], tel.: +48 322372151 Abstract In a previous study, we noticed that the lifting step of a color space transform might increase the amount of noise that must be encoded during compression of an image. To alleviate this problem, we proposed the replacement of lifting steps with reversible denoising and lifting steps (RDLS), which are basically lifting steps integrated with denoising filters. We found the approach effective for some of the tested images. In this study, we apply RDLS to discrete wavelet transform (DWT) in JPEG 2000 lossless coding. We evaluate RDLS effects on bi- trates using various denoising filters and a large number of diverse images. We employ a heu- ristic for image-adaptive RDLS filter selection; based on its empirical outcomes, we also pro- pose a fixed filter selection variant. We find that RDLS significantly improves bitrates of non- photographic images and of images with impulse noise added, while bitrates of photographic images are improved by below 1% on average. Considering that the DWT stage may worsen bitrates of some images, we propose a couple of practical compression schemes based on JPEG 2000 and RDLS. For non-photographic images, we obtain an average bitrate improve- ment of about 12% for fixed filter selection and about 14% for image-adaptive selection. Keywords: reversible denoising and lifting step, discrete wavelet transform, denoising, lifting tech- nique, lossless image compression, JPEG 2000. -

Alert Detailed Alert Timeframe Reference Document Title Developing

Registration Current Alert Detailed alert Timeframe Reference Document title Developing committee VA date stage Stage date Warning – NP Guidance for multiple organizations implementing a common (ISO50001) Warning decision SDT 36 ISO/NP 50009 EnMS ISO/TC 301 - - 10.60 2018-06-10 Warning – NP Warning decision SDT 36 ISO/NP 31050 Guidance for managing emerging risks to enhance resilience ISO/TC 262 - - 10.60 2018-06-14 Warning – NP Reproducibility of the LOD of binary methods in collaborative and in-house Warning decision SDT 36 ISO/NP TS 27878 validation studies ISO/TC 69/SC 6/WG 1 - - 10.60 2018-06-25 Safety of machinery -- Ergonomic principles for the design of sorting cabins Warning – NP intended for the manual sorting of dry household and similar waste TO CHECK Warning decision - ISO/NP TR 23474 originating from selective collection ISO/TC 159/SC 3/WG 4 (ISO lead) - 10.60 2018-06-30 Surface chemical analysis -- Depth profiling -- Quantitative analysis of Warning – NP multi-element alloy films by depth profiling analysis using reference Warning decision SDT 36 ISO/NP 23473 materials ISO/TC 201/SC 4 - - 10.60 2018-06-28 Warning – NP Warning decision SDT 36 ISO/NP 23472-1 Foundry machinery -- Terminology -- Part 1: Fundamental terms ISO/TC 306 - - 10.60 2018-06-27 Warning – NP Experimental designs for evaluation of uncertainty -- Use of factorial Warning decision SDT 36 ISO/NP TS 23471 designs for determining uncertainty functions ISO/TC 69/SC 6/WG 7 - - 10.60 2018-06-25 Warning – NP Determination of heavy water isotopic purity by Fourier -

JPEG XR an Image Coding Standard

International Journal of Computer and Electrical Engineering, Vol.4, No.2, April 2012 JPEG XR an Image Coding Standard Savita S. Jadhav and Sandeep K. Jadhav implementations of the encoder and decoder as simple as Abstract—JPEG XR is an emerging image coding standard, possible. For the compression of digital image, the Joint based on HD Photo developed by Microsoft technology. It Photographic Experts Group, the first international image supports high compression performance twice as high as the de coding standard for continuous-tone natural images, was facto image coding system, namely JPEG, and also has an advantage over JPEG 2000 in terms of computational cost. defined in 1992. JPEG is a well-known image compression JPEG XR is expected to be widespread for many devices format today because of the population of digital still camera including embedded systems in the near future. This and Internet. Another image coding standard, JPEG2000 [3], review-based paper proposes a novel architecture for JPEG XR was finalized in 2001. Differed from JPEG standard, a encoding. This paper gives discussion of image partition and Discrete Cosine Transform based coder, the JPEG2000 uses windowing techniques. Further frequency transform and a Discrete Wavelet Transform based coder for better coding quantization is also addressed. A brief insight into Predication, Adaptive Encode and Packetization has been provided in the efficiency. The JPEG2000 not only enhances the paper. compression, but also includes many new features, such as quality scalability, resolution scalability, region of interest, Index Terms—JPEG XR, encoder, PCT, quantization, and lossy/lossless coding in a unified framework.