Universality and Diversity in Human Song Samuel A. Mehr1,2*, Manvir

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Universal Dependencies and Morphology for Hungarian – and on the Price of Universality

Universal Dependencies and Morphology for Hungarian – and on the Price of Universality Veronika Vincze1,2, Katalin Ilona Simkó1, Zsolt Szántó1, Richárd Farkas1 1University of Szeged Institute of Informatics 2MTA-SZTE Research Group on Artificial Intelligence [email protected] {vinczev,szantozs,rfarkas}@inf.u-szeged.hu Abstract plementation of multilingual morphological and syntactic parsers from a computational linguistic In this paper, we present how the princi- point of view. Furthermore, it can enhance studies ples of universal dependencies and mor- on linguistic typology and contrastive linguistics. phology have been adapted to Hungarian. From the viewpoint of syntactic parsing, the We report the most challenging grammati- languages of the world are usually categorized ac- cal phenomena and our solutions to those. cording to their level of morphological richness On the basis of the adapted guidelines, (which is negatively correlated with configura- we have converted and manually corrected tionality). At one end, there is English, a strongly 1,800 sentences from the Szeged Tree- configurational language while there is Hungarian bank to universal dependency format. We at the other end of the spectrum with rich mor- also introduce experiments on this manu- phology and free word order (Fraser et al., 2013). ally annotated corpus for evaluating auto- In this paper, we present how UD principles were matic conversion and the added value of adapted to Hungarian, with special emphasis on language-specific, i.e. non-universal, an- Hungarian-specific phenomena. notations. Our results reveal that convert- Hungarian is one of the prototypical morpho- ing to universal dependencies is not nec- logically rich languages thus our UD principles essarily trivial, moreover, using language- can provide important best practices for the uni- specific morphological features may have versalization of other morphologically rich lan- an impact on overall performance. -

Supporting Online Material

Supplementary Materials for Distinct regimes of particle and virus abundance explain face mask efficacy for COVID-19 Yafang Cheng†,*, Nan Ma*, Christian Witt, Steffen Rapp, Philipp Wild, Meinrat O. Andreae, Ulrich Pöschl, Hang Su†. * These authors contributed equally to this work. † Correspondence to: H.S. ([email protected]) and Y.C. ([email protected]) This PDF file includes: Supplementary Text S1 to S6 Figs. S1 to S7 Tables S1 to S7 1 Supplementary Text S1. Scenarios in Leung et al. (2020) Leung et al. (2020) reported an average of 5 to 17 coughs during the 30-min exhaled breath collection for virus-infected participants (10). Taking the particle size distribution given in Fig. 2, we calculate that one person can emit a total number of 9.31e5 to 2.72e6 particles in a 30 min sampling period. Note that particles > 100 µm were not considered here, and the volume concentrations of particles in the “droplet” mode (2.44e-4 mL, with 4.29e-5 to 2.45e-3 mL in 5% to 95% confidence level) overwhelm those in the “aerosol” mode (7.68e-7 mL, with 3.37e-7 to 5.24e-6 mL in 5% to 95% confidence level). S2. Virus concentration in Leung et al. (2020) Many samples in Leung et al. (2020) return a virus load signal below the detection limit (10). Thus we adopted an alternative approach, using the statistical distribution, i.e., percentage of positive cases, to calculate the virus concentration. Assuming that samples containing more than 2 viruses (Leung et al., 2020 used 100.3# as undetectable values in their statistical analysis) can be detected and the virus number in the samples follows a Poisson distribution, the fraction of positive samples (containing at least 3 viruses) can be calculated with pre-assumed virus concentration per particle volume. -

Robert GADEN: Slim GAILLARD

This discography is automatically generated by The JazzOmat Database System written by Thomas Wagner For private use only! ------------------------------------------ Robert GADEN: Robert Gaden -v,ldr; H.O. McFarlane, Karl Emmerling, Karl Nierenz -tp; Eduard Krause, Paul Hartmann -tb; Kurt Arlt, Joe Alex, Wolf Gradies -ts,as,bs; Hans Becker, Alex Beregowsky, Adalbert Luczkowski -v; Horst Kudritzki -p; Harold M. Kirchstein -g; Karl Grassnick -tu,b; Waldi Luczkowski - d; recorded September 1933 in Berlin 65485 ORIENT EXPRESS 2.47 EOD1717-2 Elec EG2859 Robert Gaden und sein Orchester; recorded September 16, 1933 in Berlin 108044 ORIENTEXPRESS 2.45 OD1717-2 --- Robert Gaden mit seinem Orchester; recorded December 1936 in Berlin 105298 MEIN ENTZÜCKENDES FRÄULEIN 2.21 ORA 1653-1 HMV EG3821 Robert Gaden mit seinem Orchester; recorded October 1938 in Berlin 106900 ICH HAB DAS GLÜCK GESEHEN 2.12 ORA3296-2 Elec EG6519 Robert Gaden mit seinem Orchester; recorded November 1938 in Berlin 106902 SIGNORINA 2.40 ORA3571-2 Elec EG6567 106962 SPANISCHER ZIGEUNERTANZ 2.45 ORA 3370-1 --- Robert Gaden mit seinem Orchester; Refraingesang: Rudi Schuricke; recorded September 1939 in Berlin 106907 TAUSEND SCHÖNE MÄRCHEN 2.56 ORA4169-1 Elec EG7098 ------------------------------------------ Slim GAILLARD: "Swing Street" Slim Gaillard -g,vib,vo; Slam Stewart -b; Sam Allen -p; Pompey 'Guts' Dobson -d; recorded February 17, 1938 in New York 9079 FLAT FOOT FLOOGIE 2.51 22318-4 Voc 4021 Some sources say that Lionel Hampton plays vibraphone. 98874 CHINATOWN MY CHINATOWN -

'I Spy': Mike Leigh in the Age of Britpop (A Critical Memoir)

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Glasgow School of Art: RADAR 'I Spy': Mike Leigh in the Age of Britpop (A Critical Memoir) David Sweeney During the Britpop era of the 1990s, the name of Mike Leigh was invoked regularly both by musicians and the journalists who wrote about them. To compare a band or a record to Mike Leigh was to use a form of cultural shorthand that established a shared aesthetic between musician and filmmaker. Often this aesthetic similarity went undiscussed beyond a vague acknowledgement that both parties were interested in 'real life' rather than the escapist fantasies usually associated with popular entertainment. This focus on 'real life' involved exposing the ugly truth of British existence concealed behind drawing room curtains and beneath prim good manners, its 'secrets and lies' as Leigh would later title one of his films. I know this because I was there. Here's how I remember it all: Jarvis Cocker and Abigail's Party To achieve this exposure, both Leigh and the Britpop bands he influenced used a form of 'real world' observation that some critics found intrusive to the extent of voyeurism, particularly when their gaze was directed, as it so often was, at the working class. Jarvis Cocker, lead singer and lyricist of the band Pulp -exemplars, along with Suede and Blur, of Leigh-esque Britpop - described the band's biggest hit, and one of the definitive Britpop songs, 'Common People', as dealing with "a certain voyeurism on the part of the middle classes, a certain romanticism of working class culture and a desire to slum it a bit". -

The Globalization of K-Pop: the Interplay of External and Internal Forces

THE GLOBALIZATION OF K-POP: THE INTERPLAY OF EXTERNAL AND INTERNAL FORCES Master Thesis presented by Hiu Yan Kong Furtwangen University MBA WS14/16 Matriculation Number 249536 May, 2016 Sworn Statement I hereby solemnly declare on my oath that the work presented has been carried out by me alone without any form of illicit assistance. All sources used have been fully quoted. (Signature, Date) Abstract This thesis aims to provide a comprehensive and systematic analysis about the growing popularity of Korean pop music (K-pop) worldwide in recent years. On one hand, the international expansion of K-pop can be understood as a result of the strategic planning and business execution that are created and carried out by the entertainment agencies. On the other hand, external circumstances such as the rise of social media also create a wide array of opportunities for K-pop to broaden its global appeal. The research explores the ways how the interplay between external circumstances and organizational strategies has jointly contributed to the global circulation of K-pop. The research starts with providing a general descriptive overview of K-pop. Following that, quantitative methods are applied to measure and assess the international recognition and global spread of K-pop. Next, a systematic approach is used to identify and analyze factors and forces that have important influences and implications on K-pop’s globalization. The analysis is carried out based on three levels of business environment which are macro, operating, and internal level. PEST analysis is applied to identify critical macro-environmental factors including political, economic, socio-cultural, and technological. -

3 Feet High and Rising”--De La Soul (1989) Added to the National Registry: 2010 Essay by Vikki Tobak (Guest Post)*

“3 Feet High and Rising”--De La Soul (1989) Added to the National Registry: 2010 Essay by Vikki Tobak (guest post)* De La Soul For hip-hop, the late 1980’s was a tinderbox of possibility. The music had already raised its voice over tensions stemming from the “crack epidemic,” from Reagan-era politics, and an inner city community hit hard by failing policies of policing and an underfunded education system--a general energy rife with tension and desperation. From coast to coast, groundbreaking albums from Public Enemy’s “It Takes a Nation of Millions to Hold Us Back” to N.W.A.’s “Straight Outta Compton” were expressing an unprecedented line of fire into American musical and political norms. The line was drawn and now the stage was set for an unparalleled time of creativity, righteousness and possibility in hip-hop. Enter De La Soul. De La Soul didn’t just open the door to the possibility of being different. They kicked it in. If the preceding generation took hip-hop from the park jams and revolutionary commentary to lay the foundation of a burgeoning hip-hop music industry, De La Soul was going to take that foundation and flip it. The kids on the outside who were a little different, dressed different and had a sense of humor and experimentation for days. In 1987, a trio from Long Island, NY--Kelvin “Posdnous” Mercer, Dave “Trugoy the Dove” Jolicoeur, and Vincent “Maseo, P.A. Pasemaster Mase and Plug Three” Mason—were classmates at Amityville Memorial High in the “black belt” enclave of Long Island were dusting off their parents’ record collections and digging into the possibilities of rhyming over breaks like the Honey Drippers’ “Impeach the President” all the while immersing themselves in the imperfections and dust-laden loops and interludes of early funk and soul albums. -

Copyright by Daniel Sherwood Sotelino 2006 Choro Paulistano and the Seven-String Guitar: an Ethnographic History

Copyright by Daniel Sherwood Sotelino 2006 Choro Paulistano and the Seven-String Guitar: an Ethnographic History by Daniel Sherwood Sotelino, B.A. Master’s Report Presented to the Faculty of the Graduate School of The University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Ethnomusicology Master of Music The University of Texas at Austin May 2006 Choro Paulistano and the Seven-String Guitar: an Ethnographic History Approved by Supervising Committee: Dedication To Dr. Gerard Béhague Friend and Mentor Treasure of Knowledge 1937–2005 Acknowledgements I would like to express utmost gratitude to my family–my wife, Mariah, my brother, Marco, and my parents, Fernando & Karen–for supporting my musical and scholarly pursuits for all of these years. I offer special thanks to José Luiz Herencia and the Instituto Moreira Salles, luthier João Batista, the great chorões Israel and Izaías Bueno de Almeida, Luizinho Sete Cordas, Arnaldinho at Contemporânea Instrumentos Musicais, Sandra at the Music Library of UFRJ, Wilson Sete Cordas, the folks at Ó do Borogodó, and everyone at Praça Benedito Calixto, Sesc Pompéia, Pop’s Music, and Cachuera. I am very appreciative of Lúcia for all of her delicious cooking. I am fortunate to have worked with the professors and instructors at the University of Texas-Austin: Dr. Slawek, my advisor, who truly encourages intellectual exploration; Dr. Moore for his candid observations; Dr. Hale for his tireless dedication to teaching; Dr. Mooney, for wonderful research opportunities; Dr. Dell’Antonio for looking out for the interests and needs of students, and for providing valuable insight; Dr. -

Raja Mohan 21M.775 Prof. Defrantz from Bronx's Hip-Hop To

Raja Mohan 21M.775 Prof. DeFrantz From Bronx’s Hip-Hop to Bristol’s Trip-Hop As Tricia Rose describes, the birth of hip-hop occurred in Bronx, a marginalized city, characterized by poverty and congestion, serving as a backdrop for an art form that flourished into an international phenomenon. The city inhabited a black culture suffering from post-war economic effects and was cordoned off from other regions of New York City due to modifications in the highway system, making the people victims of “urban renewal.” (30) Given the opportunity to form new identities in the realm of hip-hop and share their personal accounts and ideologies, similar to traditions in African oral history, these people conceived a movement whose worldwide appeal impacted major events such as the Million Man March. Hip-hop’s enormous influence on the world is undeniable. In the isolated city of Bristol located in England arose a style of music dubbed trip-hop. The origins of trip-hop clearly trace to hip-hop, probably explaining why artists categorized in this genre vehemently oppose to calling their music trip-hop. They argue their music is hip-hop, or perhaps a fresh and original offshoot of hip-hop. Mushroom, a member of the trip-hop band Massive Attack, said, "We called it lover's hip hop. Forget all that trip hop bullshit. There's no difference between what Puffy or Mary J Blige or Common Sense is doing now and what we were doing…” (Bristol Underground Website) Trip-hop can abstractly be defined as music employing hip-hop, soul, dub grooves, jazz samples, and break beat rhythms. -

Walt Whitman, John Muir, and the Song of the Cosmos Jason Balserait Rollins College, [email protected]

Rollins College Rollins Scholarship Online Master of Liberal Studies Theses Spring 2014 The niU versal Roar: Walt Whitman, John Muir, and the Song of the Cosmos Jason Balserait Rollins College, [email protected] Follow this and additional works at: http://scholarship.rollins.edu/mls Part of the American Studies Commons Recommended Citation Balserait, Jason, "The nivU ersal Roar: Walt Whitman, John Muir, and the Song of the Cosmos" (2014). Master of Liberal Studies Theses. 54. http://scholarship.rollins.edu/mls/54 This Open Access is brought to you for free and open access by Rollins Scholarship Online. It has been accepted for inclusion in Master of Liberal Studies Theses by an authorized administrator of Rollins Scholarship Online. For more information, please contact [email protected]. The Universal Roar: Walt Whitman, John Muir, and the Song of the Cosmos A Project Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Liberal Studies by Jason A. Balserait May, 2014 Mentor: Dr. Steve Phelan Reader: Dr. Joseph V. Siry Rollins College Hamilton Holt School Master of Liberal Studies Program Winter Park, Florida Acknowledgements There are a number of people who I would like to thank for making this dream possible. Steve Phelan, thank you for setting me on this path of self-discovery. Your infectious love for wild things and Whitman has changed my life. Joe Siry, thank you for support and invaluable guidance throughout this entire process. Melissa, my wife, thank you for your endless love and understanding. I cannot forget my two furry children, Willis and Aida Mae. -

Singer of the Hollies and Marks An- Thews.Melissa'ssoulfulversion Should Down Home Funkisstillvery Much a Other Change of Pace for Havens

DEDICATED TO THE NEEDS OF THE MUSIC 'RECORD I SINGLES SLEEPERS ALBUMS BOZ SCAGGS, "LIDO SHUFFLE" (prod. by Joe THIN .LIZZY, "DON'T BELIEVE A WORD" (prod. NATALIE COLE, "UNPREDICTABLE."It Wissert)(writers: B.Scaggs & D. by John Alcock)(writer:P.Lynott) is rare that an artist "arrives" on the Paich) (Boz Scaggs/Hudmar, ASCAP) (RSO/Chappell, ASCAP) (2:18). The scene, estabiishes herself with a first (3:40). One of the best tracks from closest that the group has come to release and :mmediately takes a place Scaggs' much acclaimed "Silk De- the infectious,rockingbadboy among the giants of popdom with every grees" album, this one seems the sound of "The Boys Are BackIn indicatio' being that's where she'll re- most likely contender to follow in Town," this "Johnny The Fox" track main for many years. Such has been.thie' thesuccessfulfootstepsof"Low- has already charted in the U.K. Be- case for Ms. Cole, whose remarkable down." Scaggs' easy going vocal lieveit: the boys are back to stay. growth continues unabated with yet a blazes the way. Columbia 3 10491. Mercury 73892. third Ip. Capitol SO -11600 (6.98). DAVID BOWIE, "SOUND AND VISION" (prod. GENESIS, "YOUR OWN SPECIAL WAY" (prod. JETHRO7JIi.L, "SONGS FROM THE )114K)1.1 -,P - by David Bowie & Tony Visconti) by David Hentschel & Genesis) WOOD." .::te tour of smaller halls re- (writers: David Bowie) (Bewlay Bros./ (writer: Michael Rutherford) (Warner cently completed by Tull has put the Fleur, BMI) (3:00). "The man who Bros., ASCAP) (3:03). The group is group in the proper frame of reference fell to earth" is still one step ahead currently enjoying its biggest album for this latest set. -

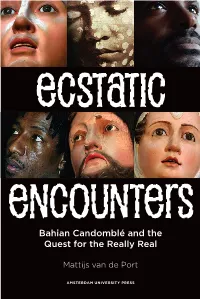

Ecstatic Encounters Ecstatic Encounters

encounters ecstatic encounters ecstatic ecstatic encounters Bahian Candomblé and the Quest for the Really Real Mattijs van de Port AMSTERDAM UNIVERSITY PRESS Ecstatic Encounters Bahian Candomblé and the Quest for the Really Real Mattijs van de Port AMSTERDAM UNIVERSITY PRESS Layout: Maedium, Utrecht ISBN 978 90 8964 298 1 e-ISBN 978 90 4851 396 3 NUR 761 © Mattijs van de Port / Amsterdam University Press, Amsterdam 2011 All rights reserved. Without limiting the rights under copyright reserved above, no part of this book may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise) without the written permission of both the copyright owner and the author of the book. Contents PREFACE / 7 INTRODUCTION: Avenida Oceânica / 11 Candomblé, mystery and the-rest-of-what-is in processes of world-making 1 On Immersion / 47 Academics and the seductions of a baroque society 2 Mysteries are Invisible / 69 Understanding images in the Bahia of Dr Raimundo Nina Rodrigues 3 Re-encoding the Primitive / 99 Surrealist appreciations of Candomblé in a violence-ridden world 4 Abstracting Candomblé / 127 Defining the ‘public’ and the ‘particular’ dimensions of a spirit possession cult 5 Allegorical Worlds / 159 Baroque aesthetics and the notion of an ‘absent truth’ 6 Bafflement Politics / 183 Possessions, apparitions and the really real of Candomblé’s miracle productions 5 7 The Permeable Boundary / 215 Media imaginaries in Candomblé’s public performance of authenticity CONCLUSIONS Cracks in the Wall / 249 Invocations of the-rest-of-what-is in the anthropological study of world-making NOTES / 263 BIBLIOGRAPHY / 273 INDEX / 295 ECSTATIC ENCOUNTERS · 6 Preface Oh! Bahia da magia, dos feitiços e da fé. -

Phil Guerini Joins Jonas Group Entertainment As

May 21, 2021 The MusicRow Weekly Friday, May 21, 2021 Phil Guerini Joins Jonas Group SIGN UP HERE (FREE!) Entertainment As CEO If you were forwarded this newsletter and would like to receive it, sign up here. THIS WEEK’S HEADLINES Phil Guerini Joins Jonas Group Entertainment RCA Records Partners With Sony Music Nashville On Elle King Blake Shelton To Hit The Road In August Kelsea Ballerini To Join Jonas Brothers On Tour Jonas Group Entertainment has announced that music industry power player Phil Guerini has joined the team as CEO, effective immediately. James Barker Band Signs To Villa 40/Sony Music Nashville Based in Nashville, Guerini will be at the helm of Jonas Group Entertainment. The company was founded in 2005 by Kevin Jonas, Sr., Logan Turner Inks With who was managing his sons, Grammy-nominated, multi-Platinum selling UMPG Nashville group Jonas Brothers. Jonas Group Entertainment now houses entertainment, publishing, and marketing divisions, with offices in Nashville Jason Aldean Announces and Charlotte, North Carolina. Back In The Saddle Tour Guerini last oversaw music strategy for Disney Channels Worldwide Lady A To Launch What A networks, alongside all aspects of Radio Disney and Radio Disney Country. He played a vital role within three divisions and five businesses of Song Can Do Tour The Walt Disney Company with highlights that include spearheading the development and launch of the annual Radio Disney Music Awards in Jacob Durrett Inks With Big 2013, and reimagining Radio Disney’s highly-acclaimed artist development Loud And Warner Chappell program Next Big Thing (NBT). Prior to that he spent time in roles at radio stations in Miami and Atlanta, in addition to record labels like MCA More Tour Announcements Records, A&M Records, Chrysalis Records, and East West Records.