CPUs, GPUs, Accelerators and Memory.pdf

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Wind Rose Data Comes in the Form >200,000 Wind Rose Images

Making Wind Speed and Direction Maps. Rich Stromberg, Alaska Energy Authority, [email protected]/907-771-3053. 6/30/2011, Wind Direction Maps.

Wind rose data comes in the form of >200,000 wind rose images across Alaska.

Wind rose data is quantified in very large Excel™ spreadsheets for each region of the state.
• Fields: X Y X_1 Y_1 FILE FREQ1 FREQ2 FREQ3 FREQ4 FREQ5 FREQ6 FREQ7 FREQ8 FREQ9 FREQ10 FREQ11 FREQ12 FREQ13 FREQ14 FREQ15 FREQ16 SPEED1 SPEED2 SPEED3 SPEED4 SPEED5 SPEED6 SPEED7 SPEED8 SPEED9 SPEED10 SPEED11 SPEED12 SPEED13 SPEED14 SPEED15 SPEED16 POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 WEIBC1 WEIBC2 WEIBC3 WEIBC4 WEIBC5 WEIBC6 WEIBC7 WEIBC8 WEIBC9 WEIBC10 WEIBC11 WEIBC12 WEIBC13 WEIBC14 WEIBC15 WEIBC16 WEIBK1 WEIBK2 WEIBK3 WEIBK4 WEIBK5 WEIBK6 WEIBK7 WEIBK8 WEIBK9 WEIBK10 WEIBK11 WEIBK12 WEIBK13 WEIBK14 WEIBK15 WEIBK16

Data set is thinned down to wind power density.
• Fields: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16
• Power1 is the wind power density coming from the north (0 degrees). Power2 is wind power from 22.5 deg., … Power9 is south (180 deg.), etc.

Spreadsheet calculations
X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 Max Wind Dir Prim 2nd Wind Dir Sec
-132.7365 54.4833 0.643 0.767 1.911 4.083
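The sector numbering described in this excerpt (Power1 at 0 degrees, Power2 at 22.5 degrees, Power9 at 180 degrees) can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the sample row is made up rather than taken from the Alaska data, and treating the secondary direction as simply the second-largest sector is an assumption about what the spreadsheet's "2nd" column does.

```python
SECTOR_WIDTH_DEG = 22.5  # 16 sectors; POWER1 is north (0 degrees), per the slides


def sector_to_degrees(sector):
    """Map a 1-based sector number (1..16) to its compass bearing in degrees."""
    return (sector - 1) * SECTOR_WIDTH_DEG


def primary_and_secondary(power):
    """Return (primary_deg, secondary_deg) for 16 wind power density values.

    Assumption: primary is the largest sector, secondary the second largest.
    """
    if len(power) != 16:
        raise ValueError("expected 16 sector values (POWER1..POWER16)")
    # Rank sector indices by power density, highest first.
    ranked = sorted(range(16), key=lambda i: power[i], reverse=True)
    return sector_to_degrees(ranked[0] + 1), sector_to_degrees(ranked[1] + 1)


# Made-up example row, not real data.
row = [0.6, 0.8, 1.9, 4.1, 9.5, 3.2, 1.0, 0.7,
       7.3, 2.2, 1.1, 0.9, 0.5, 0.4, 0.3, 0.2]
print(primary_and_secondary(row))  # (90.0, 180.0)
```

In this made-up row the strongest sector is POWER5 (east, 90 degrees) and the second strongest is POWER9 (south, 180 degrees).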

GPU Developments 2018

GPU Developments 2018 © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR.

2021 Proxy Statement

2021 Proxy Statement: Notice of Annual Stockholders’ Meeting

Investor Engagement. To see how we responded to your feedback, see page 41.

Who We Met With: Total Contacted 50.4% O/S; Total Engaged 39.5% O/S; Director Engaged 29.7% O/S. Intel’s outstanding shares (o/s) calculated as of September 30, 2020.

We are proud of our long-standing and robust investor engagement program. Our integrated outreach team, led by our Investor Relations group, Corporate Responsibility office, Human Resources office, and Corporate Secretary office, engages proactively with our stockholders, maintaining a two-way, year-round governance calendar as shown in this graphic. During 2020, our engagement program addressed our executive compensation program, corporate governance best practices, our Board’s operation and experience, and our commitment to addressing environmental and social responsibility issues that are critical to our business. Through direct participation in our engagement efforts and through briefings from our engagement teams, our directors are able to monitor developments in corporate governance and social responsibility and benefit from our stockholders’ perspectives on these topics. In consultation with our Board, we seek to thoughtfully adopt and apply developing practices in a manner that best supports our business and our culture.

Governance calendar, centered on the Annual Stockholders’ Meeting:
- Summer: Review annual meeting results to determine appropriate next steps, and prioritize post annual meeting investor engagement focus areas
- Fall: Hold post-annual meeting investor meetings to solicit feedback and report to the Board, Compensation Committee, and Corporate Governance and Nominating Committee
- Winter: Incorporate input from investor meetings into annual meeting planning and enhance governance and compensation practices and disclosures when warranted
- Spring: Conduct pre-annual meeting investor meetings to answer questions and understand investor views on proxy matters

Additional detail on specific topics and initiatives we have adopted is discussed under “Investor Engagement” on page 40.

Openpower AI CERN V1.Pdf

[Figure: Moore’s Law and price/performance, 2000 to 2020. Layers shown: Processor Technology, Firmware / OS, Linux, Accelerators, Software, OpenStack, Storage, Network, ...]

POWER8 (with DRAM Memory Chips and Buffer):
- Up to 12 Cores, up to 96 Threads
- L1, L2, L3 + L4 Caches
- Up to 1 TB per socket
- Up to 230 GB/s sustained memory bandwidth
https://www.ibm.com/blogs/systems/power-systems-openpower-enable-acceleration/

[Figure: two POWER8 CPUs, each with System Memory at 115 GB/s and NVLink at 80 GB/s to P100 GPUs with GPU Memory. For comparison: GPU to CPU over PCIe at 16 GB/s, a system bottleneck between Graphics Memory and System Memory.]

IBM advantage: data communication and GPU performance
- POWER8 + Tesla P100+NVLink: 78 ms
- x86 based GPU system: 170 ms
- ImageNet / Alexnet: Minibatch size = 128

ADD: Coherent Accelerator Processor Interface (CAPI)
[Figure: FPGA, CAPP, PCIe, POWER8 Processor ...FPGAs, networking, memory...]
- Typical I/O Model Flow: DD Call, Copy or Pin Source Data, MMIO Notify Accelerator, Acceleration, Poll / Int Completion, Copy or Unpin Result Data, Ret. From DD Completion
- Flow with a Coherent Model: Shared Mem. Notify Accelerator, Acceleration, Shared Memory Completion

[Roadmap: Focus on Enterprise Scale-Up; Focus on Scale-Out and Enterprise; Future. Technology and Performance Driven; Cost and Acceleration Driven; Partner Chip. POWER6 Architecture, POWER7 Architecture, POWER8 Architecture, POWER9 Architecture, POWER10, POWER8/9. 2007 2008 2010 2012 2014 2016 2017 TBD 2018 - 20 2020+. POWER6 POWER6+ POWER7 POWER7+ POWER8 POWER8 P9 SO P9 SU P9 SO. 2 cores 2 cores 8 cores 8 cores 12 cores w/ NVLink]

Lecture Note 7. IA: History and Features

System Programming Lecture Note 7. IA: History and Features. October 30, 2020. Jongmoo Choi, Dept. of Software, Dankook University, http://embedded.dankook.ac.kr/~choijm (Copyright © 2020 by Jongmoo Choi, All Rights Reserved. Distribution requires permission)

Objectives
- Discuss Issues on ISA (Instruction Set Architecture)
  - Opcode and operand addressing modes
- Apprehend how ISA affects system program
  - Context switch, memory alignment, stack overflow protection
- Describe the history of IA (Intel Architecture)
- Grasp the key technologies in recent IA
  - Pipeline and Moore’s law
- Refer to Chapter 3, 4 in the CSAPP and Intel SW Developer Manual

Issues on ISA (1/2): Consideration on ISA (Instruction Set Architecture)
Example: asm_sum: addl $1, %ecx; movl -4(%ebx, %ebp, 4), %eax; call func1; leave
- opcode issues
  - how many? (add vs. inc → RISC vs. CISC)
  - multi functions? (SISD vs. SIMD vs. MIMD …)
- operand issues
  - fixed vs. variable operands
  - fixed: how many?
  - operand addressing modes
- performance issues
  - pipeline
  - superscalar
  - multicore
Instruction formats: opcode (f bits) + operand 1, operand 2, operand 3 (n bits each); opcode (f bits) + operand 1, operand 2 (n bits each); opcode (f bits) + operand 1 (n bits)

Issues on ISA (2/2): Features of IA (Intel Architecture)
- Basically CISC (Complex Instruction Set Computing)
  - Variable length instruction
  - Variable number of operands (0~3)
  - Diverse operand addressing modes
  - Stack based function call
  - Supporting SIMD (Single Instruction Multiple Data)
- Try to take advantage of RISC (Reduced Instruction Set Computing)
  - Micro-operations
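The operand `-4(%ebx, %ebp, 4)` in the excerpt's `movl` uses the AT&T form `disp(base, index, scale)`, which addresses memory at base + index × scale + disp. A minimal Python sketch of that arithmetic follows; the function name and the register values are hypothetical and chosen only for illustration.

```python
def effective_address(disp, base, index, scale):
    """Compute a 32-bit x86 effective address for AT&T syntax disp(base, index, scale)."""
    if scale not in (1, 2, 4, 8):
        raise ValueError("x86 scale factor must be 1, 2, 4, or 8")
    # Wrap to 32 bits, as address arithmetic does in IA-32.
    return (base + index * scale + disp) & 0xFFFFFFFF


# Hypothetical register contents: %ebx = 0x1000, %ebp = 3.
addr = effective_address(disp=-4, base=0x1000, index=3, scale=4)
print(hex(addr))  # 0x1008
```

With these made-up values, `movl -4(%ebx, %ebp, 4), %eax` would load the 4-byte value at address 0x1008 into `%eax`.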

POWER10 Processor Chip

POWER10 Processor Chip

Technology and Packaging:
- 602mm2 7nm Samsung (18B devices)
- 18 layer metal stack, enhanced device
- Single-chip or Dual-chip sockets

Computational Capabilities:
- Up to 15 SMT8 Cores (2 MB L2 Cache / core) (Up to 120 simultaneous hardware threads)
- Up to 120 MB L3 cache (low latency NUCA mgmt)
- 3x energy efficiency relative to POWER9
- Enterprise thread strength optimizations
- AI and security focused ISA additions
- 2x general, 4x matrix SIMD relative to POWER9
- EA-tagged L1 cache, 4x MMU relative to POWER9

Open Memory Interface:
- 16 x8 at up to 32 GT/s (1 TB/s)
- Technology agnostic support: near/main/storage tiers
- Minimal (< 10ns latency) add vs DDR direct attach

PowerAXON Interface:
- 16 x8 at up to 32 GT/s (1 TB/s)
- SMP interconnect for up to 16 sockets
- OpenCAPI attach for memory, accelerators, I/O
- Integrated clustering (memory semantics)

PCIe Gen 5 Interface:

[Die diagram: PowerAXON (x32+4) at the corners; SMT8 cores, each with 2MB L2; local 8MB L3 regions within two 64 MB L3 hemispheres; Memory Signaling (8x8 OMI) on both sides; SMP, Memory, Accel, Cluster, PCI Interconnect; PCIe Gen 5 Signaling (x16).]
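One way to arrive at the "1 TB/s" figure quoted for "16 x8 at up to 32 GT/s" is sketched below. It rests on assumptions the excerpt does not state: one bit per transfer per lane, no encoding or protocol overhead, and both directions counted together. The function name is hypothetical.

```python
def link_bandwidth_gbytes(links, lanes_per_link, gt_per_s, directions=2):
    """Raw aggregate bandwidth in GB/s for a set of serial links.

    Assumes 1 bit per transfer per lane and ignores encoding overhead.
    """
    gbits_per_direction = links * lanes_per_link * gt_per_s
    return gbits_per_direction * directions / 8  # bits -> bytes


print(link_bandwidth_gbytes(16, 8, 32))                # 1024.0 (about 1 TB/s)
print(link_bandwidth_gbytes(16, 8, 32, directions=1))  # 512.0 per direction
```

Under these assumptions, 128 lanes at 32 GT/s give 512 GB/s each way, or roughly 1 TB/s combined.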

Power8 Quser Mspl Nov 2015 Handout

IBM Power Systems. Power Systems Hardware: Today and Tomorrow. November 2015. Mark Olson, [email protected]. © 2015 IBM Corporation

POWER8 Chip

[Figure: Processor Technology Roadmap. POWER4 (180 nm, 130 nm; 2001), POWER5 (130 nm, 90 nm; 2004), POWER6 (65 nm; 2007), POWER7 (45 nm; 2010), POWER8 (22 nm; 2014); Future: POWER9, POWER10, POWER11, each labeled "Or whatever it is named".]

Processor Chip Comparisons

| | POWER5 | POWER6 | POWER7 | POWER7+ | POWER8 |
|---|---|---|---|---|---|
| Year | 2004 | 2007 | 2010 | 2012 | |
| Technology | 130nm SOI | 65nm SOI | 45nm SOI eDRAM | 32nm SOI eDRAM | 22nm SOI eDRAM |
| Compute: Cores | 2 | 2 | 8 | 8 | 12 |
| Compute: Threads | SMT2 | SMT2 | SMT4 | SMT4 | SMT8 |
| Caching: On-chip | 1.9MB (L2) | 8MB (L2) | 2 + 32MB (L2+3) | 2 + 80MB (L2+3) | 6 + 96MB (L2+3) |
| Caching: Off-chip | 36MB (L3) | 32MB (L3) | None | None | 128MB (L4) |
| Bandwidth: Sust. Mem. | 15GB/s | 30GB/s | 100GB/s | 100GB/s | 230GB/s |
| Bandwidth: Peak I/O | 6GB/s | 20GB/s | 40GB/s | 40GB/s | 96GB/s |

Processor Designs

| | POWER5+ | POWER6 | POWER7 | POWER7+ | POWER8 |
|---|---|---|---|---|---|
| Max cores | 4 | 2 | 8 | 8 | 12 |
| Technology | 90nm | 65nm | 45nm | 32nm | 22nm |
| Size | 245 mm2 | 341 mm2 | 567 mm2 | 567 mm2 | 650 mm2 * |
| Transistors | 276 M | 790 M | 1.2 B | 2.1 B | 4.2 B * |
| Frequencies | 1.9 GHz | 4 - 5 GHz | 3 – 4 GHz | Up to 4.4 GHz | Up to 4.1 GHz ** |
| SMT (threads) | 2 | 2 | 4 | 4 | 8 |
| L2 Cache | 1.9MB Shared | 4MB / Core | 256KB / core | 256KB / core | 512KB/core |
| L3 Cache | 36MB | 32MB | 4MB / Core, On chip | 10MB / Core, On chip | 8MB / Core, On chip |
| L4 Cache | -- | -- | -- | -- | Up to 128MB |
| Bandwidth: Sust memory | 15GB/s | 30GB/s | 100GB/s | 100GB/s | 230GB/s |
| Bandwidth: Peak I/O | 6GB/s | 20GB/s | 40GB/s | 40GB/s | 96GB/s |

* with 12-core

Advancement in Processor's Architecture

© June 2021 | IJIRT | Volume 8 Issue 1 | ISSN: 2349-6002

Advancement In Processor’s Architecture

Avinash Maurya1, Prachi Mishra2, Prateek Choudhary3, Prashant Upadhyay4
1,2,3,4 Student of Information Technology, RKGIT, Ghaziabad, India

Abstract - AMD has launched its next-gen Zen 3 lineup which makes it the best and most powerful processor commercial CPU series. The "Zen 3" core architecture delivers a 19% increase in the instructions per clock for the Ryzen 5600x, 5800x, 5900x, and 5950x. The 2nd Gen AMD Ryzen processor's which is there in the AMD Ryzen 7 3700x has one compute die with 8 cores and one input/output die that handles PCI express lanes, SATA, USB, etc. Here, the layout of die matters. In the Zen2 layouts, the L3 cache that is of 32MB lies in the middle, which is separated into 2 parts of 16MB each with 4 cores on either side so, in total, we get 32 MB of L3 cache with 8 total CPU cores. If we look at the gen 3 architecture it's a little bit different, we've got 32 MB of L3 cache just like the … physical layout perspective. The Zen2 AMD Ryzen CPU series had a big improvement in architecture as they moved from 12/14nm processors to 7nm architecture manufactured by TSMC (Taiwan Semiconductor Manufacturing Company). This is a significant modification in the last decade that happened due to the improvement in core architecture, which results in full access of the L3 cache without having to deal with the extra latency involved.

I. INTRODUCTION

In the world of computer performance, there is competition between Intel and AMD processors and both are having a large consumer base, which makes it tricky to get unbiased advice about the best choice for a processor.

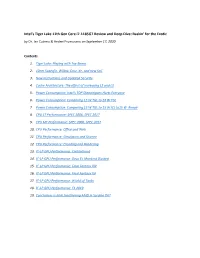

Intel's Tiger Lake 11Th Gen Core I7-1185G7 Review and Deep Dive

Intel’s Tiger Lake 11th Gen Core i7-1185G7 Review and Deep Dive: Baskin’ for the Exotic
by Dr. Ian Cutress & Andrei Frumusanu on September 17, 2020

Contents
1. Tiger Lake: Playing with Toe Beans
2. 10nm Superfin, Willow Cove, Xe, and new SoC
3. New Instructions and Updated Security
4. Cache Architecture: The effect of increasing L2 and L3
5. Power Consumption: Intel’s TDP Shenanigans Hurts Everyone
6. Power Consumption: Comparing 15 W TGL to 28 W TGL
7. Power Consumption: Comparing 15 W TGL to 15 W ICL to 15 W Renoir
8. CPU ST Performance: SPEC 2006, SPEC 2017
9. CPU MT Performance: SPEC 2006, SPEC 2017
10. CPU Performance: Office and Web
11. CPU Performance: Simulation and Science
12. CPU Performance: Encoding and Rendering
13. Xe-LP GPU Performance: Civilization 6
14. Xe-LP GPU Performance: Deus Ex Mankind Divided
15. Xe-LP GPU Performance: Final Fantasy XIV
16. Xe-LP GPU Performance: Final Fantasy XV
17. Xe-LP GPU Performance: World of Tanks
18. Xe-LP GPU Performance: F1 2019
19. Conclusion: Is Intel Smothering AMD in Sardine Oil?

The big notebook launch for Intel this year is Tiger Lake, its upcoming 10nm platform designed to pair a new graphics architecture with a nice high frequency for the performance that customers in this space require. Over the past few weeks, we’ve covered the microarchitecture as presented by Intel at its latest Intel Architecture Day 2020, as well as the formal launch of the new platform in early September. The missing piece of the puzzle was actually testing it, to see if it can match the very progressive platform currently offered by AMD’s Ryzen Mobile.

IBM's Next Generation POWER Processor

IBM’s Next Generation POWER Processor. Hot Chips, August 18-20, 2019. Jeff Stuecheli, Scott Willenborg, William Starke

Proposed POWER Processor Technology and I/O Roadmap (Focus of 2018 talk)

| | POWER7 | POWER7+ | POWER8 | POWER8 w/ NVLink | P9 SO | P9 SU | P9 AIO | P10 |
|---|---|---|---|---|---|---|---|---|
| Architecture | POWER7 | POWER7 | POWER8 | POWER8 | POWER9 | POWER9 | POWER9 | POWER10 |
| Year | 2010 | 2012 | 2014 | 2016 | 2017 | 2018 | 2020 | 2021 |
| Cores | 8 cores | 8 cores | 12 cores | 12 cores | 12/24 cores | 12/24 cores | 12/24 cores | TBA cores |
| Process | 45nm | 32nm | 22nm | 22nm | 14nm | 14nm | 14nm | |
| Micro-Architecture | New | Enhanced | New | Enhanced, With NVLink | New | Enhanced | Enhanced | New |
| Memory | | | | | Direct attach memory | Buffered Memory | New Memory Subsystem | |
| New Process Technology | Yes | Yes | Yes | | Yes | | | Yes |
| Sustained Memory Bandwidth (Up To) | 65 GB/s | 65 GB/s | 210 GB/s | 210 GB/s | 150 GB/s | 210 GB/s | 650 GB/s | 800 GB/s |
| Standard I/O Interconnect | PCIe Gen2 | PCIe Gen2 | PCIe Gen3 | PCIe Gen3 | PCIe Gen4 x48 | PCIe Gen4 x48 | PCIe Gen4 x48 | PCIe Gen5 |
| Advanced I/O Signaling | N/A | N/A | N/A | 20 GT/s, 160GB/s | 25 GT/s, 300GB/s | 25 GT/s, 300GB/s | 25 GT/s, 300GB/s | 32 & 50 GT/s |
| Advanced I/O Architecture | N/A | N/A | CAPI 1.0 | CAPI 1.0, NVLink | CAPI 2.0, OpenCAPI3.0, NVLink | CAPI 2.0, OpenCAPI3.0, NVLink | CAPI 2.0, OpenCAPI4.0, NVLink | TBA |

© 2019 IBM Corporation. Statement of Direction, Subject to Change

Proposed POWER Processor Technology and I/O Roadmap (Focus of today’s talk) POWER7 Architecture POWER8

Starting at the Top of the Hour V2 © IBM Corporation, 2020 1

7/7/2020. AIX User Meeting 1. Starting at the top of the hour. V2. © IBM Corporation, 2020

AIX User Meeting 1, https://ibm.webex.com/meet/nag
Regular Sections in the following areas:
1. Recent News
2. AIX releases you should be running!
3. What you really need to know about ... <various topics>
4. Interesting information sources
5. Questions: That we have answered in the last few weeks!
6. Can you help? Perhaps you know answers or techniques that IBM does not.
7. Fun facts or favourite features!

AIX User Meeting 1, https://ibm.webex.com/meet/nag
Website https://www.ibm.com/support/pages/aix-user-meetings
- No pre-announcements – Once Announced: YES
- No official statements
- Yes: Technical Techie Talk
- Informal chat – these are Nigel’s opinions
- Will try leaving the microphones open - so you can ask questions - Unless we have too many people
- Shorter 5 to 10 minute topics, with where to get more information

AIX user meeting
- Aimed at hands-on AIX System admin, AIX operations & architects
- We are going to keep this simple - Just turn up
- Next event: Monday 6th July 2020, 3 pm BST (British Summer Time = UTC+1)
- Browse to https://ibm.webex.com/meet/nag
- To be recorded for replay

njmon (& nmon) user meeting
- Aimed at AIX and Linux performance monitoring techies
- We are going to keep this simple - Just turn up
- Next event: Monday 6th July 2020, 4:30 pm BST (British Summer Time = UTC+1)
- Browse to https://ibm.webex.com/meet/nag
- To be recorded for replay

AIX User Meeting 1: Recent News 1.

POWER Processor

POWER Processor Technology Overview. Myron Slota, POWER Systems, IBM Systems. © 2017 IBM Corporation

Quarter Century of POWER: Legacy of Leadership Innovation Driving Client Value
[Figure: POWER processor timeline, 1990 to 2015, across the Workstation, Business, and Modern UNIX Era tracks, with process nodes from 1.0um down to 22nm. Processors shown: POWER1, RSC, POWER2, P2SC, POWER3 -630, POWER4/4+, POWER5/5+, POWER6, POWER7/7+, POWER8; RS64I Apache, RS64II North Star, RS64III Pulsar, RS64IV Sstar; Cobra, Muskie, A10, A35; PC 601, 603, 604e.]

IBM Optimized Semiconductor Technology
World class technology with value-added features for server business. POWER9 is built on 14nm finFET technology transitioned to GlobalFoundries.
- 17-layer copper wire
- Silicon On Insulator
  - Faster Transistor, Less Noise
- On-chip eDRAM (14nm)
  - 6x latency improvement
  - No off-chip signaling required
  - 8x bandwidth improvement
  - 3x less area than SRAM
  - 5x less energy than SRAM
- Dense interconnect
  - Faster connections
  - Low latency distance paths
  - High density complex circuits
  - 2X wire per transistor
[Figure: DT eDRAM Cell]

“IBM is committed to meeting the rising demands of cognitive systems and cloud computing. GF’s leading performance in 7LP process technology, reflecting our joint Research collaboration, will allow IBM Power and Mainframe systems to push beyond limitations to provide high-performance computing solutions while aggressively pursuing 5nm to advance our leadership for years to come.” Tom Rosamilia, Senior Vice President, IBM Systems