Questioning Gender Studies on Chess

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

World's Top-10 Chess Players Battle It out in 4-Day

WORLD’S TOP-10 CHESS PLAYERS BATTLE IT OUT IN 4-DAY TOURNAMENT IN LEUVEN (BELGIUM) Leuven, Belgium – Wednesday, 11 May 2016 – The greatest chess tournament ever staged in Belgium, Your Next Move Grand Chess Tour, will take place in the historic Town Hall of Leuven from Friday 17 June until Monday 20 June. The best chess players in the world at the moment will take part in the tournament: World Champion Magnus Carlsen, former World Champions Viswanathan Anand, Vladimir Kramnik and Veselin Topalov, as well as Fabiano Caruana, Anish Giri, Maxime Vachier- Lagrave, Hikaru Nakamura, Aronian Levon and Wesley So. The players will compete in a Rapid Chess and Blitz Chess tournament during the 4 days. The prize money for the tournament is $ 150.000 (€ 134.100). Your Next Move Grand Chess Tour is part of the the Grand Chess Tour 2016, a series of 4 chess events organized worldwide (Paris - France, Leuven - Belgium, Saint Louis – USA and London - UK). This tournament being held in Belgium is truly uniqe and is ‘the greatest chess event ever staged in Belgium’. Never before have the 10 smartest, fastest and strongest chess players of the moment – coming from Norway, Russia, USA, France, Netherland, Bulgaria, Armenia and India – competed against each-other in Belgium. Chess fans will be able to enjoy the experience of seeing the greatest players compete live in Leuven or watch the streaming broadcast, complete with grandmaster commentary. Your Next Move, a non-profit organization and the organizer of the event in Leuven, promotes chess as an educational tool for children and youngsters in Belgium. -

2000/4 Layout

Virginia Chess Newsletter 2000- #4 1 GEORGE WASHINGTON OPEN by Mike Atkins N A COLD WINTER'S NIGHT on Dec 14, 1799, OGeorge Washington passed away on the grounds of his estate in Mt Vernon. He had gone for a tour of his property on a rainy day, fell ill, and was slowly killed by his physicians. Today the Best Western Mt Vernon hotel, site of VCF tournaments since 1996, stands only a few miles away. One wonders how George would have reacted to his name being used for a chess tournament, the George Washington Open. Eighty-seven players competed, a new record for Mt Vernon events. Designed as a one year replacement for the Fredericksburg Open, the GWO was a resounding success in its initial and perhaps not last appearance. Sitting atop the field by a good 170 points were IM Larry Kaufman (2456) and FM Emory Tate (2443). Kudos to the validity of 1 the rating system, as the final round saw these two playing on board 1, the only 4 ⁄2s. Tate is famous for his tactics and EMORY TATE -LARRY KAUFMAN (13...gxf3!?) 14 Nh5 gxf3 15 Kaufman is super solid and FRENCH gxf3 Nf8 16 Rg1+ Ng6 17 Rg4 rarely loses except to brilliancies. 1 e4 e6 2 Nf3 d5 3 Nc3 Nf6 Bd7 18 Kf1 Nd8 19 Qd2 Bb5 Inevitably one recalls their 4 e5 Nfd7 5 Ne2 c5 6 d4 20 Re1 f5 21 exf6 Bb4 22 f7+ meeting in the last round at the Nc6 7 c3 Be7 8 Nf4 cxd4 9 Kxf7 23 Rf4+ Nxf4 24 Qxf4+ 1999 Virginia Open, there also cxd4 Qb6 10 Be2 g5 11 Ke7 25 Qf6+ Kd7 26 Qg7+ on on the top board. -

Chess-Moves-July-August-2011.Pdf

ECF Under 18 and Under 13 County Championships The 2011 ECF Under 18 and Under 13 County Championships were hosted by outgoing 2010 Under 18 winners Berkshire at Eton College, which kindly provided a venue excellently suited for this prestigious jun- ior competition. The event attracted 192 players, many travelling far from north, south, east and west, with 9 teams of 12 participating in the Under 18 event, and 14 teams of 6 in the Under 13 event. The younger event was split between an Open section and a Minor with an average grade ceiling of 80, which broadened participation even fur- ther, encouraging inclusion of a number of plucky contestants years below the age limit. The different age groups were, as in previous years, faced with different event formats. The seniors did battle over a measured two rounds with 75 minutes per player on the clock, whilst the younger sections engaged in four rounds of 30 minute-a-side rapidplay. In each case, the available time was valued, and there were more exciting finishes than early exits ... (continued on Page 7) tact the ECF in confidence. I can also recommend From the Director’s desk that you join The Friends of Chess, a subscription- The annual British Championships this based organisation that supports British participation month in Sheffield will be the in international chess - to find out more visit strongest Championships ever held, http://friendsofchess.wordpress.com/ with (as I write) 12 Grandmasters and or ring John Philpott on 020 8527 4063 14 International Masters. This feat was not a coincidence - it took spon- - Adam Raoof, Director of Home Chess sorship (thank you to Darwin Strategic and to CJ) to achieve that. -

1979 September 29

I position with International flocked to see; To sorri{ of position and succumbed to · Chess - Masters Jonathan Speelman the top established players John Littlewood. In round> and Robert · Bellin until in · they represented vultures I 0, as Black in a Frerich (' . round eight the sensational come to witness the greatest. again, Short drew with Nunn. Short caught happened. As White against "humiliation" of British chess In the last round he met. Short, Miles selected an indif• ~r~a~! • 27-yeai:-old Robert Bellin, THIS YEAR's British Chain- an assistant at his Interzonal ferent line against the French Next up was defending also on 7½ points. Bellin · pionship was one of the most tournament. More quietly, at defence and was thrashed off champion '. Speelman, stood to win the champion- · sensational ever. The stage the other end of the scale, 14- the board. amusingly described along _ ship on tie-break if they drew was set when Grandmaster year-old Nigel Short scraped _ Turmoil reigned! Short had with Miles as· "having the the game as he had faced J - Tony Miles flew in from an in because the selected field great talent and an already physique of a boxer" in pub• stronger opposition earlier in international in Argentina . was then· extended to 48 formidable reputation - but lic information leaflets. Short the event. Against Bellin, and decided to exercise his players. no one had -foreseen this. He defeated Speelman as well to Short rattled off his moves special last-minute entry op- Miles began impressively, .couldn't possibly · win the take the sole lead on seven like · a machinegun in the tion, apparently because he. -

Chess-Moves-November

November / December 2006 NEWSLETTER OF THE ENGLISH CHESS FEDERATION £1.50 European Union Individual Chess Championships Liverpool World Museum Wednesday 6th September to Friday 15th September 2006 FM Steve Giddins reports on round 10 Nigel Short became the outright winner of the 2006 EU Championship, by beating Mark Hebden in the 10th round, whilst his main rivals could only draw. The former world title challenger later declared himself “extremely chuffed” at having won on his first appearance in an international tournament in his home country, since 1989. Hebden is a player whose opening repertoire is well-known, and has been almost constant for his entire chess-playing life. As Black against 1 e4, he plays only 1...e5, usually either the Marshall or a main line Chigorin. Short avoided these with 3 Bc4, secure in the knowledge that Hebden only ever plays 3...Nf6. Over recent years, just about every top-level player has abandoned the Two Knights Defence, on the basis that Black does not have enough compensation after 4 Ng5. Indeed, after the game, Short commented that “The Two Knights just loses a pawn!”, and he added that anybody who played the line regularly as Black “is taking their life in their hands”. Hebden fought well, but never really had enough for his pawn, and eventually lost the ending. Meanwhile, McShane and Sulskis both fought out hard draws with Gordon and Jones respectively. Unlike Short, McShane chose to avoid a theoretical dispute and chose the Trompowsky. He did not achieve much for a long time, and although a significant outb of manoeuvring eventually netted him an extra pawn in the N+P ending, Black’s king was very active and he held the balance. -

Chess Moves.Qxp

ChessChess MovesMoveskk ENGLISH CHESS FEDERATION | MEMBERS’ NEWSLETTER | July 2013 EDITION InIn thisthis issueissue ------ 100100 NOTNOT OUT!OUT! FEAFEATURETURE ARARTICLESTICLES ...... -- thethe BritishBritish ChessChess ChampionshipsChampionships 4NCL4NCL EuropeanEuropean SchoolsSchools 20132013 -- thethe finalfinal weekendweekend -- picturespictures andand resultsresults GreatGreat BritishBritish ChampionsChampions TheThe BunrBunrattyatty ChessChess ClassicClassic -- TheThe ‘Absolute‘Absolute ChampionChampion -- aa looklook backback -- NickNick PertPert waswas therethere && annotatesannotates thethe atat JonathanJonathan Penrose’Penrose’ss championshipchampionship bestbest games!games! careercareer MindedMinded toto SucceedSucceed BookshelfBookshelf lookslooks atat thethe REALREAL secretssecrets ofof successsuccess PicturedPictured -- 20122012 BCCBCC ChampionsChampions JovankJovankaa HouskHouskaa andand GawainGawain JonesJones PhotogrPhotographsaphs courtesycourtesy ofof BrendanBrendan O’GormanO’Gorman [https://picasawe[https://picasaweb.google.com/105059642136123716017]b.google.com/105059642136123716017] CONTENTS News 2 Chess Moves Bookshelf 30 100 NOT OUT! 4 Book Reviews - Gary Lane 35 Obits 9 Grand Prix Leader Boards 36 4NCL 11 Calendar 38 Great British Champions - Jonathan Penrose 13 Junior Chess 18 The Bunratty Classic - Nick Pert 22 Batsford Competition 29 News GM Norm for Yang-Fang Zhou Congratulations to 18-year-old Yang-Fan Zhou (far left), who won the 3rd Big Slick International (22-30 June) GM norm section with a score of 7/9 and recorded his first grandmaster norm. He finished 1½ points ahead of GMs Keith Arkell, Danny Gormally and Bogdan Lalic, who tied for second. In the IM norm section, 16-year-old Alan Merry (left) achieved his first international master norm by winning the section with an impressive score of 7½ out of 9. An Englishman Abroad It has been a successful couple of months for England’s (and the world’s) most travelled grandmaster, Nigel Short. In the Sigeman & Co. -

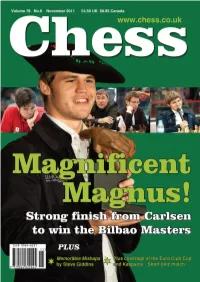

CHESS-Nov2011 Cbase.Pdf

November 2011 Cover_Layout 1 03/11/2011 14:52 Page 1 Contents Nov 2011_Chess mag - 21_6_10 03/11/2011 11:59 Page 1 Chess Contents Chess Magazine is published monthly. Founding Editor: B.H. Wood, OBE. M.Sc † Editorial Editor: Jimmy Adams Malcolm Pein on the latest developments in chess 4 Acting Editor: John Saunders ([email protected]) Executive Editor: Malcolm Pein Readers’ Letters ([email protected]) You have your say ... Basman on Bilbao, etc 7 Subscription Rates: United Kingdom Sao Paulo / Bilbao Grand Slam 1 year (12 issues) £49.95 The intercontinental super-tournament saw Ivanchuk dominate in 2 year (24 issues) £89.95 Brazil, but then Magnus Carlsen took over in Spain. 8 3 year (36 issues) £125.00 Cartoon Time Europe Oldies but goldies from the CHESS archive 15 1 year (12 issues) £60.00 2 year (24 issues) £112.50 Sadler Wins in Oslo 3 year (36 issues) £165.00 Matthew Sadler is on a roll! First, Barcelona, and now Oslo. He annotates his best game while Yochanan Afek covers the action 16 USA & Canada 1 year (12 issues) $90 London Classic Preview 2 year (24 issues) $170 We ask the pundits what they expect to happen next month 20 3 year (36 issues) $250 Interview: Nigel Short Rest of World (Airmail) Carl Portman caught up with the English super-GM in Shropshire 22 1 year (12 issues) £72 2 year (24 issues) £130 Kasparov - Short Blitz Match 3 year (36 issues) £180 Garry and Nigel re-enacted their 1993 title match at a faster time control in Belgium. -

Read Book Garry Kasparov on My Great Predecessors

GARRY KASPAROV ON MY GREAT PREDECESSORS: PT. 4 PDF, EPUB, EBOOK Garry Kasparov | 496 pages | 01 Jan 2005 | EVERYMAN CHESS | 9781857443950 | English | London, United Kingdom Garry Kasparov on My Great Predecessors: Pt. 4 PDF Book Whether you've loved the book or not, if you give your honest and detailed thoughts then people will find new books that are right for them. Community Reviews. Zlatko Dimitrioski rated it it was amazing Aug 31, As always the analysis is top notch and the anecdotal stories are great! Jovany Agathe rated it liked it Nov 13, Both are great players and I would give Fischer and edge due to his results and his work ethic, whereas Kasparov could also deserve the top billing as the strongest player of his generation, a generation that was weened on the games of Fischer and others and played against players who had a deeper understanding of the game. For these reasons alone, I would call it a significant book, perhaps even one of this year's best. The primary value in Kasparov's series is that he teaches the most important classics volume 1 covers Greko through Alekhine. In that case, we can't The indefatigable Staunton, who had long dreamed of organizing a tournament of the leading players in the world, decided to make use of a convenient occasion - the Great Industrial Exhibition in London right from the moment that Prince Albert proposed it in In this book, a must for all serious chessplayers, Kasparov analyses deeply Fischer's greatest games and assesses the legacy of this great American genius. -

The Nigel Short Interview

Exclusive Interview with GM Nigel Short MBE Carl Portman Chess Correspondent for Defence Focus Magazine Nigel Short is an elite English chess Grandmaster earning the title at the age of 19. He is often regarded as the strongest English player of the 20th century as he was ranked third in the world, from January 1988 – July 1989 and in 1993, he challenged Garry Kasparov for the World Chess Championship in London. In October 2011, he gave a 34 board simultaneous chess display against Shropshire chess players winning every game except for two, which were drawn. Just before the event, I interviewed Nigel exclusively for Defence FOCUS magazine – the in-house journal of the Ministry of Defence, read by soldiers, sailors, airmen and civilians across the world. Hello Nigel, thanks for taking the time to talk to me today. You’ve been busy lately. Why are you doing a tour of the UK giving simultaneous exhibitions? Obviously for one thing it pays, but more than that there’s often a big gap in terms of contact between club players and the professionals. Very often they don’t even see them. With what I am doing you get that contact. It’s not like that in most other sports; you watch your football team - for example Arsenal on TV. You only ever see them on a bus! This is a way of closing the gulf. You are playing again in the London Chess Classic this year. Have you any surprises up your sleeve? I have played twice and finished last on both occasions. -

Download the London Chess Classic

Chess.Jan.aw.3/1/11 3/1/11 22:05 Page 1 www.chess.co.uk Volume 75 No.10 London Chess Classic 2010 Souvenir Issue January 2011 £3.95 UK $9.95 Canada Carlsen edges out McShane and Anand in Classic thriller Exclusive annotations by PLUS Luke McShane and Vishy Anand Remembering of their wins against Magnus Carlsen Larry Evans SPECIAL OFFER Subscribe to CHESS Magazine click here (12 issues per year) RRP OFFER PRICE* United Kingdom £44.95 £25 Europe £54.95 £35 USA & Canada $90 $60 Rest of World (AIRMAIL) £64.95 £40 COVER PRICE OF EACH ISSUE - £3.95 *offer is only valid to customers who have never had a subscription to CHESS in the past Click here to order a printed copy of the January 2011 issue of CHESS Magazine (London Chess Classic Souvenir Issue) CHESS Magazine - January 2011 issue - RRP £3.95 UK's most popular CHESS Magazine - established 1935! All the coverage of the 2010 London Chess Classic plus: - Off The Shelf: Books of 2010 - Sean Marsh reflects on the top chess titles of 2010... Kasparov, Aagaard, Nunn, etc... - The Multiple Whammy Part 3 - by David LeMoir. Sacrifices come in all shapes and sizes. - Sympathy for Kramnik - Mike Hughes reflects on Vlad’s big blunder against Fritz - 4NCL British Team League - Andrew Greet reports on the opening weekend Click here to order back-issues of CHESS Magazine £3.95 each Click here to order souvenirs from the 2010 London Chess Classic - Official Tournament Programme - London Chess Classic T-Shirt - London Chess Classic Mug - London Chess Classic Pen Contents Jan 2011_Chess mag - 21_6_10 04/01/2011 10:46 Page 1 Chess Contents Chess Magazine is published monthly. -

BC Junior Championship Submissions

Chess Canada 2015.01 2 Chess Canada Chess Canada (CCN) is the elec- Chess Canada tronic newsletter of the Chess Federation of Canada. Opinions 2015.01 Next Issue... expressed in it are those of the You Gotta See This... (2) credited authors and/or editor, You Gotta See This... and do not necessarily reflect Pt 3: those of the CFC, its Governors, 2014 News Makers agents or employees, living or ...................................................... 11 Canadian Chess dead. Endgame Defence Year in Review subscriptions ..................................................... 32 CCN is distributed by email to Coded Messages? Profile: Qiyu Zhou CFC members who have submit- .................................................... 42 ted their email address to the Coming Soon... CFC: [email protected] Around Canada Student Issue · World U16 Teams BC Junior Championship submissions .................................................. 43 · BC Junior CCN is looking for contributions: tournament reports, photos, an- · 2014 Pan-Ams notated games. For examples, Appendix · Canadian University Ch see this issue or read the 2013.06 · Nicholas Vettese anada Appendix for other ideas. World Championship Blunders .................................................. 50 C suggestions Cover: Magnus Carlsen mosaic If you have an idea for a story you Columns Upcoming Events ..................................... 3 would like to write, email me: every photo of inter- [email protected] Editor’s notes .......................................... 5 national chess appear- -

Please Kindly Find Below Iran Chess Federation Statement in Response to the Proposed Resolution by Mr

TO: FIDE President, FIDE Council, All esteemed FIDE members Associations, Please kindly find below Iran Chess Federation statement in response to the proposed resolution by Mr. Nigel Short, FIDE Vice President and England Chess Federation to the 2020 FIDE Online General Assembly: Introduction: Iran Chess Federation has taken extensive measures in recent years to develop chess in Iran and its 45,252 FIDE members, it worth to consider this number of members makes up about 5% of the total FIDE chess community around the world. Iran is in top place in the list of organizers of Rated tournaments in the world with more than 1000 Rated tournaments per year. This means chess is very popular among Iranian people and has many professional followers. Iran hold the 2017 Women's World championship, while it was repeatedly delayed for nearly a year due to the lack of a host, at the total cost of 900,000 USD (nine hundred thousand dollars). This is an evidence how Iran is interested in supporting to expand the chess very closely with the FIDE. In response to FIDE letter on 06/08/2020, Iran Chess Federation sent its reply on 06/22/2020 and has not explained how it never violated the Olympic Charter. And Iran has tried its best to expand the field of chess and restore hope among 80 million Iranians during the Corona pandemic outbreak. Please noted that in Iran there is no prohibiting law for not competing with any other country and this is a personal beliefs of the players who do not participate in some matches.