Episode 3.09 – UTF-8 Encoding and Unicode Code Points

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


1 Introduction 1

The Unicode® Standard Version 13.0 – Core Specification To learn about the latest version of the Unicode Standard, see http://www.unicode.org/versions/latest/. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. Unicode and the Unicode Logo are registered trademarks of Unicode, Inc., in the United States and other countries. The authors and publisher have taken care in the preparation of this specification, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. © 2020 Unicode, Inc. All rights reserved. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction. For information regarding permissions, inquire at http://www.unicode.org/reporting.html. For information about the Unicode terms of use, please see http://www.unicode.org/copyright.html. The Unicode Standard / the Unicode Consortium; edited by the Unicode Consortium. — Version 13.0. Includes index. ISBN 978-1-936213-26-9 (http://www.unicode.org/versions/Unicode13.0.0/) 1. -

Base64 Character Encoding and Decoding Modeling

Base64 Character Encoding and Decoding Modeling Isnar Sumartono1, Andysah Putera Utama Siahaan2, Arpan3 Faculty of Computer Science, Universitas Pembangunan Panca Budi Jl. Jend. Gatot Subroto Km. 4,5 Sei Sikambing, 20122, Medan, Sumatera Utara, Indonesia Abstract: Security is crucial to maintaining the confidentiality of information. Secure information is information that should not become known to untrusted persons, especially information concerning the state and the government. This information is often transmitted over a public network. If the data is not secured in advance, it can easily be intercepted and its contents learned by whoever stole it. The method used to secure data is a cryptographic system that changes plaintext into ciphertext. The Base64 algorithm is one of the encryption processes that is ideal for use in data transmission. The ciphertext obtained is an arrangement of characters that have been tabulated. These tables have been designed to facilitate the delivery of data during transmission. By applying this algorithm, errors would be avoided, and security would also be ensured. Keywords: Base64, Security, Cryptography, Encoding I. INTRODUCTION Security and confidentiality are important aspects of an information system [9][10]. The information sent is expected to be received only by those who have the right to it. Information will be useless if it is intercepted or hijacked by an unauthorized person during transmission [7]. A public network is prone to interception and hijacking [1][2]. Data transmission technology has developed rapidly over time. Security is necessary for an organization or company in order to maintain the integrity of its data and information. -
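
The tabulated character arrangement mentioned in this abstract can be illustrated with a minimal Python sketch. It assumes the standard Base64 table from RFC 4648, which the excerpt does not spell out; the function name `b64_encode` and the sample message are hypothetical. The mapping uses no key, so anyone holding the same table can reverse it.

```python
import base64
import string

# The 64-character table from RFC 4648 (an assumption; the excerpt does not
# list its table): A-Z, a-z, 0-9, '+', '/'.
ALPHABET = string.ascii_uppercase + string.ascii_lowercase + string.digits + "+/"


def b64_encode(data: bytes) -> str:
    """Encode bytes by table lookup: three input bytes become four characters."""
    out = []
    for i in range(0, len(data), 3):
        chunk = data[i:i + 3]
        pad = 3 - len(chunk)  # 0, 1, or 2 bytes missing from the final group
        # Pack the zero-padded group into one 24-bit integer.
        n = int.from_bytes(chunk + b"\x00" * pad, "big")
        # Split the integer into four 6-bit indexes into the table.
        chars = [ALPHABET[(n >> shift) & 0x3F] for shift in (18, 12, 6, 0)]
        if pad:
            chars[-pad:] = "=" * pad  # '=' marks the missing bytes
        out.extend(chars)
    return "".join(out)


message = "Secure data".encode("utf-8")  # hypothetical sample message
encoded = b64_encode(message)
print(encoded)
# Decoding with the standard library recovers the original bytes.
print(base64.b64decode(encoded) == message)
```

Every output character comes from a 64-symbol ASCII subset, which is what makes the result safe to pass through text-only channels.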

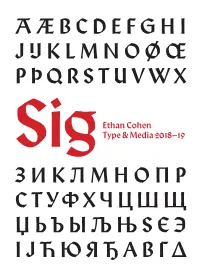

Sig Process Book

Sig Process Book. Ethan Cohen, Type & Media 2018–19. A Æ B C D E F G H I J IJ K L M N O Ø Œ P Þ Q R S T U V W X Y Z А Б В Г Ґ Д Е Ж З И К Л М Н О П Р С Т У Ф Х Ч Ц Ш Щ Џ Ь Ъ Ы Љ Њ Ѕ Є Э І Ј Ћ Ю Я Ђ Α Β Γ Δ SIG: A Revival of Rudolf Koch’s Wallau. Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague). INTRODUCTION “I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” RUDOLF KOCH. Project Overview: Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry. -

Package Mathfont V. 1.6 User Guide Conrad Kosowsky December 2019 [email protected]

Package mathfont v. 1.6 User Guide Conrad Kosowsky December 2019 [email protected] For easy, off-the-shelf use, type the following in your document preamble and compile using XeLaTeX or LuaLaTeX: \usepackage[⟨font name⟩]{mathfont} Abstract The mathfont package provides a flexible interface for changing the font of math-mode characters. The package allows the user to specify a default unicode font for each of six basic classes of Latin and Greek characters, and it provides additional support for unicode math and alphanumeric symbols, including punctuation. Crucially, mathfont is compatible with both XeLaTeX and LuaLaTeX, and it provides several font-loading commands that allow the user to change fonts locally or for individual characters within math mode. Handling fonts in TeX and LaTeX is a notoriously difficult task. Donald Knuth originally designed TeX to support fonts created with Metafont, and while subsequent versions of TeX extended this functionality to postscript fonts, Plain TeX's font-loading capabilities remain limited. Many, if not most, LaTeX users are unfamiliar with the fd files that must be used in font declaration, and the minutiae of TeX's \font primitive can be esoteric and confusing. LaTeX2ε's New Font Selection System (nfss) implemented a straightforward syntax for loading and managing fonts, but LaTeX macros overlaying a TeX core face the same versatility issues as Plain TeX itself. Fonts in math mode present a double challenge: after loading a font either in Plain TeX or through the nfss, defining math symbols can be unintuitive for users who are unfamiliar with TeX's \mathcode primitive. -

A Ruse Secluded Character Set for the Source

Mukt Shabd Journal ISSN No: 2347-3150 A Ruse Secluded character set for the Source Mr. J Purna Prakash1, Assistant Professor Mr. M. Rama Raju2, Assistant Professor Christu Jyothi Institute of Technology & Science Abstract We are rich in data but poor in information, typically on the World Wide Web and in data streams. Analyzing data that comes in different forms effectively and efficiently is a challenging task. Searching the Internet for knowledge that matches an exact keyword, for example with a search engine, is a big task. Nowadays the Unicode Transformation Format (UTF) has been extended to UTF-16 and UTF-32, which helps to create as many special characters as we want. China has the GB 18030 character set, and recently fewer websites in China use the ASCII format. When searching for some keywords, we are unable to get the exact webpage at the top of the search engine results. We face this problem in particular with results announcements, notifications, latest news, and newly released products. Government websites especially are not shown on the front page. To spare ordinary people this trap, we require a special character set to match the exact unique keyword. Most keywords are encoded in the ASCII format. When searching for the keyword "cbse net results", thousands of websites will share the common keyword "cbse net results". The matching keyword is already encoded in the ASCII format on all of these websites. Most government websites do not offer search engine optimization. A unique keyword needs to be matched for government, banking, institute, and online exam purposes. The proposal is to create a character set from A to Z and a to z for the purpose of data cleaning. -

ISO Basic Latin Alphabet

ISO basic Latin alphabet The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in[1] various national and international standards and used widely in international communication. The two sets contain the following 26 letters each:[1][2] Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z. Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z. Contents: History; Terminology; Name for Unicode block that contains all letters; Names for the two subsets; Names for the letters; Timeline for encoding standards; Timeline for widely used computer codes supporting the alphabet; Representation; Usage; Alphabets containing the same set of letters; Column numbering; See also; References. History By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1] Terminology Name for Unicode block that contains all letters The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin". -

A Decision Procedure for String to Code Point Conversion

A Decision Procedure for String to Code Point Conversion Andrew Reynolds1, Andres Nötzli2, Clark Barrett2, and Cesare Tinelli1 1 Department of Computer Science, The University of Iowa, Iowa City, USA 2 Department of Computer Science, Stanford University, Stanford, USA Abstract. In text encoding standards such as Unicode, text strings are sequences of code points, each of which can be represented as a natural number. We present a decision procedure for a concatenation-free theory of strings that includes length and a conversion function from strings to integer code points. Furthermore, we show how many common string operations, such as conversions between lowercase and uppercase, can be naturally encoded using this conversion function. We describe our implementation of this approach in the SMT solver CVC4, which contains a high-performance string subsolver, and show that the use of a native procedure for code points significantly improves its performance with respect to other state-of-the-art string solvers. 1 Introduction String processing is an important part of many kinds of software. In particular, strings often serve as a common representation for the exchange of data at interfaces between different programs, between different programming languages, and between programs and users. At such interfaces, strings often represent values of types other than strings, and developers have to be careful to sanitize and parse those strings correctly. This is a challenging task, making the ability to automatically reason about such software and interfaces appealing. Applications of automated reasoning about strings include finding or proving the absence of SQL injections and XSS vulnerabilities in web applications [28, 25, 31], reasoning about access policies in cloud infrastructure [7], and generating database tables from SQL queries for unit testing [29]. -
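
As a rough illustration of the string-to-code-point conversion this abstract describes, the following Python sketch maps one-character strings to their code points and expresses an uppercase conversion through that mapping. The names `to_code` and `ascii_upper` are hypothetical, returning -1 for strings that are not exactly one character long is an assumed convention, and the case conversion covers ASCII letters only. It is not the paper's decision procedure, which reasons about such constraints symbolically inside an SMT solver.

```python
def to_code(s: str) -> int:
    """Return the code point of a one-character string, or -1 otherwise.

    The -1 result for other lengths is an assumed convention for this sketch.
    """
    return ord(s) if len(s) == 1 else -1


def ascii_upper(s: str) -> str:
    """Uppercase ASCII letters by arithmetic on code points (ASCII only)."""
    out = []
    for ch in s:
        c = to_code(ch)
        # 'a'..'z' occupy code points 97..122; 'A'..'Z' sit 32 below them.
        out.append(chr(c - 32) if 97 <= c <= 122 else ch)
    return "".join(out)


print(to_code("A"))
print(to_code("ab"))
print(ascii_upper("code point 9"))
```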

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive University of Zurich Main Library Strickhofstrasse 39 CH-8057 Zurich www.zora.uzh.ch Year: 2017 The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles Moran, Steven ; Cysouw, Michael DOI: https://doi.org/10.5281/zenodo.290662 Posted at the Zurich Open Repository and Archive, University of Zurich ZORA URL: https://doi.org/10.5167/uzh-135400 Monograph The following work is licensed under a Creative Commons: Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. DOI: https://doi.org/10.5281/zenodo.290662 The Unicode Cookbook for Linguists Managing writing systems using orthography profiles Steven Moran & Michael Cysouw Preface This text is meant as a practical guide for linguists, and programmers, who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies. -

PCL PC-8, Code Page 437 Page 1 of 5 PCL PC-8, Code Page 437

PCL PC-8, Code Page 437 (Page 1 of 5). PCL Symbol Set: 10U. Unicode glyph correspondence tables. Contact:[email protected] http://pcl.to http://www.pclviewer.com (c) RedTitan Technology 2005
Each row lists: PC-8 code, Unicode code point, glyph, name (two entries per row).
$21 U0021 ! Exclamation                    $90 U00C9 É Uppercase e acute
$22 U0022 " Neutral double quote           $91 U00E6 æ Lowercase ae diphthong
$23 U0023 # Number                         $92 U00C6 Æ Uppercase ae diphthong
$24 U0024 $ Dollar                         $93 U00F4 ô Lowercase o circumflex
$25 U0025 % Per cent                       $94 U00F6 ö Lowercase o dieresis
$26 U0026 & Ampersand                      $95 U00F2 ò Lowercase o grave
$27 U0027 ' Neutral single quote           $96 U00FB û Lowercase u circumflex
$28 U0028 ( Left parenthesis               $97 U00F9 ù Lowercase u grave
$29 U0029 ) Right parenthesis              $98 U00FF ÿ Lowercase y dieresis
$2A U002A * Asterisk                       $99 U00D6 Ö Uppercase o dieresis
$2B U002B + Plus                           $9A U00DC Ü Uppercase u dieresis
$2C U002C , Comma, decimal separator       $9B U00A2 ¢ Cent sign
$2D U002D - Hyphen                         $9C U00A3 £ Pound sterling
$2E U002E . Period, full stop              $9D U00A5 ¥ Yen sign
$2F U002F / Solidus, slash                 $9E U20A7 ₧ Pesetas
$30 U0030 0 Numeral zero                   $9F U0192 ƒ Florin sign
$31 U0031 1 Numeral one                    $A0 U00E1 á Lowercase a acute
$32 U0032 2 Numeral two                    $A1 U00ED í Lowercase i acute
$33 U0033 3 Numeral three                  $A2 U00F3 ó Lowercase o acute
$34 U0034 4 Numeral four                   $A3 U00FA ú Lowercase u acute
$35 U0035 5 Numeral five                   $A4 U00F1 ñ Lowercase n tilde
$36 U0036 6 Numeral six                    $A5 U00D1 Ñ Uppercase n tilde
$37 U0037 7 Numeral seven                  $A6 U00AA ª Female ordinal (a)
                                           $A7 U00BA º Male ordinal (o)
$38 U0038 -
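
Correspondences of this kind, for example $90 to U00C9 or $9E to U20A7, can be spot-checked with a short Python sketch. It assumes that Python's built-in `cp437` codec matches the PC-8 symbol set for these rows; the helper name `cp437_to_unicode` is hypothetical.

```python
def cp437_to_unicode(byte: int) -> str:
    """Format one code page 437 byte as '$hex Uxxxx glyph'."""
    # Decode a single byte with Python's cp437 codec (assumed to match PC-8).
    ch = bytes([byte]).decode("cp437")
    return f"${byte:02X} U{ord(ch):04X} {ch}"


# A few bytes taken from the table excerpt.
for b in (0x21, 0x90, 0x9E, 0x9F, 0xA4):
    print(cp437_to_unicode(b))
```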

Unicode and Code Page Support

Natural for Mainframes Unicode and Code Page Support Version 4.2.6 for Mainframes October 2009 This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners.
Table of Contents
1 Unicode and Code Page Support
2 Introduction
    About Code Pages and Unicode
    About Unicode and Code Page Support in Natural
    ICU on Mainframe Platforms
3 Unicode and Code Page Support in the Natural Programming Language
    Natural Data Format U for Unicode-Based Data
    Statements
    Logical -

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 a Study for the Library of Congress

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 A Study for the Library of Congress Part 1: New Scripts Jack Cain Senior Consultant Trylus Computing, Toronto 1 Purpose This assessment intends to study the issues and make recommendations on the possible expansion of the character set repertoire for bibliographic records in MARC21 format. 1.1 “Encoding Scheme” vs. “Repertoire” An encoding scheme contains codes by which characters are represented in computer memory. These codes are organized according to a certain methodology called an encoding scheme. The list of all characters so encoded is referred to as the “repertoire” of characters in the given encoding scheme. For example, ASCII is one encoding scheme, perhaps the one best known to the average non-technical person in North America. “A”, “B”, & “C” are three characters in the repertoire of this encoding scheme. These three characters are assigned encodings 41, 42 & 43 in ASCII (expressed here in hexadecimal). 1.2 MARC8 "MARC8" is the term commonly used to refer both to the encoding scheme and its repertoire as used in MARC records up to 1998. The ‘8’ refers to the fact that, unlike Unicode, which is a multi-byte per character code set, the MARC8 encoding scheme is principally made up of multiple one-byte tables in which each character is encoded using a single 8-bit byte. (It also includes the EACC set, which actually uses a fixed length of 3 bytes per character.) (For details on MARC8 and its specifications see: http://www.loc.gov/marc/.) MARC8 was introduced around 1968 and was initially limited to essentially Latin script only. -
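
The ASCII values quoted in this excerpt (41, 42 and 43 in hexadecimal for “A”, “B” and “C”), and the contrast between one byte per character and multiple bytes per character, can be reproduced with a small Python sketch. The helper name `encoded_hex` and the sample character “é” are hypothetical, and UTF-8 and UTF-16 stand in here for Unicode encodings in general; MARC8 itself has no codec in the Python standard library and is not shown.

```python
def encoded_hex(text: str, encoding: str) -> str:
    """Return the bytes of `text` in `encoding` as uppercase hexadecimal."""
    return text.encode(encoding).hex().upper()


# Each ASCII character occupies exactly one byte.
for ch in "ABC":
    print(ch, encoded_hex(ch, "ascii"))

# A character outside ASCII needs more than one byte in UTF-8 and UTF-16.
print(encoded_hex("é", "utf-8"))
print(encoded_hex("é", "utf-16-be"))
```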

Invisible-Punctuation.Pdf

In/visible Punctuation John Lennard UNIVERSITY OF THE WEST INDIES - LENNARD, 121-138 - VISIBLE LANGUAGE 45.1/2 © VISIBLE LANGUAGE, 2011 - RHODE ISLAND SCHOOL OF DESIGN - PROVIDENCE, RHODE ISLAND 02903 ABSTRACT The article offers two approaches to the question of 'invisible punctuation,' theoretical and critical. The first is a taxonomy of modes of punctuational invisibility, identifying denial, repression, habituation, error and absence. Each is briefly discussed and some relations with technologies of reading are considered. The second considers the paragraphing, or lack of it, in Sir Philip Sidney's Apology for Poetry: one of the two early printed editions and at least one of the two MSS are monoparagraphic, a feature always silently eliminated by editors as a supposed carelessness. It is argued that this is improbable