Interdisciplinary Education at Liberal Arts Institutions

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Life and Career Preparation Through a Liberal Arts Education Darcie

Life and Career Preparation through a Liberal Arts Education. Darcie Scales. Word Count: 1169. History & Geography, Bachelor of Arts.

The liberal arts experience was the college environment I craved. The small classrooms, distinct learning environments, and focus on collaboration drew me as no other universities did. Most importantly, public liberal arts schools, like Georgia College, make such an education affordable and accessible. Otherwise, the private universities that dominate the liberal arts can limit the types of people who attend and require students to take on even greater debt.

Of course, the most important aspect of any university is the experience within the classroom. Course layouts varied from professor to professor, making each class a new experience. Often, courses were a mixture of lecture, discussion, collaboration, and student-led topics. This made for a more well-rounded experience, one more representative of real collaborations and workplaces. Without my class experiences, I would not feel as prepared to enter graduate school or the workforce. Interdisciplinary classes allowed me to listen to well-crafted and engaging lectures from my talented professors, and they provided the opportunity for discussion. Several of my history professors were completely engaging, and they made history and its importance come alive for me. Additionally, many of my professors regularly guided students into discussion during lectures, but they also set aside days just for seminars that allotted time for lively discussions pertaining to the materials. In several of my history classes, we debated how primary sources affected our understanding of events and people, in addition to monographs that enlightened the subjects with others’ research.

Do Students Understand Liberal Arts Disciplines?

PERSPECTIVES

DONALD E. ELMORE, JULIA C. PRENTICE, AND CAROL TROSSET

Do Students Understand Liberal Arts Disciplines?

WHAT IS THE EDUCATIONAL PURPOSE of the curricular breadth encouraged at liberal arts institutions? Presumably we want students to acquire a variety of skills and knowledge, but we often claim that most skills are taught “across the curriculum,” and liberal arts colleges tend to downplay disciplinary information when listing their educational goals. In this article, we argue that one important educational outcome should be for students to develop accurate perceptions of the disciplines they study.

The research described here originated when Elmore and Prentice were seniors at Grinnell College. Both were pursuing double majors across academic divisions (chemistry/English and biology/sociology). Both regularly noticed, and were disturbed by, negative and inaccurate impressions of their major fields held by students studying other disciplines. They became interested in C. P. Snow’s concept of “two cultures” (1988, 3), which suggests that “the intellectual life of the whole of western society is increasingly being split” into sciences and humanities. Therefore, we designed a study that would measure students’ perceptions of the various liberal arts disciplines and see whether they changed during the four years of college.

If one of the goals of a broad liberal arts cur-

DONALD E. ELMORE is assistant professor of chemistry at Wellesley College, JULIA C. PRENTICE is a health services research fellow at the Center for Health Quality, Outcomes, and Economic Research at Boston University, and CAROL TROSSET is director of Institutional Research at Hampshire College.

LIBERAL EDUCATION, WINTER 2006, p. 48

Liberal Arts Colleges in American Higher Education

Liberal Arts Colleges in American Higher Education: Challenges and Opportunities

American Council of Learned Societies, ACLS OCCASIONAL PAPER, No. 59

In Memory of Christina Elliott Sorum, 1944-2005

Copyright © 2005 American Council of Learned Societies

Contents

- Introduction (Pauline Yu)
- Prologue
  - The Liberal Arts College: Identity, Variety, Destiny (Francis Oakley)
- I. The Past
  - The Liberal Arts Mission in Historical Context
    - Balancing Hopes and Limits in the Liberal Arts College (Helen Lefkowitz Horowitz)
    - The Problem of Mission: A Brief Survey of the Changing Mission of the Liberal Arts (Christina Elliott Sorum)
    - Response (Stephen Fix)
- II. The Present
  - Economic Pressures
    - The Economic Challenges of Liberal Arts Colleges (Lucie Lapovsky)
    - Discounts and Spending at the Leading Liberal Arts Colleges (Roger T. Kaufman)
    - Response (Michael S. McPherson)
  - Teaching, Research, and Professional Life
    - Scholars and Teachers Revisited: In Continued Defense of College Faculty Who Publish (Robert A. McCaughey)
    - Beyond the Circle: Challenges and Opportunities for the Contemporary Liberal Arts Teacher-Scholar (Kimberly Benston)
    - Response (Kenneth P. Ruscio)
  - Educational Goals and Student Achievement
    - Built To Engage: Liberal Arts Colleges and Effective Educational Practice (George D. Kuh)
    - Selective and Non-Selective Alike: An Argument for the Superior Educational Effectiveness of Smaller Liberal Arts Colleges (Richard Ekman)
    - Response (Mitchell J. Chang)
- III. The Future
  - Five Presidents on the Challenges Lying Ahead
    - The Challenges Facing Public Liberal Arts Colleges (Mary K. Grant)
    - The Importance of Institutional Culture (Stephen R.

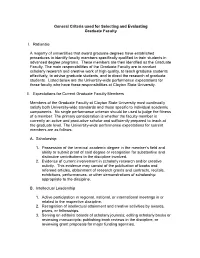

General Criteria Used for Selecting and Evaluating Graduate Faculty I

General Criteria used for Selecting and Evaluating Graduate Faculty

I. Rationale

A majority of universities that award graduate degrees have established procedures to identify faculty members specifically qualified to train students in advanced degree programs. These members are then identified as the Graduate Faculty. The main responsibilities of the Graduate Faculty are to conduct scholarly research and creative work of high quality, to teach graduate students effectively, to advise graduate students, and to direct the research of graduate students. Listed below are the University-wide performance expectations for those faculty who have these responsibilities at Clayton State University.

II. Expectations for Current Graduate Faculty Members

Members of the Graduate Faculty at Clayton State University must continually satisfy both University-wide standards and those specific to individual academic components. No single performance criterion should be used to judge the fitness of a member. The primary consideration is whether the faculty member is currently an active and productive scholar and sufficiently prepared to teach at the graduate level. The University-wide performance expectations for current members are as follows:

A. Scholarship

1. Possession of the terminal academic degree in the member’s field and ability to submit proof of said degree or recognition for substantive and distinctive contributions to the discipline involved.
2. Evidence of current involvement in scholarly research and/or creative activity. This evidence may consist of the publication of books and refereed articles, obtainment of research grants and contracts, recitals, exhibitions, performances, or other demonstrations of scholarship appropriate to the discipline.

B. Intellectual Leadership

1. Active participation in regional, national, or international meetings in or related to the respective discipline.

Liberal Arts Education and Information Technology

VIEWPOINT

Liberal Arts Education and Information Technology: Time for Another Renewal

Liberal arts colleges must accommodate the powerful changes that are taking place in the way people communicate and learn

by Todd D. Kelley

Three significant societal trends will most certainly have a broad impact on information services and higher education for some time to come:

- the increasing use of information technology (IT) for worldwide communications and information creation, storage, and retrieval;
- the continuing rapid change in the development, maturity, and uses of information technology itself; and
- the growing need in society for skilled workers who can understand and take advantage of the new meth-

and that institutional planning and decision making must reflect the realities of rapidly changing information technology. Boards of trustees and senior management must not delay in examining how these changes in society will impact their institutions, both negatively and positively, and in embracing the idea that IT is integral to fulfilling their liberal arts missions in the future.

(2) To plan and support technological change effectively, their colleges must have sufficient high-quality information services staff and must take a flexible and creative approach to recruiting and

Five principal reasons why the future success of liberal arts colleges is tied to their effective use of information technology are:

- Liberal arts colleges have an obligation to prepare their students for lifelong learning and for the leadership roles they will assume when they graduate.
- Liberal arts colleges must demonstrate the use of the most significant approaches to problem solving and communications to have emerged since the invention of the printing press and movable type.

Catalog 2008-09

DePauw University Catalog 2008-09

- Preamble
- Section I: The University
- Section II: Graduation Requirements
- Section III: Majors, Minors, Courses
  - School of Music
  - College of Liberal Arts
  - Graduate Programs in Education
- Section IV: Academic Policies
- Section V: The DePauw Experience
- Section VI: Campus Living
- Section VII: Admission, Expenses, Financial Aid
- Section VIII: University Personnel

This is a PDF copy of the official DePauw University Catalog, 2008-09, which is available at http://www.depauw.edu/catalog. This reproduction was created on September 15, 2008.

Contact: Dr. Ken Kirkpatrick, Registrar, DePauw University, 313 S. Locust St., Greencastle, IN 46135, [email protected], 765-658-4141

Preamble to the Catalog

Accuracy of Catalog Information

Every effort has been made to ensure that information in this catalog is accurate at the time of publication.

The Magisterium of the Faculty of Theology of Paris in the Seventeenth Century

Theological Studies 53 (1992)

THE MAGISTERIUM OF THE FACULTY OF THEOLOGY OF PARIS IN THE SEVENTEENTH CENTURY

JACQUES M. GRES-GAYER, Catholic University of America

AS THEOLOGIANS know well, the term "magisterium" denotes the exercise of teaching authority in the Catholic Church.[1] The transfer of this teaching authority from those who had acquired knowledge to those who received power[2] was a long, gradual, and complicated process, the history of which has only partially been written. Some significant elements of this history have been overlooked, impairing a full appreciation of one of the most significant semantic shifts in Catholic ecclesiology.

One might well ascribe this mutation to the impetus of the Tridentine renewal and the "second Roman centralization" it fostered.[3] It would be simplistic, however, to assume that this desire by the hierarchy to control better the exposition of doctrine[4] was never challenged. There were serious resistances that reveal the complexity of the issue, as the case of the Faculty of Theology of Paris during the seventeenth century abundantly shows.

[1] F. A. Sullivan, Magisterium (New York: Paulist, 1983) 181-83.

[2] Y. Congar, "Pour une histoire sémantique du terme 'Magisterium'," Revue des Sciences philosophiques et théologiques 60 (1976) 85-98; "Bref historique des formes du 'Magistère' et de ses relations avec les docteurs," RSPhTh 60 (1976) 99-112 (also in Droit ancien et structures ecclésiales [London: Variorum Reprints, 1982]; English trans. in Readings in Moral Theology 3: The Magisterium and Morality [New York: Paulist, 1982] 314-31). In Magisterium and Theologians: Historical Perspectives (Chicago Studies 17 [1978]), see the remarks of Y.

OAKWOOD UNIVERSITY Faculty Handbook

OAKWOOD UNIVERSITY Faculty Handbook (Revised 2008)

Table of Contents

Section I: General Administration Information

- Oakwood University Mission Statement
- Historic Glimpses
- Accreditation
- Affirmative Action and Religious Institution Exemption
- Sexual Harassment
- Drug-free Environment
- Establishment and Dissolution of Departments and Majors

Section II: Policies Governing Faculty Functions

- Faculty Classifications
- Academic Rank
- Promotions

2. Classical Reception Pedagogy in Liberal Arts Education

CUCD Bulletin 46 (2017)

***Teaching the Classical Reception Revolution***

2. Classical Reception Pedagogy in Liberal Arts Education

Emma Cole, University of Bristol

Liberal Arts degrees, sometimes called Bachelor of Arts and Sciences degrees, represent one of the fastest growing programmes in British universities.[1] The courses aim to combine a breadth and depth of study, allowing students to gain a broad, interdisciplinary education within the arts and humanities, and sometimes also the social sciences, with a depth of expertise focused around a specific major, or pathway. In addition, they encourage students to build links between their degree and wider culture and society, incorporating internships, language modules, and study abroad trips as the norm rather than the exception and often as credit-bearing, compulsory elements. Interestingly, classicists have played a remarkably prominent role in the development of these courses.[2] I myself have taught on two such programmes, and in both instances have used the Liberal Arts remit as a framework for classical reception topics. Here I discuss the pedagogical strategies I have employed in Liberal Arts modules, providing a template for other academics wishing to find homes for classical reception topics within non-classics degree structures. In addition, I demonstrate the potential that the interdisciplinary Liberal Arts curricula hold for early-career scholars wanting to engage in research-led teaching. Such teaching provides crucial formative training for junior scholars seeking to progress their academic careers, and I argue that the experience has a flow-on effect in preparing scholars for teaching in a more traditional, single-honours environment.

The rhetoric surrounding British Liberal Arts degrees revolves around the importation of a US-style Liberal Arts college model.

Students | All Main Fields of Study 2016 | Czech Republic | Students | All Main Fields of Study Who We Are

Universum Talent Research 2016

Partner Report | Silesian University in Opava

Czech Edition | Students | All main fields of study

2016 | Czech Republic | Students | All main fields of study

Who We Are

- Present in 60 countries with regional offices in New York City, Paris, Shanghai, Singapore and Stockholm.
- Helping the world's leading organizations strengthen their Employer Brands for over 25 years.
- Surveying more than 1.3 million career-seekers, partnering with thousands of universities and organizations.
- Thought leaders in Employer Branding, publishing content on C-suite level subjects.
- Serving more than 1 700 clients globally, including Fortune 100 companies.
- Full service Employer Branding partner, taking clients from identifying challenges, engaging talent to measuring success.
- Our Employer Branding content is published yearly in renowned media, e.g. WSJ, CNN, Le Monde, BusinessWeek.

Sample client list

Some of the world's most attractive employers

Universum in the Media

Universum Rankings and Thought leadership

Publishers

We help higher educational institutions

Universum is the global leader in the field of employer branding and talent research. Through our market research, consulting and media solutions we aim to close the gap between the expectations of employers and talent, as well as support Higher Education Institutions in their roles. Through our unique

Liberal Arts Colleges

A Classic Education

What is the study of Liberal Arts?

- Approach to Education
- In-depth study of a broad range of subjects
- Intended to increase understanding of:
  - Multiple academic subjects
  - The connections between those subjects
  - How those subjects affect the experiences of people
- Basically, Liberal Arts is the study of humankind in both breadth and depth

What is Liberal Arts?

- Liberal Arts is NOT:
  - The study of the arts exclusively
  - The study of fields from a (politically) liberal point of view
  - A professional, vocational, or technical curriculum
- Some liberal arts colleges have professional, vocational, or technical elements within the context of the liberal arts curriculum
- But that is not their focus

What Does Liberal Arts Seek to Understand?

1. The Natural World

- How scientists devise experiments to test hypotheses
- How to measure those experiments
- How to interpret those measures
- To attempt to find truth and to prove or disprove the hypotheses
- To learn that it is ok to fail
  - You learn more that way!
- That there is wonder in the act of discovery

2. Mathematics, Formal Reasoning, and Logic

- How mathematics is used
  - As the “language” of science
  - By social scientists to test and use models
- Mathematics has its own beauty
  - Fibonacci numbers
  - Fractals!

3. Social Sciences

- Cause and Effect
  - How people influence events
  - How people are influenced by events
  - How social scientists build models to explain human behavior

4. Humanities and Literature

- How humans react to various circumstances
- To become skilled at communicating ideas
- To read closely
- The power of imagination
- How to find bridges across historical and cultural divides
- The human condition
- To value the beauty of words!

5.

A Newsletter for Graduates of the Program of Liberal Studies Vol VI

A Newsletter for Graduates of the Program of Liberal Studies. Vol. VI, No. 1. University of Notre Dame. January, 1982.

The View from 318

Greetings from the Program (its faculty, its students, and staff, Mrs. Mary Etta Rees) in this New Year! It has been a very full and lively year in and around 318. The return address on Programma has already informed you that the Program did change its name. A simple change of dropping the word "General" was finally seen as most desirable, since it was helpful in avoiding misunderstandings of the Program by misassociation and in maintaining continuity with the strong academic and personal tradition in education which the Program has represented at Notre Dame for the past thirty-two years. On the very occasion of changing the name, the faculty indicated that the change did not signify any departure at all from the traditions and characteristic emphases that have made the Program so successful a liberal educator.

The logo you saw on the cover was designed by Richard Houghton, a junior in the Program, to mark the initiation of the new name. It was selected by the faculty from a number of proposals made by students, faculty, and friends of the Program. The septilateral figure represents the traditional seven liberal arts of the trivium (logic, grammar, and rhetoric) and the quadrivium (arithmetic, geometry, music, and astronomy). The liberal arts are the Program's approaches to the circle of knowledge, unified and unbroken. At the center of the Program's effort are the Great Books, the primary sources upon which the liberal arts are practiced and in and through which genuine learning can be attained.