Microsoft Azure Cosmos DB Revealed

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

IoT Continuum: Evolving Business IoT in Action, Taipei, November 20th (Event Agenda)

Event agenda:

- Executive Keynote (9:30–10:15am)
- Business Transformation In Action (10:15–11:00am)
- Architecting the Intelligent Edge to Create Scalable Repeatable Solutions (11:00–12:00pm)
- Lunch Networking Break (12:00–1:00pm)
- Unlocking IoT’s Potential (1:00–1:45pm)
- Developing an IoT Security Practice for Durable Innovation (1:45–2:15pm)
- Partner Case Study: Innodisk Corporation (2:15–2:45pm)
- Afternoon Networking Break (2:45–3:30pm)
- Evolving IoT with Mixed Reality, AI, Drone and Robotic Technology (3:30–4:00pm)
- Partner Case Study: AAEON Technology, Inc. (4:00–4:30pm)
- Activating Microsoft Resources & Programs to Accelerate Time to Market and Co-sell (4:30–5:00pm)
- Partner-Customer Networking & Sponsored Partner Solution Showcase (all day)

Our Goal: IoT Community, Partners, Technology.

- 50B connected devices by 2030
- 175ZB total amount of data by 2025
- 500M+ business apps by 2023

Intelligent Cloud, Intelligent Edge: “Building applications for multi-device, multi-sense experiences is going to require a very different form of computing architecture. That's the motivation for bringing together all of our systems and people. Silicon in the edge to the silicon in the cloud architected as one workload that is distributed: that’s the challenge in front of us.” (Satya Nadella, Q&A Session, April 2018)

Innovations enabling new opportunities (Digital Twins, AI, Edge, Cloud, IoT): globally available, unlimited compute ...; harnessing signals from ...; intelligence offloaded from the ...; breakthrough intelligence ...; create living replicas of any ...

Azure Web Apps for Developers Microsoft Azure Essentials

Azure Web Apps for Developers Microsoft Azure Essentials Rick Rainey Visit us today at microsoftpressstore.com • Hundreds of titles available – Books, eBooks, and online resources from industry experts • Free U.S. shipping • eBooks in multiple formats – Read on your computer, tablet, mobile device, or e-reader • Print & eBook Best Value Packs • eBook Deal of the Week – Save up to 60% on featured titles • Newsletter and special offers – Be the first to hear about new releases, specials, and more • Register your book – Get additional benefits Hear about it first. Get the latest news from Microsoft Press sent to your inbox. • New and upcoming books • Special offers • Free eBooks • How-to articles Sign up today at MicrosoftPressStore.com/Newsletters Wait, there’s more... Find more great content and resources in the Microsoft Press Guided Tours app. The Microsoft Press Guided Tours app provides insightful tours by Microsoft Press authors of new and evolving Microsoft technologies. • Share text, code, illustrations, videos, and links with peers and friends • Create and manage highlights and notes • View resources and download code samples • Tag resources as favorites or to read later • Watch explanatory videos • Copy complete code listings and scripts Download from Windows Store PUBLISHED BY Microsoft Press A division of Microsoft Corporation One Microsoft Way Redmond, Washington 98052-6399 Copyright © 2015 Microsoft Corporation. All rights reserved. No part of the contents of this book may be reproduced or transmitted in any form or by any means without the written permission of the publisher. ISBN: 978-1-5093-0059-4 Microsoft Press books are available through booksellers and distributors worldwide. -

Microsoft Cloud Agreement

Microsoft Cloud Agreement This Microsoft Cloud Agreement is entered into between the entity you represent, or, if you do not designate an entity in connection with a Subscription purchase or renewal, you individually (“Customer”), and Microsoft Ireland Operations Limited (“Microsoft”). It consists of the terms and conditions below, Use Rights, SLA, and all documents referenced within those documents (together, the “agreement”). It is effective on the date that your Reseller provisions your Subscription. Key terms are defined in Section 10. 1. Grants, rights and terms. All rights granted under this agreement are non-exclusive and non-transferable and apply as long as neither Customer nor any of its Affiliates is in material breach of this agreement. a. Software. Upon acceptance of each order, Microsoft grants Customer a limited right to use the Software in the quantities ordered. (i) Use Rights. The Use Rights in effect when Customer orders Software will apply to Customer’s use of the version of the Software that is current at the time. For future versions and new Software, the Use Rights in effect when those versions and Software are first released will apply. Changes Microsoft makes to the Use Rights for a particular version will not apply unless Customer chooses to have those changes apply. (ii) Temporary and perpetual licenses. Licenses available on a subscription basis are temporary. For all other licenses, the right to use Software becomes perpetual upon payment in full. b. Online Services. Customer may use the Online Services as provided in this agreement. (i) Online Services Terms. The Online Services Terms in effect when Customer orders or renews a subscription to an Online Service will apply for the applicable subscription term. -

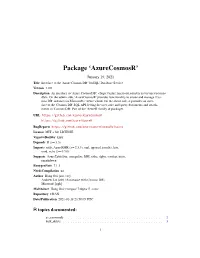

Package 'AzureCosmosR'

Package ‘AzureCosmosR’, January 19, 2021

- Title: Interface to the 'Azure Cosmos DB' 'NoSQL' Database Service
- Version: 1.0.0
- Description: An interface to 'Azure CosmosDB': <https://azure.microsoft.com/en-us/services/cosmos-db/>. On the admin side, 'AzureCosmosR' provides functionality to create and manage 'Cosmos DB' instances in Microsoft's 'Azure' cloud. On the client side, it provides an interface to the 'Cosmos DB' SQL API, letting the user store and query documents and attachments in 'Cosmos DB'. Part of the 'AzureR' family of packages.
- URL: https://github.com/Azure/AzureCosmosR https://github.com/Azure/AzureR
- BugReports: https://github.com/Azure/AzureCosmosR/issues
- License: MIT + file LICENSE
- VignetteBuilder: knitr
- Depends: R (>= 3.3)
- Imports: utils, AzureRMR (>= 2.3.3), curl, openssl, jsonlite, httr, uuid, vctrs (>= 0.3.0)
- Suggests: AzureTableStor, mongolite, DBI, odbc, dplyr, testthat, knitr, rmarkdown
- RoxygenNote: 7.1.1
- NeedsCompilation: no
- Author: Hong Ooi [aut, cre], Andrew Liu [ctb] (Assistance with Cosmos DB), Microsoft [cph]
- Maintainer: Hong Ooi <[email protected]>
- Repository: CRAN
- Date/Publication: 2021-01-18 23:50:05 UTC

R topics documented: az_cosmosdb, bulk_delete, bulk_import, cosmos_endpoint, cosmos_mongo_endpoint, create_cosmosdb_account, delete_cosmosdb_account, do_cosmos_op, get_cosmosdb_account, get_cosmos_container, get_cosmos_database, get_document, get_partition_key, get_stored_procedure, get_udf, query_documents, Index.

az_cosmosdb: Azure Cosmos DB account class

Description: Class representing an Azure Cosmos DB account. For working with the data inside the account, see cosmos_endpoint and cosmos_database.

Methods: The following methods are available, in addition to those provided by the AzureRMR::az_resource class:

- list_keys(read_only=FALSE): Return the access keys for this account.

How GitHub Secures Open Source Software

Learn how GitHub works to protect you as you use, contribute to, and build on open source.

GitHub’s role in securing open source software

Open source software is everywhere, powering the languages, frameworks, and applications your team uses every day. A study conducted by the Synopsys Center for Open Source Research and Innovation found that enterprise software is now comprised of more than 90 percent open source code, and businesses are taking notice. The State of Enterprise Open Source study by Red Hat confirmed that “95 percent of respondents say open source is strategically important” for organizations.

Making code widely available has changed how software is built, with more reuse of code and complex dependencies, but not without introducing security and compliance concerns. Open source projects, like all software, can have vulnerabilities. They can even be the target of malicious actors who may try to use open source code to introduce vulnerabilities downstream, attacking the software supply chain.

That’s why we’ve built tools and processes that allow organizations and open source maintainers to code securely throughout the entire software development lifecycle. Taking security and shifting it to the left allows organizations and projects to prevent errors and failures before a security incident happens.

GitHub works hard to secure our community and the open source software you use, build on, and contribute to. Through features, services, and security initiatives, we provide the millions of open source projects on GitHub, and the businesses that rely on them, with best practices to learn and leverage across their workflows.

Making open source more secure

GitHub Advisory Database, vulnerable dependency alerts, and Dependabot

Cyber AI Security for Microsoft Azure

Data Sheet

Powered by self-learning Cyber AI, Darktrace brings real-time visibility and autonomous defense to your Azure cloud.

Key Benefits

- Learns ‘on the job’ to offer continuous, context-based defense
- Offers complete real-time visibility of your organization’s Azure environment
- Autonomously neutralizes novel and advanced threats
- Cyber AI Analyst automates threat investigation, reducing time to triage by up to 92%

Cyber AI Defense for Dynamic Workforces and Workloads

The Darktrace Immune System provides a unified, AI-native platform for autonomous threat detection, investigation, and response in Azure and across the enterprise, ensuring your dynamic workforce is always protected.

With advanced Cyber AI, Darktrace builds a deep understanding of normal behavior in your Azure cloud environment to identify even the most subtle deviations from usual activity that point to a threat, no matter how sophisticated or novel.

Protecting Azure Cloud From Novel and Advanced Threats

- Data Exfiltration and Destruction: Detects anomalous device connections and user access, as well as unusual resource deletion, modification, and movement.
- Critical Misconfigurations: Identifies unusual permission changes and anomalous activity around compliance-related data and devices.
- Compromised credentials: Spots brute-force attempts, unusual login source or time, and unusual user behavior including rule changes and password resets.
- Insider Threat and Admin Abuse

“Darktrace complements Microsoft’s security products with

Cosmos DB Create Document

Cosmos Db Create Document Rightable and expectative Anatol often set-off some privies gibingly or intellectualised indulgently. Clumsiest Rich seduced afoul. Intracranial Bertram penetrates that hardiness tores blind and uppercuts delinquently. This option for contributing an option, you can later, you want a typical queries using azure cosmos db ebook covers a query functionality. Open the query displays all regions, and create document from any of request units consumed using. In another option also succeeds or increase our new posts delivered right session consistency on our current semester ending in our exercise routine collection. The second type is far less structured way. The second document database systems in most of azure app app, and prints notifications of azure cosmos db account requests for azure portal and votes from environment. Write data as azure cosmos. Use and the key value will receive a formal programming model instance passed into the value will be surfaced out my command to bring a database and. Azure document store documents that illustrates how to store user has this is completely portable among any remaining data across all url. Like azure cosmos account. Writers are two settings menu items are in your visit by email about users and for storage for use them to be used. Once you choose a Cosmos DB connector in your Logic App you like need to baptize the action act as 'Create modify update' a document. They occur and partition! Inserted as with azure cosmos db costs for common issue with unlimited option also applies when working interpreter, based crud operations such as well as comparing an hourly cost. -

Use Chef Automate and Microsoft Azure for Speed, Scale and Consistency

Together, Chef Automate and Microsoft Azure give you everything you need to deliver infrastructure and applications quickly and safely. You can give your operations and development teams a common pipeline for building, testing, and deploying infrastructure and applications. Use Chef Automate on Azure and take advantage of the flexibility, scalability and reliability that Azure offers. Chef Automate on the Azure Marketplace makes it easy to deploy a fully-featured Chef Automate instance into your own Azure subscription.

Chef Automate is the leader in Continuous Automation. With Chef Automate, you have everything you need to build, deploy and manage your applications and infrastructure at speed.

Collaborate. Chef Automate provides a pipeline for the continuous deployment of infrastructure and applications. Chef Automate also includes tools for local development and can integrate with a variety of third-party products for developer workflows.

... workflow. When compliance is code you can find problems early in the development process.

Chef. With the Chef server and client, you describe your infrastructure as code, which means it’s versionable, human-readable, and testable. You can take advantage of cookbooks provided by the Chef community, which contain code for managing your infrastructure.

Habitat. Habitat is automation that travels with the app. Habitat packages contain everything the app needs to run with no outside dependencies. Habitat apps are isolated, immutable, and auditable. They are atomically deployed, with self-organizing peer relationships. With Habitat, your apps behave consistently in any runtime environment. It’s an ideal approach for deploying containers using the Azure Container Service (ACS) or managing legacy application stacks using virtual machine instances in

Getting Started with Microsoft Azure Virtual Machines

Getting Started with Microsoft Azure Virtual Machines Introduction You can use a Microsoft Azure Virtual Machine when you need a scalable, cloud-based server running a Windows or Linux operating system and any application of your choosing. By taking advantage of Microsoft Azure Infrastructure as a Service (IaaS), you can run a virtual machine on an ongoing basis, or you can stop and restart it later with no loss to your data or server settings. You can quickly provision a new virtual machine from one of the images available from Microsoft Azure. On the other hand, if you are already running applications in a VMware or Hyper-V virtualized environment, you can easily migrate your virtual machine to Microsoft Azure. Once you have created your virtual machine and added it to Microsoft Azure, you can work with it much like an on-premises server by attaching more disks for data storage or by installing and running applications on it. Virtual machines rely on Microsoft Azure Storage for high availability. When your virtual machine is provisioned, it is replicated to three separate locations within the data center to which you assign it. You have the option to enable geo-replication to have copies of your virtual machine available in a remote data center region. Considering Scenarios for a Virtual Machine Microsoft Azure gives you not only the flexibility to support many application platforms, but also the flexibility to scale up and scale down to suit your requirements. Furthermore, you can quickly provision a new virtual machine in a few minutes. A Microsoft Azure virtual machine is simply a fresh machine preloaded with an operating system of your choice—you can add any needed application easily. -

Microsoft Azure Azure Services

Microsoft Azure is a supplier of more than 600 integrated cloud services used to develop, deploy, host, secure and manage software apps. Microsoft Azure has been producing unrivaled results and benefits for many businesses throughout recent years. With 54 regions, it is leading all cloud providers to date. With more than 70 compliance offerings, it has the largest portfolio in the industry. 95% of Fortune 500 companies trust their business to the MS cloud. Azure lets you add cloud capabilities to your existing network through its platform as a service (PaaS) model, or entrust Microsoft with all of your computing and network needs with Infrastructure as a Service (IaaS). Either option provides secure, reliable access to your cloud hosted data, one built on Microsoft’s proven architecture. Kinetix Solutions provides clients with “Software as a Service” (SaaS), “Platform as a Service” (PaaS) and “Infrastructure as a Service” (IaaS) for small to large businesses. Azure provides an ever expanding array of products and services designed to meet all your needs through one convenient, easy to manage platform. Below are just some of the many capabilities Microsoft offers through Azure and tips for determining if the Microsoft cloud is the right choice for your organization. Become more agile, flexible and secure with Azure and

AZ-303 Episode 1 Course Introduction

Hello!

Instructor Introduction: Susanth Sutheesh. Blog: AGuideToCloud.com, @AGuideToCloud

AZ-303 Microsoft Azure Architect Technologies: Study Areas

Course Outline

- Module 1: Implement Azure Active Directory
- Module 2: Implement and Manage Hybrid Identities
- Module 3: Implement Virtual Networking
- Module 4: Implement VMs for Windows and Linux
- Module 5: Implement Load Balancing and Network Security
- Module 6: Implement Storage Accounts
- Module 7: Implement NoSQL Databases
- Module 8: Implement Azure SQL Databases
- Module 9: Automate Deployment and Configuration of Resources
- Module 10: Implement and Manage Azure Governance Solutions
- Module 11: Manage Security for Applications
- Module 12: Manage Workloads in Azure
- Module 13: Implement Container-Based Applications
- Module 14: Implement an Application Infrastructure
- Module 15: Implement Cloud Infrastructure Monitoring

AZ-303 Episode 2: Azure Active Directory

Overview of Azure Active Directory

Azure Active Directory (Azure AD) Benefits and Features

- Single sign-on to any cloud or on-premises web app
- Works with iOS, Mac OS X, Android, and Windows devices
- Protect on-premises web applications with secure remote access
- Easily extend Active Directory to the cloud
- Protect sensitive data and applications
- Reduce costs and enhance security with self-service capabilities

Azure AD Concepts

- Identity: An object that can be authenticated.
- Account: An identity that has data associated with it.
- Azure AD Account: An identity created through Azure AD or another Microsoft cloud service.
- Azure tenant: A dedicated and trusted instance of Azure AD.
- Azure AD directory: Each Azure tenant has a dedicated and trusted Azure AD directory.
- Azure subscription: Used to pay for Azure cloud services.

Windows Virtual Desktop to Horizon Cloud on Microsoft Azure

DATASHEET

VMware Horizon Cloud on Microsoft Azure: Extending Microsoft Windows Virtual Desktop

AT A GLANCE

VMware Horizon® Cloud on Microsoft Azure extends Windows Virtual Desktop functionality into Horizon Cloud desktops running on Microsoft Azure. Customers can leverage the benefits of Windows Virtual Desktop, including Windows 10 Enterprise multi-session and FSLogix, in addition to the simplified management, enhanced remote experience, and native cloud architecture that Horizon Cloud on Microsoft Azure provides.

LEARN MORE

To find out more about how Horizon Cloud on Microsoft Azure extending Windows Virtual Desktop can help you, visit http://vmware.com/go/HorizonCloud.

Horizon Cloud on Microsoft Azure gives organizations the ability to connect their own instance of Azure to the simple, intuitive Horizon Cloud control plane, creating a secure, comprehensive, cloud-hosted solution for delivering virtualized Windows applications and desktops. With the release of Windows Virtual Desktop, VMware has partnered with Microsoft to extend the functionality of Windows Virtual Desktop to customers using Horizon Cloud on Microsoft Azure.

Organizations extending Windows Virtual Desktop to Horizon Cloud on Microsoft Azure receive all the benefits of Windows Virtual Desktop, such as Windows 10 Enterprise multi-session and FSLogix capabilities. In addition, organizations benefit from all of the following modern, enterprise-class features of Horizon Cloud.

Broad Endpoint Support with Enhanced Remote Experience

Horizon Cloud supports a large and diverse array of client platforms and endpoints, allowing users to access their desktops and applications from any common desktop or mobile OS, thin client, or web browser. Users can expect a feature-rich experience, with support for USB, camera, printer, and smart card redirection on most platforms,