Pattern Extraction from the World Wide Web

Total Pages: 16

File Type: PDF, Size: 1020 Kb

Recommended publications

-

6012904936.Pdf

912288 УДК . ББК .Англ Г Гулов А. П. Olympway. форматов олимпиадных заданий по английскому языку Электронное издание М.: МЦНМО, 8 с. ISBN ---- Учебное пособие предназначено для подготовки к олимпиадам по английскому язы- ку учащихся – классов, включает в себя материалы по разделам «Лексика» и «Грам- матика». Материалы пособия могутбыть использованы для подготовки ко всем этапам олимпиад, от школьного до всероссийского; как при индивидуальных занятиях, так и при работе в классе. Издание адресовано учащимся и учителям средней школы. Подготовлено на основе книги: А. П. Гулов. Olympway. форматов олимпиадных заданий по английскому языку. — М.: МЦНМО, . — ISBN ----. 12+ Издательство Московского центра непрерывного математического образования , Москва, Большой Власьевский пер., , тел. () ––. http://www.mccme.ru © Гулов А. П., . ISBN ---- © МЦНМО, . UNIT 1 Task 1. Choose the correct answer. 1 fi sh in ____ waters (извлекать выгоду) muddy drumly blurred foggy troubled 2 like shooting fi sh in a ____ (очень легко) barrel jar cask teapot kettle 3 need (something) like a fi sh needs a ____ (абсолютно не испытывать потребности в чем-то) car bicycle coach truck scooter 4 there are plenty more fi sh in the ____ (существует много возможностей для успеха) pond lake sea loch river 5 big fi sh in a small ____ (важная персона для небольшой организации) pond lake sea loch river Task 2. Match the two columns. [COLLECTIVE NOUNS] 1 herd A of bees 2 swarm B of fi sh 3 bunch C of dancers 4 shoal D of cattle 5 troupe E of fl owers Task 3. Choose the correct answer. [COMMONLY CONFUSED WORDS] 1 “I’m not insinuating anything,” responded he blandly, “but I angel / angle have to look at things from every ____ there is.” 2 They say he succeeded in making her believe that he was an angel / angle ____ of Retribution. -

Download Placebo English Summer Rain Mp3

Download Placebo English Summer Rain Mp3 Download Placebo English Summer Rain Mp3 1 / 3 2 / 3 Download the album or mp3, watch videos Placebo. All video clips of all ... Get MP3 album, 2003-09-15 ... 1996. 125, Placebo - English Summer Rain, English .... Zu ihrem 20-jährigen Jubiläum veröffentlichen Placebo die Retrospektive ... MP3 Download Amazon ... English Summer Rain (Single Version).. Download Placebo English Summer Rain Mp3 >>> http://urllio.com/y5zo8 c1bf6049bf 8 Jun 2012 - 3 min - Uploaded by PLACEBOSubscribe: .... "English Summer Rain" is the fourth single from Placebo's fourth studio album Sleeping with ... Print/export. Create a book · Download as PDF · Printable version .... Listen English Summer Rain mp3 songs free online by Placebo. Download English Summer Rain on Hungama Music app & get access to Sleeping With Ghosts .... Watch the video for English Summer Rain from Placebo's Sleeping with Ghosts for free, and see the artwork, lyrics and similar artists.. Mendengarkan English Summer Rain (Single Version) oleh Placebo di JOOX sekarang. Lagu dari album A Place For Us To Dream.. Werbefrei streamen oder als CD und MP3 kaufen bei Amazon.de. ... weil er untypisch für dieses Album ist, dessen Perlen english summer rain, this picture, the .... Entdecken Sie English Summer Rain von Placebo bei Amazon Music. Werbefrei streamen oder als CD und MP3 kaufen bei Amazon.de.. 9. The Bitter End 10. Without You I'm Nothing (feat. David Bowie) 11. English Summer Rain 12. Breathe Underwater (Slow) 13. Soulmates 14.. Nghe & tải nhạc English Summer Rain (Single Version) - Placebo | GetLinkAZ MP3, ZWZCAZBO English Summer Rain (Single Version), Placebo Download ... -

The BG News December 4, 1998

Bowling Green State University ScholarWorks@BGSU BG News (Student Newspaper) University Publications 12-4-1998 The BG News December 4, 1998 Bowling Green State University Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news Recommended Citation Bowling Green State University, "The BG News December 4, 1998" (1998). BG News (Student Newspaper). 6417. https://scholarworks.bgsu.edu/bg-news/6417 This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU. FRIDAY,The Dec. 4, 1998 A dailyBG Independent student News press Volume 85* No. 63 Speaker: HIGH: 57 ^k LOW: 50 'Sex is not an , emergency □ The safe sex discussion sponsored by Womyn for Womyn adressed many topics ■ The men's basketball of concern for women. team heads to Eastern Michigan Saturday. By mENE SHARON SCOTT The BG News Birth control methods, tools for safer sex and other issues ■ The women's were addressed at a "Safe Sex" basketball team is ready roundtable Thursday. The dis- for the Cougar BG News Photo/JASON SUGGS cussion was sponsored by Womyn for Womyn. shoot-out. People at Mark's Pub enjoy a drink Thursday. Below, John Tuylka, a senior psychology major takes a shot. Leah McGary, a certified fam- ily nurse practitioner from the Center for Choice, led the discus- Bars claim responsibility sion. ■ The men's swimming McGray emphasized the and diving teams are importance of using a birth con- BATTLE to curb binge drinking trol method. -

Big Hits Karaoke Song Book

Big Hits Karaoke Songs by Artist Karaoke Shack Song Books Title DiscID Title DiscID 3OH!3 Angus & Julia Stone You're Gonna Love This BHK034-11 And The Boys BHK004-03 3OH!3 & Katy Perry Big Jet Plane BHKSFE02-07 Starstruck BHK001-08 Ariana Grande 3OH!3 & Kesha One Last Time BHK062-10 My First Kiss BHK010-01 Ariana Grande & Iggy Azalea 5 Seconds Of Summer Problem BHK053-02 Amnesia BHK055-06 Ariana Grande & Weeknd She Looks So Perfect BHK051-02 Love Me Harder BHK060-10 ABBA Ariana Grande & Zedd Waterloo BHKP001-04 Break Free BHK055-02 Absent Friends Armin Van Buuren I Don't Wanna Be With Nobody But You BHK000-02 This Is What It Feels Like BHK042-06 I Don't Wanna Be With Nobody But You BHKSFE01-02 Augie March AC-DC One Crowded Hour BHKSFE02-06 Long Way To The Top BHKP001-05 Avalanche City You Shook Me All Night Long BHPRC001-05 Love, Love, Love BHK018-13 Adam Lambert Avener Ghost Town BHK064-06 Fade Out Lines BHK060-09 If I Had You BHK010-04 Averil Lavinge Whataya Want From Me BHK007-06 Smile BHK018-03 Adele Avicii Hello BHK068-09 Addicted To You BHK049-06 Rolling In The Deep BHK018-07 Days, The BHK058-01 Rumour Has It BHK026-05 Hey Brother BHK047-06 Set Fire To The Rain BHK021-03 Nights, The BHK061-10 Skyfall BHK036-07 Waiting For Love BHK065-06 Someone Like You BHK017-09 Wake Me Up BHK044-02 Turning Tables BHK030-01 Avicii & Nicky Romero Afrojack & Eva Simons I Could Be The One BHK040-10 Take Over Control BHK016-08 Avril Lavigne Afrojack & Spree Wilson Alice (Underground) BHK006-04 Spark, The BHK049-11 Here's To Never Growing Up BHK042-09 -

(You Gotta) Fight for Your Right (To Party!) 3 AM ± Matchbox Twenty. 99 Red Ballons ± Nena

(You Gotta) Fight For Your Right (To Party!) 3 AM ± Matchbox Twenty. 99 Red Ballons ± Nena. Against All Odds ± Phil Collins. Alive and kicking- Simple minds. Almost ± Bowling for soup. Alright ± Supergrass. Always ± Bon Jovi. Ampersand ± Amanda palmer. Angel ± Aerosmith Angel ± Shaggy Asleep ± The Smiths. Bell of Belfast City ± Kristy MacColl. Bitch ± Meredith Brooks. Blue Suede Shoes ± Elvis Presely. Bohemian Rhapsody ± Queen. Born In The USA ± Bruce Springstein. Born to Run ± Bruce Springsteen. Boys Will Be Boys ± The Ordinary Boys. Breath Me ± Sia Brown Eyed Girl ± Van Morrison. Brown Eyes ± Lady Gaga. Chasing Cars ± snow patrol. Chasing pavements ± Adele. Choices ± The Hoosiers. Come on Eileen ± Dexy¶s midnight runners. Crazy ± Aerosmith Crazy ± Gnarles Barkley. Creep ± Radiohead. Cupid ± Sam Cooke. Don¶t Stand So Close to Me ± The Police. Don¶t Speak ± No Doubt. Dr Jones ± Aqua. Dragula ± Rob Zombie. Dreaming of You ± The Coral. Dreams ± The Cranberries. Ever Fallen In Love? ± Buzzcocks Everybody Hurts ± R.E.M. Everybody¶s Fool ± Evanescence. Everywhere I go ± Hollywood undead. Evolution ± Korn. FACK ± Eminem. Faith ± George Micheal. Feathers ± Coheed And Cambria. Firefly ± Breaking Benjamin. Fix Up, Look Sharp ± Dizzie Rascal. Flux ± Bloc Party. Fuck Forever ± Babyshambles. Get on Up ± James Brown. Girl Anachronism ± The Dresden Dolls. Girl You¶ll Be a Woman Soon ± Urge Overkill Go Your Own Way ± Fleetwood Mac. Golden Skans ± Klaxons. Grounds For Divorce ± Elbow. Happy ending ± MIKA. Heartbeats ± Jose Gonzalez. Heartbreak Hotel ± Elvis Presely. Hollywood ± Marina and the diamonds. I don¶t love you ± My Chemical Romance. I Fought The Law ± The Clash. I Got Love ± The King Blues. I miss you ± Blink 182. -

Completeandleft

MEN WOMEN 1. BA Bryan Adams=Canadian rock singer- Brenda Asnicar=actress, singer, model=423,028=7 songwriter=153,646=15 Bea Arthur=actress, singer, comedian=21,158=184 Ben Adams=English singer, songwriter and record Brett Anderson=English, Singer=12,648=252 producer=16,628=165 Beverly Aadland=Actress=26,900=156 Burgess Abernethy=Australian, Actor=14,765=183 Beverly Adams=Actress, author=10,564=288 Ben Affleck=American Actor=166,331=13 Brooke Adams=Actress=48,747=96 Bill Anderson=Scottish sportsman=23,681=118 Birce Akalay=Turkish, Actress=11,088=273 Brian Austin+Green=Actor=92,942=27 Bea Alonzo=Filipino, Actress=40,943=114 COMPLETEandLEFT Barbara Alyn+Woods=American actress=9,984=297 BA,Beatrice Arthur Barbara Anderson=American, Actress=12,184=256 BA,Ben Affleck Brittany Andrews=American pornographic BA,Benedict Arnold actress=19,914=190 BA,Benny Andersson Black Angelica=Romanian, Pornstar=26,304=161 BA,Bibi Andersson Bia Anthony=Brazilian=29,126=150 BA,Billie Joe Armstrong Bess Armstrong=American, Actress=10,818=284 BA,Brooks Atkinson Breanne Ashley=American, Model=10,862=282 BA,Bryan Adams Brittany Ashton+Holmes=American actress=71,996=63 BA,Bud Abbott ………. BA,Buzz Aldrin Boyce Avenue Blaqk Audio Brother Ali Bud ,Abbott ,Actor ,Half of Abbott and Costello Bob ,Abernethy ,Journalist ,Former NBC News correspondent Bella ,Abzug ,Politician ,Feminist and former Congresswoman Bruce ,Ackerman ,Scholar ,We the People Babe ,Adams ,Baseball ,Pitcher, Pittsburgh Pirates Brock ,Adams ,Politician ,US Senator from Washington, 1987-93 Brooke ,Adams -

A Place for Us to Dream

A Place For Us To Dream Musik | Porträt: Placebo zum 20. Geburtstag Ein Song-Medley der ganz besonderen Art: Zum 20-jährigen Bandjubiläum lassen Brian Molko und Stefan Olsdal nicht nur ordentlich die Bässe klingen und internationale Bühnen mit einer Geburtstagstournee beben, sondern haben noch ganz andere Alternative-Rock-Überraschungen in petto. Von MONA KAMPE »As an artist, you want your venue to be filled with people having a good time. That’s what you live for. It’s the kind of energy that feeds your soul. / Als Künstler möchtest du deine Bühne mit Leuten umgeben, die Spaß haben. Dafür lebst du. Das ist die Energie, die deine Seele nährt.« Es könnte wahrlich kein besseres Statement geben, welches den Spirit der Londoner Indie-Rockband ›Placebo‹ besser verkörpert, als jenes, welches Sänger und Songwriter Brian Molko dem britischen Magazin ›M‹ im Sommer dieses Jahres offenbarte. A Place For Us To Dream A Place For Us To Dream Placebo (2016) Abb: Universal Music Seit ihrer Geburtsstunde 1994 (unter dem Namen ›Ashtray Heart‹) und ihrem Debütalbum ›Placebo‹ im Juni 1996 hat die aktuell zweiköpfige Band um Molko und Bassist Stefan Olsdal weltweit über 12 Million Tonträger verkauft und erhielt 2009 den ›MTV Europe Music Award‹ als ›Best Alternative Act‹. Und das, obwohl das einstige Trio – mit dem ehemaligen Gründungsmitglied Drummer Robert Shultzberg, der die Band bereits 1996 verließ – die Szene Mitte der Neunziger Jahre mit dem androgynen Auftreten des Leadsängers Molko und düsterem Erscheinungsbild – tiefschwarzer Mascara, Eyeliner sowie Nägeln – irritierte. Für allgemeines Staunen sorgte auch die Wahl des Bandnamens ›Placebo‹ (lateinisch: Ich werde gefallen), der eine Scheindroge bezeichnet. -

Revista Solo-Rock Abril 2014

Mayo 2014 RIVAS ROCK Rock versus Fútbol CRÓNICAS: Loquillo The Fuzztones Siniestro Total Johnny Winter The Coup... Entrevistas: Jeff Scott Soto Jorge Salán P.L. Girls 1 Portada de : Chema Pérez Rosendo Mercado Esta revista ha sido posible gracias a: Alex García Alfonso Camarero Caperucita Rock Chema Pérez David Collados Héctor Checa Jackster Javier G. Espinosa José Ángel Romera José Luis Carnes Néstor Daniel Carrasco Santiago Cerezo Salcedo Víctor Martín Iglesias www.solo-rock.com se fundó en 2005 con la intención de contar a nuestra manera qué ocurre en el mundo de la música, poniendo especial atención a los conciertos. Desde entonces hemos cubierto la información de más de dos mil con- ciertos, además de informar de miles de noticias relacionadas con el Rock y la música de calidad en general. Solamente en los dos últimos años hemos estado en más de 600 conciertos, documentados de forma fotográfica y escrita. Los redactores de la web viven el Rock, no del Rock, por lo que sus opi- niones son libres. La web no se responsabiliza de los comentarios que haga cada colaborador si no están expresamente firmados por la misma. Si quieres contactar con nosotros para mandarnos información o promo- cional, puedes hacerlo por correo electrónico o por vía postal. Estas son las direcciones: Correo Electrónico: [email protected] Dirección Postal: Aptdo. Correos 50587 – 28080 Madrid 2 Sumario Crónicas de conciertos Pág. 4 Rivas Rock Pág. 5 Loquillo Pág. 10 The Fuzztones + Dark Colours since 1685 Pág. 13 The Dream Syndicate + Laredo Pág. 16 Siniestro Total Pág. 18 The Coup Pág. -

8123 Songs, 21 Days, 63.83 GB

Page 1 of 247 Music 8123 songs, 21 days, 63.83 GB Name Artist The A Team Ed Sheeran A-List (Radio Edit) XMIXR Sisqo feat. Waka Flocka Flame A.D.I.D.A.S. (Clean Edit) Killer Mike ft Big Boi Aaroma (Bonus Version) Pru About A Girl The Academy Is... About The Money (Radio Edit) XMIXR T.I. feat. Young Thug About The Money (Remix) (Radio Edit) XMIXR T.I. feat. Young Thug, Lil Wayne & Jeezy About Us [Pop Edit] Brooke Hogan ft. Paul Wall Absolute Zero (Radio Edit) XMIXR Stone Sour Absolutely (Story Of A Girl) Ninedays Absolution Calling (Radio Edit) XMIXR Incubus Acapella Karmin Acapella Kelis Acapella (Radio Edit) XMIXR Karmin Accidentally in Love Counting Crows According To You (Top 40 Edit) Orianthi Act Right (Promo Only Clean Edit) Yo Gotti Feat. Young Jeezy & YG Act Right (Radio Edit) XMIXR Yo Gotti ft Jeezy & YG Actin Crazy (Radio Edit) XMIXR Action Bronson Actin' Up (Clean) Wale & Meek Mill f./French Montana Actin' Up (Radio Edit) XMIXR Wale & Meek Mill ft French Montana Action Man Hafdís Huld Addicted Ace Young Addicted Enrique Iglsias Addicted Saving abel Addicted Simple Plan Addicted To Bass Puretone Addicted To Pain (Radio Edit) XMIXR Alter Bridge Addicted To You (Radio Edit) XMIXR Avicii Addiction Ryan Leslie Feat. Cassie & Fabolous Music Page 2 of 247 Name Artist Addresses (Radio Edit) XMIXR T.I. Adore You (Radio Edit) XMIXR Miley Cyrus Adorn Miguel Adorn Miguel Adorn (Radio Edit) XMIXR Miguel Adorn (Remix) Miguel f./Wiz Khalifa Adorn (Remix) (Radio Edit) XMIXR Miguel ft Wiz Khalifa Adrenaline (Radio Edit) XMIXR Shinedown Adrienne Calling, The Adult Swim (Radio Edit) XMIXR DJ Spinking feat. -

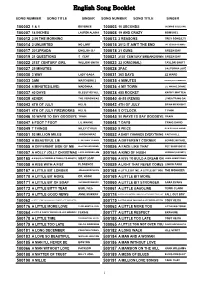

English Song Booklet

English Song Booklet SONG NUMBER SONG TITLE SINGER SONG NUMBER SONG TITLE SINGER 100002 1 & 1 BEYONCE 100003 10 SECONDS JAZMINE SULLIVAN 100007 18 INCHES LAUREN ALAINA 100008 19 AND CRAZY BOMSHEL 100012 2 IN THE MORNING 100013 2 REASONS TREY SONGZ,TI 100014 2 UNLIMITED NO LIMIT 100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ 100017 2012PRADA ENGLISH DJ 100018 21 GUNS GREEN DAY 100019 21 QUESTIONS 5 CENT 100021 21ST CENTURY BREAKDOWN GREEN DAY 100022 21ST CENTURY GIRL WILLOW SMITH 100023 22 (ORIGINAL) TAYLOR SWIFT 100027 25 MINUTES 100028 2PAC CALIFORNIA LOVE 100030 3 WAY LADY GAGA 100031 365 DAYS ZZ WARD 100033 3AM MATCHBOX 2 100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE 100034 4 MINUTES(LIVE) MADONNA 100036 4 MY TOWN LIL WAYNE,DRAKE 100037 40 DAYS BLESSTHEFALL 100038 455 ROCKET KATHY MATTEA 100039 4EVER THE VERONICAS 100040 4H55 (REMIX) LYNDA TRANG DAI 100043 4TH OF JULY KELIS 100042 4TH OF JULY BRIAN MCKNIGHT 100041 4TH OF JULY FIREWORKS KELIS 100044 5 O'CLOCK T PAIN 100046 50 WAYS TO SAY GOODBYE TRAIN 100045 50 WAYS TO SAY GOODBYE TRAIN 100047 6 FOOT 7 FOOT LIL WAYNE 100048 7 DAYS CRAIG DAVID 100049 7 THINGS MILEY CYRUS 100050 9 PIECE RICK ROSS,LIL WAYNE 100051 93 MILLION MILES JASON MRAZ 100052 A BABY CHANGES EVERYTHING FAITH HILL 100053 A BEAUTIFUL LIE 3 SECONDS TO MARS 100054 A DIFFERENT CORNER GEORGE MICHAEL 100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND 100056 A FACE LIKE THAT PET SHOP BOYS 100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM 500164 A KIND OF HUSH HERMAN'S HERMITS 500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF 500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG 100058 A KISS WITH A FIST FLORENCE 100059 A LIGHT THAT NEVER COMES LINKIN PARK 500167 A LITTLE BIT LONGER JONAS BROTHERS 500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES 500170 A LITTLE BIT MORE DR. -

Market Ending Firstseason

Men's soccer Inside Kingham Arts Page 5 comes back to are firstin Campus Life, Pages 2-3 Opinion Page 4 the Blend division Puzzles Page6 See page See page 7 News Pages 1,9-12 5 Sports Pages 7-8 The Thunderword Oct. 19, 2006/Volume 46, No. 4/Highline Community College Happy harvest inDes Moines New college trustee sees position as challenge By Robert Lamirande staff reporter Athis firstBoard ofTrustees meeting Tuesday, Michael Re- geimbal kept up withan agenda that leapt from democracy to student programs to next year's budget. "It was fun,"Regeimbal said with a tone that indicated he re- ally,really meant it. "It was ex- citing." - -Regeimbal previously served on the Highline Foundation and is the newest member on the Board of Trustees; he is replac- ingMike Emerson, who retired from the board. The board is comprised of five people who —are appointed by the governor they provide plans toencour- age Highline's progress and Alicia oversee its operations. Mendez/Thunderword Regeimbal, who has pre- Market customer purchases sweet potatoes a vendor Olsen Farms Potatoes. ADes Moines Farmers from for sided over the Rotary Club and the chamber of commerce in the past, said he was ready for something new. Farmers market ending first season "Been there done that," Re- geimbal said. "I've enjoyed it, and it's been a lot of fun. This By set apart Alicia Mendez inits fivemonths of operation. More than 70 percent of the To themselves from is something Ihave not been in- of the local markets, the Des staff reporter "First off, the opening vendors are farmers, while oth- other volved in, so it's kind of a new market has been an incredibly ers sell everything fromcrafts to Moines market featured live challenge forme." The Des Moines Farmers solid start. -

Karaoke Catalog Updated On: 11/01/2019 Sing Online on in English Karaoke Songs

Karaoke catalog Updated on: 11/01/2019 Sing online on www.karafun.com In English Karaoke Songs 'Til Tuesday What Can I Say After I Say I'm Sorry The Old Lamplighter Voices Carry When You're Smiling (The Whole World Smiles With Someday You'll Want Me To Want You (H?D) Planet Earth 1930s Standards That Old Black Magic (Woman Voice) Blackout Heartaches That Old Black Magic (Man Voice) Other Side Cheek to Cheek I Know Why (And So Do You) DUET 10 Years My Romance Aren't You Glad You're You Through The Iris It's Time To Say Aloha (I've Got A Gal In) Kalamazoo 10,000 Maniacs We Gather Together No Love No Nothin' Because The Night Kumbaya Personality 10CC The Last Time I Saw Paris Sunday, Monday Or Always Dreadlock Holiday All The Things You Are This Heart Of Mine I'm Not In Love Smoke Gets In Your Eyes Mister Meadowlark The Things We Do For Love Begin The Beguine 1950s Standards Rubber Bullets I Love A Parade Get Me To The Church On Time Life Is A Minestrone I Love A Parade (short version) Fly Me To The Moon 112 I'm Gonna Sit Right Down And Write Myself A Letter It's Beginning To Look A Lot Like Christmas Cupid Body And Soul Crawdad Song Peaches And Cream Man On The Flying Trapeze Christmas In Killarney 12 Gauge Pennies From Heaven That's Amore Dunkie Butt When My Ship Comes In My Own True Love (Tara's Theme) 12 Stones Yes Sir, That's My Baby Organ Grinder's Swing Far Away About A Quarter To Nine Lullaby Of Birdland Crash Did You Ever See A Dream Walking? Rags To Riches 1800s Standards I Thought About You Something's Gotta Give Home Sweet Home