Practical Concurrency Testing

Recommended publications

WORDS MADE FLESH Code, Culture, Imagination Florian Cramer

WORDS MADE FLESH Code, Culture, Imagination Florian Cramer Media Design Research Piet Zwart Institute institute for postgraduate studies and research Willem de Kooning Academy Hogeschool Rotterdam ABSTRACT: Executable code existed centuries before the invention of the computer in magic, Kabbalah, musical composition and experimental poetry. These practices are often neglected as a historical pretext of contemporary software culture and electronic arts. Above all, they link computations to a vast speculative imagination that encompasses art, language, technology, philosophy and religion. These speculations in turn inscribe themselves into the technology. Since even the most simple formalism requires symbols with which it can be expressed, and symbols have cultural connotations, any code is loaded with meaning. This booklet writes a small cultural history of imaginative computation, reconstructing both the obsessive persistence and contradictory mutations of the phantasm that symbols turn physical, and words are made flesh. Media Design Research Piet Zwart Institute institute for postgraduate studies and research Willem de Kooning Academy Hogeschool Rotterdam http://www.pzwart.wdka.hro.nl The author wishes to thank Piet Zwart Institute Media Design Research for the fellowship on which this book was written. Editor: Matthew Fuller, additional corrections: T. Peal Typeset by Florian Cramer with LaTeX using the amsbook document class and the Bitstream Charter typeface. Front illustration: Permutation table for the pronunciation of God’s name, from Abraham Abulafia’s Or HaSeichel (The Light of the Intellect), 13th century © 2005 Florian Cramer, Piet Zwart Institute Permission is granted to copy, distribute and/or modify this document under the terms of any of the following licenses: (1) the GNU General Public License as published by the Free Software Foundation; either version 2 of the License, or any later version. -

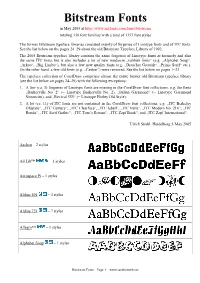

Bitstream Fonts in May 2005 at http://www.myfonts.com/fonts/bitstream, Totaling 350 Font Families with a Total of 1357 Font Styles

Bitstream Fonts in May 2005 at http://www.myfonts.com/fonts/bitstream totaling 350 font families with a total of 1357 font styles The former Bitstream typeface libraries consisted mainly of forgeries of Linotype fonts and of ITC fonts. See the list below on the pages 24–29 about the old Bitstream Typeface Library of 1992. The 2005 Bitstream typeface library contains the same forgeries of Linotype fonts as formerly and also the same ITC fonts, but it also includes a lot of new mediocre „rubbish fonts“ (e.g. „Alphabet Soup“, „Arkeo“, „Big Limbo“), but also a few new quality fonts (e.g. „Drescher Grotesk“, „Prima Serif“ etc.). On the other hand, a few old fonts (e.g. „Caxton“) were removed. See the list below on pages 1–23. The typeface collection of CorelDraw comprises almost the entire former old Bitstream typeface library (see the list below on pages 24–29) with the following exceptions: 1. A few (ca. 3) forgeries of Linotype fonts are missing in the CorelDraw font collections, e.g. the fonts „Baskerville No. 2“ (= Linotype Baskerville No. 2), „Italian Garamond“ (= Linotype Garamond Simoncini), and „Revival 555“ (= Linotype Horley Old Style). 2. A lot (ca. 11) of ITC fonts are not contained in the CorelDraw font collections, e.g. „ITC Berkeley Oldstyle“, „ITC Century“, „ITC Clearface“, „ITC Isbell“, „ITC Italia“, „ITC Modern No. 216“, „ITC Ronda“, „ITC Serif Gothic“, „ITC Tom’s Roman“, „ITC Zapf Book“, and „ITC Zapf International“. Ulrich Stiehl, Heidelberg 3-May 2005 Aachen – 2 styles Ad Lib™ – 1 styles Aerospace Pi – 1 styles Aldine -

Advanced Systems Security: Symbolic Execution

Systems and Internet Infrastructure Security Network and Security Research Center Department of Computer Science and Engineering Pennsylvania State University, University Park PA Advanced Systems Security: Symbolic Execution Trent Jaeger Systems and Internet Infrastructure Security (SIIS) Lab Computer Science and Engineering Department Pennsylvania State University Systems and Internet Infrastructure Security (SIIS) Laboratory Page 1

Problem • Programs have flaws ‣ Can we find (and fix) them before adversaries can reach and exploit them? • Then, they become “vulnerabilities”

Vulnerabilities • A program vulnerability consists of three elements: ‣ A flaw ‣ Accessible to an adversary ‣ Adversary has the capability to exploit the flaw • Which one should we focus on to find and fix vulnerabilities? ‣ Different methods are used for each

Is It a Flaw? • Problem: Are these flaws? ‣ Failing to check the bounds on a buffer write? ‣ printf(char *n); ‣ strcpy(char *ptr, char *user_data); ‣ gets(char *ptr) • From man page: “Never use gets().” ‣ sprintf(char *s, const char *template, ...) • From gnu.org: “Warning: The sprintf function can be dangerous because it can potentially output more characters than can fit in the allocation size of the string s.” • Should you fix all of these?

Is It a Flaw? • Problem: Are these flaws? ‣ open( filename, O_CREAT ); -

From Start-ups to Scale-ups: Opportunities and Open Problems for Static and Dynamic Program Analysis

From Start-ups to Scale-ups: Opportunities and Open Problems for Static and Dynamic Program Analysis Mark Harman∗, Peter O’Hearn∗ ∗Facebook London and University College London, UK Abstract—This paper describes some of the challenges and opportunities when deploying static and dynamic analysis at scale, drawing on the authors’ experience with the Infer and Sapienz Technologies at Facebook, each of which started life as a research-led start-up that was subsequently deployed at scale, impacting billions of people worldwide. The paper identifies open problems that have yet to receive significant attention from the scientific community, yet which have potential for profound real world impact, formulating these as research questions that, we believe, are ripe for exploration and that would make excellent topics for research projects. I. INTRODUCTION How do we transition research on static and dynamic analysis techniques from the testing and verification research communities to industrial practice? Many have asked this question, and others related to it. […] research questions that target the most productive intersection we have yet witnessed: that between exciting, intellectually challenging science, and real-world deployment impact. Many industrialists have perhaps tended to regard it unlikely that much academic work will prove relevant to their most pressing industrial concerns. On the other hand, it is not uncommon for academic and scientific researchers to believe that most of the problems faced by industrialists are either boring, tedious or scientifically uninteresting. This sociological phenomenon has led to a great deal of miscommunication between the academic and industrial sectors. We hope that we can make a small contribution by focusing on the intersection of challenging and interesting scientific problems with pressing industrial deployment needs. Our aim is to move the debate beyond relatively unhelpful observations we have typically encountered in, for example, conference -

The Art, Science, and Engineering of Fuzzing: a Survey

The Art, Science, and Engineering of Fuzzing: A Survey Valentin J.M. Manès, HyungSeok Han, Choongwoo Han, Sang Kil Cha, Manuel Egele, Edward J. Schwartz, and Maverick Woo Abstract—Among the many software vulnerability discovery techniques available today, fuzzing has remained highly popular due to its conceptual simplicity, its low barrier to deployment, and its vast amount of empirical evidence in discovering real-world software vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or semantically malformed. While researchers and practitioners alike have invested a large and diverse effort towards improving fuzzing in recent years, this surge of work has also made it difficult to gain a comprehensive and coherent view of fuzzing. To help preserve and bring coherence to the vast literature of fuzzing, this paper presents a unified, general-purpose model of fuzzing together with a taxonomy of the current fuzzing literature. We methodically explore the design decisions at every stage of our model fuzzer by surveying the related literature and innovations in the art, science, and engineering that make modern-day fuzzers effective. Index Terms—software security, automated software testing, fuzzing. 1 INTRODUCTION Ever since its introduction in the early 1990s [152], fuzzing has remained one of the most widely-deployed techniques to discover software security vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or seman- […] Figure 1 on p. 5) and an increasing number of fuzzing studies appear at major security conferences (e.g. [225], [52], [37], [176], [83], [239]). In addition, the blogosphere is filled with many success stories of fuzzing, some of which also contain what we consider to be gems that warrant a permanent place in the literature. -

Chapter 13, Using Fonts with X

13 Using Fonts With X Most X clients let you specify the font used to display text in the window, in menus and labels, or in other text fields. For example, you can choose the font used for the text in fvwm menus or in xterm windows. This chapter gives you the information you need to be able to do that. It describes what you need to know to select display fonts for use with X client applications. Some of the topics the chapter covers are: The basic characteristics of a font. The font-naming conventions and the logical font description. The use of wildcards and aliases for simplifying font specification. The font search path. The use of a font server for accessing fonts resident on other systems on the network. The utilities available for managing fonts. The use of international fonts and character sets. The use of TrueType fonts. Font technology suffers from a tension between what printers want and what display monitors want. For example, printers have enough intelligence built in these days to know how best to handle outline fonts. Sending a printer a bitmap font bypasses this intelligence and generally produces a lower quality printed page. With a monitor, on the other hand, anything more than bitmapped information is wasted. Also, printers produce more attractive print with variable-width fonts. While this is true for monitors as well, the utility of monospaced fonts usually overrides the aesthetic of variable width for most contexts in which we’re looking at text on a monitor. This chapter is primarily concerned with the use of fonts on the screen. -

font-change: Macros to Change Text & Math Fonts in TeX

font•change Version 2015.2 Macros to Change Text & Math fonts in TeX 45 Beautiful Variants Amit Raj Dhawan [email protected] September 2, 2015 This work had been released under Creative Commons Attribution-Share Alike 3.0 Unported License on July 19, 2010. You are free to Share (to copy, distribute and transmit the work) and to Remix (to adapt the work) provided you follow the Attribution and Share Alike guidelines of the licence. For the full licence text, please visit: http://creativecommons.org/licenses/by-sa/3.0/legalcode. When I reach the destination, more than I realize that I have realized the goal, I am occupied with the reminiscences of the journey. It strikes to me again and again, “Isn’t the journey to the goal the real attainment of the goal?” In this way even if I miss goal, I still have attained goal. Contents: Introduction, Usage, Example, AMS Symbols, Available Weights, Warning, Charter, Utopia -

TeX People: The TUG Interviews Project and Book

196 TUGboat, Volume 30 (2009), No. 2 TeX People: The TUG interviews project and book Karl Berry and David Walden Abstract We present the history and evolution of the TUG interviews project. We discuss the interviewing process as well as our methods for creating web pages and a printed book from the interviews, using m4 as a preprocessor targeting either HTML or LaTeX. We also describe some business decisions relating to the book. We don't claim great generality for what we have done, but we hope some of our experience will be educational. 1 Introduction Dave had two ideas in mind when he suggested (originally to Karl) this interview series in 2004: (a) tech- […] 2 The interview method Interview subjects are chosen based on (a) seeking diversity in many dimensions, (b) recommendations from people about who should be interviewed, and (c) potential interview subjects being willing to be interviewed. The interviews have almost all been done via exchange of email using plain text; a couple of exceptions were done in LaTeX. For the first several interviews, Dave sent a more or less complete list of questions (once the interviewee agreed to participate), on the theory that this would minimize the burden on the interviewee. However, having a dozen questions to answer at one time proved to be daunting to interviewees. Thus, Dave switched to a process wherein he sent a couple of standard initial questions (namely, “Tell me a bit about yourself and your history outside of TeX,” and “How and when did you first get involved with TeX?”). -

Static Program Analysis As a Fuzzing Aid

Static Program Analysis as a Fuzzing Aid Bhargava Shastry¹, Markus Leutner¹, Tobias Fiebig¹, Kashyap Thimmaraju¹, Fabian Yamaguchi², Konrad Rieck², Stefan Schmid³, Jean-Pierre Seifert¹, and Anja Feldmann¹ ¹ TU Berlin, Berlin, Germany [email protected] ² TU Braunschweig, Braunschweig, Germany ³ Aalborg University, Aalborg, Denmark Abstract. Fuzz testing is an effective and scalable technique to perform software security assessments. Yet, contemporary fuzzers fall short of thoroughly testing applications with a high degree of control-flow diversity, such as firewalls and network packet analyzers. In this paper, we demonstrate how static program analysis can guide fuzzing by augmenting existing program models maintained by the fuzzer. Based on the insight that code patterns reflect the data format of inputs processed by a program, we automatically construct an input dictionary by statically analyzing program control and data flow. Our analysis is performed before fuzzing commences, and the input dictionary is supplied to an off-the-shelf fuzzer to influence input generation. Evaluations show that our technique not only increases test coverage by 10–15% over baseline fuzzers such as afl but also reduces the time required to expose vulnerabilities by up to an order of magnitude. As a case study, we have evaluated our approach on two classes of network applications: nDPI, a deep packet inspection library, and tcpdump, a network packet analyzer. Using our approach, we have uncovered 15 zero-day vulnerabilities in the evaluated software that were not found by stand-alone fuzzers. Our work not only provides a practical method to conduct security evaluations more effectively but also demonstrates that the synergy between program analysis and testing can be exploited for a better outcome. -

A Randomized Dynamic Program Analysis Technique for Detecting Real Deadlocks

A Randomized Dynamic Program Analysis Technique for Detecting Real Deadlocks Pallavi Joshi Chang-Seo Park Mayur Naik Koushik Sen Intel Research, Berkeley, USA EECS Department, UC Berkeley, USA [email protected] {pallavi,parkcs,ksen}@cs.berkeley.edu Abstract We present a novel dynamic analysis technique that finds real deadlocks in multi-threaded programs. Our technique runs in two stages. In the first stage, we use an imprecise dynamic analysis technique to find potential deadlocks in a multi-threaded program by observing an execution of the program. In the second stage, we control a random thread scheduler to create the potential deadlocks with high probability. Unlike other dynamic analysis techniques, our approach has the advantage that it does not give any false warnings. We have implemented the technique in a prototype tool for Java, and have experimented on a number of large multi-threaded Java programs. We report a number of previously known and unknown real deadlocks that were found in these benchmarks. […] cipline followed by the parent software and this sometimes results in deadlock bugs [17]. Deadlocks are often difficult to find during the testing phase because they happen under very specific thread schedules. Coming up with these subtle thread schedules through stress testing or random testing is often difficult. Model checking [15, 11, 7, 14, 6] removes these limitations of testing by systematically exploring all thread schedules. However, model checking fails to scale for large multi-threaded programs due to the exponential increase in the number of thread schedules with execution length. Several program analysis techniques, both static [19, 10, 2, 9, 27, 29, 21] and dynamic [12, 13, 4, 1], have been developed to de- -

Optimal Use of Fonts on Linux

Optimal Use of Fonts on Linux Avi Alkalay Donovan Rebbechi Hal Burgiss Copyright © 2006 Avi Alkalay, Donovan Rebbechi, Hal Burgiss 2007-04-15

Revision History

Revision 2007-04-15 15 Apr 2007 Revised by: avi Included support to SUSE installation for the RPM scriptlets on template spec file, listed SUSE as a BCI-enabled distro.

Revision 2007-02-08 08 Feb 2007 Revised by: avi Fixed some typos, updated Luc's page URL, added DejaVu sections, added link to FC6 Freetype RPMs, added link to Debian MS Core fonts, and added reference to the gnome-font-properties command.

Revision 2006-07-02 02 Jul 2006 Revised by: avi Included link to Debian FreeType BCI package, improved the glossary with Latin1 descriptions, more clear links on the webcore fonts section, instructions on how to rebuild source RPM packages in the BCI appendix, updated the freetype recompilation appendix to cover new versions of the lib, authorship section reorganized.

Revision 2006-04-02 02 Apr 2006 Revised by: avi Included link to FC5 Freetype.bci contribution by Cody DeHaan.

Revision 2006-03-25 25 Mar 2006 Revised by: avi Updated link to BCI Freetype RPMs to be more distro version specific.

Revision 2005-07-19 19 May 2005 Revised by: avi Renamed Microsoft Fonts to Webcore Fonts, and links updated. Added X.org Subsystems section.

Revision 2005-05-25 25 May 2005 Revised by: avi Comment related to web pages in the Microsoft Fonts section

Revision 2005-05-10 10 May 2005 Revised by: avi Old section-based glossary converted to real DocBook glossary. Modernized terms and explanations on the glossary. Included concepts as charsets, Unicode and UTF-8 in the glossary. -

GET, Arris QAM Gateway, DTV Decoder SW

Open Source Used In SUNGW CUG-96.1 Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-151990932

This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number 78EE117C99-151990932

Contents
1.1 ASN1C 0.9.23
1.1.1 Available under license
1.2 BSD Readelf.h NON_PRISTINE 1.9
1.2.1 Available under license
1.3 busybox 1.18.5
1.3.1 Available under license
1.4 cjson 2009
1.4.1 Available under license
1.5 crc32 1.1.1.1
1.5.1 Available under license
1.6 curl 7.26.0
1.6.1 Available under license
1.7 EGL headers 1.4
1.7.1 Available under license
1.8 expat 2.1.0
1.8.1 Available under license
1.9 ffmpeg 0.11.1
1.9.1 Available under license
1.10 flex 2.5.35
1.10.1 Available under license
1.11 freeBSD 4.8 :distro-fusion
1.11.1 Available under license
1.12 FREETYPE2 2.4.4
1.12.1 Available under license
1.13 iptables 1.4.1
1.13.1 Available under license
1.14 isc dhcp 4.1-ESV-R4
1.14.1 Available under license
1.15