Exploring the Readability of Assessment Tasks: the Influence of Text and Reader Factors

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Comparing the Word Processing and Reading Comprehension of Skilled and Less Skilled Readers

Educational Sciences: Theory & Practice - 12(4) • Autumn • 2822-2828 ©2012 Educational Consultancy and Research Center www.edam.com.tr/estp Comparing the Word Processing and Reading Comprehension of Skilled and Less Skilled Readers İ. Birkan GULDENOĞLU (Ankara University), Tevhide KARGIN (Ankara University), Paul MILLER (University of Haifa) Abstract The purpose of this study was to compare the word processing and reading comprehension of skilled and less skilled readers. Forty-nine 2nd graders (26 skilled and 23 less skilled readers) participated in this study. They were tested with two experiments assessing their processing of isolated real word and pseudoword pairs as well as their reading comprehension skills. Findings suggest that there is a significant difference between skilled and less skilled readers both with regard to their word processing skills and their reading comprehension skills. In addition, findings suggest that word processing skills and reading comprehension skills correlate positively in both skilled and less skilled readers. Key Words Reading, Reading Comprehension, Word Processing Skills, Reading Theories. Reading is one of the most central aims of schooling and all children are expected to acquire this skill in school (Güzel, 1998; Moates, 2000). The ability to read is assumed to rely on two psycholinguistic processes: [...] According to the above mentioned phonological reading theory, readers first recognize written words phonologically via their spoken lexicon and, subsequently, apply their linguistic (syntactic, se-

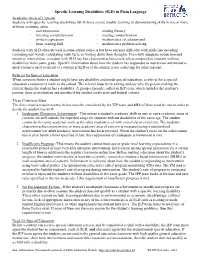

Specific Learning Disabilities (SLD) in Plain Language

Specific Learning Disabilities (SLD) in Plain Language Academic Areas of Concern Students with specific learning disabilities (SLD) have severe trouble learning or demonstrating skills in one or more of these academic areas: oral expression, listening comprehension, written expression, basic reading skill, reading fluency, reading comprehension, mathematics calculation, and mathematics problem solving. Students with SLD often do well in some school subjects but have extreme difficulty with skills like decoding (sounding out) words, calculating math facts, or writing down their thoughts. Even with adequate instruction and intensive intervention, a student with SLD has low classroom achievement when compared to students without disabilities in the same grade. Specific information about how the student has responded to instruction and intensive intervention is used to decide if a student is SLD or if the student is not achieving for other reasons. Referral for Special Education When someone thinks a student might have any disability and needs special education, a referral for a special education evaluation is made to the school. The referral must be in writing and say why the person making the referral thinks the student has a disability. A group of people, called an IEP team, which includes the student’s parents, does an evaluation and decides if the student meets state and federal criteria. Three Criteria to Meet The three criteria (requirements) below must be considered by the IEP team and all 3 of them must be met in order to decide the student has SLD. 1. Inadequate Classroom Achievement - This means a student's academic skills in one or more academic areas of concern are well outside the expected range for students without disabilities of the same age. -

Reading Comprehension Difficulties Handout for Students

Reading Comprehension Difficulties Definition: Reading comprehension is a process in which knowledge of language, knowledge of the world, and knowledge of a topic interact to make complex meaning. It involves decoding, word association, context association, summary, analogy, integration, and critique. The Symptoms: Students who have difficulty with reading comprehension may have trouble identifying and understanding important ideas in reading passages, may have trouble with basic reading skills such as word recognition, may not remember what they’ve read, and may frequently avoid reading due to frustration. How to improve your reading comprehension: It is important to note that this is not just an English issue; all school subjects require reading comprehension. The following techniques can help to improve your comprehension: Take a look at subheadings and any editor’s introduction before you begin reading so that you can predict what the reading will be about. Ask yourself what you know about a subject before you begin reading to activate prior knowledge. Ask questions about the text as you read; this engages your brain. Take notes as you read, either in the book or on separate paper: make note of questions you may have, interesting ideas, important concepts, and anything else that strikes your fancy. This engages your brain as you read and gives you something to look back on so that you remember what to discuss with your instructor and/or classmates or possibly use in your work for the class. Make note of how texts in the subject area are structured so that you know how and where to look for different types of information. -

Effective Vocabulary Instruction by Joan Sedita

Published in “Insights on Learning Disabilities” 2(1) 33-45, 2005 Effective Vocabulary Instruction By Joan Sedita Why is vocabulary instruction important? Vocabulary is one of five core components of reading instruction that are essential to successfully teach children how to read. These core components include phonemic awareness, phonics and word study, fluency, vocabulary, and comprehension (National Reading Panel, 2000). Vocabulary knowledge is important because it encompasses all the words we must know to access our background knowledge, express our ideas and communicate effectively, and learn about new concepts. “Vocabulary is the glue that holds stories, ideas and content together… making comprehension accessible for children.” (Rupley, Logan & Nichols, 1998/99). Students’ word knowledge is linked strongly to academic success because students who have large vocabularies can understand new ideas and concepts more quickly than students with limited vocabularies. The high correlation in the research literature of word knowledge with reading comprehension indicates that if students do not adequately and steadily grow their vocabulary knowledge, reading comprehension will be affected (Chall & Jacobs, 2003). There is a tremendous need for more vocabulary instruction at all grade levels by all teachers. The number of words that students need to learn is exceedingly large; on average students should add 2,000 to 3,000 new words a year to their reading vocabularies (Beck, McKeown & Kucan, 2002). For some categories of students, there are significant obstacles to developing sufficient vocabulary to be successful in school: • Students with limited or no knowledge of English. Literate English (English used in textbooks and printed material) is different from spoken or conversational English. -

Is Vocabulary a Strong Variable Predicting Reading Comprehension and Does the Prediction Degree of Vocabulary Vary According to Text Types

Kuram ve Uygulamada Eğitim Bilimleri • Educational Sciences: Theory & Practice - 11(3) • Summer • 1541-1547 ©2011 Eğitim Danışmanlığı ve Araştırmaları İletişim Hizmetleri Tic. Ltd. Şti. Is Vocabulary a Strong Variable Predicting Reading Comprehension and Does the Prediction Degree of Vocabulary Vary according to Text Types Kasım YILDIRIM (Ahi Evran University), Mustafa YILDIZ (Gazi University), Seyit ATEŞ (Gazi University) Abstract The purpose of this study was to explore whether there was a significant correlation between vocabulary and reading comprehension in terms of text types as well as whether vocabulary was a predictor of reading comprehension in terms of text types. In this regard, the correlational research design was used to address specific research objectives. The study was conducted in Ankara-Sincan during the 2008-2009 academic year. A total of 120 students having middle socioeconomic status participated in this study. The students in this research were in the fifth grade at a public school. Reading comprehension and vocabulary tests were developed to evaluate the students’ reading comprehension and vocabulary levels. Correlation and bivariate linear regression analyses were used to assess the data obtained from the study. The research findings indicated that there was a medium correlation between vocabulary and narrative text comprehension. In addition, there was a large correlation between vocabulary and expository text comprehension. Compared to narrative text comprehension, vocabulary was also a strong predictor of expository text comprehension. Vocabulary made more contribution to expository text comprehension than to narrative text comprehension. Key Words Vocabulary, Reading Comprehension, Text Types. Reading comprehension is defined as a complex process in which many skills are used (Cain, Oa- [...] Reader factor includes prior knowledge, linguistic skill, and metacognitive awareness. -

Dyslexia or LD in Reading: What Is the Difference?

DYSLEXIA OR LD IN READING: WHAT IS THE DIFFERENCE? Anise Flowers & Donna Black, Pearson. Presented by Anise Flowers, Ph.D., and Donna Black, LSSP. TCASE, January 2017. International Dyslexia Association: Dyslexia is a specific learning disability that is neurological in origin. It is characterized by difficulties with accurate and/or fluent word recognition and by poor spelling and decoding abilities. These difficulties typically result from a deficit in the phonological component of language that is often unexpected in relation to other cognitive abilities and the provision of effective classroom instruction. Secondary consequences may include problems in reading comprehension and reduced reading experience that can impede growth of vocabulary and background knowledge. Dyslexia Identification and Services in Texas: Texas Education Code (TEC) §38.003 defines dyslexia and mandates testing and the provision of instruction. State Board of Education (SBOE) adopts rules and standards for administering testing and instruction. TEC §7.028(b) relegates responsibility for school compliance to the local school board. 19 (TAC) §74.28 outlines responsibilities of districts and charter schools in the delivery of services to students with dyslexia. The Rehabilitation Act of 1973, §504, establishes [...] Dyslexia Definition (in Texas): Texas Education Code (TEC) §38.003 definition: 1. “Dyslexia” means a disorder of constitutional origin manifested by a difficulty in learning to read, write, or spell, despite conventional instruction, adequate intelligence, and sociocultural opportunity. 2. “Related disorders” include disorders similar to or related to dyslexia such as developmental auditory imperceptions, dysphasia, specific developmental dyslexia, developmental dysgraphia, and developmental spelling disability. -

Whole Language and Traditional Reading Instruction: a Comparison of Teacher Views and Techniques

DOCUMENT RESUME ED 365 963 CS 011 558 AUTHOR Morrison, Julie; Mosser, Leigh Ann TITLE Whole Language and Traditional Reading Instruction: A Comparison of Teacher Views and Techniques. PUB DATE [93] NOTE 18p. PUB TYPE Reports Research/Technical (143) EDRS PRICE MF01/PC01 Plus Postage. DESCRIPTORS Comparative Analysis; *Conventional Instruction; Elementary Education; *Reading Instruction; Reading Research; *Teacher Attitudes; *Teacher Behavior; *Whole Language Approach IDENTIFIERS Teacher Surveys ABSTRACT A study examined two methods of reading instruction, the whole language literature-based approach and the traditional basal approach. Eighty teachers from four diverse school districts in two midwestern states were surveyed to find out which method was the most widely used. Results indicated that 84% of the 50 teachers who responded used a combination approach. The teachers believed that by using key aspects of each approach, a more powerful tool for reading instruction would result. (RS) Reproductions supplied by EDRS are the best that can be made from the original document. Whole Language and Traditional Reading Instruction: A Comparison of Teacher Views and Techniques Julie Morrison and Leigh Ann Mosser Fremont City Schools -

Defending Whole Language: the Limits of Phonics Instruction and the Efficacy of Whole Language Instruction

Defending Whole Language: The Limits of Phonics Instruction and the Efficacy of Whole Language Instruction Stephen Krashen Reading Improvement 39 (1): 32-42, 2002 The Reading Wars show no signs of stopping. There appear to be two factions: Those who support the Skill-Building hypothesis and those who support the Comprehension Hypothesis. The former claim that literacy is developed from the bottom up; the child learns to read by first learning to read out loud, by learning sound-spelling correspondences. This is done through explicit instruction, practice, and correction. This knowledge is first applied to words. Ultimately, the child uses this ability to read larger texts, as the knowledge of sound-spelling correspondences becomes automatic. According to this view, real reading of interesting texts is helpful only to the extent that it helps children "practice their skills." The Comprehension Hypothesis claims that we learn to read by understanding messages on the page; we "learn to read by reading" (Goodman, 1982; Smith, 1994). Reading pedagogy, according to the Comprehension Hypothesis, focuses on providing students with interesting, comprehensible texts, and the job of the teacher is to help children read these texts, that is, help make them comprehensible. The direct teaching of "skills" is helpful only when it makes texts more comprehensible. The Comprehension Hypothesis also claims that reading is the source of much of our vocabulary knowledge, writing style, advanced grammatical competence, and spelling. It is also the source of most of our knowledge of phonics. Whole Language The term "whole language" does not refer only to providing interesting comprehensible texts and helping children understand less comprehensible texts. -

The Whole Language Approach to Reading

The Whole Language Approach to Reading: An empiricist critique A revised version of: Hempenstall, K. (1996). The whole language approach to reading: An empiricist critique. Australian Journal of Learning Disabilities, 1(3), 22-32. Historically, the consideration of learning disability has emphasised within-person factors to explain the unexpected difficulty that academic skill development poses for students with such disability. Unfortunately, the impact of the quality of initial and subsequent instruction in ameliorating or exacerbating the outcomes of such disability has received rather less exposure until recently. During the 1980s an approach to education, whole language, became the major model for educational practice in Australia (House of Representatives Standing Committee on Employment, Education, and Training, 1992). Increasing controversy developed, both in the research community (Eldredge, 1991; Fields & Kempe, 1992; Gersten & Dimino, 1993; Liberman & Liberman, 1990; Mather, 1992; McCaslin, 1989; Stahl & Miller, 1989; Vellutino, 1991; Weir, 1990), and in the popular press (Hempenstall, 1994, 1995; Prior, 1993) about the impact of the approach on the attainments of students educated within this framework. In particular, concern was expressed (Bateman, 1991; Blachman, 1991; Liberman, Shankweiler, & Liberman, 1989; Yates, 1988) about the possibly detrimental effects on "at-risk" students (including those with learning disabilities). Whole language: History The whole language approach has its roots in the meaning-emphasis, whole-word model of teaching reading. Its more recent relation was an approach called "language experience" which became popular in the mid-1960s. The language experience approach emphasized the knowledge which children bring to the reading situation - a position diametrically opposed to the Lockean view of "tabula rasa" (the child's mind as a blank slate on which education writes its message). -

Literature, Literacy & Comprehension Strategies in the Elementary School

Literature, Literacy & Comprehension Strategies in the Elementary School. Joy F. Moss. How can we, as elementary school teachers, move beyond basal readers to strategies that encourage our students to truly understand what they’re reading and connect the work they’re doing in the classroom with the world around them? For Joy F. Moss, the answer has been to build a literature program in which both struggling and highly able readers learn a series of “reading-thinking strategies” as they study literature together. This program is made up of literature units designed to help students grow as independent readers and writers and develop long-term connections with literature that allow them to more fully understand texts, themselves, and others. These practical units are structured around cumulative read-aloud/think-aloud group sessions in which students collaborate to construct meaning and explore intertextual links between increasingly complex and diverse texts. In addition to the rich annotated lists of children’s and young adult literature found in this book, Moss offers teachers a framework for developing units in which comprehension instruction is embedded in the study of text sets that are geared to the particular interests and needs of their students. This helps teachers bring the literary/literacy learning experiences into their own classrooms. National Council of Teachers of English, 1111 W. Kenyon Road, Urbana, Illinois 61801-1096, 800-369-6283 or 217-328-3870, www.ncte.org. Contents: Acknowledgments; 1. Theory into Practice; 2. Text Sets in the Kindergarten; 3. -

An Examination of Language and Reading Comprehension in Late Childhood and Early Adolescence

An Examination of Language and Reading Comprehension in Late Childhood and Early Adolescence. Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Margaret Beard, Graduate Program in Education: Physical Activity and Education Services, The Ohio State University, 2017. Dissertation Committee: Kisha M. Radliff, Ph.D., Advisor; Antoinette Miranda, Ph.D.; Stephen A. Petrill, Ph.D. Copyrighted by Margaret E. Beard 2017. Abstract The simple view of reading suggests that proficient reading comprehension is the product of two factors, decoding (word recognition) and language comprehension (understanding spoken language), that are developmental in nature. Decoding accounts for more variance and predicts reading abilities in younger children; then, as children age, language comprehension becomes more of a primary influence and predictor for comprehension ability. This study fills a gap by assessing the relationship between language ability and reading comprehension in older children. The study sample included participants in late childhood (n=582) and early adolescence (n=530) who were part of a larger longitudinal twin project. Results suggested significant positive correlations between decoding, vocabulary, language comprehension, and reading comprehension during both late childhood and early adolescence. Decoding and language comprehension explained 48.5% of the variance in reading comprehension in late childhood and 42.7% of the variance in early adolescence. Vocabulary made a unique contribution to reading comprehension above and beyond that made by decoding and language comprehension and significantly explained an additional 9.4% of the variance in late childhood and 17.3% in early adolescence. -

The Effectiveness of Using Inner Speech and Communicative Speech in Reading Literacy Development: A Synthesis of Research

International Journal of Social Science and Humanity, Vol. 5, No. 8, August 2015. The Effectiveness of Using Inner Speech and Communicative Speech in Reading Literacy Development: A Synthesis of Research. Yuan-Hsuan Lee. Abstract: This paper provides a theoretical understanding of inner speech and communicative speech and their impact on the process of reading literacy. The aim of this paper is to probe into questions such as what the characteristics of inner speech are, what the relationship between inner speech and subvocalization is, what inner speech can do to help reading, and what technology can do to help the practice of inner speech and literacy development. Review of literature has suggested that (1) inner speech is an abbreviated form of easily stored meaning units; (2) inner speech as a form of subvocal rehearsal can retrieve information in the working memory and help solve reading problems; (3) inner speech is a mechanism that puts chunks of text into compact meaning units and that elicits meanings when reading cognition becomes problematic; (4) technology can help literacy development but more emphasis [...] rehearsal, and gave examples that suggest inner speech can improve reading ability. II. THEORETICAL FRAMEWORK A. Huey’s Inner Speech Huey held that inner speech is part of ordinary reading [5]. Huey conducted experiments in which unrelated words were exposed for four seconds each and the readers were required to state what they saw [5]. He found that "the inner pronunciation of these words and of the suggested phrases constituted much of the most prominent part of the reader's consciousness of them." (p.