FAA Software Verification Tools Assessment Study

Total Pages: 16

File Type: PDF, Size: 1020 Kb


Recommended publications


Getting to Grips with Unix and the Linux Family

Getting to grips with Unix and the Linux family. David Chiappini, Giulio Pasqualetti, Tommaso Redaelli. Torino, International Conference of Physics Students, August 10, 2017. According to the booklet, at the end of this session you can expect: • To have an overview of the history of computer science • To understand the general functioning and similarities of Unix-like systems • To be able to distinguish the features of different Linux distributions • To be able to use basic Linux commands • To know how to build your own operating system • To hack the NSA • To produce the worst software bug EVER. A first data analysis with the shell, sed & awk: an interactive workshop. 1 at the beginning, there was UNIX... 2 ...then there was GNU 3 getting hands dirty: common commands, wait till you see piping 4 regular expressions 5 sed 6 awk 7 challenge time. What's UNIX • Bell Labs was a really cool place to be in the 60s-70s • UNIX was an OS developed by Bell Labs • they used C, which was also developed there • UNIX became the de facto standard on how to make an OS. UNIX Philosophy • Write programs that do one thing and do it well.

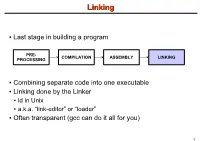

Linking + Libraries

Linking ● Last stage in building a program (pre-processing → compilation → assembly → linking) ● Combining separate code into one executable ● Linking done by the linker ● ld in Unix ● a.k.a. "link-editor" or "loader" ● Often transparent (gcc can do it all for you). Linking involves... ● Combining several object modules (the .o files corresponding to .c files) into one file ● Resolving external references to variables and functions ● Producing an executable file (if no errors). Diagram: file1.c, file2.c, ..., fileN.c and header files → gcc → file1.o, file2.o, ..., fileN.o → linker (external references) → executable. Linking with External References. file1.c: int count; void display(void); int main(void) { count = 10; display(); return 0; } file2.c: #include <stdio.h> extern int count; void display(void) { printf("%d", count); } The compiler produces file1.o and file2.o with placeholders, which are passed to the linker. ● file1.o has placeholder for display() ● file2.o has placeholder for count ● object modules are relocatable ● addresses are relative offsets from top of file. Libraries ● Definition: ● a file containing functions that can be referenced externally by a C program ● Purpose: ● easy access to functions used repeatedly ● promote code modularity and re-use ● reduce source and executable file size. Libraries ● Static (Archive) ● libname.a on Unix; name.lib on DOS/Windows ● Only modules with referenced code linked when compiling ● unlike .o files ● Linker copies function from library into executable file ● Update to library requires recompiling program. Libraries ● Dynamic (Shared Object or Dynamic Link Library) ● libname.so on Unix; name.dll on DOS/Windows ● Referenced code not copied into executable ● Loaded in memory at run time ● Smaller executable size ● Can update library without recompiling program ● Drawback: slightly slower program startup. Libraries ● Linking a static library libpepsi.a /* crave source file */ … gcc ..

AR400 User Guide 2.7.1

AR400 Series Router User Guide for Software Release 2.7.1 (AR410, AR440S, AR441S, AR450S). Document Number C613-02021-00 REV F. Copyright © 2004 Allied Telesyn International Corp. 19800 North Creek Parkway, Suite 200, Bothell, WA 98011, USA. All rights reserved. No part of this publication may be reproduced without prior written permission from Allied Telesyn. Allied Telesyn International Corp. reserves the right to make changes in specifications and other information contained in this document without prior written notice. The information provided herein is subject to change without notice. In no event shall Allied Telesyn be liable for any incidental, special, indirect, or consequential damages whatsoever, including but not limited to lost profits, arising out of or related to this manual or the information contained herein, even if Allied Telesyn has been advised of, known, or should have known, the possibility of such damages. All trademarks are the property of their respective owner. Contents. CHAPTER 1 Introduction: Why Read this User Guide?; Where To Find More Information; The Documentation Set; Technical support; Features of the Router

The Linux Command Line

The Linux Command Line, Fifth Internet Edition. William Shotts. A LinuxCommand.org Book. Copyright ©2008-2019, William E. Shotts, Jr. This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042. A version of this book is also available in printed form, published by No Starch Press. Copies may be purchased wherever fine books are sold. No Starch Press also offers electronic formats for popular e-readers. They can be reached at: https://www.nostarch.com. Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners. This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org. Release History (version, date, description): 19.01A, January 28, 2019, Fifth Internet Edition (Corrected TOC); 19.01, January 17, 2019, Fifth Internet Edition; 17.10, October 19, 2017, Fourth Internet Edition; 16.07, July 28, 2016, Third Internet Edition; 13.07, July 6, 2013, Second Internet Edition; 09.12, December 14, 2009, First Internet Edition. Table of Contents: Introduction; Why Use the Command Line?

BASIC UNIX COMMANDS FILES and DIRECTORIES All Information Is Stored in files

BASIC UNIX COMMANDS. FILES AND DIRECTORIES: All information is stored in files. File names and commands are case sensitive. Case matters. Files are contained in directories. You start out in your own home directory, and your prompt usually tells its name. At any given time, one of these directories is your working directory, the one you are in. You can refer to files in your working directory by just their names. You can refer to a file that is in a subdirectory by giving a subdirectory name, a slash, and the file name, e.g., Mail/baron. You can refer to any file on the computer by giving its full name, starting with a slash, such as /home7/b/baron/mbox. If the file is a program, typing its name will run it. (That is what commands do.) If the program is something you have just written and is in the directory you … COMMANDS: Commands are what you type at the prompt. Commands have arguments on which they operate. For example, in rm temp, the command is rm and the argument is temp; this command removes the file called temp. Here I put arguments in UPPER CASE. Thus, words such as FILE are taken to stand for some other word, such as temp. In the following list, I use [ ] for optional arguments that are not typically used. Commands have options that are controlled with switches, which are usually letters following a single dash. Usually you can write several letters after one dash. For example ls -l lists files in a long format, with more information. ls -a lists all the files, including those that begin with ., which are usually files used by various programs.

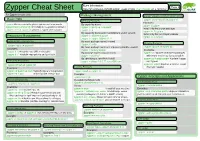

Zypper Cheat Sheet

Zypper Cheat Sheet, Page 1. More Information: https://en.opensuse.org/SDB:Zypper_usage or type man zypper on a terminal. For Zypper version 1.0.9. Basic Help: zypper #list the available global options and commands; zypper help [command] #Print help for a specific command; zypper shell or zypper sh #Open a zypper shell session. Repository Management. Listing Defined Repositories: zypper repos or zypper lr. Examples: zypper lr -u #include repo URI on the table; zypper lr -P #include repo priority and sort by it. Refreshing Repositories: zypper refresh or zypper ref. Examples: zypper ref packman main #specify repos to be … Package Management. Selecting Packages. By capability name: zypper in 'perl(Log::Log4perl)'; zypper in qt. By capability name and/or architecture and/or version: zypper in 'zypper<0.12.10'; zypper in zypper.i586=0.12.11. By exact package name (--name): zypper in -n ftp. By exact package name and repository (implies --name): zypper in factory:zypper. By package name using wildcards: zypper in yast*ftp*. By specifying a .rpm file to install: zypper in skype-2.0.0.72-suse.i586.rpm. Installing Packages … Source Packages and Build Dependencies: zypper source-install or zypper si. Examples: zypper si zypper. Install only the source package: zypper in -D zypper. Install only the build dependencies: zypper in -d zypper. Updating Packages: zypper update or zypper up. Examples: zypper up #update all installed packages with newer version as far as possible; zypper up libzypp zypper #update libzypp and zypper; zypper in sqlite3 #update sqlite3 or install if not yet installed.

APPENDIX A Aegis and Unix Commands

APPENDIX A Aegis and Unix Commands (from Using and Administering an Apollo Network, p. 265). Each row gives the FUNCTION followed by the AEGIS, BSD4.2, and SYS5 commands. ACCESS CONTROL AND SECURITY. change file protection modes: edacl chmod chmod; change group: edacl chgrp chgrp; change owner: edacl chown chown; change password: chpass passwd passwd; print user + group ids + names: pst, lusr groups id; set file-creation mode mask: edacl, umask umask umask; show current permissions: acl -all ls -l ls -l. DIRECTORY CONTROL. create a directory: crd mkdir mkdir; compare two directories: cmt diff dircmp; delete a directory (empty): dlt rmdir rmdir; delete a directory (not empty): dlt rm -r rm -r; list contents of a directory: ld ls -l ls -l; move up one directory: wd \ cd .. cd .. or wd ..; move up two directories: wd \\ cd ../.. cd ../..; print working directory: wd pwd pwd; set to network root: wd // cd // cd //; set working directory: wd cd cd; set working directory home: wd- cd cd; show naming directory: nd printenv echo $HOME $HOME. FILE CONTROL. change format of text file: chpat newform; compare two files: emf cmp cmp; concatenate a file: catf cat cat; copy a file: cpf cp cp; copy std input to std output + files: tee tee tee; create a (symbolic) link: crl ln -s ln -s; delete a file: dlf rm rm; maintain an archive: a ref ar ar; move a file: mvf mv mv; dump a file: dmpf od od; print checksum and block-count of file: salvol -a sum sum; rename a file: chn mv mv; search a file for a pattern: fpat grep grep; search or reject lines common to 2 sorted files: cmsrf comm comm; translate characters: tic tr tr. SHELL SCRIPT TOOLS. condition evaluation tools: existf test test

Rational PurifyPlus for UNIX

Develop Fast, Reliable Code: Rational PurifyPlus for UNIX. Customers and end-users demand that your code works reliably and offers adequate execution performance. But the reality of your delivery schedule often forces you to sacrifice reliability or performance – sometimes both. And without adequate time for testing, you may not even know these issues exist. So you reluctantly deliver your code before it's ready, knowing good and well that the integrity of your work may be suspect. Rational Software has a solution for ensuring that your code is both fast and reliable. Rational® PurifyPlus for UNIX combines runtime error detection, application performance profiling, and code coverage analysis into a single, complete package. Together, these functions help developers ensure the highest reliability and performance of their software from the very first release. HIGHLIGHTS: Automatically finds runtime errors in C/C++ code; Quickly isolates application performance bottlenecks. Automatically Pinpoint Hard-To-Find Bugs: Reliability problems, such as runtime errors and memory leaks in C/C++, can kill a software company's reputation. These errors are hard to find, hard to reproduce, and hardest of all, to explain to customers who discover them. No one intentionally relies on their customer as their "final QA." But without adequate tools, this is the inevitable outcome. Even the best programmers make mistakes in their coding. Rational PurifyPlus for UNIX automatically finds these reliability errors in C/C++ code that can't be found in any other way. It finds the errors even before visible symptoms occur (such as a system crash or other spurious behavior). And it shows you exactly where the error originated, regardless of how remote from the visible …

Differential Response: a Primer for Child Welfare Professionals

FACTSHEETS | OCTOBER 2020. Differential Response: A Primer for Child Welfare Professionals. Recognizing that a one-size-fits-all approach does not serve children and families well, many agencies have implemented differential response (DR), a system reform that establishes multiple pathways to respond to child maltreatment reports. DR routes families with a screened-in report of child maltreatment through a traditional investigation pathway or an alternative assessment response pathway, depending on other State policies and program requirements. Rather than initiating an investigation every time a family has a screened-in report, DR practice seeks to assess a family's needs and connect them with services that will help them keep their children safe. By linking families with services that will strengthen their ability to safely care for their children, DR can reduce the number of children entering foster care and decrease future involvement with the child welfare system. This factsheet provides child welfare professionals with an overview of DR and considerations for practice. WHAT'S INSIDE: Overview; Approaches to implementing differential response; Differential response in the field; Conclusion; References. Children's Bureau/ACYF/ACF/HHS | 800.394.3366 | Email: [email protected] | https://www.childwelfare.gov. OVERVIEW: DR (also called alternative response, family assessment response, multiple response, or dual track) is a way of structuring child protective services (CPS) to allow for more flexibility in how it responds to low- and moderate-risk cases and better meet the … Both pathways share underlying principles and goals, including a focus on child safety, permanency, and well-being, and include child safety and/or risk assessments. The pathway assignment for a family could change based on new information, such as potential criminal behavior or imminent danger of maltreatment.

The Developer's Guide to Debugging

The Developer's Guide to Debugging. Thorsten Grötker · Ulrich Holtmann · Holger Keding · Markus Wloka. Internet: http://www.debugging-guide.com Email: [email protected] ISBN: 978-1-4020-5539-3 e-ISBN: 978-1-4020-5540-9 Library of Congress Control Number: 2008929566. © 2008 Springer Science+Business Media B.V. No part of this work may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, microfilming, recording or otherwise, without written permission from the Publisher, with the exception of any material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. Printed on acid-free paper. springer.com. Foreword: Of all activities in software development, debugging is probably the one that is hated most. It is guilt-ridden because a technical failure suggests personal failure; because it points the finger at us showing us that we have been wrong. It is time-consuming because we have to rethink every single assumption, every single step from requirements to implementation. Its worst feature though may be that it is unpredictable: You never know how much time it will take you to fix a bug - and whether you'll be able to fix it at all. Ask a developer for the worst moments in life, and many of them will be related to debugging. It may be 11pm, you're still working on it, you are just stepping through the program, and that's when your spouse calls you and asks you when you'll finally, finally get home, and you try to end the call as soon as possible as you're losing grip on the carefully memorized observations and deductions.

HeapMon: A Low Overhead, Automatic, and Programmable Memory Bug Detector

Appears in the Proceedings of the First IBM PAC2 Conference. HeapMon: a Low Overhead, Automatic, and Programmable Memory Bug Detector. Rithin Shetty, Mazen Kharbutli, Yan Solihin (Dept. of Electrical and Computer Engineering, North Carolina State University, frkshetty,mmkharbu,[email protected]); Milos Prvulovic (College of Computing, Georgia Institute of Technology, [email protected]). Abstract: Detection of memory-related bugs is a very important aspect of the software development cycle, yet there are not many reliable and efficient tools available for this purpose. Most of the tools and techniques available have either a high performance overhead or require a high degree of human intervention. This paper presents HeapMon, a novel hardware/software approach to detecting memory bugs, such as reads from uninitialized or unallocated memory locations. This new approach does not require human intervention and has only minor storage and execution time overheads. HeapMon relies on a helper thread that runs on a separate processor … memory bug detection tool, improves debugging productivity by a factor of ten, and saves $7,000 in development costs per programmer per year [10]. Memory bugs are not easy to find via code inspection because a memory bug may involve several different code fragments which can even be in different files or modules. The compiler is also of little help in finding heap-related memory bugs because it often fails to fully disambiguate pointers [18]. As a result, detection and identification of memory bugs must typically be done at run-time [1, 2, 3, 4, 6, 7, 8, 9, 11, 13, 14, 18]. Unfortunately, the effects of a memory bug may become apparent long after the bug has been triggered.

Antibiotic Resistance Threats in the United States, 2019

ANTIBIOTIC RESISTANCE THREATS IN THE UNITED STATES 2019. Revised Dec. 2019. This report is dedicated to the 48,700 families who lose a loved one each year to antibiotic resistance or Clostridioides difficile, and the countless healthcare providers, public health experts, innovators, and others who are fighting back with everything they have. Antibiotic Resistance Threats in the United States, 2019 (2019 AR Threats Report) is a publication of the Antibiotic Resistance Coordination and Strategy Unit within the Division of Healthcare Quality Promotion, National Center for Emerging and Zoonotic Infectious Diseases, Centers for Disease Control and Prevention. Suggested citation: CDC. Antibiotic Resistance Threats in the United States, 2019. Atlanta, GA: U.S. Department of Health and Human Services, CDC; 2019. Available online: The full 2019 AR Threats Report, including methods and appendices, is available online at www.cdc.gov/DrugResistance/Biggest-Threats.html. DOI: http://dx.doi.org/10.15620/cdc:82532. Contents: FOREWORD; EXECUTIVE SUMMARY; SECTION 1: THE THREAT OF ANTIBIOTIC RESISTANCE; Introduction