The Reliability of the Glasgow Coma Scale: a Systematic Review

Recommended publications

Glasgow Coma Scale

Calculated Decisions: Clinical Decision Support for Pediatric Emergency Medicine Practice Subscribers. Glasgow Coma Scale. Introduction: The Glasgow Coma Scale (GCS) estimates coma severity based on eye, verbal, and motor criteria.

Points & Pearls

• The Glasgow Coma Scale (GCS) allows providers in multiple settings and with varying levels of training to communicate succinctly about a patient’s mental status.

• The GCS has been shown to have statistical correlation with a broad array of adverse neurologic outcomes, including brain injury, need for neurosurgery, and mortality.

• The GCS score has been incorporated into numerous guidelines and assessment scores (eg, Advanced Cardiac Life Support, Advanced Trauma Life Support, Acute Physiology and Chronic Health Evaluation I-III, the Trauma and Injury Severity Score, and the World Federation of Neurologic Surgeons Subarachnoid Hemorrhage Grading Scale).

• … agreement between providers, training and education are available online from the GCS creators at www.glasgowcomascale.org.

• Simpler scores have been shown to perform as well as the GCS in the prehospital and emergency department setting (for initial evaluation); these are often contracted versions of the GCS itself. For example, the Simplified Motor Score (SMS) uses the motor portion of the GCS only. The SMS and other contracted scores are less well studied than the GCS for outcomes like long-term mortality, and the GCS has been studied as trended over time, while the SMS has not.

Critical Actions

Although it has been adopted widely and in a variety of settings, the GCS score is not intended for quantitative use.

Points to keep in mind:

• Correlation with outcome and severity is most …
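The excerpt above describes the GCS as built from eye, verbal, and motor criteria. A minimal sketch of that structure follows, assuming the standard adult component ranges (eye 1-4, verbal 1-5, motor 6 maximum, total 3-15); the class name `GCS` and the `summary` format are hypothetical choices for illustration, not part of any published tool.

```python
from dataclasses import dataclass

# Assumed component ranges of the standard adult GCS:
# eye 1-4, verbal 1-5, motor 1-6 (total therefore ranges from 3 to 15).
_RANGES = {"eye": (1, 4), "verbal": (1, 5), "motor": (1, 6)}


@dataclass(frozen=True)
class GCS:
    """Hypothetical container for one GCS observation."""
    eye: int
    verbal: int
    motor: int

    def __post_init__(self):
        # Reject component values outside the assumed ranges.
        for name, (low, high) in _RANGES.items():
            value = getattr(self, name)
            if not low <= value <= high:
                raise ValueError(f"{name} must be in {low}..{high}, got {value}")

    @property
    def total(self) -> int:
        # The total is the plain sum of the three components.
        return self.eye + self.verbal + self.motor

    def summary(self) -> str:
        # Report the components next to the total so the breakdown is not lost.
        return f"GCS {self.total} (E{self.eye} V{self.verbal} M{self.motor})"


print(GCS(eye=2, verbal=3, motor=5).summary())  # prints "GCS 10 (E2 V3 M5)"
```

Keeping the three components alongside the sum fits the excerpt's caution that the score is not intended for quantitative use: two patients with the same total can differ in which component is impaired.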

Sequential Organ Failure Assessment (SOFA) Score

Calculated Decisions: Clinical Decision Support for Emergency Medicine Practice Subscribers. Sequential Organ Failure Assessment (SOFA) Score. Introduction: The SOFA score predicts mortality risk for patients in the intensive care unit based on lab results and clinical data.

Points & Pearls

• The Sequential Organ Failure Assessment (SOFA) is a mortality prediction score that is based on the degree of dysfunction of 6 organ systems.

• The score is calculated at admission and every 24 hours until discharge, using the worst parameters measured during the prior 24 hours.

• The scores can be used in several ways, including:

» As individual scores for each organ to determine the progression of organ dysfunction.

» As a sum of scores on a single intensive care unit (ICU) day.

» As a sum of the worst scores during the ICU stay.

• The SOFA score stratifies mortality risk in ICU patients without restricting the data used to admission values.

Critical Actions

Clinical prediction scores such as the SOFA and the Acute Physiologic Assessment and Chronic Health Evaluation (APACHE II) can be measured on all …

… dynamically or to determine the success or failure of an intervention in the ICU.

Why to Use

The SOFA score can be used to determine the level of organ dysfunction and mortality risk in ICU patients.

When to Use

• The SOFA can be used on all patients who are admitted to an ICU.

• It is not clear whether the SOFA is reliable for patients who were transferred from another ICU.

Instructions

Calculate the SOFA score using the worst value for each variable in the preceding 24-hour period.
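The three ways of using the scores listed above (per-organ progression, a single-day sum, and a sum of the worst scores during the stay) can be sketched as follows. This is only an illustration: the organ names, the function names, and the two days of subscores are made up, and the sketch assumes the per-organ subscores have already been assigned from the worst values in each 24-hour period.

```python
from typing import Dict, List

# One ICU day: hypothetical organ-system name -> subscore already assigned
# from the worst value in that 24-hour period.
OrganScores = Dict[str, int]


def organ_trend(days: List[OrganScores], organ: str) -> List[int]:
    """Individual scores for one organ, day by day (progression)."""
    return [day[organ] for day in days]


def daily_total(day: OrganScores) -> int:
    """Sum of the organ subscores on a single ICU day."""
    return sum(day.values())


def worst_scores_total(days: List[OrganScores]) -> int:
    """Sum of the worst (highest) subscore per organ over the whole stay."""
    organs = set().union(*(day.keys() for day in days))
    return sum(max(day.get(organ, 0) for day in days) for organ in organs)


# Made-up subscores for two ICU days, for illustration only.
stay = [
    {"respiratory": 2, "coagulation": 1, "liver": 0,
     "cardiovascular": 3, "cns": 1, "renal": 0},
    {"respiratory": 3, "coagulation": 0, "liver": 0,
     "cardiovascular": 2, "cns": 1, "renal": 1},
]

print(organ_trend(stay, "respiratory"))        # prints [2, 3]
print([daily_total(day) for day in stay])      # prints [7, 7]
print(worst_scores_total(stay))                # prints 9
```

In this made-up stay the two daily sums are equal (7 and 7) while the worst-scores sum is 9, because the worst respiratory and cardiovascular values fall on different days.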

Trauma Measure #5 Documentation of Glasgow Coma Score at Time of Initial Evaluation in TBI

Trauma Measure #5 Documentation of Glasgow Coma Score at Time of Initial Evaluation in TBI

National Quality Strategy Domain: Communication and Care Coordination

Measure Type (Process/Outcome): Process

2016 PQRS OPTIONS FOR INDIVIDUAL MEASURES: REGISTRY

DESCRIPTION: Percentage of trauma activations for patients aged 18 years or older evaluated as part of a trauma activation or trauma consultation who have a traumatic intracranial hemorrhage on initial head CT for which a Glasgow Coma Score (GCS) at the time of initial evaluation is documented in the medical record within 1 hour of hospital arrival.

INSTRUCTIONS: This measure is to be reported each time a patient is evaluated as part of a trauma activation or trauma consultation and is found to have a traumatic intracranial hemorrhage on initial head CT. It is anticipated that clinicians who are responsible for the initial trauma evaluation of TBI patients as specified in the denominator coding will submit this measure.

Measure Reporting via Registry: Abbreviated Injury Scale (AIS) codes, patient demographics, and the medical record are used to identify patients who are included in the measure’s denominator. The listed numerator options are used to report the numerator of the measure.

DENOMINATOR: All patients aged 18 years or older evaluated by an eligible professional as part of a trauma activation or trauma consultation who have a traumatic intracranial hemorrhage on initial head CT.

Denominator Criteria: Patients aged 18 years or older AND Patients evaluated as part of a trauma activation …
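The measure description above amounts to a ratio: encounters with a GCS documented within 1 hour of arrival, divided by eligible encounters. A minimal sketch of that arithmetic follows. The `Encounter` record and its field names are hypothetical, the real measure identifies patients through AIS codes and registry coding that are not modeled here, and only the denominator criteria visible in the excerpt are applied.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Encounter:
    """Hypothetical, simplified record of one trauma evaluation."""
    age_years: int
    trauma_activation_or_consult: bool
    intracranial_hemorrhage_on_initial_ct: bool
    # Minutes from hospital arrival to GCS documentation; None if never documented.
    minutes_to_gcs_documentation: Optional[float]


def in_denominator(e: Encounter) -> bool:
    # Aged 18 or older, evaluated as part of a trauma activation or consultation,
    # with traumatic intracranial hemorrhage on the initial head CT.
    return (e.age_years >= 18
            and e.trauma_activation_or_consult
            and e.intracranial_hemorrhage_on_initial_ct)


def in_numerator(e: Encounter) -> bool:
    # GCS documented within 1 hour (60 minutes) of hospital arrival.
    return (in_denominator(e)
            and e.minutes_to_gcs_documentation is not None
            and e.minutes_to_gcs_documentation <= 60)


def performance_rate(encounters: Iterable[Encounter]) -> Optional[float]:
    """Percentage of denominator encounters meeting the numerator; None if empty."""
    eligible = [e for e in encounters if in_denominator(e)]
    if not eligible:
        return None
    return 100.0 * sum(in_numerator(e) for e in eligible) / len(eligible)
```

Returning `None` for an empty denominator avoids reporting a misleading 0% when no eligible encounters exist.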

On-Line Table 1: Clinical, EEG, and Perfusion Data of Patients with NCSE

On-line Table 1: Clinical, EEG, and perfusion data of patients with NCSE

| No. | Age (yr) | Sex | Pre-seizure | Lat Signs/Jerks | GCS | Ventilation | EEG | Perfusion (visual analysis) | Seizure Classification | Epilepsy | Comorbidity | Survival |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1.1 | 59 | M | Coma | R/– | 6 | + | SW FT L | Hyperperfusion Ins L | Coma with NCSE | – | Cardiac arrest | – |
| 1.2 | 72 | F | GTCS | L/– | 3 | + | SW PR | Hyperperfusion PR | SSE | + | Chronic infarction L | + |
| 1.3 | 60 | F | GTCS | No/– | 3 | + | SW FR | Hyperperfusion FR | SSE | + | Traumatic brain injury | + |
| 1.4 | 72 | F | GTCS | R/+ | 7 | – | SW TL | Hyperperfusion TL | SSE | + | Chronic infarction L | – |
| 1.5 | 89 | F | GTCS | L/– | 8 | – | SW FR | Hyperperfusion FR | SSE | + | Chronic infarction R | – |
| 1.6 | 67 | F | CP | No/+ | 11 | – | SW FL | No hyperperfusion | CPSE | + | Chronic infarction L | + |
| 1.7 | 62 | F | GTCS | R/– | 5 | + | SW FR | Hyperperfusion Ins, TL | SSE | – | Meningo-encephalitis | – |
| 1.8 | 67 | M | GTCS | R/+ | 5 | + | SL FP L | Hyperperfusion Ins L | SSE | – | Subacute SDH L | + |
| 1.9 | 63 | F | CP | L/– | 11 | – | SW TP L | No hyperperfusion | CPSE | + | Chronic infarction L | + |

Note: Lat indicates lateralizing; Pre, preceding; R, right; L, left; SW, spike-wave activity; F, frontal; T, temporal; P, parietal; Ins, insular; SDH, subdural hemorrhage; SL, slowing; EEG, electroencephalography; NCSE, nonconvulsive status epilepticus; GCS, Glasgow Coma Scale; GTCS, generalized tonic-clonic seizure; CP, complex-partial; CPSE, complex-partial status epilepticus; SSE, subtle status epilepticus.

AJNR Am J Neuroradiol, www.ajnr.org

On-line Table 2: Clinical, EEG, and perfusion data of patients with a postictal state …

Pediatric Index of Mortality 2 Score As an Outcome Predictor in Pediatric Intensive Care Unit in India

Research Article. Pediatric index of mortality 2 score as an outcome predictor in pediatric Intensive Care Unit in India. Jeyanthi Gandhi, Shanthi Sangareddi, Poovazhagi Varadarajan, Saradha Suresh. Website: www.ijccm.org. DOI: 10.4103/0972-5229.120320.

Abstract. Background and Aims: Pediatric index of mortality (PIM) 2 score is one of the severity scoring systems being used for predicting outcome of patients admitted to intensive care units (ICUs). The aim of the present study was to evaluate the usefulness of PIM2 score in predicting mortality in a tertiary care pediatric ICU (PICU) and to assess the associated factors in predicting mortality such as presence of shock, need for assisted ventilation and Glasgow coma scale <8. Materials and Methods: This was a prospective observation study done at tertiary care PICU from May 2011 to July 2011. Consecutive 119 patients admitted to PICU (aged 1 month to 12 years) were enrolled in the study. PIM2 scoring was done for all patients. The outcome was recorded as death or discharge. The associated factors for mortality were analyzed with SPSS 17. Results: PIM2 score discriminated between death and survival at a 99.8 cut-off, with area under receiver operating characteristic curve 0.843 with 95% confidence interval (CI) (0.765, 0.903). Most patients were referred late to this hospital, which explains higher death rate (46.2%), lesser length of hospital stay (mean 2.98 days) in the mortality group, and increased rate of mechanical ventilation (68.1%). Presence of shock was independently associated with mortality, as evidenced by binary logistic regression.

Predictive Value of Scoring System in Severe Pediatric Head Injury

Medicina (Kaunas) 2007; 43(11): 861. Predictive value of scoring system in severe pediatric head injury. Dovilė Evalda Grinkevičiūtė, Rimantas Kėvalas, Viktoras Šaferis1, Algimantas Matukevičius1, Vytautas Ragaišis2, Arimantas Tamašauskas1. Department of Children’s Diseases, 1Institute for Biomedical Research, 2Department of Neurosurgery, Kaunas University of Medicine, Lithuania.

Key words: pediatric head trauma; Glasgow Coma Scale; Pediatric Trauma Score; Glasgow Outcome Scale; Pediatric Index of Mortality 2.

Summary. Objectives. To determine the threshold values of Pediatric Index of Mortality 2 (PIM 2) score, Pediatric Trauma Score (PTS), and Glasgow Coma Scale (GCS) score for mortality in children after severe head injury and to evaluate changes in outcomes of children after severe head injury on discharge and after 6 months. Material and methods. All children with severe head injury admitted to the Pediatric Intensive Care Unit of Kaunas University of Medicine Hospital, Lithuania, from January 2004 to June 2006 were prospectively included in the study. The severity of head injury was categorized according to the GCS score ≤8. As initial assessment tools, the PTS, postresuscitation GCS, and PIM 2 scores were calculated for each patient. Outcome was assessed according to Glasgow Outcome Scale on discharge and after 6 months. Results. The study population consisted of 59 children with severe head injury. The group consisted of 37 (62.7%) boys and 22 (37.3%) girls; the mean age was 10.6±6.02. The mean GCS, PTS, and PIM 2 scores were 5.9±1.8, 4.8±2.7, and 14.0±19.5, respectively. In terms of overall outcome, 46 (78.0%) patients survived and 13 (22.0%) died.

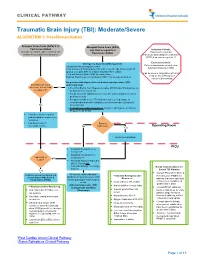

Traumatic Brain Injury (TBI): Moderate/Severe ALGORITHM 1: Post-Resuscitation

CLINICAL PATHWAY. Traumatic Brain Injury (TBI): Moderate/Severe. ALGORITHM 1: Post-Resuscitation.

Entry branches: post-resuscitation Glasgow Coma Scale (GCS) 9-12; post-resuscitation Glasgow Coma Scale (GCS) less than or equal to 8. (Consider for children under 2 years old with concern for acute abusive head trauma.)

Inclusion Criteria: Patient with traumatic brain injury and Glasgow Coma Scale (GCS) less than or equal to 12.

Exclusion Criteria: Patients found down without clear traumatic brain injury (TBI). (Can be used in conjunction with Post Cardiac Arrest Pathway if considered beneficial.)

Emergency Department Management: Trauma and Neurosurgery Consult. Head Computed Tomography (CT) scan if not already obtained (STAT upload), or a quick MRI as a viable (radiation-free) option. 2 IVs with Normal Saline (NS) as maintenance. Modified Rapid sequence intubation (RSI) if not already performed.

Decision node: Monitor GCS. GCS decrease to less than or equal to 8? (Yes / No)

For patients with signs of elevated intracranial pressure (ICP), empirically treat:

• 3% (0.5 mEq/mL Na+) Hypertonic saline (HTS) bolus 5 mL/kg/dose via peripheral IV or central line.

• For patients with ongoing seizures use the status epilepticus clinical pathway (below).

• For patients with severe TBI who have not received a dose of levetiracetam at another institution, give levetiracetam 20 mg/kg IV (max 2 grams).

• If transporting to Operating Room: Consider mild hyperventilation to PCO2 of 32-35 mmHg.

• Consider comfort sedation and standard neuroprotective measures.

• Complete frequent neurologic exams.

Decision node: Surgery Indicated? (No …)

Predictive Value of EEG-Awakening for Behavioral Awakening from Coma

Kang et al. Ann. Intensive Care (2015) 5:52. DOI 10.1186/s13613-015-0094-4. RESEARCH, Open Access. Predictive value of EEG-awakening for behavioral awakening from coma. Xiao-gang Kang, Feng Yang, Wen Li, Chen Ma, Li Li* and Wen Jiang*

Abstract. Background: A reliable predictor for early recovery of consciousness in comatose patients is of great clinical significance. Here we aimed to investigate the potentially prognostic value of electroencephalogram-reactivity (EEG-R) in combination with sleep spindles, termed EEG-awakening, for behavioral awakening in etiologically diverse comatose patients. Methods: We performed a prospective observational study on a sample of patients, all of whom were in coma lasting longer than 3 days. Continuous EEG monitoring was performed for at least 24 h to detect the presence of EEG-R and sleep spindles. We then followed patients for 1 month to determine their subsequent level of consciousness, classifying them as either awakened or non-awakened. Finally, univariate and multivariate analyses were employed to assess the association of predictors with consciousness recovery. Results: One hundred and six patients with different etiologies leading to coma were included in the study. Of these, 48 patients (45.3%) awoke and 58 patients (54.7%) did not awake in the month after the onset of the study. Of note, 26 patients (24.5%) had a good neurological outcome, and 31 patients (29.3%) died. Univariate analysis revealed that the Glasgow Coma Scale (GCS) score, EEG-R, sleep spindles, and EEG-awakening were all associated with one-month awakening. Comparisons of the area under the receiver operating characteristic curve (AUC) showed that EEG-awakening (0.839; 0.757–0.921) was superior to all of the following: EEG-R (0.798; 0.710–0.886), sleep spindles (0.772; 0.680–0.864), and GCS scores (0.720; 0.623–0.818).

Clinical Monitoring Scales in Acute Brain Injury: Assessment of Coma, Pain, Agitation, and Delirium

Neurocrit Care. DOI 10.1007/s12028-014-0025-5. REVIEW ARTICLE. Clinical Monitoring Scales in Acute Brain Injury: Assessment of Coma, Pain, Agitation, and Delirium. Richard R. Riker • Jennifer E. Fugate • And the Participants in the International Multi-disciplinary Consensus Conference on Multimodality Monitoring. © Springer Science+Business Media New York 2014

Abstract. Serial clinical examination represents the most fundamental and basic form of neurological monitoring, and is often the first and only form of such monitoring in patients. Even in patients subjected to physiological monitoring using a range of technologies, the clinical examination remains an essential tool to follow neurological progress. Key aspects of the clinical examination have now been systematized into scoring schemes, and address consciousness, pain, agitation, and delirium (PAD). The Glasgow Coma Scale has been the traditional tool to measure consciousness, but the full outline of unresponsiveness (FOUR) score has recently been validated in a variety of settings, and at present, both represent clinically useful tools. Assessment of PAD in neurologically compromised patients presents special challenges. For pain, the Numeric Rating Scale is the preferred initial approach, with either the Behavioral Pain Scale or the Critical Care Pain Observation Tool in subjects who are not able to respond. … as the Richmond Area Sedation Scale (RASS) and Sedation–Agitation Scale (SAS) may provide reasonable tools in some neurocritical care patients. The use of sedative drugs and neuromuscular blockers may invalidate the use of some clinical examination tools in others. The use of sedation interruption to assess neurological status can result in physiological derangement in unstable patients (such as those with uncontrolled intracranial hypertension), and is not recommended.

Keywords: Coma · Scale · Neurologic examination · Delirium · Pain · Sedation

Introduction. Although serial clinical examinations represent a central part of neurological evaluation and the foundation for all …

Non-Convulsive Status Epilepticus: Usefulness of Clinical Features in Selecting Patients for Urgent EEG. A M Husain, G J Horn, M P Jacobson

PAPER. Non-convulsive status epilepticus: usefulness of clinical features in selecting patients for urgent EEG. A M Husain, G J Horn, M P Jacobson. J Neurol Neurosurg Psychiatry 2003;74:189–191. First published as 10.1136/jnnp.74.2.189 on 1 February 2003.

Background: Non-convulsive status epilepticus (NCSE) is status epilepticus without obvious tonic–clonic activity. Patients with NCSE have altered mental state. An EEG is needed to confirm the diagnosis, but obtaining an EEG on every patient with altered mental state is not practical. Objective: To determine whether clinical features could be used to predict which patients were more likely to be in NCSE and thus in need of an urgent EEG. Methods: Over a six month period, all patients for whom an urgent EEG was ordered to identify NCSE were enrolled. Neurology residents examined the patients and filled out a questionnaire without knowledge of the EEG results. The patients were divided into two groups, NCSE and non-NCSE, depending on the EEG result. The clinical features were compared between the two groups. The sensitivity and specificity of the features were calculated. Results: 48 patients were enrolled, 12 in NCSE and 36 not in NCSE. Remote risk factors for seizures, severely impaired mental state, and ocular movement abnormalities were seen significantly more often in the NCSE group. The combined sensitivity of remote risk factors for seizures and ocular movement abnormalities was 100%.

Correspondence to: Dr Aatif M Husain, Box 3678, 202 Bell Building, Duke University Medical Center, Durham, NC 27710, USA.
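The abstract above reports that sensitivity and specificity were calculated for the clinical features. As a reminder of the arithmetic only, here is a minimal sketch using the standard definitions; the function names are hypothetical, and the only study figures used are the ones stated in the excerpt (12 patients in NCSE, combined sensitivity of 100%, which implies no NCSE patient was missed by the combined features).

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Percentage of patients with the condition that the feature flags."""
    with_condition = true_pos + false_neg
    if with_condition == 0:
        raise ValueError("no patients with the condition")
    return 100.0 * true_pos / with_condition


def specificity(true_neg: int, false_pos: int) -> float:
    """Percentage of patients without the condition that the feature clears."""
    without_condition = true_neg + false_pos
    if without_condition == 0:
        raise ValueError("no patients without the condition")
    return 100.0 * true_neg / without_condition


# A 100% combined sensitivity among the 12 NCSE patients means all 12 were
# flagged and none were missed.
print(sensitivity(true_pos=12, false_neg=0))  # prints 100.0
```

The excerpt does not state the specificity of the combined features, so no study value is shown for `specificity`; it would be computed from the 36 non-NCSE patients in the same way.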

The Glasgow Coma Scale (GCS) in the Lancet in 1974 as an Aid in the Clinical Assessment of Post-Traumatic Unconsciousness

WIDESPREAD USE

More than 4500 publications related to its use have appeared (MEDLINE search performed in October 2005). The GCS is a component of the APACHE II (Acute Physiology and Chronic Health Evaluation) score, the RTS (Revised Trauma Score), the TRISS (Trauma and Injury Severity Score), and the CRAMS (Circulation, Respiration, Abdomen, Motor, Speech) scale.

NEED

The scale was developed primarily to facilitate the assessment and recording of initial severity of brain dysfunction and of ultimate outcome in a multicenter study of outcome after severe brain damage. The aim was to use simple terms that could be readily understood by a wide range of observers: doctors, nurses, and others. Repeated observations of the coma scale displayed on a bedside chart give it a second use: monitoring of improvement or deterioration in conscious level as an indication of recovery or of complications.

HISTORY

Early in World War II, the Medical Research Council (MRC) in Britain issued a glossary of 16 psychological terms commonly used in cases of head injury, which included coma, semicoma, confusion (mild, moderate, and severe), stupor, and automatism. Post-war neurosurgical practice was much more concerned with elective surgery for tumors and aneurysms, and there was a fatalistic attitude to head injuries, with the belief that little could be done to affect the outcome. Good resuscitation and intensive care saved many severely head injured patients. Neurosurgeons were challenged to assist in reducing mortality and to save as much of the damaged brain as possible. Studies in Glasgow revealed that much of the mortality and persisting disability after head injury was potentially avoidable, as reflected in the title of one paper, ‘Head injuries who talk and die’.*

* Reilly PL, Adams JH, Graham DI, et al: Patients with head injury who talk and die. …

Deep Coma Does Not Always Predict Poor Outcomes Among Patients with Polytrauma

European Journal of Trauma and Emergency Surgery (2019) 45:455–460. https://doi.org/10.1007/s00068-018-0917-8. ORIGINAL ARTICLE. Deep coma does not always predict poor outcomes among patients with polytrauma. Jen-Fu Huang1 · Chun-Hsiang Ou Yang1 · Chih-Yuan Fu1 · Yu-Tung Wu1. Received: 19 September 2017 / Accepted: 31 January 2018 / Published online: 9 February 2018. © Springer-Verlag GmbH Germany, part of Springer Nature 2018

Abstract. Purposes: This study aimed to clarify the prognosis of polytrauma patients presenting to the emergency department (ED) with a Glasgow Coma Scale score (GCS) of 3. Methods: A trauma registry system has been established at our institution since 2009. The current study reviewed patients in the registry who presented to the ED with a GCS of 3 from January 2011 to December 2015. Surviving and non-surviving patients were compared to identify the prognostic factors of patient survival. The study also aimed to determine the factors contributing to patients who survived with a GCS > 13 at discharge. Results: During the study period, 145 patients were enrolled in the study, 119 of whom (82.1%) did not survive the traumatic insult. Of the 26 survivors, 13 (9.0%) had a GCS of 14 or 15 at discharge. The multiple logistic regression revealed that a lack of bilateral dilated and fixed pupils (BFDP) (OR 5.967, 95% CI 1.780–19.997, p = 0.004) and a GCS > 3 after resuscitation (OR 6.875, 95% CI 2.135–22.138, p = 0.001) were independent prognostic factors of survival. Based on the multiple logistic regression, an age under 40 years (OR 16.405, 95% CI 1.520–177.066, p = 0.021) and a GCS > 3 after resuscitation (OR 12.100, 95% CI 1.058–138.352, p = 0.045) were independent prognostic factors of a GCS > 13 at discharge.