Exec Com April 2007



Recommended publications


‘Toronto Has No History!’

‘TORONTO HAS NO HISTORY!’ INDIGENEITY, SETTLER COLONIALISM AND HISTORICAL MEMORY IN CANADA’S LARGEST CITY By Victoria Jane Freeman. A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy, Department of History, University of Toronto. ©Copyright by Victoria Jane Freeman 2010.
ABSTRACT The Indigenous past is largely absent from settler representations of the history of the city of Toronto, Canada. Nineteenth and twentieth century historical chroniclers often downplayed the historic presence of the Mississaugas and their Indigenous predecessors by drawing on doctrines of terra nullius, ignoring the significance of the Toronto Purchase, and changing the city’s foundational story from the establishment of York in 1793 to the incorporation of the City of Toronto in 1834. These chroniclers usually assumed that “real Indians” and urban life were inimical. Often their representations implied that local Indigenous peoples had no significant history and thus the region had little or no history before the arrival of Europeans. Alternatively, narratives of ethical settler indigenization positioned the Indigenous past as the uncivilized starting point in a monological European theory of historical development. In many civic discourses, the city stood in for the nation as a symbol of its future, and national history stood in for the region’s local history. The national replaced ‘the Indigenous’ in an ideological process that peaked between the 1880s and the 1930s.

IWH Accomplishments Report 2003

Table of Contents
Introduction
Research
Behavioural Consequences of Insurance and Regulation Overview
- Systematic Review of the Literature on Workers’ Compensation System and Occupational Health and Safety Features and their Consequences for Work-related Injury Experiences (Disability Lit Review: 4/440/860)
- The Impact of Experience Rating and Occupational Health and Safety on Claims Experiences in the Ontario Jurisdiction (ER and OHS Studies: 4/440/416)
- Five Country Comparison on Declining Workers’ Compensation Injury Rates (Five Country Study: 4/440/412)
- WSIB Lost-time Injuries and Income Sources Post-injury (WSIB Lost-time Injuries: 4/440/406)
Labour Market Experiences and Health Overview
- Ten-year Mortality Follow-up for Occupations in the 1991 Canadian Census (Mortality Follow-up: 5/440/461)
- Health and Labour Market Trajectories (Market Trajectories: 5/440/448)
- Growth Curve Analysis of Work Stressors and Distress/Depression (NPHS 1994-2000) (NPHS Longitudinal: 4/420/202)
- Analytic Methods for Population-based Health and Health Care Resource Allocation (Methods for Resource Allocation: 5/440/476)
- Work Injuries and Teens (Work

Towards Race Equity in Education

TOWARDS RACE EQUITY IN EDUCATION: The Schooling of Black Students in the Greater Toronto Area. April 2017. The Jean Augustine Chair in Education, Community & Diaspora.
Contents
- About This Project
- Acknowledgements
- PART 1: INTRODUCTION
- PART 2: THE CURRENT CONTEXT
- PART 3: DEMOGRAPHIC OVERVIEW OF ONTARIO’S BLACK POPULATION
- PART 4: RACIAL DIFFERENCES IN THE EDUCATION OF STUDENTS
- 4.1 What the TDSB data tells us about the educational situation of Black students
- 4.2 Perspectives from the community
- PART 5: DISCUSSION & CONCLUSION
- PART 6: RECOMMENDATIONS
- REFERENCES
Black students are as capable, as competent, as creative, and as determined as all other students. The ways that Black students are constantly misjudged and mistreated by teachers and guidance counsellors is an injustice to our community. As educators who seek to enrich an increasingly diverse nation, it is your duty and responsibility to encourage, motivate, challenge and strengthen Black students like all others. When you begin to see Black students as part of your community, only then will you effectively fulfill your job as an educator. ~ Black Student
About This Project: This report is the result of a collaborative project between Dr. Carl James, Jean Augustine Chair in Education, Community & Diaspora at York University; the African Canadian Legal Clinic (ACLC); and the Ontario Alliance of Black School Educators (ONABSE). The community consultations were organized by the ACLC and ONABSE, while the research (data collection, analysis, and report writing) was led by Dr. Carl James with Tana Turner.
JEAN AUGUSTINE CHAIR IN EDUCATION, COMMUNITY & DIASPORA: The Jean Augustine Chair in Education, Community & Diaspora is a university chair in the Faculty of Education which aims to advance access, equity and inclusivity to education through community engagement and collaborative action.

Sport-Scan Daily Brief

SPORT-SCAN DAILY BRIEF NHL 1/26/2021
Anaheim Ducks
- 1200498 Hampus Lindholm doing some heavy lifting for Ducks’ defense corps
Arizona Coyotes
- 1200499 Former Coyotes GM John Chayka suspended from NHL through 2021, reports say
Boston Bruins
- 1200500 Bruins’ David Pastrnak hits the ice for full contact, may return to action by this weekend
- 1200501 Bruins Notebook: David Pastrnak inching closer to return
- 1200502 Pastrnak close to returning? Bruins coach gives promising update
- 1200503 Pastrnak Closing On Bruins’ Return, ‘Itching To Get Out There’
- 1200504 Time For Boston Bruins-Style Mea Culpa From Ritchie Critics
- 1200505 David Pastrnak, surgery behind him, relishes pain-free play: ‘It’s wonderful’
- 1200506 Pain, prayer, peace: Former Bruin Adam McQuaid feels fulfilled upon retirement
Detroit Red Wings
- 1200527 Detroit Red Wings' Anthony Mantha rips his own performance in 5 of 6 games
- 1200528 Some glitches, $100,000-plus losses in first weekend of Michigan online sports betting
- 1200529 'I can redeem myself': Red Wings' Anthony Mantha fighting to crawl out of early-season slump
- 1200530 Red Wings’ Anthony Mantha: ‘I need to get my game going’
Edmonton Oilers
- 1200531 Oil Spills: In Mikko the Oilers have to trust
- 1200532 Puljujarvi and Oilers need a repeat performance against the Jets
- 1200533 JONES: Puljujarvi stepping up as Oilers still looking for back-to-back wins
- 1200534 'Jesse has all the tools': Oilers' Puljujarvi a force against the Jets
Florida Panthers
- 1200535 Florida Panthers would ‘rather play than practice.’ They’re about to start playing

A Night at the Garden(s): A History of Professional Hockey Spectatorship

A Night at the Garden(s): A History of Professional Hockey Spectatorship in the 1920s and 1930s by Russell David Field. A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy, Graduate Department of Exercise Sciences, University of Toronto. © Copyright by Russell David Field 2008.
Library and Archives Canada, Published Heritage Branch, 395 Wellington Street, Ottawa ON K1A 0N4, Canada. ISBN: 978-0-494-39833-3.
NOTICE: The author has granted a non-exclusive license allowing Library and Archives Canada to reproduce, publish, archive, preserve, conserve, communicate to the public by telecommunication or on the Internet, loan, distribute and sell theses worldwide, for commercial or non-commercial purposes, in microform, paper, electronic and/or any other formats. The author retains copyright ownership and moral rights in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission.

Toronto City Council Decision Document Regular Meeting on January 31, February 1 and 2, 2006

City Clerk’s Office. Ulli S. Watkiss, City Clerk. Secretariat: Marilyn Toft, Council Secretariat Support, City Hall, 12th Floor, West, 100 Queen Street West, Toronto, Ontario M5H 2N2. Tel: 416-392-7032. Fax: 416-392-2980. e-mail: [email protected]. Web: www.toronto.ca.
TORONTO CITY COUNCIL DECISION DOCUMENT, REGULAR MEETING ON JANUARY 31, FEBRUARY 1 AND 2, 2006
City Council’s actions on each Clause in the following Reports and Notices of Motions considered at the meeting are contained in this Decision Document. Declarations of Interest, if any, are included and all additional material noted in this document is on file in the City Clerk’s Office, Toronto City Hall. Please refer to the Council Minutes for the official record of Council’s proceedings.
Deferred Clauses:
- Policy and Finance Committee Report 8 (2005)
- Administration Committee Report 9 (2005)
- Scarborough Community Council Report 9 (2005)
- Planning and Transportation Committee Report 10 (2005)
New Reports:
- Policy and Finance Committee Report 1
- Administration Committee Report 1
- Board of Health Report 1

GAME NOTES Oakland Athletics Baseball Company 510-638-4900 | Athletics.Com | @Athletics

GAME NOTES Oakland Athletics Baseball Company 510-638-4900 | athletics.com | @athletics
OAKLAND ATHLETICS (19-13) VS. TORONTO BLUE JAYS (15-14), THURSDAY, MAY 6, 2021, OAKLAND COLISEUM, 12:37 PDT. RHP MIKE FIERS (0-1, 4.50) VS. LHP HYUN JIN RYU (1-2, 2.60). NBCSCA, A’S CAST ON IHEART RADIO, A’S RADIO NETWORK (960 AM, 103.7 FM HD2, 1140 AM)
ABOUT THE A’S: Had their three-game winning streak snapped last night and are now 5-6 since their 13-game winning streak…are 19-7 over the last 26 games after starting the season 0-6 and are 19-13 (.594) overall, which is the best record in the majors…however, have been out-scored 142-135 for a run differential of -7…have held at least a share of first place in the American League West for each of the last 16 days and have been alone in first for each of the last 13…currently have a season-high tying two game lead…went from sole possession of last on April 11 to sole possession of first on April 21…have spent 14 days alone in first place this year, two days tied for first, one day tied for second, three days in third, two days in fourth, three days tied for fourth and 10 days in fifth.
DEFENSIVE DOINGS: The A’s rank third in the American League in fielding percentage (.987) and their 15 errors are tied for third fewest…however, have -20 defensive runs saved, which is the fewest in the majors (Angels, -18)…A’s pitchers are tied for third in the AL in pickoffs (2)…A’s opponents have been successful in just 11-of-16 (69.7%) stolen base attempts, which is fourth lowest in the AL.
HOME AND AWAY: The A’s are 3-3 on this season-long 10-game homestand against Baltimore (1-2), Toronto (2-1) and Tampa Bay (three games)…had their eight-game home winning streak snapped last Friday night and are

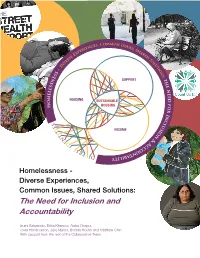

The Need for Inclusion and Accountability

Homelessness - Diverse Experiences, Common Issues, Shared Solutions: The Need for Inclusion and Accountability. Izumi Sakamoto, Erika Khandor, Aisha Chapra, Tekla Hendrickson, Julie Maher, Brenda Roche and Matthew Chin. With support from the rest of the Collaborative Team.
Homelessness – Diverse Voices, Common Experiences, Shared Solutions: The Need for Inclusion and Accountability. Published: Toronto: Factor-Inwentash Faculty of Social Work, University of Toronto. October 2008.
Authors: Izumi Sakamoto, Erika Khandor, Aisha Chapra, Tekla Hendrickson, Julie Maher, Brenda Roche and Matthew Chin. Support and assistance for writing this document were provided by Cyndy Baskin, Nancy Viva Davis Halifax, David Hulchanski, Kate Mason, Catherine Moravac, Grace Piekielko, Michael Shapcott, and the rest of the Collaborative Team.
Collaborative Team:
- Izumi Sakamoto (Lead Researcher), Assistant Professor, Factor-Inwentash Faculty of Social Work, University of Toronto
- Cyndy Baskin, Associate Professor, School of Social Work, Ryerson University
- Aisha Chapra, Research Co-Coordinator, Collaborative Arts Research Project, Factor-Inwentash Faculty of Social Work, University of Toronto
- Matthew Chin, Research Co-Coordinator, Collaborative Arts Research Project, Factor-Inwentash Faculty of Social Work, University of Toronto

Illuminating the Past Brightening the Future

ILLUMINATING THE PAST
BRIGHTENING THE FUTURE
AN ILLUSTRATED HISTORY
International Brotherhood of Electrical Workers, Local Union 353, 1903–2003. Years of Electrifying Progress. By Edward E. Seymour.
Edward E. Seymour is the owner of Solidarity Consulting, a labour relations consulting firm for unions. He is also a partner with Michael Lyons and Sherril Murray in Resolutions Unlimited (2000), a firm that concentrates on resolving harassment and discrimination issues in the work place. Ed serves as a nominee on arbitration boards for several unions including the Ontario Public Service Employees Union, the Canadian Union of Public Employees and the Communications Energy and Paper Workers Union. Born in Port aux Basques, Newfoundland and raised in Sydney, Nova Scotia, Ed moved to Ontario in 1958. His trade union experience dates from 1962 when he became a member of Lodge 1246 of the International Association of Machinists and Aerospace Workers. He received his Bachelor of Arts in Political Science from the University of Waterloo in 1974 after attending classes during the day and working at night. Ed was the Canadian Education and Publicity Director for the Textile Workers Union of America (now part of U.N.I.T.E.) from 1970 to 1977.

Caring for Kids

CITRUS COUNTY. www.chronicleonline.com. Monday, July 5, 2021. Florida’s Best Community Newspaper Serving Florida’s Best Community. $1. Vol. 126, Issue 271.
MLB: Rays beat Blue Jays to break road losing streak / B1
Today & next morning: High 92, low 72. Scattered rain. Keeping watch on tropical storm. Page A4.
INSIDE: Fourth fun. Citrus County carries on with its Fourth of July ...
Officials say wall collapse not dire
FDOT: Heavy rains caused problem
MIKE WRIGHT, Staff writer
State transportation officials say there is no cause for concern over a concrete panel that collapsed on a Suncoast Parkway underpass ... slope between the northbound and southbound lanes, collapsed sometime after heavy rains that hit Citrus County on Friday, June 25. The overpass crosses Southern Street near Seven Rivers Christian ... damage to the Chronicle, which forwarded it to the Florida Department of Transportation for an explanation.
FDOT spokeswoman Ivette Ruiz-Paz’s response: “First and foremost, the wall shown in the photo is not for retaining or structural purposes and Florida’s Turnpike Enterprise ... This wall is installed for slope protection against erosion. The heavy rains experienced the previous week resulted in some washout of the dirt beneath the slope pavement and affected some of the concrete panel sections, either late Sunday night or early Monday morning, leading to the wall’s current appearance.

GD18-51 Annual Report Research and Innovation 2018

McGILL UNIVERSITY BOARD OF GOVERNORS Memorandum. Office of the Vice-Principal (Research and Innovation), James Administration Building, room 419, 845 Sherbrooke Street W., Montreal (QC) H3A 2T5. Tel: (514) 398-2995 | Fax: (514) 398-8257
TO: Board of Governors
FROM: Professor Martha Crago, Vice-Principal (Research and Innovation)
SUBJECT: Annual Report on Research and Innovation 2018
DATE: April 25, 2019
DOCUMENT #: GD18-51
ACTION REQUIRED: INFORMATION or APPROVAL/DECISION
ISSUE & EXPECTED OUTCOME: Indicators of McGill’s recent research funding performance and performance in areas related to innovation and partnership-building between the University and external communities are presented to the Board of Governors for information.
BACKGROUND & RATIONALE: The Annual Report on Research and Innovation is a review of McGill’s performance in major funding programs, and progress in areas related to innovation and partnerships in FY2017 and three-year averages from FY2015 to FY2017. It examines how McGill compares to comparable universities and U15 peers in terms of:
- Amount and sources of sponsored research funding
- Sponsored research funding per faculty member
- Tri-Agency funding
- Fonds de recherche du Québec funding
- Industry sponsored research funding including revenue from inventions, licences and options
- National competitions such as Canada Foundation for Innovation, Canada First Research Excellence Fund, and Canada Excellence Research Chairs
- Publications
ALIGNMENT WITH MISSION AND STRATEGIC PRIORITIES: The goal of the report is to inform members of the Board of Governors and the wider McGill community of significant issues related to research funding while outlining how the University’s research enterprise is becoming better suited to compete in the future. The report therefore aims to inform Governors of McGill’s progress in its mission to remain one of Canada and the world’s best research-intensive universities as well as toward its strategic priority of enhancing and strengthening its engagement with the full range of community partners.

January 2020 the Canadian South East Asia Refugee Historical

January 2020. The Canadian South East Asia Refugee Historical Research Project: Hearts of Freedom. Background paper on the archival media research: Toronto Star and The Globe and Mail (1975-1985). Dr. Filipe Duarte, Assistant Professor, School of Social Work, University of Windsor, Ontario.
This background paper offers a detailed overview of the archival media research conducted on the historical records (microfilms and online databases) of two Canadian newspapers, the Toronto Star and The Globe and Mail, between May 1, 1975, and December 31, 1985. The archival media research reviewed between 20,000 and 25,000 results across both newspapers over a five-month period (February-June 2019). Research was undertaken by searching selected keywords identified in the book Running on Empty: Canada and the Indochinese Refugees, 1975-1980, written by Michael J. Molloy, Peter Duschinsky, Kurt F. Jensen and Robert Shalka. The keywords identified were: refugees, boat people, southeast Asian, Indochinese, Vietnam, Laos, Cambodia, Vietnamese, Cambodian(s), Laotian(s), resettlement, and sponsorship. The analysis of the press clippings then proceeded by grouping the findings according to four categories and stages: (1) beginning of the arrival of refugees (migration journey, etc.); (2) public and political/government opinions; (3) sponsorship by individuals, churches and groups; and (4) resettlement and integration into Canadian society.
The beginning of the arrival of refugees: The analysis of the findings under the first category, “beginning of the arrival of refugees”, showed that the first refugees, a family of five, landed at Toronto airport on May 8, 1975. They already had a family member living in Toronto. One month earlier, 53 orphans had also arrived in Canada.