ISBRA 2012 Short Abstracts

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Lineage-Specific Evolution of the Vertebrate Otopetrin Gene Family Revealed by Comparative Genomic Analyses

Hurle et al. BMC Evolutionary Biology 2011, 11:23 http://www.biomedcentral.com/1471-2148/11/23 RESEARCH ARTICLE Open Access Lineage-specific evolution of the vertebrate Otopetrin gene family revealed by comparative genomic analyses Belen Hurle1, Tomas Marques-Bonet2,3, Francesca Antonacci3, Inna Hughes4, Joseph F Ryan1, NISC Comparative Sequencing Program1,5, Evan E Eichler3, David M Ornitz6, Eric D Green1,5* Abstract Background: Mutations in the Otopetrin 1 gene (Otop1) in mice and fish produce an unusual bilateral vestibular pathology that involves the absence of otoconia without hearing impairment. The encoded protein, Otop1, is the only functionally characterized member of the Otopetrin Domain Protein (ODP) family; the extended sequence and structural preservation of ODP proteins in metazoans suggest a conserved functional role. Here, we use the tools of sequence- and cytogenetic-based comparative genomics to study the Otop1 and the Otop2-Otop3 genes and to establish their genomic context in 25 vertebrates. We extend our evolutionary study to include the gene mutated in Usher syndrome (USH) subtype 1G (Ush1g), both because of the head-to-tail clustering of Ush1g with Otop2 and because Otop1 and Ush1g mutations result in inner ear phenotypes. Results: We established that OTOP1 is the boundary gene of an inversion polymorphism on human chromosome 4p16 that originated in the common human-chimpanzee lineage more than 6 million years ago. Other lineage-specific evolutionary events included a three-fold expansion of the Otop genes in Xenopus tropicalis and of Ush1g in teleostei fish. The tight physical linkage between Otop2 and Ush1g is conserved in all vertebrates. -

Grammar String: a Novel Ncrna Secondary Structure Representation

Grammar string: a novel ncRNA secondary structure representation Rujira Achawanantakun, Seyedeh Shohreh Takyar, and Yanni Sun∗ Department of Computer Science and Engineering, Michigan State University, East Lansing, MI 48824, USA ∗Email: [email protected] Multiple ncRNA alignment has important applications in homologous ncRNA consensus structure derivation, novel ncRNA identification, and known ncRNA classification. As many ncRNAs’ functions are determined by both their sequences and secondary structures, accurate ncRNA alignment algorithms must maximize both sequence and structural similarity simultaneously, incurring high computational cost. Faster secondary structure modeling and alignment methods using trees, graphs, probability matrices have thus been developed. Despite promising results from existing ncRNA alignment tools, there is a need for more efficient and accurate ncRNA secondary structure modeling and alignment methods. In this work, we introduce grammar string, a novel ncRNA secondary structure representation that encodes an ncRNA’s sequence and secondary structure in the parameter space of a context-free grammar (CFG). Being a string defined on a special alphabet constructed from a CFG, it converts ncRNA alignment into sequence alignment with O(n²) complexity. We align hundreds of ncRNA families from BraliBase 2.1 using grammar strings and compare their consensus structure with Murlet using the structures extracted from Rfam as reference. Our experiments have shown that grammar string based multiple sequence alignment competes favorably in consensus structure quality with Murlet. Source codes and experimental data are available at http://www.cse.msu.edu/~yannisun/grammar-string. 1. INTRODUCTION Annotating noncoding RNAs (ncRNAs), which are not translated into protein but function directly as RNA, is highly important to modern biology.
[...] both the sequence and structural conservations. A successful application of SCFG is ncRNA classification, which classifies query sequences into annotated ncRNA families such as tRNA, rRNA, riboswitch -

120421-24Recombschedule FINAL.Xlsx

Friday 20 April 18:00 20:00 REGISTRATION OPENS in Fira Palace 20:00 21:30 WELCOME RECEPTION in CaixaForum (access map) Saturday 21 April 8:00 8:50 REGISTRATION 8:50 9:00 Opening Remarks (Roderic GUIGÓ and Benny CHOR) Session 1. Chair: Roderic GUIGÓ (CRG, Barcelona ES) 9:00 10:00 Richard DURBIN The Wellcome Trust Sanger Institute, Hinxton UK "Computational analysis of population genome sequencing data" 10:00 10:20 44 Yaw-Ling Lin, Charles Ward and Steven Skiena Synthetic Sequence Design for Signal Location Search 10:20 10:40 62 Kai Song, Jie Ren, Zhiyuan Zhai, Xuemei Liu, Minghua Deng and Fengzhu Sun Alignment-Free Sequence Comparison Based on Next Generation Sequencing Reads 10:40 11:00 178 Yang Li, Hong-Mei Li, Paul Burns, Mark Borodovsky, Gene Robinson and Jian Ma TrueSight: Self-training Algorithm for Splice Junction Detection using RNA-seq 11:00 11:30 coffee break Session 2. Chair: Bonnie BERGER (MIT, Cambridge US) 11:30 11:50 139 Son Pham, Dmitry Antipov, Alexander Sirotkin, Glenn Tesler, Pavel Pevzner and Max Alekseyev PATH-SETS: A Novel Approach for Comprehensive Utilization of Mate-Pairs in Genome Assembly 11:50 12:10 171 Yan Huang, Yin Hu and Jinze Liu A Robust Method for Transcript Quantification with RNA-seq Data 12:10 12:30 120 Zhanyong Wang, Farhad Hormozdiari, Wen-Yun Yang, Eran Halperin and Eleazar Eskin CNVeM: Copy Number Variation detection Using Uncertainty of Read Mapping 12:30 12:50 205 Dmitri Pervouchine Evidence for widespread association of mammalian splicing and conserved long range RNA structures 12:50 13:10 169 Melissa Gymrek, David Golan, Saharon Rosset and Yaniv Erlich lobSTR: A Novel Pipeline for Short Tandem Repeats Profiling in Personal Genomes 13:10 13:30 217 Rory Stark Differential oestrogen receptor binding is associated with clinical outcome in breast cancer 13:30 15:00 lunch break Session 3. -

Python for Bioinformatics, Second Edition

PYTHON FOR BIOINFORMATICS SECOND EDITION CHAPMAN & HALL/CRC Mathematical and Computational Biology Series Aims and scope: This series aims to capture new developments and summarize what is known over the entire spectrum of mathematical and computational biology and medicine. It seeks to encourage the integration of mathematical, statistical, and computational methods into biology by publishing a broad range of textbooks, reference works, and handbooks. The titles included in the series are meant to appeal to students, researchers, and professionals in the mathematical, statistical and computational sciences, fundamental biology and bioengineering, as well as interdisciplinary researchers involved in the field. The inclusion of concrete examples and applications, and programming techniques and examples, is highly encouraged. Series Editors N. F. Britton Department of Mathematical Sciences University of Bath Xihong Lin Department of Biostatistics Harvard University Nicola Mulder University of Cape Town South Africa Maria Victoria Schneider European Bioinformatics Institute Mona Singh Department of Computer Science Princeton University Anna Tramontano Department of Physics University of Rome La Sapienza Proposals for the series should be submitted to one of the series editors above or directly to: CRC Press, Taylor & Francis Group 3 Park Square, Milton Park Abingdon, Oxfordshire OX14 4RN UK Published Titles An Introduction to Systems Biology: Design Principles of Biological Circuits, Uri Alon; Statistical Methods for QTL Mapping, Zehua Chen -

University of Nevada, Reno American Shinto Community of Practice

University of Nevada, Reno American Shinto Community of Practice: Community formation outside original context A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts in Anthropology By Craig E. Rodrigue Jr. Dr. Erin E. Stiles/Thesis Advisor May, 2017 THE GRADUATE SCHOOL We recommend that the thesis prepared under our supervision by CRAIG E. RODRIGUE JR. Entitled American Shinto Community Of Practice: Community Formation Outside Original Context be accepted in partial fulfillment of the requirements for the degree of MASTER OF ARTS Erin E. Stiles, Advisor Jenanne K. Ferguson, Committee Member Meredith Oda, Graduate School Representative David W. Zeh, Ph.D., Dean, Graduate School May, 2017 i Abstract Shinto is a native Japanese religion with a history that goes back thousands of years. Because of its close ties to Japanese culture, and Shinto’s strong emphasis on place in its practice, it does not seem to be the kind of religion that would migrate to other areas of the world and convert new practitioners. However, not only are there examples of Shinto being practiced outside of Japan, the people doing the practice are not always of Japanese heritage. The Tsubaki Grand Shrine of America is one of the only fully functional Shinto shrines in the United States and is run by the first non-Japanese Shinto priest. This thesis looks at the community of practice that surrounds this American shrine and examines how membership is negotiated through action. There are three main practices that form the larger community: language use, rituals, and Aikido. Through participation in these activities members engage with an American Shinto community of practice. -

Childbearing in Japanese Society: Traditional Beliefs and Contemporary Practices

Childbearing in Japanese Society: Traditional Beliefs and Contemporary Practices by Gunnella Thorgeirsdottir A thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy The University of Sheffield Faculty of Social Sciences School of East Asian Studies August 2014 Abstract In recent years there has been an oft-held assumption as to the decline of traditions as well as folk belief amidst the technological modern age. The current thesis seeks to bring to light the various rituals, traditions and beliefs surrounding pregnancy in Japanese society, arguing that, although changed, they are still very much alive and a large part of the pregnancy experience. Current perception and ideas were gathered through a series of in-depth interviews with 31 Japanese females of varying ages and socio-cultural backgrounds. These current perceptions were then compared to and contrasted with historical data of a folkloristic nature, seeking to highlight developments and seek out continuities. This was done within the theoretical framework of the liminal nature of that which is betwixt and between as set forth by Victor Turner, as well as theories set forth by Mary Douglas and her ideas of the polluting element of the liminal. It quickly became obvious that the beliefs were still strong having though developed from a person-to-person communication and into a set of knowledge acquired by the mother largely from books, magazines and/or offline. Acknowledgements This thesis would never have been written had it not been for the endless assistance, patience and good will of a good number of people. -

2008 UPRISING in TIBET: CHRONOLOGY and ANALYSIS © 2008, Department of Information and International Relations, CTA First Edition, 1000 Copies ISBN: 978-93-80091-15-0

2008 UPRISING IN TIBET CHRONOLOGY AND ANALYSIS CONTENTS (Full contents here) Foreword List of Abbreviations 2008 Tibet Uprising: A Chronology 2008 Tibet Uprising: An Analysis Introduction Facts and Figures State Response to the Protests Reaction of the International Community Reaction of the Chinese People Causes Behind 2008 Tibet Uprising: Flawed Tibet Policies? Political and Cultural Protests in Tibet: 1950-1996 Conclusion Appendices Maps Glossary of Counties in Tibet 2008 UPRISING IN TIBET CHRONOLOGY AND ANALYSIS UN, EU & Human Rights Desk Department of Information and International Relations Central Tibetan Administration Dharamsala - 176215, HP, INDIA 2010 2008 UPRISING IN TIBET: CHRONOLOGY AND ANALYSIS © 2008, Department of Information and International Relations, CTA First Edition, 1000 copies ISBN: 978-93-80091-15-0 Acknowledgements: Norzin Dolma Editorial Consultants Jane Perkins (Chronology section) JoAnn Dionne (Analysis section) Other Contributions (Chronology section) Gabrielle Lafitte, Rebecca Nowark, Kunsang Dorje, Tsomo, Dhela, Pela, Freeman, Josh, Jean Cover photo courtesy Agence France-Presse (AFP) Published by: UN, EU & Human Rights Desk Department of Information and International Relations (DIIR) Central Tibetan Administration (CTA) Gangchen Kyishong Dharamsala - 176215, HP, INDIA Phone: +91-1892-222457,222510 Fax: +91-1892-224957 Email: [email protected] Website: www.tibet.net; www.tibet.com Printed at: Narthang Press DIIR, CTA Gangchen Kyishong Dharamsala - 176215, HP, INDIA ... for those who lost their lives, for -

A POPULAR DICTIONARY of Shinto

A POPULAR DICTIONARY OF Shinto A POPULAR DICTIONARY OF Shinto BRIAN BOCKING Curzon First published by Curzon Press 15 The Quadrant, Richmond Surrey, TW9 1BP This edition published in the Taylor & Francis e-Library, 2005. “To purchase your own copy of this or any of Taylor & Francis or Routledge’s collection of thousands of eBooks please go to http://www.ebookstore.tandf.co.uk/.” Copyright © 1995 by Brian Bocking Revised edition 1997 Cover photograph by Sharon Hoogstraten Cover design by Kim Bartko All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of the publisher. British Library Cataloguing in Publication Data A catalogue record for this book is available from the British Library ISBN 0-203-98627-X Master e-book ISBN ISBN 0-7007-1051-5 (Print Edition) To Shelagh INTRODUCTION How to use this dictionary A Popular Dictionary of Shintō lists in alphabetical order more than a thousand terms relating to Shintō. Almost all are Japanese terms. The dictionary can be used in the ordinary way if the Shintō term you want to look up is already in Japanese (e.g. kami rather than ‘deity’) and has a main entry in the dictionary. If, as is very likely, the concept or word you want is in English such as ‘pollution’, ‘children’, ‘shrine’, etc., or perhaps a place-name like ‘Kyōto’ or ‘Akita’ which does not have a main entry, then consult the comprehensive Thematic Index of English and Japanese terms at the end of the Dictionary first. -

Michael S. Waterman: Breathing Mathematics Into Genes >>>

ISSUE 13 Newsletter of Institute for Mathematical Sciences, NUS 2008 Michael S. Waterman: Breathing Mathematics into Genes >>> Interview of Michael S. Waterman by Y.K. Leong Michael Waterman is world acclaimed for pioneering and fundamental work in probability and algorithms that has tremendous impact on molecular biology, genomics and bioinformatics. He was a founding member of the Santa Cruz group that launched the Human Genome Project in 1990, and his work was instrumental in bringing the public and private efforts of mapping the human genome to their completion in 2003, two years ahead of schedule.
[...] setting up of the Center for Computational and Experimental Genomics in 2001, Waterman and his collaborators and students continue to provide a road map for the solution of post-genomic computational problems. For his scientific contributions he was elected fellow or member of prestigious learned bodies like the American Academy of Arts and Sciences, National Academy of Sciences, American Association for the Advancement of Science, Institute of Mathematical Statistics, Celera Genomics and French Académie des Sciences. He was awarded a Gairdner Foundation International Award and the Senior Scientist Accomplishment Award of the International Society of Computational Biology. He currently holds an Endowed Chair at USC and has held numerous visiting positions in major universities. In addition to research, he is actively involved in the academic and social activities of students as faculty master of USC’s International Residential College at Parkside. Waterman has served as advisor to NUS on genomic research and was a member of the organizational committee of the Institute’s thematic program Post-Genome Knowledge Discovery (Jan – June 2002). On one of his advisory visits to NUS, Imprints took the opportunity to interview him on 7 February 2007. The following is an edited and enhanced version of the interview in which he describes the excitement of participating in one of the greatest modern scientific adventures and of unlocking the mystery behind -

BIOINFORMATICS ISCB NEWS Doi:10.1093/Bioinformatics/Btp280

Vol. 25 no. 12 2009, pages 1570–1573 BIOINFORMATICS ISCB NEWS doi:10.1093/bioinformatics/btp280 ISMB/ECCB 2009 Stockholm Marie-France Sagot1, B.J. Morrison McKay2,∗ and Gene Myers3 1INRIA Grenoble Rhône-Alpes and University of Lyon 1, Lyon, France, 2International Society for Computational Biology, University of California San Diego, La Jolla, CA and 3Howard Hughes Medical Institute Janelia Farm Research Campus, Ashburn, Virginia, USA ABSTRACT The International Society for Computational Biology (ISCB; http://www.iscb.org) presents the Seventeenth Annual International Conference on Intelligent Systems for Molecular Biology (ISMB), organized jointly with the Eighth Annual European Conference on Computational Biology (ECCB; http://bioinf.mpi-inf.mpg.de/conferences/eccb/eccb.htm), in Stockholm, Sweden, 27 June to 2 July 2009. The organizers are putting the finishing touches on the year’s premier computational biology conference, with an expected attendance of 1400 computer scientists, mathematicians, statisticians, biologists and scientists from other disciplines related to and reliant on this multi-disciplinary [...] Computational Biology (http://www.iscb.org) was formed to take over the organization, maintain the institutional memory of ISMB and expand the informational resources available to members of the bioinformatics community. The launch of ECCB (http://bioinf.mpi-inf.mpg.de/conferences/eccb/eccb.htm) 8 years ago provided for a focus on European research activities in years when ISMB is held outside of Europe, and a partnership of conference organizing efforts for the presentation of a single international event when the ISMB meeting takes place in Europe every other year. The multidisciplinary field of bioinformatics/computational biology has matured since gaining widespread recognition in the early days of genomics research. -

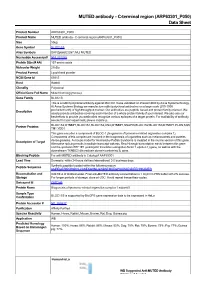

MUTED Antibody - C-Terminal Region (ARP63301 P050) Data Sheet

MUTED antibody - C-terminal region (ARP63301_P050) Data Sheet Product Number ARP63301_P050 Product Name MUTED antibody - C-terminal region (ARP63301_P050) Size 50ug Gene Symbol BLOC1S5 Alias Symbols DKFZp686E2287; MU; MUTED Nucleotide Accession# NM_201280 Protein Size (# AA) 187 amino acids Molecular Weight 21kDa Product Format Lyophilized powder NCBI Gene Id 63915 Host Rabbit Clonality Polyclonal Official Gene Full Name Muted homolog (mouse) Gene Family BLOC1S Description This is a rabbit polyclonal antibody against MUTED. It was validated on Western Blot by Aviva Systems Biology. At Aviva Systems Biology we manufacture rabbit polyclonal antibodies on a large scale (200-1000 products/month) of high throughput manner. Our antibodies are peptide based and protein family oriented. We usually provide antibodies covering each member of a whole protein family of your interest. We also use our best efforts to provide you antibodies recognize various epitopes of a target protein. For availability of antibody needed for your experiment, please inquire (). Partner Proteins BLOC1S2, DTNBP1, BLOC1S1, BLOC1S2, CNO, DTNBP1, SNAPIN, BLOC1S2, BLOC1S3, DTNBP1, PLDN, SQSTM1, YOD1 Description of Target This gene encodes a component of BLOC-1 (biogenesis of lysosome-related organelles complex 1). Components of this complex are involved in the biogenesis of organelles such as melanosomes and platelet-dense granules. A mouse model for Hermansky-Pudlak Syndrome is mutated in the murine version of this gene. Alternative splicing results in multiple transcript variants. Read-through transcription exists between this gene and the upstream EEF1E1 (eukaryotic translation elongation factor 1 epsilon 1) gene, as well as with the downstream TXNDC5 (thioredoxin domain containing 5) gene. -

F. Alex Feltus, Ph.D

F. Alex Feltus, Ph.D. Curriculum Vitae Clemson University • Department of Genetics & Biochemistry Biosystems Research Complex Rm 302C • 105 Collings St. • Clemson, SC 29634 (864) 656-3231 (office) • (864) 654-5403 (home) • Skype: alex.feltus • [email protected] https://www.clemson.edu/science/departments/genetics-biochemistry/people/profiles/ffeltus https://orcid.org/0000-0002-2123-6114 https://www.linkedin.com/in/alex-feltus-86a0073a Educational Background: Ph.D. Cell Biology (2000) Vanderbilt University (Nashville, TN) B.Sc. Biochemistry (1992) Auburn University (Auburn, AL) Ph.D. Dissertation Title: Transcriptional Regulation of Human Type II 3β-Hydroxysteroid Dehydrogenase: Stat5-Centered Control by Steroids, Prolactin, EGF, and IL-4 Hormones. Professional Experience: 2018- Professor, Clemson University Department of Genetics and Biochemistry 2017- Core Faculty, Biomedical Data Science and Informatics (BDSI) PhD Program 2018- Faculty Member, Clemson Center for Human Genetics 2020- Faculty Scholar, Clemson University School of Health Research (CUSHR) 2019- co-Founder,