Anti-Virus Comparative August 2009

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Hostscan 4.8.01064 Antimalware and Firewall Support Charts

HostScan 4.8.01064 Antimalware and Firewall Support Charts 10/1/19 © 2019 Cisco and/or its affiliates. All rights reserved. This document is Cisco public. Contents: HostScan Version 4.8.01064 Antimalware and Firewall Support Charts; Antimalware and Firewall Attributes Supported by HostScan; OPSWAT Version Information; Cisco AnyConnect HostScan Antimalware Compliance Module v4.3.890.0 for Windows; Cisco AnyConnect HostScan Firewall Compliance Module v4.3.890.0 for Windows; Cisco AnyConnect HostScan Antimalware Compliance Module v4.3.824.0 for macOS; Cisco AnyConnect HostScan Firewall Compliance Module v4.3.824.0 for macOS; Cisco AnyConnect HostScan Antimalware Compliance Module v4.3.730.0 for Linux; Cisco AnyConnect HostScan Firewall Compliance Module v4.3.730.0 for Linux. -

Security Survey 2014

Security Survey 2014 www.av-comparatives.org IT Security Survey 2014 Language: English Last Revision: 28th February 2014 Overview Use of the Internet by home and business users continues to grow in all parts of the world. How users access the Internet is changing, though. There has been increased usage of smartphones by users to access the Internet. The tablet market has taken off as well. This has resulted in a drop in desktop and laptop sales. With respect to attacks by cyber criminals, this means that their focus has evolved. This is our fourth annual survey of computer users worldwide. Its focus is which security products (free and paid) are employed by users, OS usage, and browser usage. We also asked respondents to rank what they are looking for in their security solution. Survey methodology Report results are based on an Internet survey run by AV-Comparatives between 17th December 2013 and 17th January 2014. A total of 5,845 computer users from around the world anonymously answered the questions on the subject of computers and security. Key Results Among respondents, the three most important aspects of a security protection product were (1) Low impact on system performance (2) Good detection rate (3) Good malware removal and cleaning capabilities. These were the only criteria with over 60% response rate. Europe, North America and Central/South America were similar in terms of which products they used, with Avast topping the list. The share of Android as the mobile OS increased from 51% to 70%, while Symbian dropped from 21% to 5%. -

Page 1 of 375 6/16/2021 File:///C:/Users/Rtroche

Page 1 of 375 :: Access Flex Bear High Yield ProFund :: Schedule of Portfolio Investments :: April 30, 2021 (unaudited) Repurchase Agreements(a) (27.5%) Principal Amount Value Repurchase Agreements with various counterparties, 0.00%, dated 4/30/21, due 5/3/21, total to be received $129,000. $ 129,000 $ 129,000 TOTAL REPURCHASE AGREEMENTS (Cost $129,000) 129,000 TOTAL INVESTMENT SECURITIES 129,000 (Cost $129,000) - 27.5% Net other assets (liabilities) - 72.5% 340,579 NET ASSETS - (100.0%) $ 469,579 (a) The ProFund invests in Repurchase Agreements jointly with other funds in the Trust. See "Repurchase Agreements" in the Appendix to view the details of each individual agreement and counterparty as well as a description of the securities subject to repurchase. Futures Contracts Sold Number Value and Unrealized of Expiration Appreciation/ Contracts Date Notional Amount (Depreciation) 5-Year U.S. Treasury Note Futures Contracts 3 7/1/21 $ (371,977) $ 2,973 Centrally Cleared Swap Agreements Credit Default Swap Agreements - Buy Protection (1) Implied Credit Spread at Notional Premiums Unrealized Underlying Payment Fixed Deal Maturity April 30, Amount Paid Appreciation/ Variation Instrument Frequency Pay Rate Date 2021(2) (3) Value (Received) (Depreciation) Margin CDX North America High Yield Index Swap Agreement; Series 36 Daily 5 .00% 6/20/26 2.89% $ 450,000 $ (44,254) $ (38,009) $ (6,245) $ 689 (1) When a credit event occurs as defined under the terms of the swap agreement, the Fund as a buyer of credit protection will either (i) receive from the seller of protection an amount equal to the par value of the defaulted reference entity and deliver the reference entity or (ii) receive a net amount equal to the par value of the defaulted reference entity less its recovery value. -

Aluria Security Center Avira Antivir Personaledition Classic 7

Aluria Security Center Avira AntiVir PersonalEdition Classic 7 - 8 Avira AntiVir Personal Free Antivirus ArcaVir Antivir/Internet Security 09.03.3201.9 x64 Ashampoo FireWall Ashampoo FireWall PRO 1.14 ALWIL Software Avast 4.0 Grisoft AVG 7.x Grisoft AVG 6.x Grisoft AVG 8.x Grisoft AVG 8.x x64 Avira Premium Security Suite 2006 Avira WebProtector 2.02 Avira AntiVir Personal - Free Antivirus 8.02 Avira AntiVir PersonalEdition Premium 7.06 AntiVir Windows Workstation 7.06.00.507 Kaspersky AntiViral Toolkit Pro BitDefender Free Edition BitDefender Internet Security BullGuard BullGuard AntiVirus BullGuard AntiVirus x64 CA eTrust AntiVirus 7 CA eTrust AntiVirus 7.1.0192 eTrust AntiVirus 7.1.194 CA eTrust AntiVirus 7.1 CA eTrust Suite Personal 2008 CA Licensing 1.57.1 CA Personal Firewall 9.1.0.26 CA Personal Firewall 2008 CA eTrust InoculateIT 6.0 ClamWin Antivirus ClamWin Antivirus x64 Comodo AntiSpam 2.6 Comodo AntiSpam 2.6 x64 COMODO AntiVirus 1.1 Comodo BOClean 4.25 COMODO Firewall Pro 1.0 - 3.x Comodo Internet Security 3.8.64739.471 Comodo Internet Security 3.8.64739.471 x64 Comodo Safe Surf 1.0.0.7 Comodo Safe Surf 1.0.0.7 x64 DrVirus 3.0 DrWeb for Windows 4.30 DrWeb Antivirus for Windows 4.30 Dr.Web AntiVirus 5 Dr.Web AntiVirus 5.0.0 EarthLink Protection Center PeoplePC Internet Security 1.5 PeoplePC Internet Security Pack / EarthLink Protection Center ESET NOD32 file on-access scanner ESET Smart Security 3.0 eTrust EZ Firewall 6.1.7.0 eTrust Personal Firewall 5.5.114 CA eTrust PestPatrol Anti-Spyware Corporate Edition CA eTrust PestPatrol -

Heuristic / Behavioural Protection Test 2013

Anti-Virus Comparative - Retrospective test - March 2013 www.av-comparatives.org Anti-Virus Comparative Retrospective/Proactive test Heuristic and behavioural protection against new/unknown malicious software Language: English March 2013 Last revision: 16th August 2013 Contents 1. Introduction 2. Description 3. False-alarm test 4. Test Results 5. Summary results 6. Awards reached in this test 7. Copyright and disclaimer 1. Introduction This test report is the second part of the March 2013 test. The report is delivered several months later due to the large amount of work required, deeper analysis, preparation and dynamic execution of the retrospective test-set. This type of test is performed only once a year and includes a behavioural protection element, where any malware samples are executed, and the results observed. Although it is a lot of work, we usually receive good feedback from various vendors, as this type of test allows them to find bugs and areas for improvement in the behavioural routines (as this test evaluates specifically the proactive heuristic and behavioural protection components). The products used the same updates and signatures that they had on the 28th February 2013. This test shows the proactive protection capabilities that the products had at that time. We used 1,109 new, unique and very prevalent malware samples that appeared for the first time shortly after the freezing date. The size of the test-set has also been reduced to a smaller set containing only one unique sample per variant, in order to enable vendors to peer-review our results in a timely manner. -

Rethinking Security

RETHINKING SECURITY Fighting Known, Unknown and Advanced Threats kaspersky.com/business REAL DANGERS AND THE REPORTED DEMISE OF ANTIVIRUS “Merchants, he said, are either not running antivirus on the servers managing point-of-sale devices or they’re not being updated regularly. The end result in Home Depot’s case could be the largest retail data breach in U.S. history, dwarfing even Target.” ~ Pat Belcher of Invincea Regardless of its size or industry, your business is in real danger of becoming a victim of cybercrime. This fact is indisputable. Open a newspaper, log onto the Internet, watch TV news or listen to President Obama’s recent State of the Union address and you’ll hear about another widespread breach. You are not paranoid when you think that your financial data, corporate intelligence and reputation are at risk. They are and it’s getting worse. Somewhat more controversial, though, are opinions about the best methods to defend against these perils. The same news sources that deliver frightening stories about costly data breaches question whether or not anti-malware or antivirus (AV) is dead, as reported in these articles from PC World, The Wall Street Journal and Fortune magazine. Reports about the death by irrelevancy of anti-malware technology miss the point. Smart cybersecurity today must include advanced anti-malware at its core. It takes multiple layers of cutting edge technology to form the most effective line of cyberdefense. This eBook explores the features that make AV a critical component of an effective cybersecurity strategy to fight all hazards targeting businesses today — including known, unknown and advanced cyberthreats. -

Release Notes

ESAP 1.6.1 Support has been added for the following products in ESAP1.6.1: Antivirus Products [Antiy Labs] Antiy Ghostbusters 6.x [Comodo Group] COMODO Internet Security 4.x [Kingsoft Corp.] Internet Security 2010.x [SOFTWIN] BitDefender Free Edition 2009 12.x [Sunbelt Software] VIPRE Enterprise 4.x [Sunbelt Software] VIPRE Enterprise Premium 4.x [Symantec Corp.] Norton AntiVirus 18.x [Symantec Corp.] Symantec Endpoint Protection Agent 5.x Antispyware Products [Symantec Corp.] Norton AntiVirus [AntiSpyware] 18.x Firewall Products [Check Point, Inc] ZoneAlarm Firewall 9.x [Comodo Group] COMODO Internet Security 4.x [Sunbelt Software] VIPRE Enterprise Premium 4.x [Symantec Corp.] Norton Internet Security 18.x [Symantec Corp.] Symantec Protection Agent 5.1 5.x Issues Fixed in ESAP1.6.1: OPSWAT : 1. Custom install of Symantec Endpoint Protection 11.x not getting detected (499991) Shavlik: No Shavlik fixes are included. Issues on Upgrading to ESAP1.6.1: OPSWAT: 1. Upgrade from ESAP1.5.2 or older fails if a firewall policy is configured where “Require Specific Products” is checked and McAfee Desktop Firewall (8.0) is selected. The upgrade doesn’t fail if McAfee Desktop Firewall (8.0.x) is selected. To successfully upgrade to ESAP 1.5.3 or greater, unselect McAfee Desktop Firewall (8.0) and select McAfee Desktop Firewall (8.0.x). This doesn’t result in any loss of functionality. Shavlik: 1. The following note applies only to the patch assessment functionality. When upgrading ESAP from a 1.5.1 or older release to the current release, the services on the SA or IC device needs to be restarted for the binaries on the endpoint to be automatically upgraded. -

Comparison with Avast! Internet Security 6.0

Comparison with avast! Internet Security 6.0 WHAT’S NEW IN AVAST! INTERNET PRODUCT NAME avast! Internet Norton Internet McAfee Internet Kaspersky Internet Eset Smart Security 6.0 Security 2011 Security 2011 Security 2011 Security 4 SECURITY VERsiON 6.0? Number of program languages 35 27 31 17 30 new avast! SafeZoneTM Size of the installation package 73 MB 133 MB 141 MB 98,9 MB 47,7 MB Opens a new (clean) virtual desktop so that other applications don’t see what’s happening – Program size after installation 310 MB 1 307 MB 271 MB 404 MB 230 MB perfect for banking or secure ordering/shopping 1-year price up to 3 PCs $ 69.99 $ 69.99 $ 79.99 $ 79.95 $89.99 – and leaves no traces once it’s closed. TM On-Demand Scanning Test, AV-Comparatives.org - Aug 2010 improved avast! Sandbox Provides an extra layer of security for you to Detection 99.3% 2 98.7% 99.4% 98.3% 98.6% run your PC and its applications in a virtual Scanning speed [MB/s) 17.2 13.3 12.3 9.8 9.6 environment. Number of False Positives few few many many few new avast! AutoSandboxTM (virtualization) Prompts users to run suspicious items in the 3 GENERAL FEATURES virtual Sandbox environment. TM Antivirus and anti-spyware engine Signature-/Heuristic-based a a a a a new avast! WebRep Provides website reliability and reputation Behavior-based a a a a a ratings according to community-provided Real-time anti-rootkit protection On-demand detection a a a a a feedback. -

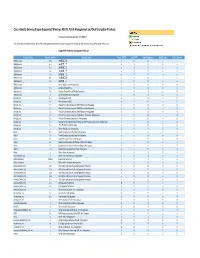

Cisco Identity Services Engine Supported Windows AV/AS/PM/DE

Cisco Identity Services Engine Supported Windows AS/AV, Patch Management and Disk Encryption Products Compliance Module Version 3.6.10363.2 This document provides Windows AS/AV, Patch Management and Disk Encryption support information on the Cisco AnyConnect Agent Version 4.2. Supported Windows Antispyware Products Vendor_Name Product_Version Product_Name Check_FSRTP Set_FSRTP VirDef_Signature VirDef_Time VirDef_Version 360Safe.com 10.x 360安全卫士 vX X v v 360Safe.com 4.x 360安全卫士 vX X v v 360Safe.com 5.x 360安全卫士 vX X v v 360Safe.com 6.x 360安全卫士 vX X v v 360Safe.com 7.x 360安全卫士 vX X v v 360Safe.com 8.x 360安全卫士 vX X v v 360Safe.com 9.x 360安全卫士 vX X v v 360Safe.com x Other 360Safe.com Antispyware Z X X Z X Agnitum Ltd. 7.x Outpost Firewall Pro vX X X O Agnitum Ltd. 6.x Outpost Firewall Pro 2008 [AntiSpyware] v X X v O Agnitum Ltd. x Other Agnitum Ltd. Antispyware Z X X Z X AhnLab, Inc. 2.x AhnLab SpyZero 2.0 vv O v O AhnLab, Inc. 3.x AhnLab SpyZero 2007 X X O v O AhnLab, Inc. 7.x AhnLab V3 Internet Security 2007 Platinum AntiSpyware v X O v O AhnLab, Inc. 7.x AhnLab V3 Internet Security 2008 Platinum AntiSpyware v X O v O AhnLab, Inc. 7.x AhnLab V3 Internet Security 2009 Platinum AntiSpyware v v O v O AhnLab, Inc. 7.x AhnLab V3 Internet Security 7.0 Platinum Enterprise AntiSpyware v X O v O AhnLab, Inc. 8.x AhnLab V3 Internet Security 8.0 AntiSpyware v v O v O AhnLab, Inc. -

Cisco Identity Services Engine Release 1.2 Supported Windows

Cisco Identity Services Engine Supported Windows AV/AS Products Compliance Module Version 3.5.6317.2 This document provides Windows 8/7/Vista/XP AV/AS support information on the Cisco NAC Agent version 4.9.0.x and later. For other support information and complete release updates, refer to the Release Notes for Cisco Identity Services Engine corresponding to your Cisco Identity Services Engine release version. Supported Windows AV/AS Product Summary Added New AV Definition Support: COMODO Antivirus 5.x COMODO Internet Security 3.5.x COMODO Internet Security 3.x COMODO Internet Security 4.x Kingsoft Internet Security 2013.x Added New AV Products Support: V3 Click 1.x avast! Internet Security 8.x avast! Premier 8.x avast! Pro Antivirus 8.x Gen-X Total Security 1.x K7UltimateSecurity 13.x Kaspersky Endpoint Security 10.x Kaspersky PURE 13.x Norman Security Suite 10.x Supported Windows AntiVirus Products Product Name Product Version Installation Virus Definition Live Update 360Safe.com 360 Antivirus 1.x 4.9.0.28 / 3.4.21.1 4.9.0.28 / 3.4.21.1 yes 360 Antivirus 3.x 4.9.0.29 / 3.5.5767.2 4.9.0.29 / 3.5.5767.2 - 360杀毒 1.x 4.9.0.28 / 3.4.21.1 4.9.0.28 / 3.4.21.1 - 360杀毒 2.x 4.9.0.29 / 3.4.25.1 4.9.0.29 / 3.4.25.1 - 360杀毒 3.x 4.9.0.29 / 3.5.2101.2 - Other 360Safe.com Antivirus x 4.9.0.29 / 3.5.2101.2 - AEC, spol. -

CONTENTS in THIS ISSUE Fighting Malware and Spam

OCTOBER 2010 Fighting malware and spam CONTENTS IN THIS ISSUE 2 COMMENT SPAM COLLECTING Changing times Claudiu Musat and George Petre explain why spam feeds matter in the anti-spam field and discuss the 3 NEWS importance of effective spam-gathering methods. Overall fall in fraud, but online banking page 21 losses rise National Cybersecurity Awareness Month NEW KIDS ON THE BLOCK Dip in Canadian Pharmacy spam New anti-malware companies and products seem to spring up with increasing frequency, many 3 VIRUS PREVALENCE TABLE reworking existing detection engines into new forms, as well as several that are working on their MALWARE ANALYSES own detection technology. John Hawes takes a 4 It’s just spam, it can’t hurt, right? quick look at a few of the up-and-coming products which he expects to see taking part in the VB100 13 Rooting about in TDSS comparatives in the near future. page 25 16 TECHNICAL FEATURE Anti-unpacker tricks – part thirteen VB100 CERTIFICATION ON WINDOWS SERVER 2003 21 FEATURE This month the VB test team put On the relevance of spam feeds 38 products through their paces on Windows Server 2003. John Oct 2010 25 REVIEW FEATURE Hawes has the details of the VB100 Things to come winners and those who failed to make the grade. 29 COMPARATIVE REVIEW page 29 Windows Server 2003 59 END NOTES & NEWS ISSN 1749-7027 COMMENT ‘Ten years ago the While mostly very tongue-in-cheek, a substantial amount of what he wrote was accurate. idea of malware He predicted that by 2010 the PC would no longer be the writing becoming most prevalent computing platform in the world, having a profit-making been overtaken in number by pervasive computing devices – in other words, PDAs and web phones. -

PCSL Greater China Region False Positive Test (2011 January)

PC Security Labs PCSL Greater China Region False Positive Test (2011 January) www.pcsecuritylabs.net Menu 1. Test introduction and highlights 2. Test set and award criteria 3. Participating vendors and products 4. Detailed test results 5. Monthly award logo 6. Disclaimer We Understand China 1. TEST INTRODUCTION AND HIGHLIGHTS A "false positive" is when antivirus software reports a clean file or a non-malicious activity as malicious. False positives can cause system crashes and other severe problems that may directly lead to serious economic losses. Please note that the false positive test does not tell you anything about the effectiveness of protection that any participating product may provide. For this new test: A. Our set of popular software has been updated. B. For the first time, the test bed also covers popular software from Taiwan, especially banking and securities software. C. We chose 30 types of commonly used applications, in order to check whether security software blocks the installation of normal applications besides the normal static scan of our clean file database. D. We will quote the data from this test and use it in the coming China region malware comparatives test and the total protection test. 2. TEST SET AND AWARD CRITERIA The sample set covers recent newly added software and popular software in the Greater China region (Mainland China, Hong Kong, Macau and Taiwan). The following clean files were reported by security software during this test: