Corpus Linguistics

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Usage of IT Terminology in Corpus Linguistics Mavlonova Mavluda Davurovna

International Journal of Innovative Technology and Exploring Engineering (IJITEE), ISSN: 2278-3075, Volume-8, Issue-7S, May 2019. Usage of IT Terminology in Corpus Linguistics. Mavlonova Mavluda Davurovna.

Abstract: Corpus linguistic will be the one of latest and fast method for teaching and learning of different languages. Proposed article can provide the corpus linguistic introduction and give the general overview about the linguistic team of corpus. Article described about the corpus, novelties and corpus are associated in the following field. Proposed paper can focus on the using the corpus linguistic in IT field. As from research it is obvious that corpora will be used as internet and primary data in the field of IT. The method of text corpus is the digestive approach which can drive the different set of rules to govern the natural language from the text in the particular language, it can explore about languages that how it can inter-related with other. Hence the corpus linguistic will be automatically drive from the text sources.

At this period corpora were used in semantics and related field. At this period corpus linguistics was not commonly used, no computers were used. Corpus linguistic history were opposed by Noam Chomsky. Now a day’s modern corpus linguistic was formed from English work context and now it is used in many other languages. Alongside was corpus linguistic history and this is considered as methodology [Abdumanapovna, 2018]. A landmark is the modern corpus linguistic which was publish by Henry Kucera. Corpus linguistic introduce new methods and techniques for more complete description of modern languages and these also provide opportunities to obtain new result with […]

Linguistic Annotation of the Digital Papyrological Corpus: Sematia

Marja Vierros. Linguistic Annotation of the Digital Papyrological Corpus: Sematia. 1 Introduction: Why to annotate papyri linguistically? Linguists who study historical languages usually find the methods of corpus linguistics exceptionally helpful. When the intuitions of native speakers are lacking, as is the case for historical languages, the corpora provide researchers with materials that replaces the intuitions on which the researchers of modern languages can rely. Using large corpora and computers to count and retrieve information also provides empirical back-up from actual language usage. In the case of ancient Greek, the corpus of literary texts (e.g. Thesaurus Linguae Graecae or the Greek and Roman Collection in the Perseus Digital Library) gives information on the Greek language as it was used in lyric poetry, epic, drama, and prose writing; all these literary genres had some artistic aims and therefore do not always describe language as it was used in normal communication. Ancient written texts rarely reflect the everyday language use, let alone speech. However, the corpus of documentary papyri gets close. The writers of the papyri vary between professionally trained scribes and some individuals who had only rudimentary writing skills. The text types also vary from official decrees and orders to small notes and receipts. What they have in common, though, is that they have been written for a specific, current need instead of trying to impress a specific audience. Documentary papyri represent everyday texts, utilitarian prose, and in that respect, they provide us a very valuable source of language actually used by common people in everyday circumstances.

Creating and Using Multilingual Corpora in Translation Studies Claudio Fantinuoli and Federico Zanettin

Chapter 1. Creating and using multilingual corpora in translation studies. Claudio Fantinuoli and Federico Zanettin. 1 Introduction. Corpus linguistics has become a major paradigm and research methodology in translation theory and practice, with practical applications ranging from professional human translation to machine (assisted) translation and terminology. Corpus-based theoretical and descriptive research has investigated written and interpreted language, and topics such as translation universals and norms, ideology and individual translator style (Laviosa 2002; Olohan 2004; Zanettin 2012), while corpus-based tools and methods have entered the curricula at translation training institutions (Zanettin, Bernardini & Stewart 2003; Beeby, Rodríguez Inés & Sánchez-Gijón 2009). At the same time, taking advantage of advancements in terms of computational power and increasing availability of electronic texts, enormous progress has been made in the last 20 years or so as regards the development of applications for professional translators and machine translation system users (Coehn 2009; Brunette 2013). The contributions to this volume, which are centred around seven European languages (Basque, Dutch, German, Greek, Italian, Spanish and English), add to the range of studies of corpus-based descriptive studies, and provide examples of some less explored applications of corpus analysis methods to translation research. The chapters, which are based on papers first presented at the 7th congress of the European Society of Translation Studies held in Germersheim in July/August 2013, encompass a variety of research aims and methodologies, and vary as concerns corpus design and compilation, and the techniques used to analyze the data.

Claudio Fantinuoli & Federico Zanettin. 2015. Creating and using multilingual corpora in translation studies. In Claudio Fantinuoli & Federico Zanettin (eds.), New directions in corpus-based translation studies, 1–11. Berlin: Language Science Press.

Corpus Linguistics As a Tool in Legal Interpretation Lawrence M

BYU Law Review, Volume 2017, Issue 6, Article 5, August 2017. Corpus Linguistics as a Tool in Legal Interpretation. Lawrence M. Solan and Tammy Gales. Part of the Applied Linguistics Commons, Constitutional Law Commons, and the Legal Profession Commons. Recommended Citation: Lawrence M. Solan and Tammy Gales, Corpus Linguistics as a Tool in Legal Interpretation, 2017 BYU L. Rev. 1311 (2018). Available at: https://digitalcommons.law.byu.edu/lawreview/vol2017/iss6/5

In this paper, we set out to explore conditions in which the use of large linguistic corpora can be optimally employed by judges and others tasked with construing authoritative legal documents. Linguistic corpora, sometimes containing billions of words, are a source of information about the distribution of language usage. Thus, corpora and the tools for using them are most likely to assist in addressing legal issues when the law considers the distribution of language usage to be legally relevant. As Thomas R. Lee and Stephen C. Mouritsen have so ably demonstrated in earlier work, corpus analysis is especially helpful when the legal standard for construction is the ordinary meaning of the document’s terms.

Against Corpus Linguistics

Against Corpus Linguistics. JOHN S. EHRETT*

Corpus linguistics (the use of large, computerized word databases as tools for discovering linguistic meaning) has increasingly become a topic of interest among scholars of constitutional and statutory interpretation. Some judges and academics have recently argued, across the pages of multiple law journals, that members of the judiciary ought to employ these new technologies when seeking to ascertain the original public meaning of a given text. Corpus linguistics, in the minds of its proponents, is a powerful instrument for rendering constitutional originalism and statutory textualism “scientific” and warding off accusations of interpretive subjectivity. This Article takes the opposite view: on balance, judges should refrain from the use of corpora. Although corpus linguistics analysis may appear highly promising, it carries with it several under-examined dangers, including the collapse of essential distinctions between resource quality, the entrenchment of covert linguistic biases, and a loss of reviewability by higher courts.

TABLE OF CONTENTS
INTRODUCTION
I. THE RISE OF CORPUS LINGUISTICS
   A. WHAT IS CORPUS LINGUISTICS?
      1. Frequency
      2. Collocation
      3. Keywords in Context (KWIC)
   B. CORPUS LINGUISTICS IN THE COURTS
      1. United States v. Costello
      2. State v. Canton
      3. State v. Rasabout
II. AGAINST “JUDICIALIZING” CORPUS LINGUISTICS
   A. SUBVERSION OF SOURCE AUTHORITY HIERARCHIES
   B. IMPROPER PARAMETRIC OUTSOURCING
   C. METHODOLOGICAL INACCESSIBILITY
III. THE FUTURE OF JUDGING AND CORPUS LINGUISTICS

* Yale Law School, J.D. 2017. © 2019, John S. Ehrett. The Georgetown Law Journal Online, Vol. 108.

INTRODUCTION
“Corpus linguistics” may sound like a forensic investigative procedure on CSI or NCIS, but the reality is far less dramatic, though no less important.

TEI and the Documentation of Mixtepec-Mixtec Jack Bowers

Language Documentation and Standards in Digital Humanities: TEI and the documentation of Mixtepec-Mixtec. Jack Bowers.

To cite this version: Jack Bowers. Language Documentation and Standards in Digital Humanities: TEI and the documentation of Mixtepec-Mixtec. Computation and Language [cs.CL]. École Pratique des Hautes Études, 2020. English. tel-03131936. HAL Id: tel-03131936, https://tel.archives-ouvertes.fr/tel-03131936. Submitted on 4 Feb 2021.

Prepared at the École Pratique des Hautes Études. Defended by Jack BOWERS on 8 October 2020. Doctoral school no. 472, École doctorale de l’École Pratique des Hautes Études. Specialty: Linguistics.

Jury:
- Guillaume JACQUES, Directeur de Recherche, CNRS (President)
- Alexis MICHAUD, Chargé de Recherche, CNRS (Reviewer)
- Tomaž ERJAVEC, Senior Researcher, Jožef Stefan Institute (Reviewer)
- Enrique PALANCAR, Directeur de Recherche, CNRS (Examiner)
- Karlheinz MOERTH, Senior Researcher, Austrian Center for Digital Humanities and Cultural Heritage (Examiner)
- Emmanuel SCHANG, Maître de Conférence, Université d’Orléans (Examiner)
- Benoit SAGOT, Chargé de Recherche, Inria (Examiner)
- Laurent ROMARY, Directeur de recherche, Inria (Thesis supervisor)

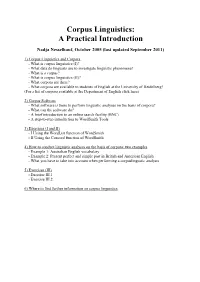

Corpus Linguistics: a Practical Introduction

Corpus Linguistics: A Practical Introduction. Nadja Nesselhauf, October 2005 (last updated September 2011)

1) Corpus Linguistics and Corpora
- What is corpus linguistics (I)?
- What data do linguists use to investigate linguistic phenomena?
- What is a corpus?
- What is corpus linguistics (II)?
- What corpora are there?
- What corpora are available to students of English at the University of Heidelberg? (For a list of corpora available at the Department of English click here)

2) Corpus Software
- What software is there to perform linguistic analyses on the basis of corpora?
- What can the software do?
- A brief introduction to an online search facility (BNC)
- A step-by-step introduction to WordSmith Tools

3) Exercises (I and II)
- I Using the WordList function of WordSmith
- II Using the Concord function of WordSmith

4) How to conduct linguistic analyses on the basis of corpora: two examples
- Example 1: Australian English vocabulary
- Example 2: Present perfect and simple past in British and American English
- What you have to take into account when performing a corpus-linguistic analysis

5) Exercises (III)
- Exercise III.1
- Exercise III.2

6) Where to find further information on corpus linguistics

1) Corpus Linguistics and Corpora

What is corpus linguistics (I)? Corpus linguistics is a method of carrying out linguistic analyses. As it can be used for the investigation of many kinds of linguistic questions and as it has been shown to have the potential to yield highly interesting, fundamental, and often surprising new insights about language, it has become one of the most widespread methods of linguistic investigation in recent years.

A Scaleable Automated Quality Assurance Technique for Semantic Representations and Proposition Banks K

A scaleable automated quality assurance technique for semantic representations and proposition banks. K. Bretonnel Cohen (Computational Bioscience Program, U. of Colorado School of Medicine; Department of Linguistics, University of Colorado at Boulder), Lawrence E. Hunter (Computational Bioscience Program, U. of Colorado School of Medicine), Martha Palmer (Department of Linguistics, University of Colorado at Boulder).

Abstract: This paper presents an evaluation of an automated quality assurance technique for a type of semantic representation known as a predicate argument structure. These representations are crucial to the development of an important class of corpus known as a proposition bank. Previous work (Cohen and Hunter, 2006) proposed and tested an analytical technique based on a simple discovery procedure inspired by classic structural linguistic methodology. Cohen and Hunter applied the technique manually to a small set of representations.

[…] (Leech, 1993; Ide and Brew, 2000; Wynne, 2005; Cohen et al., 2005a; Cohen et al., 2005b) that address architectural, sampling, and procedural issues, as well as publications such as (Hripcsak and Rothschild, 2005; Artstein and Poesio, 2008) that address issues in inter-annotator agreement. However, there is not yet a significant body of work on the subject of quality assurance for corpora, or for that matter, for many other types of linguistic resources. (Meyers et al., 2004) describe three error-checking measures used in the construction of NomBank, and the use of inter-annotator agreement as a quality control measure for corpus construction is discussed at some […]

A Corpus-Based Investigation of Lexical Cohesion in En & It

A CORPUS-BASED INVESTIGATION OF LEXICAL COHESION IN EN & IT NON-TRANSLATED TEXTS AND IN IT TRANSLATED TEXTS

A thesis submitted to Kent State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy by Leonardo Giannossa, August 2012. © Copyright by Leonardo Giannossa 2012. All Rights Reserved.

Dissertation written by Leonardo Giannossa. M.A., University of Bari, Italy, 2007. B.A., University of Bari, Italy, 2005.

Approved by:
- Brian Baer, Chair, Doctoral Dissertation Committee
- Richard K. Washbourne, Erik Angelone, Patricia Dunmire, Sarah Rilling, Members, Doctoral Dissertation Committee

Accepted by:
- Keiran J. Dunne, Interim Chair, Modern and Classical Language Studies
- Timothy Moerland, Dean, College of Arts and Sciences

Table of Contents
- LIST OF FIGURES
- LIST OF TABLES
- DEDICATION
- ACKNOWLEDGEMENTS
- ABSTRACT

Verb Similarity: Comparing Corpus and Psycholinguistic Data

Corpus Linguistics and Ling. Theory 2017; aop. Lara Gil-Vallejo*, Marta Coll-Florit, Irene Castellón and Jordi Turmo. Verb similarity: Comparing corpus and psycholinguistic data. DOI 10.1515/cllt-2016-0045

Abstract: Similarity, which plays a key role in fields like cognitive science, psycholinguistics and natural language processing, is a broad and multifaceted concept. In this work we analyse how two approaches that belong to different perspectives, the corpus view and the psycholinguistic view, articulate similarity between verb senses in Spanish. Specifically, we compare the similarity between verb senses based on their argument structure, which is captured through semantic roles, with their similarity defined by word associations. We address the question of whether verb argument structure, which reflects the expression of the events, and word associations, which are related to the speakers’ organization of the mental lexicon, shape similarity between verbs in a congruent manner, a topic which has not been explored previously. While we find significant correlations between verb sense similarities obtained from these two approaches, our findings also highlight some discrepancies between them and the importance of the degree of abstraction of the corpus annotation and psycholinguistic representations.

Keywords: similarity, semantic roles, word associations

1 Introduction. Similarity is a crucial notion that underpins a number of cognitive models and psycholinguistic accounts of the lexicon. Furthermore, it is also important for natural […]

Helping Language Learners Get Started with Concordancing

TESOL International Journal. Helping Language Learners Get Started with Concordancing. Stephen Jeaco*, Xi’an Jiaotong-Liverpool University, China.

Abstract: While studies exploring the overall effectiveness of Data Driven Learning activities have been positive, learner participants often seem to report difficulties in deciding what to look up, and how to formulate appropriate queries for a search (Gabel, 2001; Sun, 2003; Yeh, Liou, & Li, 2007). The Prime Machine (Jeaco, 2015) was developed as a concordancing tool to be used specifically for looking up, comparing and exploring vocabulary and language patterns for English language teaching and self-tutoring. The design of this concordancer took a pedagogical perspective on the corpus techniques and methods to be used, focusing on English for Academic Purposes and including important software design principles from Computer Aided Language Learning. The software includes a range of search support and display features which try to make the comparison process for exploring specific words and collocations easier. This paper reports on student use of this concordancer, drawing on log data records from mouse clicks and software features as well as questionnaire responses from the participants. Twenty-three undergraduate students from a Sino-British university in China participated in the evaluation. Results from logs of search support features and general use of the software are compared with questionnaire responses from before and after the session. It is believed that The Prime Machine can be a very useful corpus tool which, while simple to operate, provides a wealth of information for language learning.

Key words: Concordancer, Data Driven Learning, Lexical Priming, Corpus linguistics.

Rule-Based Machine Translation for the Italian–Sardinian Language Pair

The Prague Bulletin of Mathematical Linguistics, Number 108, June 2017, 221–232. Rule-Based Machine Translation for the Italian–Sardinian Language Pair. Francis M. Tyers (a, b), Hèctor Alòs i Font (a), Gianfranco Fronteddu (e), Adrià Martín-Mor (d). (a) UiT Norgga árktalaš universitehta, Tromsø, Norway; (b) Arvutiteaduse instituut, Tartu Ülikool, Tartu, Estonia; (c) Universitat de Barcelona, Barcelona; (d) Universitat Autònoma de Barcelona, Barcelona; (e) Università degli Studi di Cagliari, Cagliari.

Abstract: This paper describes the process of creation of the first machine translation system from Italian to Sardinian, a Romance language spoken on the island of Sardinia in the Mediterranean. The project was carried out by a team of translators and computational linguists. The article focuses on the technology used (Rule-Based Machine Translation) and on some of the rules created, as well as on the orthographic model used for Sardinian.

1. Introduction. This paper presents a shallow-transfer rule-based machine translation (MT) system from Italian to Sardinian, two languages of the Romance group. Italian is spoken in Italy, although it is an official language in countries like the Republic of Switzerland, San Marino and Vatican City, and has approximately 58 million speakers, while Sardinian is spoken principally in Sardinia and has approximately one million speakers (Lewis, 2009). The objective of the project was to make a system for creating almost-translated text that needs post-editing before being publishable. For translating between closely-related languages where one language is a majority language and the other a minority or marginalised language, this is relevant as MT of post-editing quality into a lesser-resourced language can help with creating more text in that language.