Constraint Programming Techniques for Generating Efficient Hardware Architectures for Field Programmable Gate Arrays

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


At—At, Batch—Execute Commands at a Later Time

at, batch - execute commands at a later time

at [-csm] [-f script] [-qqueue] time [date] [+ increment]
at -l [job...]
at -r job...
batch

at and batch read commands from standard input to be executed at a later time. at allows you to specify when the commands should be executed, while jobs queued with batch will execute when system load level permits. Executes commands read from stdin or a file at some later time. Unless redirected, the output is mailed to the user.

Example A.1
    1 at 6:30am Dec 12 < program
    2 at noon tomorrow < program
    3 at 1945 pm August 9 < program
    4 at now + 3 hours < program
    5 at 8:30am Jan 4 < program
    6 at -r 83883555320.a

EXPLANATION
1. At 6:30 in the morning on December 12th, start the job.
2. At noon tomorrow start the job.
3. At 7:45 in the evening on August 9th, start the job.
4. In three hours start the job.
5. At 8:30 in the morning of January 4th, start the job.
6. Removes previously scheduled job 83883555320.a.

awk - pattern scanning and processing language

awk [-fprogram-file] [-Fc] [prog] [parameters] [filename...]

awk scans each input filename for lines that match any of a set of patterns specified in prog.

Example A.2
    1 awk '{print $1, $2}' file
    2 awk '/John/{print $3, $4}' file
    3 awk -F: '{print $3}' /etc/passwd
    4 date | awk '{print $6}'

EXPLANATION
1. Prints the first two fields of file where fields are separated by whitespace.
2. Prints fields 3 and 4 if the pattern John is found.
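The awk invocations in Example A.2 can be tried on a small throwaway file. The following is a minimal sketch, not part of the excerpt: the sample file contents and the variable names are assumptions made purely for illustration.

```shell
#!/bin/sh
# Hypothetical sample data; the names and values are illustrative only.
sample=$(mktemp)
printf '%s\n' 'John Smith 555-0100 Boston' 'Mary Jones 555-0199 Denver' > "$sample"

# Print the first two whitespace-separated fields of every line.
first_two=$(awk '{print $1, $2}' "$sample")

# Print fields 3 and 4 only on lines that match the pattern John.
john_fields=$(awk '/John/{print $3, $4}' "$sample")

# Use ':' as the field separator and print the third field.
third_colon=$(printf 'root:x:0:0\n' | awk -F: '{print $3}')

printf '%s\n' "$first_two" "$john_fields" "$third_colon"
rm -f "$sample"
```

Capturing each result in a variable keeps the three cases separate and easy to inspect; in interactive use the awk commands would normally be run directly against the file.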

The Price of Safety: Evaluating IOMMU Performance Preliminary Results

The Price of Safety: Evaluating IOMMU Performance Preliminary Results
Muli Ben-Yehuda [email protected] IBM Haifa Research Lab
The Price of Safety: Evaluating IOMMU Performance, 2007 Spring Xen Summit – p.1/14

Table of Contents
• IOMMUs
• Preliminary Performance Results
• Optimizations and Trade-offs
• Conclusions

IOMMUs
• IOMMU - IO Memory Management Unit
• Think MMU for IO devices - separate address spaces!
• IOMMUs enable virtual machines direct hardware access for PV and FV guests
• ... while protecting the rest of the system from mis-behaving or malicious virtual machines
• But at what cost?

Setting the Stage
• Full results to appear at OLS ’07
• Calgary (PCI-X) and Calgary (PCI-e) IOMMUs
• Linux and Xen implementations
• ... with some corroborating evidence from the DART IOMMU on PPC JS21 blades and the IBM Research Hypervisor
• Joint work with Jimi Xenidis, Michal Ostrowski, Karl Rister, Alexis Bruemmer and Leendert Van Doorn
• Utilizing IOMMUs for Virtualization in Linux and Xen, by M. Ben-Yehuda, J. Mason, O. Krieger, J. Xenidis, L. Van Doorn, A. Mallick, J. Nakajima, and E. Wahlig, OLS ’06

On Comparisons
• What are we comparing against?
• not emulation
• not virtual IO (frontend / backend drivers)
• Direct hardware access without an IOMMU compared with direct hardware access with an IOMMU on the IO path

Linux Performance Tools

Linux Performance Tools
Brendan Gregg, Senior Performance Architect, Performance Engineering Team
[email protected] @brendangregg

This Tutorial
• A tour of many Linux performance tools
  – To show you what can be done
  – With guidance for how to do it
• This includes objectives, discussion, live demos
  – See the video of this tutorial

Observability, Benchmarking, Tuning, Static Tuning

• Massive AWS EC2 Linux cloud
  – 10s of thousands of cloud instances
• FreeBSD for content delivery
  – ~33% of US Internet traffic at night
• Over 50M subscribers
  – Recently launched in ANZ
• Use Linux server tools as needed
  – After cloud monitoring (Atlas, etc.) and instance monitoring (Vector) tools

Agenda
• Methodologies
• Tools
• Tool Types:
  – Observability
  – Benchmarking
  – Tuning
  – Static
• Profiling
• Tracing

Methodologies
• Objectives:
  – Recognize the Streetlight Anti-Method
  – Perform the Workload Characterization Method
  – Perform the USE Method
  – Learn how to start with the questions, before using tools
  – Be aware of other methodologies

My system is slow… DEMO & DISCUSSION

Methodologies
• There are dozens of performance tools for Linux
  – Packages: sysstat, procps, coreutils, …
  – Commercial products
• Methodologies can provide guidance for choosing and using tools effectively
• A starting point, a process, and an ending point

Anti-Methodologies
• The lack of a deliberate methodology…

Street Light Anti-Method
1. Pick observability tools that are:
  – Familiar
  – Found on the Internet
  – Found at random
2. Run tools
3. Look for obvious issues

Drunk Man Anti-Method
• Tune things at random until the problem goes away

Blame Someone Else Anti-Method
1. Find a system or environment component you are not responsible for
2. Hypothesize that the issue is with that component
3. Redirect the issue to the responsible team
4.

10Gb TR-D 10GBAS B/S SFP+ Dxxxl-N00 E-LR/LW Bidi Opti 0

TR-DXxxL-N00 Rev1.6
10Gb/s SFP+ BiDi Optical Transceiver
TR-DXxxL-N00, 10Km SMF Application
10GBASE-LR/LW Bi-directional, LC connector

Features
• 10Gb/s serial optical interface compliant to 802.3ae 10GBASE-LR, single LC connector for bi-directional application, over 10km SMF
• Electrical interface compliant to SFF-8431 specifications
• 1270/1330nm DFB transmitter, PIN photo-detector, integrated WDM
• 2-wire interface for management specifications compliant with SFF 8472
• Part number (0°C to 70°C):
  – TR-DX12L-N00, 1270TX/1330RX
  – TR-DX33L-N00, 1330TX/1270RX
• Line side, client side loopback function
• Advanced firmware allows custom system encryption information to be stored in transceiver
• ROHS compliant

Applications
• High speed storage area networks
• Computer cluster cross-connect
• Custom high-speed data pipes

Figure11: Application in System

InnoLight Technology Corp. Page 1 of 13

1. GENERAL DESCRIPTION

This 10 Gigabit SFP+ BiDi transceiver is designed to transmit and receive optical data over single mode optical fiber for link length 20km.

The SFP+ BiDi module electrical interface is compliant to SFI electrical specifications. The transmitter input and receiver output impedance is 100 Ohms differential. Data lines are internally AC coupled. The module provides differential termination and reduces differential to common mode conversion for quality signal termination and low EMI. SFI typically operates over 200mm of improved FR4 material or up to about 150mm of standard FR4 with one connector.

The transmitter converts 10Gbit/s serial PECL or CML electrical data into serial optical data compliant with the 10GBASE-LR standard. An open collector

System Analysis and Tuning Guide: SUSE Linux Enterprise Server 15 SP1

System Analysis and Tuning Guide
SUSE Linux Enterprise Server 15 SP1

An administrator's guide for problem detection, resolution and optimization. Find how to inspect and optimize your system by means of monitoring tools and how to efficiently manage resources. Also contains an overview of common problems and solutions and of additional help and documentation resources.

Publication Date: September 24, 2021

SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA
https://documentation.suse.com

Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Contents
About This Guide
1 Available Documentation

Bash-Prompt-HOWTO.Pdf

Bash Prompt HOWTO
$Revision: 0.93 $, $Date: 2003/11/06 02:12:02 $
Giles Orr
Copyright © 1998, 1999, 2000, 2001, 2003 Giles Orr

Creating and controlling terminal and xterm prompts is discussed, including incorporating standard escape sequences to give username, current working directory, time, etc. Further suggestions are made on how to modify xterm title bars, use external functions to provide prompt information, and how to use ANSI colours.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License".

Table of Contents
Chapter 1. Introduction and Administrivia
  1.1. Introduction
  1.2. Revision History
  1.3. Requirements
  1.4. How To Use This Document

BASH Programming - Introduction HOW-TO

BASH Programming - Introduction HOW-TO
by Mike G mikkey at dynamo.com.ar

Table of Contents
1. Introduction
2. Very simple Scripts
3. All about redirection
4. Pipes
5. Variables
6. Conditionals
7. Loops for, while and until
8. Functions

Writing Shell Scripts Day(S) Ref : SHL OBJECTIVES Participants the UNIX Shell Is Both an Interactive Interface to the UNIX System and a Powerful Scripting Language

Writing Shell Scripts
Hands-on course, 3 day(s)
Ref: SHL

OBJECTIVES
The UNIX Shell is both an interactive interface to the UNIX system and a powerful scripting language. This training session will present detailed functionalities to help you understand shell programming. You will acquire Shell programming skills in concrete domains (survey, task automatization, software installation, file processing...).

Participants
UNIX/Linux administrator performing system administration duties including installation, maintenance and integration, or developer of text processing software.

Pre-requisites
Basic UNIX/Linux skills. Knowledge of any programming language is an added advantage.

Course outline
1) Overview of the UNIX Shell
2) Interfacing UNIX with the interactive Shell
3) An introduction to Shell scripting
4) Advanced Shell programming and the Korn Shell
5) Other UNIX powerful scripting tools

1) Overview of the UNIX Shell
- History of the UNIX Shell. UNIX fork/exec system calls.
- Arguments and environment of a UNIX program.
- How the Shell reads the command line.
- Differences between Bourne, Korn and Bourne Again Shells.

2) Interfacing UNIX with the interactive Shell
- Starting an interactive Shell. Initialization of the Shell.
- Line editing, vi and emacs Ksh modes. Line editing with Bash.
- Name completion. Shell options and the set built-in.
- Customizing the environment. The command search path.
- Shell commands and scripts.
- Sourcing Shell commands. Execution of a Shell script. UNIX execution of a Shell script, the she-bang.
- Creation and use of Shell variables. Passing arguments to a Shell script.
- Differences between exec, background and sub-shells. Using pipelines and lists.

3) An introduction to Shell scripting
- Basic Shell programming.

Linux Shell Scripting Tutorial V2.0

Linux Shell Scripting Tutorial v2.0
Written by Vivek Gite <[email protected]> and Edited By Various Contributors

PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information.
PDF generated at: Mon, 31 May 2010 07:27:26 CET

Contents

Articles
Linux Shell Scripting Tutorial - A Beginner's handbook: About

Chapter 1: Quick Introduction to Linux
  What Is Linux
  Who created Linux
  Where can I download Linux
  How do I Install Linux
  Linux usage in everyday life
  What is Linux Kernel
  What is Linux Shell
  Unix philosophy
  But how do you use the shell
  What is a Shell Script or shell scripting
  Why shell scripting
  Chapter 1 Challenges

Chapter 2: Getting Started With Shell Programming
  The bash shell
  Shell commands
  The role of shells in the Linux environment
  Other standard shells
  Hello, World! Tutorial
  Shebang
  Shell Comments
  Setting up permissions on a script
  Execute a script
  Debug a script
  Chapter 2 Challenges

Chapter 3: The Shell Variables and Environment
  Variables in shell
  Assign values to shell variables
  Default shell variables value
  Rules for Naming variable name
  Display the value of shell variables
  Quoting
  The export statement
  Unset shell and environment variables
  Getting User Input Via Keyboard
  Perform arithmetic operations
  Create an integer variable
  Create the constants variable
  Bash variable existence check
  Customize the bash shell environments
  Recalling command history
  Path name expansion
  Create and use aliases
  The tilde expansion
  Startup scripts
  Using aliases
  Changing bash prompt
  Setting shell options
  Setting system wide shell options
  Chapter 3 Challenges

Chapter 4: Conditionals Execution (Decision Making)
  Bash structured language constructs
  Test command
  If structures to execute code based on a condition
  If.

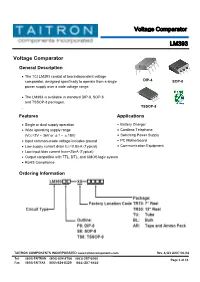

LM393 Voltage Comparator

Voltage Comparator LM393

General Description
• The TCI LM393 consists of two independent voltage comparators, designed specifically to operate from a single power supply over a wide voltage range.
• The LM393 is available in standard DIP-8, SOP-8 and TSSOP-8 packages.

Features
• Single or dual supply operation
• Wide operating supply range (VCC=2V ~ 36V or ±1 ~ ±18V)
• Input common-mode voltage includes ground
• Low supply current drain ICC=0.8mA (Typical)
• Low input bias current IBIAS=25nA (Typical)
• Output compatible with TTL, DTL, and CMOS logic system
• RoHS Compliance

Applications
• Battery Charger
• Cordless Telephone
• Switching Power Supply
• PC Motherboard
• Communication Equipment

Ordering Information

TAITRON COMPONENTS INCORPORATED www.taitroncomponents.com
Rev. A/DX 2007-06-04
Tel: (800)-TAITRON (800)-824-8766 (661)-257-6060
Fax: (800)-TAITFAX (800)-824-8329 (661)-257-6415
Page 1 of 11

Pin Configuration

Block Diagram

Absolute Maximum Ratings

| Symbol | Description | LM393 | Unit |
|---|---|---|---|
| VCC | Supply Voltage | ±18 or 36 | V |
| VI(DIFF) | Differential Input Voltage | 36 | V |
| VIN | Input Voltage | -0.3 ~ 36 | V |
| PD | Power Dissipation, TSSOP-8 | 570 | mW |
| PD | Power Dissipation, SOP-8 | 660 | mW |
| PD | Power Dissipation, DIP-8 | 780 | mW |
| TJ | Operating Junction Temperature | 150 | °C |
| TOPR | Operating Temperature Range | -40 ~ 85 | °C |
| TSTG | Storage Temperature Range | -65 ~ 150 | °C |

Note: Absolute maximum ratings are those values beyond which the device could be permanently damaged. Absolute maximum ratings are stress ratings only and functional device operation is not implied.

Electrical Characteristics (VCC=5.0V, TA=25ºC, all voltage referenced to GND unless otherwise specified)

Symbol | Description | LM393 (Min. ...) | Unit | Conditions

Introduction to Using Unix/Linux Systems Date: 07/17/08 Course

School of Computing and Information Sciences

Course Title: Introduction to Using Unix/Linux Systems
Date: 07/17/08
Course Number: COP 3348
Number of Credits: 3
Subject Area: System
Subject Area Coordinator: Nagarajan Prabakar
email: [email protected]

Catalog Description: Techniques of Unix/Linux systems. Basic use, file system structure, process system structure, unix tools (regular expressions, grep, find), simple and complex shell scripts, Xwindows.

Textbook: “Guide to Unix Using Linux” (4th Edition) by Palmer, Course Technology 2008 (ISBN: 1-4188-3723-7)
References:
Prerequisites Courses: COP 2250 or CGS 2423 or equivalent.
Corequisites Courses: None
Type: Elective

Prerequisites Topics:
• Primitive data types
• Basic program control structures
• Familiarity with methods or functions

Course Outcomes:
1. Be familiar with Unix and Linux operating Systems
2. Master the techniques to use a Linux system
3. Be familiar with the Unix file system and its basic operations
4. Be familiar with the Unix command interpreters
5. Master the techniques of shell programming

COP 3344 Introduction to Using Unix/Linux Systems

Outline (Topic, Number of Lecture Hours, Outcome)
• Introduction (lecture hours: 3; outcome: 1)
  o Overview of operating systems
  o Multi-user, multi-tasking
  o User-mode, kernel mode
  o Shells, pipe, input/output redirection
• File system (lecture hours: 6; outcome: 3)
  o Physical storage partitions
  o File system hierarchy, paths, mounting
  o Files, directories, file/dir commands
  o Editor (vi), regular expressions
• Advanced file processing (lecture hours: 6; outcomes: 2, 3)

Bash Script Colors Cheat Sheet by Chrissoper1 Via Cheatography.Com/24894/Cs/8483

Bash Script Colors Cheat Sheet by chrissoper1 via cheatography.com/24894/cs/8483/

TPUT Control Codes

| Code | Effect |
|---|---|
| setf | Foreground color (text) |
| setaf | Foreground color (ANSI escaped) |
| setb | Background color |
| setab | Background color (ANSI escaped) |
| bold | Brighten all colors |
| dim | Darken all colors |
| rev | Reverse color palette |
| smul | Begin underlining |
| rmul | Stop underlining |
| smso | Enter Standout Mode |
| rmso | Exit Standout Mode |
| smcup | Clear |
| clear | Clear |
| reset | Reset Screen Size |
| rmcup | Restore |
| sgr0 | Revert all attribute changes |

Usage: tput <control> [color]
Red Hello:
    tput setaf 1; echo "Hello"; tput sgr0
Light Green ps:
    tput setaf 2; tput bold; ps; tput sgr0

TPUT Color Codes

| Color | Code |
|---|---|
| Black | 0 |
| Red | 1 |
| Green | 2 |
| Yellow | 3 |
| Blue | 4 |
| Magenta | 5 |
| Cyan | 6 |
| Light Grey | 7 |

LS_COLORS File Codes

| File type | Code |
|---|---|
| Block Device (buffered) | bd |
| File with Capability | ca |
| Character Device (unbuffered) | cd |
| Directory | di |
| Door | do |
| End Code | ec |
| Executable File | ex |
| File | fi |
| Left Code | lc |
| Symbolic Link | ln |
| Multi Hard Link (File with more than one link) | mh |
| Missing File (Pointed to by a symlink) | mi |
| Orphaned Symbolic Link (Points to a missing file) | or |
| Other-Writable Directory | ow |
| Named Pipe | pi |
| Right Code | rc |
| Socket File | so |
| Directory Sticky (+t) | st |
| File setuid (u+s) | su |
| Sticky AND Other-Writable | tw |
| File Extension Specific (.example) | *.example |

Create a dircolors file with dircolors -p > ~/.dircolors
see dir_colors(1), dir_colors(5) for more info

ANSI Control Codes

| Effect | Code |
|---|---|
| Reset | 0 |
| Bold | 1 |
| Dim | 2 |
| Underline on | 4 |
| Underline off | 24 |
| Blink start | 5 |
| Blink end | 25 |
| Inverse palette start | 7 |
| Inverse palette end | 27 |
| Conceal | 8 |
| Normal | 22 |

see console_codes(4) for more information

ANSI Color Codes

| Color | Text | Background |
|---|---|---|
| Black | 30 | 40 |
| Red | 31 | 41 |
| Green | 32 | 42 |
| Yellow | 33 | 43 |
| Blue | 34 | 44 |
| Magenta | 35 | 45 |
| Cyan | 36 | 46 |
| Light Grey | 37 | 47 |

By chrissoper1 Published 22nd June, 2016.
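The ANSI codes listed in the cheat sheet can also be emitted directly with printf instead of going through tput. The following is a minimal sketch under that assumption: `colorize` is a hypothetical helper name introduced only for illustration and is not part of the cheat sheet.

```shell
#!/bin/sh
# colorize is a hypothetical helper, not something the cheat sheet defines.
# Usage: colorize <ansi-code> <text>
colorize() {
    # ESC[<code>m switches the attribute on; ESC[0m is the Reset code.
    printf '\033[%sm%s\033[0m' "$1" "$2"
}

# 31 is the ANSI text (foreground) code for red.
red_hello=$(colorize 31 "Hello")
printf '%s\n' "$red_hello"
```

Hard-coding escape sequences assumes an ANSI-compatible terminal. The tput form shown in the cheat sheet looks the sequences up in the terminfo database instead, which is usually the more portable choice.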