Spectral Color Management in Virtual Reality Scenes

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Understanding Color and Gamut Poster

Understanding Colors and Gamut www.tektronix.com/video Contact Tektronix: ASEAN / Australasia (65) 6356 3900 Austria* 00800 2255 4835 Understanding High Balkans, Israel, South Africa and other ISE Countries +41 52 675 3777 Definition Video Poster Belgium* 00800 2255 4835 Brazil +55 (11) 3759 7627 This poster provides graphical Canada 1 (800) 833-9200 reference to understanding Central East Europe and the Baltics +41 52 675 3777 high definition video. Central Europe & Greece +41 52 675 3777 Denmark +45 80 88 1401 Finland +41 52 675 3777 France* 00800 2255 4835 To order your free copy of this poster, please visit: Germany* 00800 2255 4835 www.tek.com/poster/understanding-hd-and-3g-sdi-video-poster Hong Kong 400-820-5835 India 000-800-650-1835 Italy* 00800 2255 4835 Japan 81 (3) 6714-3010 Luxembourg +41 52 675 3777 MPEG-2 Transport Stream Advanced Television Systems Committee (ATSC) Mexico, Central/South America & Caribbean 52 (55) 56 04 50 90 ISO/IEC 13818-1 International Standard Program and System Information Protocol (PSIP) for Terrestrial Broadcast and cable (Doc. A//65B and A/69) System Time Table (STT) Rating Region Table (RRT) Direct Channel Change Table (DCCT) ISO/IEC 13818-2 Video Levels and Profiles MPEG Poster ISO/IEC 13818-1 Transport Packet PES PACKET SYNTAX DIAGRAM 24 bits 8 bits 16 bits Syntax Bits Format Syntax Bits Format Syntax Bits Format 4:2:0 4:2:2 4:2:0, 4:2:2 1920x1152 1920x1088 1920x1152 Packet PES Optional system_time_table_section(){ rating_region_table_section(){ directed_channel_change_table_section(){ High Syntax -

ROCK-COLOR CHART with Genuine Munsell® Color Chips

geological ROCK-COLOR CHART with genuine Munsell® color chips Produced by geological ROCK-COLOR CHART with genuine Munsell® color chips 2009 Year Revised | 2009 Production Date put into use This publication is a revision of the previously published Geological Society of America (GSA) Rock-Color Chart prepared by the Rock-Color Chart Committee (representing the U.S. Geological Survey, GSA, the American Association of Petroleum Geologists, the Society of Economic Geologists, and the Association of American State Geologists). Colors within this chart are the same as those used in previous GSA editions, and the chart was produced in cooperation with GSA. Produced by 4300 44th Street • Grand Rapids, MI 49512 • Tel: 877-888-1720 • munsell.com The Rock-Color Chart The pages within this book are cleanable and can be exposed to the standard environmental conditions that are met in the field. This does not mean that the book will be able to withstand all of the environmental conditions that it is exposed to in the field. For the cleaning of the colored pages, please make sure not to use a cleaning agent or materials that are coarse in nature. These materials could either damage the surface of the color chips or cause the color chips to start to delaminate from the pages. With the specifying of the rock color it is important to remember to replace the rock color chart book on a regular basis so that the colors being specified are consistent from one individual to another. We recommend that you mark the date when you started to use the book. -

Ph.D. Thesis Abstractindex

COLOR REPRODUCTION OF FACIAL PATTERN AND ENDOSCOPIC IMAGE BASED ON COLOR APPEARANCE MODELS December 1996 Francisco Hideki Imai Graduate School of Science and Technology Chiba University, JAPAN Dedicated to my parents ii COLOR REPRODUCTION OF FACIAL PATTERN AND ENDOSCOPIC IMAGE BASED ON COLOR APPEARANCE MODELS A dissertation submitted to the Graduate School of Science and Technology of Chiba University in partial fulfillment of the requirements for the degree of Doctor of Philosophy by Francisco Hideki Imai December 1996 iii Declaration This is to certify that this work has been done by me and it has not been submitted elsewhere for the award of any degree or diploma. Countersigned Signature of the student ___________________________________ _______________________________ Yoichi Miyake, Professor Francisco Hideki Imai iv The undersigned have examined the dissertation entitled COLOR REPRODUCTION OF FACIAL PATTERN AND ENDOSCOPIC IMAGE BASED ON COLOR APPEARANCE MODELS presented by _______Francisco Hideki Imai____________, a candidate for the degree of Doctor of Philosophy, and hereby certify that it is worthy of acceptance. ______________________________ ______________________________ Date Advisor Yoichi Miyake, Professor EXAMINING COMMITTEE ____________________________ Yoshizumi Yasuda, Professor ____________________________ Toshio Honda, Professor ____________________________ Hirohisa Yaguchi, Professor ____________________________ Atsushi Imiya, Associate Professor v Abstract In recent years, many imaging systems have been developed, and it became increasingly important to exchange image data through the computer network. Therefore, it is required to reproduce color independently on each imaging device. In the studies of device independent color reproduction, colorimetric color reproduction has been done, namely color with same chromaticity or tristimulus values is reproduced. 
However, even if the tristimulus values are the same, color appearance is not always same under different viewing conditions. -

Medtronic Brand Color Chart

Medtronic Brand Color Chart Medtronic Visual Identity System: Color Ratios Navy Blue Medtronic Blue Cobalt Blue Charcoal Blue Gray Dark Gray Yellow Light Orange Gray Orange Medium Blue Sky Blue Light Blue Light Gray Pale Gray White Purple Green Turquoise Primary Blue Color Palette 70% Primary Neutral Color Palette 20% Accent Color Palette 10% The Medtronic Brand Color Palette was created for use in all material. Please use the CMYK, RGB, HEX, and LAB values as often as possible. If you are not able to use the LAB values, you can use the Pantone equivalents, but be aware the color output will vary from the other four color breakdowns. If you need a spot color, the preference is for you to use the LAB values. Primary Blue Color Palette Navy Blue Medtronic Blue C: 100 C: 99 R: 0 L: 15 R: 0 L: 31 M: 94 Web/HEX Pantone M: 74 Web/HEX Pantone G: 30 A: 2 G: 75 A: -2 Y: 47 #001E46 533 C Y: 17 #004B87 2154 C B: 70 B: -20 B: 135 B: -40 K: 43 K: 4 Cobalt Blue Medium Blue C: 81 C: 73 R: 0 L: 52 R: 0 L: 64 M: 35 Web/HEX Pantone M: 12 Web/HEX Pantone G: 133 A: -12 G: 169 A: -23 Y: 0 #0085CA 2382 C Y: 0 #00A9E0 2191 C B: 202 B: -45 B: 224 B: -39 K: 0 K : 0 Sky Blue Light Blue C: 55 C: 29 R: 113 L: 75 R: 185 L: 85 M: 4 Web/HEX Pantone M: 5 Web/HEX Pantone G: 197 A: -20 G: 217 A: -9 Y: 4 #71C5E8 297 C Y: 5 #B9D9EB 290 C B: 232 B: -26 B: 235 B: -13 K: 0 K: 0 Primary Neutral Color Palette Charcoal Gray Blue Gray C: 0 Pantone C: 68 R: 83 L: 36 R: 91 L: 51 M: 0 Web/HEX Cool M: 40 Web/HEX Pantone G: 86 A: 0 G: 127 A: -9 Y: 0 #53565a Gray Y: 28 #5B7F95 5415 -

Digital Color Workflows and the HP DreamColor LP2480zx Professional Display

Digital Color Workflows and the HP DreamColor LP2480zx Professional Display Improving accuracy and predictability in color processing at the designer’s desk can increase productivity and improve quality of digital color projects in animation, game development, film/video post production, broadcast, product design, graphic arts and photography. Contents: Introduction; Managing color; The property of color; The RGB color set; The CMYK color set; Color spaces; Gamuts; The digital workflow; Color profiles -

Comparing Color Vision Testing Using the Farnsworth-Munsell 100-Hue, Ishihara Compatible, and Digital TCV Software Rachel A

Pacific University CommonKnowledge College of Optometry Theses, Dissertations and Capstone Projects 4-23-2015 Comparing Color Vision Testing Using the Farnsworth-Munsell 100-Hue, Ishihara Compatible, and Digital TCV Software Rachel A. Murphy Pacific University, [email protected] Recommended Citation Murphy, Rachel A., "Comparing Color Vision Testing Using the Farnsworth-Munsell 100-Hue, Ishihara Compatible, and Digital TCV Software" (2015). College of Optometry. Paper 9. http://commons.pacificu.edu/opt/9 This Thesis is brought to you for free and open access by the Theses, Dissertations and Capstone Projects at CommonKnowledge. It has been accepted for inclusion in College of Optometry by an authorized administrator of CommonKnowledge. For more information, please contact [email protected]. Comparing Color Vision Testing Using the Farnsworth-Munsell 100-Hue, Ishihara Compatible, and Digital TCV Software Abstract It is crucial that eye care professionals be able to provide quick, accurate, and complete testing of color vision, both to enhance the lives of patients and to satisfy the requirements laid out by industry standards. With the growing popularity of the use of digital equipment in offices, there is a natural progression to digital color vision screening tests, which have the advantage of being fast, inexpensive, and readily portable with automated scoring for greater consistency. Few studies have sought to validate specific digital tests. The aim of this study is to compare two traditionally accepted manual tests for detecting congenital color vision deficiency (CCVD) with analogous digital versions. -

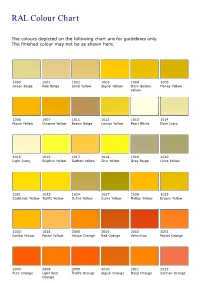

RAL Colour Chart

RAL Colour Chart The colours depicted on the following chart are for guidelines only. The finished colour may not be as shown here. 1000 1001 1002 1003 1004 1005 Green Beige Pale Beige Sand Yellow Signal Yellow Dark Golden Honey Yellow Yellow 1006 1007 1011 1012 1013 1014 Maize Yellow Chrome Yellow Brown Beige Lemon Yellow Pearl White Dark Ivory 1015 1016 1017 1018 1019 1020 Light Ivory Sulphur Yellow Saffron Yellow Zinc Yellow Grey Beige Olive Yellow 1021 1023 1024 1027 1028 1032 Cadmium Yellow Traffic Yellow Ochre Yellow Curry Yellow Melon Yellow Broom Yellow 1033 1034 2000 2001 2002 2003 Dahlia Yellow Pastel Yellow Yellow Orange Red Orange Vermilion Pastel Orange 2004 2008 2009 2010 2011 2012 Pure Orange Light Red Traffic Orange Signal Orange Deep Orange Salmon Orange Orange 3000 3001 3002 3003 3004 3005 Flame Red RAL Signal Red Carmine Red Ruby Red Purple Red Wine Red 3007 3009 3011 3012 3013 3014 Black Red Oxide Red Brown Red Beige Red Tomato Red Antique Pink 3015 3016 3017 3018 3020 3022 Light Pink Coral Red Rose Strawberry Red Traffic Red Dark Salmon Red 3027 3031 4001 4002 4003 4004 Raspberry Red Orient Red Red Lilac Red Violet Heather Violet Claret Violet 4005 4006 4007 4008 4009 4010 Blue Lilac Traffic Purple Purple Violet Signal Violet Pastel Violet Telemagenta 5000 5001 5002 5003 5004 5005 Violet Blue Green Blue Ultramarine Blue dark Sapphire Black Blue Signal Blue Blue 5007 5008 5009 5010 5011 5012 Brilliant Blue Grey Blue Light Azure Blue Gentian Blue Steel Blue Light Blue 5013 5014 5015 5017 5018 5019 Dark Cobalt Blue -

Creating 4K/UHD Content Poster

Creating 4K/UHD Content Colorimetry Image Format / SMPTE Standards Figure A2. Using a Table B1: SMPTE Standards The television color specification is based on standards defined by the CIE (Commission 100% color bar signal Square Division separates the image into quad links for distribution. to show conversion Internationale de L’Éclairage) in 1931. The CIE specified an idealized set of primary XYZ SMPTE Standards of RGB levels from UHDTV 1: 3840x2160 (4x1920x1080) tristimulus values. This set is a group of all-positive values converted from R’G’B’ where 700 mv (100%) to ST 125 SDTV Component Video Signal Coding for 4:4:4 and 4:2:2 for 13.5 MHz and 18 MHz Systems 0mv (0%) for each ST 240 Television – 1125-Line High-Definition Production Systems – Signal Parameters Y is proportional to the luminance of the additive mix. This specification is used as the color component with a color bar split ST 259 Television – SDTV Digital Signal/Data – Serial Digital Interface basis for color within 4K/UHDTV1 that supports both ITU-R BT.709 and BT2020. 2020 field BT.2020 and ST 272 Television – Formatting AES/EBU Audio and Auxiliary Data into Digital Video Ancillary Data Space BT.709 test signal. ST 274 Television – 1920 x 1080 Image Sample Structure, Digital Representation and Digital Timing Reference Sequences for The WFM8300 was Table A1: Illuminant (Ill.) Value Multiple Picture Rates 709 configured for Source X / Y BT.709 colorimetry ST 296 1280 x 720 Progressive Image 4:2:2 and 4:4:4 Sample Structure – Analog & Digital Representation & Analog Interface as shown in the video ST 299-0/1/2 24-Bit Digital Audio Format for SMPTE Bit-Serial Interfaces at 1.5 Gb/s and 3 Gb/s – Document Suite Illuminant A: Tungsten Filament Lamp, 2854°K x = 0.4476 y = 0.4075 session display. -

RAL COLOR CHART ***** This Chart Is to Be Used As a Guide Only. Colors May Appear Slightly Different ***** Green Beige Purple V

RAL COLOR CHART ***** This Chart is to be used as a guide only. Colors May Appear Slightly Different ***** RAL 1000 Green Beige RAL 4007 Purple Violet RAL 7008 Khaki Grey RAL 4008 RAL 7009 RAL 1001 Beige Signal Violet Green Grey Tarpaulin RAL 1002 Sand Yellow RAL 4009 Pastel Violet RAL 7010 Grey RAL 1003 Signal Yellow RAL 5000 Violet Blue RAL 7011 Iron Grey RAL 1004 Golden Yellow RAL 5001 Green Blue RAL 7012 Basalt Grey Ultramarine RAL 1005 Honey Yellow RAL 5002 RAL 7013 Brown Grey Blue RAL 1006 Maize Yellow RAL 5003 Sapphire Blue RAL 7015 Slate Grey Anthracite RAL 1007 Chrome Yellow RAL 5004 Black Blue RAL 7016 Grey RAL 1011 Brown Beige RAL 5005 Signal Blue RAL 7021 Black Grey RAL 1012 Lemon Yellow RAL 5007 Brilliant Blue RAL 7022 Umbra Grey Concrete RAL 1013 Oyster White RAL 5008 Grey Blue RAL 7023 Grey Graphite RAL 1014 Ivory RAL 5009 Azure Blue RAL 7024 Grey Granite RAL 1015 Light Ivory RAL 5010 Gentian Blue RAL 7026 Grey RAL 1016 Sulfur Yellow RAL 5011 Steel Blue RAL 7030 Stone Grey RAL 1017 Saffron Yellow RAL 5012 Light Blue RAL 7031 Blue Grey RAL 1018 Zinc Yellow RAL 5013 Cobalt Blue RAL 7032 Pebble Grey Cement RAL 1019 Grey Beige RAL 5014 Pigeon Blue RAL 7033 Grey RAL 1020 Olive Yellow RAL 5015 Sky Blue RAL 7034 Yellow Grey RAL 1021 Rape Yellow RAL 5017 Traffic Blue RAL 7035 Light Grey Platinum RAL 1023 Traffic Yellow RAL 5018 Turquoise Blue RAL 7036 Grey RAL 1024 Ochre Yellow RAL 5019 Capri Blue RAL 7037 Dusty Grey RAL 1027 Curry RAL 5020 Ocean Blue RAL 7038 Agate Grey RAL 1028 Melon Yellow RAL 5021 Water Blue RAL 7039 Quartz Grey -

340 K2017D.Pdf

Contents / Contenido Introduction Game Introducción Color 4 49 Model Game Color Air 7 54 Liquid Color Cases Gold Maletines 16 56 Model Weathering Air Effects 17 60 Metal Pigments Color Pigmentos 42 68 Panzer Model Wash Aces Lavados 46 72 Surface Primer Paint Imprimación Stand 76 90 Diorama Accessories Effects Accesorios 78 92 Premium Publications RC Color Publicaciones 82 94 Auxiliaries Displays Auxiliares Expositores 84 97 Brushes Health & Safety Pinceles Salud y seguridad 88 102 Made in Spain All colors in our catalogue conform to ASTM Pictures courtesy by: Due to the printing process, the colors D-4236 standards and EEC regulation 67/548/ Imágenes cedidas por: reproduced in this catalogue are to be considered CEE, and do not require health labelling. as approximate only, and may not correspond Avatars of War, Juanjo Baron, José Brito, Murat exactly to the originals. Todos los colores en nuestro catálogo están Conform to Özgül, Chema Cabrero, Freebooterminiatures, ASTM D-4236 conformes con las normas ASTM D-4236 (American Angel Giraldez, Raúl Garcia La Torre, Jaime Ortiz, Debido a la impresión en cuatricromía, los colores and Society for Testing and Materials) y con la normativa en este catálogo pueden variar de los colores EEC regulation Plastic Soldier Company Ltd, Euromodelismo, 67/548/CEE Europea 67/548/ CEE. Robert Karlsson “Rogland”, Scratchmod. originales, y su reproducción es tan solo orientativa. Introduction The Vallejo Company was established in 1965, in New Jersey, EN U.S.A. In the first years the company specialized in the manufacture of Film Color, waterbased acrylic colors for animated films (cartoons). -

Spectral Primary Decomposition for Rendering with sRGB Reflectance

Eurographics Symposium on Rendering (DL-only Track) (2019) T. Boubekeur and P. Sen (Editors) Spectral Primary Decomposition for Rendering with sRGB Reflectance Ian Mallett and Cem Yuksel, University of Utah [Figure 1 panels: Ground Truth; Our Method; Meng et al. 2015; error maps (noise and imprecision; color distortion), ΔE CIE76] Figure 1: Spectral rendering of a texture containing the entire sRGB gamut as the Lambertian albedo for a plane under a D65 environment. In this configuration, ideally, rendered sRGB pixels should match the texture’s values. Prior work by Meng et al. [MSHD15] produces noticeable color distortion, whereas our method produces no error beyond numerical precision and Monte Carlo sampling noise (the magnitude of the ΔE induced by this noise varies with the image because sRGB is perceptually nonlinear). Contemporary work [JH19] is also nearly able to achieve this, but at a significant implementation and memory cost. Abstract Spectral renderers, as compared to RGB renderers, are able to simulate light transport that is closer to reality, capturing light behavior that is impossible to simulate with any three-primary decomposition. However, spectral rendering requires spectral scene data (e.g. textures and material properties), which is not widely available, severely limiting the practicality of spectral rendering. Unfortunately, producing a physically valid reflectance spectrum from a given sRGB triple has been a challenging problem, and indeed until very recently constructing a spectrum without colorimetric round-trip error was thought to be impossible. In this paper, we introduce a new procedure for efficiently generating a reflectance spectrum from any given sRGB input data. -

Chromatic Adaptation Transform by Spectral Reconstruction Scott A

Chromatic Adaptation Transform by Spectral Reconstruction Scott A. Burns, University of Illinois at Urbana-Champaign, [email protected] February 28, 2019 Note to readers: This version of the paper is a preprint of a paper to appear in Color Research and Application in October 2019 (Citation: Burns SA. Chromatic adaptation transform by spectral reconstruction. Color Res Appl. 2019;44(5):682-693). The full text of the final version is available courtesy of Wiley Content Sharing initiative at: https://rdcu.be/bEZbD. The final published version differs substantially from the preprint shown here, as follows. The claims of negative tristimulus values being “failures” of a CAT are removed, since in some circumstances such as with “supersaturated” colors, it may be reasonable for a CAT to produce such results. The revised version simply states that in certain applications, tristimulus values outside the spectral locus or having negative values are undesirable. In these cases, the proposed method will guarantee that the destination colors will always be within the spectral locus. Abstract: A color appearance model (CAM) is an advanced colorimetric tool used to predict color appearance under a wide variety of viewing conditions. A chromatic adaptation transform (CAT) is an integral part of a CAM. Its role is to predict “corresponding colors,” that is, a pair of colors that have the same color appearance when viewed under different illuminants, after partial or full adaptation to each illuminant. Modern CATs perform well when applied to a limited range of illuminant pairs and a limited range of source (test) colors. However, they can fail if operated outside these ranges.