Test and Integration Environment for PCI Coprocessor Cards

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

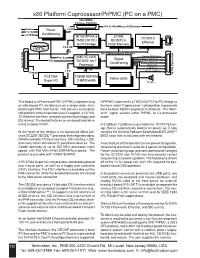

x86 Platform Coprocessor/PrPMC (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC)

[Block diagram; labeled components include: 32b/33MHz PCI bus (PN1/PN2), TMS2250 PCI-to-PCI bridge, 32b/33MHz internal PCI bus, AMD SC2200 "GEODE (tm)" processor, 1K100 FPGA and 512KB BIOS/PLA flash memory, 10/100TX Ethernet (RJ45), Compact Flash site, analog SVGA video, COM 1 and COM 2 (RXD/TXD only), IDE, USB ports 1 and 2, LPC, PC87364 Super I/O (keyboard/mouse, floppy), 128MB SDRAM (16MWx64b), status LEDs, PC speaker, power conditioning and sequencing, 36-pin connector, rear I/O on PN4.]

This board is a Processor PMC (PrPMC) implementing an x86-based PC architecture on a single-wide, standard height PMC form factor. This delivers a complete x86 platform with comprehensive I/O support, a 10/100-TX Ethernet interface, and disk access (both floppy and IDE drives). The board features an on-board hard drive using Compact Flash. At the heart of the design is an Advanced Micro Devices SC2200 GEODE™ processor that integrates video, …

A PrPMC implements a TMS2250 PCI-to-PCI bridge to the host, and a “Coprocessor” configuration implements back-to-back 16550 compatible COM ports. The “Monarch” signal selects either PrPMC or Co-processor mode. A 512Kbyte FLASH memory holds the 1K100 PLA image that is automatically loaded on power up. It also contains the General Software Embedded BIOS 2000™ BIOS code that is included with each board.

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

SPARC/CPU-5V Technical Reference Manual

SPARC/CPU-5V Technical Reference Manual. P/N 203651, Edition 5.0, February 1998. FORCE COMPUTERS Inc./GmbH. All Rights Reserved. This document shall not be duplicated, nor its contents used for any purpose, unless express permission has been granted. Copyright by FORCE COMPUTERS.

CPU-5V Technical Reference Manual: Table of Contents

SECTION 1 INTRODUCTION

1. Getting Started

1.1. The SPARC CPU-5V Technical Reference Manual Set

1.2. Summary of the SPARC CPU-5V

1.3. Specifications

1.3.1. Ordering Information

1.4. History of the Manual

SECTION 2 INSTALLATION

2. Introduction

2.1. Caution

Discontinued Emulex-Branded Products

Broadcom, 1320 Ridder Park Drive, San Jose, CA 95131, broadcom.com

Discontinued Emulex-branded Products: Fibre Channel Host Bus Adapters, Fibre Channel HUBS, Switches, Software Solutions. October 30, 2020.

This document only applies to Emulex-branded products. Consult your supplier for specific information on OEM-branded products.

Overview

Broadcom Limited is committed to our customers by delivering product functionality and reliability that exceeds their expectation. Below is a description of key terminology and discontinued product tables.

Table 1: Fibre Channel Host Bus Adapters

Table 2: Fibre Channel Optical Transceiver Kits

Table 3: Fibre Channel HUBs

Table 4: Switches

Table 5: Software Solutions

Key Terminology

End of Life (EOL) notification: EOL indicates the date that distributors were notified of Broadcom intent to discontinue an Emulex model in production. Distributors are typically given a three-to-six month notice of Broadcom plans to discontinue a particular model.

Final order: This is the last date that Broadcom will accept orders from a customer or distributor.

End of support: This is the last date technical support is available for reporting software issues on discontinued models. The tables below indicate the last date of support or whether support has already ended.

Software updates: Active devices: Drivers for already supported operating system (OS) versions and firmware are updated periodically with new features and/or corrections. Customers should check www.broadcom.com or call technical support for support of new OS versions for a given device.

End of maintenance, Support Availability: For software solutions, this is the last date software upgrades or updates are available.

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems

Peng Li (Seagate Technology, Shakopee, MN, 55379), Kevin Gomez (Seagate Technology, Shakopee, MN, 55379), David J. Lilja (University of Minnesota, Twin Cities, Minneapolis, MN, 55455)

Abstract: Energy consumption is a fundamental issue in today’s data centers as data continue growing dramatically. How to process these data in an energy-efficient way becomes more and more important. Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the performance gap between CPUs and main memory) by moving computing closer to the data. However, these methods have not been widely adopted due to high cost and limited performance improvements. In this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state drive (SSD) cost. By pre-processing the data using the SPU, the data that needs to be transferred to host CPUs for further processing …

A well-known solution to the memory wall issue is moving computing closer to the data. For example, Gokhale et al [2] proposed a processor-in-memory (PIM) chip by adding a processor into the main memory for computing. Riedel et al [9] proposed an active disk by using the processor inside the hard disk drive (HDD) for computing. With the evolution of other non-volatile memories (NVMs), such as phase-change memory (PCM) and spin-transfer torque (STT)-RAM, researchers also proposed to use these NVMs as the main memory for data-intensive applications [10] to improve the system energy-efficiency.

Sun Ultra™ 2 Workstation Just the Facts

Sun Ultra™ 2 Workstation: Just the Facts

Copyrights 1999 Sun Microsystems, Inc. All Rights Reserved. Sun, Sun Microsystems, the Sun Logo, Ultra, SunFastEthernet, Sun Enterprise, TurboGX, TurboGXplus, Solaris, VIS, SunATM, SunCD, XIL, XGL, Java, Java 3D, JDK, S24, OpenWindows, Sun StorEdge, SunISDN, SunSwift, SunTRI/S, SunHSI/S, SunFastEthernet, SunFDDI, SunPC, NFS, SunVideo, SunButtons, SunDials, UltraServer, IPX, IPC, SLC, ELC, Sun-3, Sun386i, SunSpectrum, SunSpectrum Platinum, SunSpectrum Gold, SunSpectrum Silver, SunSpectrum Bronze, SunVIP, SunSolve, and SunSolve EarlyNotifier are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the United States and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the United States and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. OpenGL is a registered trademark of Silicon Graphics, Inc. UNIX is a registered trademark in the United States and other countries, exclusively licensed through X/Open Company, Ltd. Display PostScript and PostScript are trademarks of Adobe Systems, Incorporated. DLT is claimed as a trademark of Quantum Corporation in the United States and other countries.

Just the Facts, May 1999

Figure 1. The Sun Ultra™ 2 workstation

Sun Ultra 2 Workstation: Scalable Computing Power for the Desktop

Sun Ultra™ 2 workstations are designed for the technical users who require high performance and multiprocessing (MP) capability. The Sun Ultra™ 2 desktop series combines the power of multiprocessing with high-bandwidth networking, high-performance graphics, and exceptional application performance in a compact desktop package. Users of MP-ready and multithreaded applications will benefit greatly from the performance of the Sun Ultra 2 dual-processor capability.

SBus Specification B.0 Written by Edward H

SBus Specification B.0

Written by Edward H. Frank and Jim Lyle. Edited by Jim Lyle and Mike Harvey. Copyright ©1990 Sun Microsystems, Inc. Printed in U.S.A.

The Sun logo, Sun Microsystems, and Sun Workstation are registered trademarks of Sun Microsystems, Inc. Sun, Sun-2, Sun-3, Sun-4, Sun386i, SunInstall, SunOS, SunView, NFS, SunLink, NeWS, SPARC, and SPARCstation 1 are trademarks of Sun Microsystems, Inc. UNIX is a registered trademark of AT&T. The Sun Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun's licensees. All other products or services mentioned in this document are identified by the trademarks or service marks of their respective companies or organizations, and Sun Microsystems, Inc. disclaims any responsibility for specifying which marks are owned by which companies or organizations.

All rights reserved. No part of this work covered by copyright hereon may be reproduced in any form or by any means (graphic, electronic, or mechanical, including photocopying, recording, taping, or storage in an information retrieval system) without the prior written permission of the copyright owner.

Restricted rights legend: use, duplication, or disclosure by the U.S. government is subject to restrictions set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and in similar clauses in the FAR and NASA FAR Supplement.

CUDA What Is GPGPU

What is GPGPU ? • General Purpose computation using GPU in applications other than 3D graphics CUDA – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput Slides by David Kirk – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation • Applications – see //GPGPU.org – Game effects (FX) physics, image processing – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting Previous GPGPU Constraints CUDA • Dealing with graphics API per thread per Shader Input Registers • “Compute Unified Device Architecture” – Working with the corner cases per Context • General purpose programming model of the graphics API Fragment Program Texture – User kicks off batches of threads on the GPU • Addressing modes Constants – GPU = dedicated super-threaded, massively data parallel co-processor – Limited texture size/dimension Temp Registers • Targeted software stack – Compute oriented drivers, language, and tools Output Registers • Shader capabilities • Driver for loading computation programs into GPU FB Memory – Limited outputs – Standalone Driver - Optimized for computation • Instruction sets – Interface designed for compute - graphics free API – Data sharing with OpenGL buffer objects – Lack of Integer & bit ops – Guaranteed maximum download & readback speeds • Communication limited – Explicit GPU memory management – Between pixels – Scatter a[i] = p 1 Parallel Computing on a GPU Extended C • Declspecs • NVIDIA GPU Computing Architecture – global, device, shared, __device__ float filter[N]; – Via a separate HW interface local, constant __global__ void convolve (float *image) { – In laptops, desktops, workstations, servers GeForce 8800 __shared__ float region[M]; ... 
• Keywords • 8-series GPUs deliver 50 to 200 GFLOPS region[threadIdx] = image[i]; on compiled parallel C applications – threadIdx, blockIdx • Intrinsics __syncthreads() – __syncthreads .. -

Archived: Getting Started with Your SB-GPIB and NI-488.2M for Solaris 1

Getting Started with Your SB-GPIB and the NI-488.2M™ Software for Solaris 1 November 1993 Edition Part Number 320299-01 © Copyright 1991, 1994 National Instruments Corporation. All Rights Reserved. National Instruments Corporate Headquarters 6504 Bridge Point Parkway Austin, TX 78730-5039 (512) 794-0100 Technical support fax: (800) 328-2203 (512) 794-5678 Branch Offices: Australia (03) 879 9422, Austria (0662) 435986, Belgium 02/757.00.20, Canada (Ontario) (519) 622-9310, Canada (Québec) (514) 694-8521, Denmark 45 76 26 00, Finland (90) 527 2321, France (1) 48 14 24 24, Germany 089/741 31 30, Italy 02/48301892, Japan (03) 3788-1921, Netherlands 03480-33466, Norway 32-848400, Spain (91) 640 0085, Sweden 08-730 49 70, Switzerland 056/20 51 51, U.K. 0635 523545 Limited Warranty The SB-GPIB is warranted against defects in materials and workmanship for a period of two years from the date of shipment, as evidenced by receipts or other documentation. National Instruments will, at its option, repair or replace equipment that proves to be defective during the warranty period. This warranty includes parts and labor. The media on which you receive National Instruments software are warranted not to fail to execute programming instructions, due to defects in materials and workmanship, for a period of 90 days from date of shipment, as evidenced by receipts or other documentation. National Instruments will, at its option, repair or replace software media that do not execute programming instructions if National Instruments receives notice of such defects during the warranty period. National Instruments does not warrant that the operation of the software shall be uninterrupted or error free. -

Ece585 Lec2.Pdf

ECE 485/585 Microprocessor System Design Lecture 2: Memory Addressing 8086 Basics and Bus Timing Asynchronous I/O Signaling Zeshan Chishti Electrical and Computer Engineering Dept Maseeh College of Engineering and Computer Science Source: Lecture based on materials provided by Mark F. Basic I/O – Part I ECE 485/585 Outline for next few lectures Simple model of computation Memory Addressing (Alignment, Byte Order) 8088/8086 Bus Asynchronous I/O Signaling Review of Basic I/O How is I/O performed Dedicated/Isolated /Direct I/O Ports Memory Mapped I/O How do we tell when I/O device is ready or command complete? Polling Interrupts How do we transfer data? Programmed I/O DMA ECE 485/585 Simplified Model of a Computer Control Control Data, Address, Memory Data Path Microprocessor Keyboard Mouse [Fetch] Video display [Decode] Printer [Execute] I/O Device Hard disk drive Audio card Ethernet WiFi CD R/W DVD ECE 485/585 Memory Addressing Size of operands Bytes, words, long/double words, quadwords 16-bit half word (Intel: word) 32-bit word (Intel: doubleword, dword) 0x107 64-bit double word (Intel: quadword, qword) 0x106 Note: names are non-standard 0x105 SUN Sparc word is 32-bits, double is 64-bits 0x104 0x103 Alignment 0x102 Can multi-byte operands begin at any byte address? 0x101 Yes: non-aligned 0x100 No: aligned. Low order address bit(s) will be zero ECE 485/585 Memory Operand Alignment …Intel IA speak (i.e. word = 16-bits = 2 bytes) 0x107 0x106 0x105 0x104 0x103 0x102 0x101 0x100 Aligned Unaligned Aligned Unaligned Aligned Unaligned word word Double Double Quad Quad address address word word word word -----0 address address address address -----00 ----000 ECE 485/585 Memory Operand Alignment Why do we care? 
Unaligned memory references Can cause multiple memory bus cycles for a single operand May also span cache lines Requiring multiple evictions, multiple cache line fills Complicates memory system and cache controller design Some architectures restrict addresses to be aligned Even in architectures without alignment restrictions (e.g. -

Comparing the Power and Performance of Intel's SCC to State

Comparing the Power and Performance of Intel’s SCC to State-of-the-Art CPUs and GPUs

Ehsan Totoni, Babak Behzad, Swapnil Ghike, Josep Torrellas. Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA

Abstract: Power dissipation and energy consumption are becoming increasingly important architectural design constraints in different types of computers, from embedded systems to large-scale supercomputers. To continue the scaling of performance, it is essential that we build parallel processor chips that make the best use of exponentially increasing numbers of transistors within the power and energy budgets. Intel SCC is an appealing option for future many-core architectures. In this paper, we use various scalable applications to quantitatively compare and analyze the performance, power consumption and energy efficiency of different cutting-edge platforms that differ in architectural build. These platforms include the Intel Single-Chip Cloud Computer (SCC) many-core, the Intel Core i7 general-purpose multi-core, the Intel Atom low-power processor, and the Nvidia ION2 …

A key architectural challenge now is how to support increasing parallelism and scale performance, while being power and energy efficient. There are multiple options on the table, namely “heavy-weight” multi-cores (such as general purpose processors), “light-weight” many-cores (such as Intel’s Single-Chip Cloud Computer (SCC) [1]), low-power processors (such as embedded processors), and SIMD-like highly-parallel architectures (such as General-Purpose Graphics Processing Units (GPGPUs)).

The Intel SCC [1] is a research chip made by Intel Labs to explore future many-core architectures. It has 48 Pentium (P54C) cores in 24 tiles of two cores each.

World's First High-Performance x86 With

World’s First High-Performance x86 with Integrated AI Coprocessor

Linley Spring Processor Conference 2020, April 8, 2020

Glenn Henry (Chief AI Architect), Dr. Parviz Palangpour (AI Software)

Deep Dive into Centaur’s New x86 AI Coprocessor (Ncore)

• Background
• Motivations
• Constraints
• Architecture
• Software
• Benchmarks
• Conclusion

Demonstrated Working Silicon For Video-Analytics Edge Server Nov, 2019

©2020 Centaur Technology. All Rights Reserved

Centaur Technology Background

• 25-year-old startup in Austin, owned by Via Technologies
• We design, from scratch, low-cost x86 processors
• Everything to produce a custom x86 SoC with ~100 people
• Architecture, logic design and microcode
• Design, verification, and layout
• Physical build, fab interface, and tape-out
• Shipped by IBM, HP, Dell, Samsung, Lenovo…

Genesis of the AI Coprocessor (Ncore)

• Centaur was developing SoC (CHA) with new x86 cores
• Targeted at edge/cloud server market (high-end x86 features)
• Huge inference markets beyond hyperscale cloud, IOT and mobile
• Video analytics, edge computing, on-premise servers
• However, x86 isn’t efficient at inference
• High-performance inference requires external accelerator
• CHA has 44x PCIe to support GPUs, etc.
• But adds cost, power, another point of failure, etc.

Why not integrate a coprocessor?

• Very low cost
• Many components already on SoC (“free” to Ncore): caches, memory, clock, power, package, pins, busses, etc.
• There often is “free” space on complex SOCs due to I/O & pins
• Having many high-performance x86 cores allows flexibility
• The x86 cores can do some of the work, in parallel
• Didn’t have to implement all strange/new functions
• Allows fast prototyping of new things
• For customer: nothing extra to buy