Financial Computing on GPUs Lecture 1: CPUs and GPUs Mike Giles

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Cell Processor and Cell Processor Based Devices

Cell Processor and Cell Processor Based Devices May 31, 2005 SPXXL Barry Bolding, WW Deep Computing Thanks to Ashwini Nanda IBM TJ Watson Research Center New York Pathway to the Digital Media Revolution Incremental Technology Innovations Provide Stepping Stones of Progress to the Future Virtual Communities Immersive WEB Portals Virtual Travel & eCommerce 20xx "Matrix" HD Content Virtual Tokyo Creation & Delivery Real-time Creation and Modification of Content Immersive Environment Needs enormous Real-Time Engineering computing Design Collaboration power 2004 Incremental Technology Innovations on the Horizon: On-Demand Computing & Communication Infrastructures Online Games Application Optimized Processor and System Architectures Leading Edge Communication Bandwidth/Storage Capacities Immersion HW and SW Technologies Rich Media Applications, Middleware and OS Cell Processor Overview Cell History • IBM, SCEI/Sony, Toshiba Alliance formed in 2000 • Design Center opened in March 2001 • Based in Austin, Texas • February 7, 2005: First technical disclosures Cell Highlights • Multi-core microprocessor (9 cores) • The possibilities… – Supercomputer on a chip – Digital home to distributed computing and supercomputing – >10x performance potential for many kernels/algorithms • Current prototypes running <3 GHz clock frequency • Demonstrated Beehive at Electronic Entertainment Expo Meeting – TRE (Terrain Rendering Engine application) Introducing Cell -

The GPU Computing Revolution

The GPU Computing Revolution From Multi-Core CPUs to Many-Core Graphics Processors A Knowledge Transfer Report from the London Mathematical Society and Knowledge Transfer Network for Industrial Mathematics By Simon McIntosh-Smith Copyright © 2011 by Simon McIntosh-Smith Front cover image credits: Top left: Umberto Shtanzman / Shutterstock.com Top right: godrick / Shutterstock.com Bottom left: Double Negative Visual Effects Bottom right: University of Bristol Background: Serg64 / Shutterstock.com THE GPU COMPUTING REVOLUTION From Multi-Core CPUs To Many-Core Graphics Processors By Simon McIntosh-Smith Contents Page Executive Summary 3 From Multi-Core to Many-Core: Background and Development 4 Success Stories 7 GPUs in Depth 11 Current Challenges 18 Next Steps 19 Appendix 1: Active Researchers and Practitioner Groups 21 Appendix 2: Software Applications Available on GPUs 23 References 24 September 2011 A Knowledge Transfer Report from the London Mathematical Society and the Knowledge Transfer Network for Industrial Mathematics Edited by Robert Leese and Tom Melham London Mathematical Society, De Morgan House, 57–58 Russell Square, London WC1B 4HS KTN for Industrial Mathematics, Surrey Technology Centre, Surrey Research Park, Guildford GU2 7YG 2 THE GPU COMPUTING REVOLUTION From Multi-Core CPUs To Many-Core Graphics Processors AUTHOR Simon McIntosh-Smith is head of the Microelectronics Research Group at the Univer- sity of Bristol and chair of the Many-Core and Reconfigurable Supercomputing Conference (MRSC), Europe’s largest conference dedicated to the use of massively parallel computer architectures. Prior to joining the university he spent fifteen years in industry where he designed massively parallel hardware and software at companies such as Inmos, STMicro- electronics and Pixelfusion, before co-founding ClearSpeed as Vice-President of Architec- ture and Applications. -

OLCF AR 2016-17 FINAL 9-7-17.Pdf

Oak Ridge Leadership Computing Facility Annual Report 2016–2017 1 Outreach manager – Katie Bethea Writers – Eric Gedenk, Jonathan Hines, Katie Jones, and Rachel Harken Designer – Jason Smith Editor – Wendy Hames Photography – Jason Richards and Carlos Jones Stock images – iStockphoto™ Oak Ridge Leadership Computing Facility Oak Ridge National Laboratory P.O. Box 2008, Oak Ridge, TN 37831-6161 Phone: 865-241-6536 Email: [email protected] Website: https://www.olcf.ornl.gov Facebook: https://www.facebook.com/oakridgeleadershipcomputingfacility Twitter: @OLCFGOV The research detailed in this publication made use of the Oak Ridge Leadership Computing Facility, a US Department of Energy Office of Science User Facility located at DOE’s Oak Ridge National Laboratory. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov. 
2 Contents LETTER In a Record 25th Year, We Celebrate the Past and Look to the Future 4 SCIENCE Streamlining Accelerated Computing for Industry 6 A Seismic Mapping Milestone 8 The Shape of Melting in Two Dimensions 10 A Supercomputing First for Predicting Magnetism in Real Nanoparticles 12 Researchers Flip Script for Lithium-Ion Electrolytes to Simulate Better Batteries 14 A Real CAM-Do Attitude 16 FEATURES Big Data Emphasis and New Partnerships Highlight the Path to Summit 18 OLCF Celebrates 25 Years of HPC Leadership 24 PEOPLE & PROGRAMS Groups within the OLCF 28 OLCF User Group and Executive Board 30 INCITE, ALCC, DD 31 SYSTEMS & SUPPORT Resource Overview 32 User Experience 34 Education, Outreach, and Training 35 ‘TitanWeek’ Recognizes Contributions of Nation’s Premier Supercomputer 36 Selected Publications 38 Acronyms 41 3 In a Record 25th Year, We Celebrate the Past and Look to the Future installed at the turn of the new millennium—to the founding of the Center for Computational Sciences at the US Department of Energy’s Oak Ridge National Laboratory. -

E-Business on Demand- Messaging Guidebook

IBM Systems & Technology Group IBM Deep Computing Rebecca Austen Director, Deep Computing Marketing © 2005 IBM Corporation 1 IBM Systems & Technology Group Deep Computing Innovation Addressing Challenges Beyond Computation System Design Data – Scalability – Data management – Packaging & density – Archival & compliance – Network infrastructure – Performance & reliability – Power consumption & cooling – Simulation & modeling – Data warehousing & mining Software – Capacity management & virtualization – System management – Security – Software integration Economics – Programming models & – Hybrid financial & delivery productivity models – Software licensing 2 © 2005 IBM Corporation IBM Systems & Technology Group Deep Computing Collaboration Innovation Through Client and Industry Partnerships System, Application & User Requirements, Best Practices – SPXXL, ScicomP – BG Consortium Infrastructure – DEISA, TeraGrid, MareNostrum Software & Open Standards – GPFS evolution – Linux, Grid Research & Development – Technology/systems – Blue Gene, Cell – Collaborative projects – Genographic, WCG 3 © 2005 IBM Corporation IBM Systems & Technology Group Deep Computing Embraces a Broad Spectrum of Markets Life Sciences Research, drug discovery, diagnostics, information-based medicine Digital Media Business Digital content creation, Intelligence management and Data warehousing and distribution data mining Petroleum Oil and gas exploration and production Financial Services Optimizing IT infrastructure, risk management and Industrial/Product compliance, -

Legendsincomputing.Pdf

Anita Jones • 2007 IEEE Founders Medal • Director of Defense Research and Engineering at the U.S. Department of Defense from 1993 to 1997 • Fellow of the • Association for Computing Machinery (ACM) • American Association for the Advancement of Science • IEEE • Author of two books and more than 40 papers • U.S. Air Force Meritorious Civilian Service Award • Distinguished Public Service Award • Congressional Record tribute • Augusta Ada Lovelace Award from the Association for Women in Computing • Lawrence R. Quarles Professor in the Computer Science Department at the University of Virginia’s School of Engineering and Applied Science Legends in Computing Amy Pearl Designer and implementer of the Sun Link Service, an open protocol for creating hypertext links between elements of desktop applications Legends in Computing Programming the ENIAC • Programs were not stored • Every new problem required new connections Legends in Computing Stephanie Rosenthal • Computing Research Association Outstanding Female Undergraduate Award, 2007 • research at CMU on social robotics led to two publications. • research on collaborative learning, potential interfaces for use with interactive whiteboards and experiments about issues in collaboration, resulted in a first-authored publication. Legends in Computing 1950s Assembler Programming Class This would be so much easier with a computer… Legends in Computing Elaine Kant • Founder and president of SciComp • Fellow of the American Association for Artificial Intelligence • Fellow of the American Association for the Advancement of Science • Outstanding Achievement Award in Science/Technology, from University YWCA • U.S. Patent No. -

IBM Power Systems Compiler Roadmap

Software Group Compilation Technology IBM Power Systems Compiler Roadmap Roch Archambault IBM Toronto Laboratory [email protected] SCICOMP Barcelona | May 21, 2009 @ 2009 IBM Corporation Software Group Compilation Technology IBM Rational Disclaimer © Copyright IBM Corporation 2008. All rights reserved. The information contained in these materials is provided for informational purposes only, and is provided AS IS without warranty of any kind, express or implied. IBM shall not be responsible for any damages arising out of the use of, or otherwise related to, these materials. Nothing contained in these materials is intended to, nor shall have the effect of, creating any warranties or representations from IBM or its suppliers or licensors, or altering the terms and conditions of the applicable license agreement governing the use of IBM software. References in these materials to IBM products, programs, or services do not imply that they will be available in all countries in which IBM operates. Product release dates and/or capabilities referenced in these materials may change at any time at IBM’s sole discretion based on market opportunities or other factors, and are not intended to be a commitment to future product or feature availability in any way. IBM, the IBM logo, Rational, the Rational logo, Telelogic, the Telelogic logo, and other IBM products and services are trademarks of the International Business Machines Corporation, in the United States, other countries or both. Other company, product, or service names may be trademarks or service marks of others. 2 SCICOMP Barcelona | IBM Power Systems Compiler Roadmap © 2009 IBM Corporation Software Group Compilation Technology Agenda . -

IBM ACTC: Helping to Make Supercomputing Easier

IBM ACTC: Helping to Make Supercomputing Easier Luiz DeRose Advanced Computing Technology Center IBM Research HPC Symposium University of Oklahoma [email protected] Thomas J. Watson Research Center PO Box 218 Sept 12, 2002 © 2002 Yorktown Heights, NY 10598 Outline Who we are • Mission statement • Functional overview and organization • History What we do • Industry solutions and activities Education and training STC community building Application consulting Performance tools research Sept 12, 2002 ACTC - © 2002 - Luiz DeRose [email protected] 2 1 ACTC Mission • To close the gap between HPC users and IBM • Conduct research on applications for IBM servers within the scientific and technical community Technical directions Emerging technologies ACTC - Research • Software tools and libraries • HPC applications • Research collaborations • Education and training Focus • AIX and Linux platforms Sept 12, 2002 ACTC - © 2002 - Luiz DeRose [email protected] 3 ACTC Functional Overview Outside Technology: • Linux • Computational Grids ACTC • DPCL, OpenMP, MPI IBM Technology: • Power 4, RS/6000 SP • GPFS Solutions: • PSSP, LAPI • Tools • Libraries Customer Needs: • App Consulting • Reduce Cost of Ownership • Collaboration • Optimized Applications • Education + User Training • Education • User Groups Sept 12, 2002 ACTC - © 2002 - Luiz DeRose [email protected] 4 2 ACTC History Created in September, 1998 • Emphasis on helping new customers to port and optimize on IBM system • Required establishing relationships with scientists on research level Expanded operations via alignment with Web/Server Division: • EMEA extended in April, 1999 • AP extended (TRL) in September, 2000 • Partnership with IBM Centers of Competency (Server Group) Sept 12, 2002 ACTC - © 2002 - Luiz DeRose [email protected] 5 ACTC Education 1st Power4 Workshop • Jan. -

Regular Expressions Direct URLs

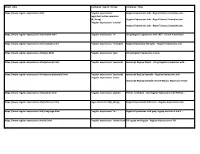

Direct_URLs Combined_Search_Strings Combined_Titles https://www.regular-expressions.info/ "regular expressions" Regular-Expressions.info - Regex Tutorial, Examples and ... regex best online resources (B_String) Regular-Expressions.info - Regex Tutorial, Examples and ... "regular expressions" tutorial Regular-Expressions.info - Regex Tutorial, Examples and ... https://www.regular-expressions.info/dotnet.html "regular expressions" c# Using Regular Expressions with .NET - C# and Visual Basic https://www.regular-expressions.info/examples.html "regular expressions" examples Regular Expression Examples - Regular-Expressions.info https://www.regular-expressions.info/java.html "regular expressions" java Using Regular Expressions in Java https://www.regular-expressions.info/javascript.html "regular expressions" javascript JavaScript RegExp Object - Using Regular Expressions with ... https://www.regular-expressions.info/javascriptexample.html "regular expressions" javascript JavaScript RegExp Example - Regular-Expressions.info "regular expressions" tester JavaScript RegExp Example: Online Regular Expression Tester https://www.regular-expressions.info/python.html "regular expressions" python Python re Module - Use Regular Expressions with Python ... https://www.regular-expressions.info/reference.html regex cheat sheet (B_String) Regular Expressions Reference - Regular-Expressions.info https://www.regular-expressions.info/rlanguage.html "regular expressions" in r Regular Expressions with grep, regexp and sub in the R ... https://www.regular-expressions.info/tcl.html -

Downloading It and Then, by Using Software Technology from Across the Alliance Partners – Xilinx, the Tools, Activate Their Internal ‘Switches’

news The University of Edinburgh Issue 54, summer 2005 www.epcc.ed.ac.uk CONTENTS 2 High End Computing training 3 Advanced Computing Facility opens 4 Computational science on Blue Gene 5 Working on QCDOC 6 FPGA computing 8 QCDOC and Blue Gene workshop 9 Computational biology on a Power5 system 10 Interview with Professor Ken Bowler 13 EPCC at Sun HPC 14 ISC’05 report 15 ScicomP11 in Edinburgh 16 SuperComputing’05 Third Annual HPCx Seminar New arrivals at the zoo FOCUS: HARDWARE Editorial Gavin J. Pringle To mark our 15th anniversary, this issue focuses on HPC Jason Crain reports on the biological simulations running platforms at EPCC. The majority of articles are concerned on the new IBM Power5 system, and I report on EPCC’s with the most recent additions to EPCC’s ‘zoo’ of machines, presence at SunHPC in Heidelberg this year. such as the IBM Blue Gene platform, QCDOC and the new FPGA machine, among others. Just before he took early retirement, Prof. Ken Bowler, one of EPCC’s original founders, talked to me about his involvement This zoo has become so large that we have built a new facility with EPCC, supercomputers he has known, and his thoughts to house it: the Advanced Computing Facility (ACF). The ACF on the future. We present a condensed paraphrasing of that was formally opened on the 1st of July by His Royal Highness, conversation. the Duke of Edinburgh, and this event is covered on the opposite page. For the second year running, EPCC had a booth at the International Supercomputing Conference (ISC) in The new FPGA machine was also launched recently. -

Introduction to Parallel Programming

Introduction to parallel programming SULI seminar series June 20, 2019 Stéphane Ethier ([email protected]) Princeton Plasma Physics Lab Why Parallel Computing? Why not run n instances of my code? Isn’t that parallel computing? YES… but • You want to speed up your calculation because it takes a week to run! • Your problem size is too large to fit in the memory of a single node • Want to use those extra cores on your “multicore” processor • Solution: – Split the work between several processor cores so that they can work in parallel – Exchange data between them when needed • How? – Message Passing Interface (MPI) on distributed memory systems (works also on shared memory nodes) – OpenMP directives on shared memory node – and some other methods not as popular (pthreads, Intel TBB, Fortran Co-Arrays) Big Science requires Big Computers 15,312 nodes, 979,968 cores Nov 2018 List of the top supercomputers in the world (www.top500.org) SUMMIT - #1 World Supercomputer (200 PFLOPS) at the Oak Ridge Leadership Computing Facility • 4,600 IBM AC922 nodes • Each node contains: – 2 IBM Power 9 processors (42 cores) – 6 Nvidia V100 Tesla GPUs – 512 GB DDR4 memory for CPUs – 96 GB HBM2 memory for GPUs – 1.6 TB NVMe storage • Power 9 processor – SIMD Multi-Core (21) – 4 hardware threads per core – 32 kB L1 private cache, 512 kB shared L2, 10 MB shared L3 • V100 “Volta” GPU – 7.8 TFLOPS double precision (X 6) • dual-rail EDR Infiniband interconnect between nodes SUMMIT node architecture Interconnect Interconnect Next Next node node Power9 CPU architecture 512 -


Capability Computing: The Newsletter of the HPCx Community [ISSUE 10, AUTUMN 2007]

Capability Computing The newsletter of the HPCx community [ISSUE 10, AUTUMN 2007] HECToR... Now online See page 4. Modelling the ocean with HPCx CONTENTS 2 Sixth DEISA training event 12 Modelling non-adiabatic processes 3 Complementary capability computing and the future of HPCx 15 The MSc in HPC: looking back, looking forward 4 HECToR service is ready 16 Programming, parallelism, petaflops... panic! 5 John Fisher: an appreciation 17 HPC-Europa 7 Single node performance on HPCx Phase 3 18 Service Administration from EPCC (SAFE) 8 High resolution modelling of the northwest European shelf seas using POLCOMS 19 Event reviews 10 Profiling parallel codes on HPCx 20 Forthcoming events Editorial Kenton D’Mellow, EPCC Welcome to the latest edition of Capability Computing, the HPCx includes feature articles on state-of-the-art oceanographic community newsletter. This landmark tenth edition marks five simulations, scientific calculations enabled by novel computational successful years of service, over which the machine itself has taken methods that specifically exploit parallelism, the modelling of many forms. We are now well into Phase 3, and have recently radiation damage in metals, and charge and energy transfer opened a new large jobs queue of 1536 processors. We hope to processes in nanoscale systems. interest several consortia with this prospect. We are also pleased to present technical updates and primers from These are exciting times for the HPC community. In this issue our own Applications Support and Terascaling teams: these include we announce the arrival of HECToR, the next generation of UK single-node performance of the Phase 3 system, a guide to profiling national academic supercomputing service. -

GPU-Accelerated Applications

GPU-ACCELERATED APPLICATIONS Accelerated computing has revolutionized a broad range of industries with over six hundred applications optimized for GPUs to help you accelerate your work. CONTENTS 1 Computational Finance 62 Research: Higher Education and Supercomputing NUMERICAL ANALYTICS 2 Climate, Weather and Ocean Modeling PHYSICS 2 Data Science and Analytics SCIENTIFIC VISUALIZATION 5 Artificial Intelligence 68 Safety and Security DEEP LEARNING AND MACHINE LEARNING 71 Tools and Management 13 Federal, Defense and Intelligence 79 Agriculture 14 Design for Manufacturing/Construction: 79 Business Process Optimization CAD/CAE/CAM CFD (MFG) CFD (RESEARCH DEVELOPMENTS) COMPUTATIONAL STRUCTURAL MECHANICS DESIGN AND VISUALIZATION ELECTRONIC DESIGN AUTOMATION INDUSTRIAL INSPECTION Test Drive the 29 Media and Entertainment ANIMATION, MODELING AND RENDERING World’s Fastest COLOR CORRECTION AND GRAIN MANAGEMENT COMPOSITING, FINISHING AND EFFECTS Accelerator – Free! (VIDEO) EDITING Take the GPU Test Drive, a free and (IMAGE & PHOTO) EDITING easy way to experience accelerated ENCODING AND DIGITAL DISTRIBUTION computing on GPUs. You can run ON-AIR GRAPHICS your own application or try one of ON-SET, REVIEW AND STEREO TOOLS the preloaded ones, all running on a WEATHER GRAPHICS remote cluster. Try it today. www.nvidia.com/gputestdrive 44 Medical Imaging 47 Oil and Gas 48 Life Sciences BIOINFORMATICS MICROSCOPY MOLECULAR DYNAMICS QUANTUM CHEMISTRY (MOLECULAR) VISUALIZATION AND DOCKING Computational Finance APPLICATION NAME COMPANY NAME PRODUCT DESCRIPTION SUPPORTED FEATURES GPU SCALING Accelerated Elsen Secure, accessible, and accelerated • Web-like API with Native bindings for Multi-GPU Computing Engine back-testing, scenario analysis, Python, R, Scala, C Single Node risk analytics and real-time trading • Custom models and data streams designed for easy integration and rapid development.