Building a Spoken Programming Language: Exploiting Program Structure to Disambiguate Utterances

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

DC Console Using DC Console Application Design Software

DC Console Using DC Console Application Design Software DC Console is easy-to-use, application design software developed specifically to work in conjunction with AML’s DC Suite. Create. Distribute. Collect. Every LDX10 handheld computer comes with DC Suite, which includes seven (7) pre-developed applications for common data collection tasks. Now LDX10 users can use DC Console to modify these applications, or create their own from scratch. AML 800.648.4452 Made in USA www.amltd.com Introduction This document briefly covers how to use DC Console and the features and settings. Be sure to read this document in its entirety before attempting to use AML’s DC Console with a DC Suite compatible device. What is the difference between an “App” and a “Suite”? “Apps” are single applications running on the device used to collect and store data. In most cases, multiple apps would be utilized to handle various operations. For example, the ‘Item_Quantity’ app is one of the most widely used apps and the most direct means to take a basic inventory count, it produces a data file showing what items are in stock, the relative quantities, and requires minimal input from the mobile worker(s). Other operations will require additional input, for example, if you also need to know the specific location for each item in inventory, the ‘Item_Lot_Quantity’ app would be a better fit. Apps can be used in a variety of ways and provide the LDX10 the flexibility to handle virtually any data collection operation. “Suite” files are simply collections of individual apps. Suite files allow you to easily manage and edit multiple apps from within a single ‘store-house’ file and provide an effortless means for device deployment. -

Clostridium Difficile Infection: How to Deal with the Problem DH INFORMATION RE ADER B OX

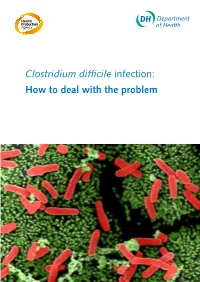

Clostridium difficile infection: How to deal with the problem DH INFORMATION RE ADER B OX Policy Estates HR / Workforce Commissioning Management IM & T Planning / Finance Clinical Social Care / Partnership Working Document Purpose Best Practice Guidance Gateway Reference 9833 Title Clostridium difficile infection: How to deal with the problem Author DH and HPA Publication Date December 2008 Target Audience PCT CEs, NHS Trust CEs, SHA CEs, Care Trust CEs, Medical Directors, Directors of PH, Directors of Nursing, PCT PEC Chairs, NHS Trust Board Chairs, Special HA CEs, Directors of Infection Prevention and Control, Infection Control Teams, Health Protection Units, Chief Pharmacists Circulation List Description This guidance outlines newer evidence and approaches to delivering good infection control and environmental hygiene. It updates the 1994 guidance and takes into account a national framework for clinical governance which did not exist in 1994. Cross Ref N/A Superseded Docs Clostridium difficile Infection Prevention and Management (1994) Action Required CEs to consider with DIPCs and other colleagues Timing N/A Contact Details Healthcare Associated Infection and Antimicrobial Resistance Department of Health Room 528, Wellington House 133-155 Waterloo Road London SE1 8UG For Recipient's Use Front cover image: Clostridium difficile attached to intestinal cells. Reproduced courtesy of Dr Jan Hobot, Cardiff University School of Medicine. Clostridium difficile infection: How to deal with the problem Contents Foreword 1 Scope and purpose 2 Introduction 3 Why did CDI increase? 4 Approach to compiling the guidance 6 What is new in this guidance? 7 Core Guidance Key recommendations 9 Grading of recommendations 11 Summary of healthcare recommendations 12 1. -

Unix (And Linux)

AWK....................................................................................................................................4 BC .....................................................................................................................................11 CHGRP .............................................................................................................................16 CHMOD.............................................................................................................................19 CHOWN ............................................................................................................................26 CP .....................................................................................................................................29 CRON................................................................................................................................34 CSH...................................................................................................................................36 CUT...................................................................................................................................71 DATE ................................................................................................................................75 DF .....................................................................................................................................79 DIFF ..................................................................................................................................84 -

5 Command Line Functions by Barbara C

ADAPS: Chapter 5. Command Line Functions 5 Command Line Functions by Barbara C. Hoopes and James F. Cornwall This chapter describes ADAPS command line functions. These are functions that are executed from the UNIX command line instead of from ADAPS menus, and that may be run manually or by automated means such as “cron” jobs. Most of these functions are NOT accessible from the ADAPS menus. These command line functions are described in detail below. 5.1 Hydra Although Hydra is available from ADAPS at the PR sub-menu, Edit Time Series Data using Hydra (TS_EDIT), it can also be started from the command line. However, to start Hydra outside of ADAPS, a DV or UV RDB file needs to be available to edit. The command is “hydra rdb_file_name.” For a complete description of using Hydra, refer to Section 4.5.2 Edit Time-Series Data using Hydra (TS_EDIT). 5.2 nwrt2rdb This command is used to output rating information in RDB format. It writes RDB files with a table containing the rating equation parameters or the rating point pairs, with all other information contained in the RDB comments. The following arguments can be used with this command: nwrt2rdb -ooutfile -zdbnum -aagency -nstation -dddid -trating_type -irating_id -e (indicates to output ratings in expanded form; it is ignored for equation ratings.) -l loctzcd (time zone code or local time code "LOC") -m (indicates multiple output files.) -r (rounding suppression) Rules • If -o is omitted, nwrt2rdb writes to stdout; AND arguments -n, -d, -t, and -i must be present. • If -o is present, no other arguments are required, and the program will use ADAPS routines to prompt for them. -

An Attribute- Grammar Compiling System Based on Yacc, Lex, and C Kurt M

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Digital Repository @ Iowa State University Computer Science Technical Reports Computer Science 12-1992 Design, Implementation, Use, and Evaluation of Ox: An Attribute- Grammar Compiling System based on Yacc, Lex, and C Kurt M. Bischoff Iowa State University Follow this and additional works at: http://lib.dr.iastate.edu/cs_techreports Part of the Programming Languages and Compilers Commons Recommended Citation Bischoff, Kurt M., "Design, Implementation, Use, and Evaluation of Ox: An Attribute- Grammar Compiling System based on Yacc, Lex, and C" (1992). Computer Science Technical Reports. 23. http://lib.dr.iastate.edu/cs_techreports/23 This Article is brought to you for free and open access by the Computer Science at Iowa State University Digital Repository. It has been accepted for inclusion in Computer Science Technical Reports by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Design, Implementation, Use, and Evaluation of Ox: An Attribute- Grammar Compiling System based on Yacc, Lex, and C Abstract Ox generalizes the function of Yacc in the way that attribute grammars generalize context-free grammars. Ordinary Yacc and Lex specifications may be augmented with definitions of synthesized and inherited attributes written in C syntax. From these specifications, Ox generates a program that builds and decorates attributed parse trees. Ox accepts a most general class of attribute grammars. The user may specify postdecoration traversals for easy ordering of side effects such as code generation. Ox handles the tedious and error-prone details of writing code for parse-tree management, so its use eases problems of security and maintainability associated with that aspect of translator development. -

Unix Programmer's Manual

There is no warranty of merchantability nor any warranty of fitness for a particu!ar purpose nor any other warranty, either expressed or imp!ied, a’s to the accuracy of the enclosed m~=:crials or a~ Io ~helr ,~.ui~::~::.j!it’/ for ~ny p~rficu~ar pur~.~o~e. ~".-~--, ....-.re: " n~ I T~ ~hone Laaorator es 8ssumg$ no rO, p::::nS,-,,.:~:y ~or their use by the recipient. Furln=,, [: ’ La:::.c:,:e?o:,os ~:’urnes no ob~ja~tjon ~o furnish 6ny a~o,~,,..n~e at ~ny k:nd v,,hetsoever, or to furnish any additional jnformstjcn or documenta’tjon. UNIX PROGRAMMER’S MANUAL F~ifth ~ K. Thompson D. M. Ritchie June, 1974 Copyright:.©d972, 1973, 1974 Bell Telephone:Laboratories, Incorporated Copyright © 1972, 1973, 1974 Bell Telephone Laboratories, Incorporated This manual was set by a Graphic Systems photo- typesetter driven by the troff formatting program operating under the UNIX system. The text of the manual was prepared using the ed text editor. PREFACE to the Fifth Edition . The number of UNIX installations is now above 50, and many more are expected. None of these has exactly the same complement of hardware or software. Therefore, at any particular installa- tion, it is quite possible that this manual will give inappropriate information. The authors are grateful to L. L. Cherry, L. A. Dimino, R. C. Haight, S. C. Johnson, B. W. Ker- nighan, M. E. Lesk, and E. N. Pinson for their contributions to the system software, and to L. E. McMahon for software and for his contributions to this manual. -

Standard TECO (Text Editor and Corrector)

Standard TECO TextEditor and Corrector for the VAX, PDP-11, PDP-10, and PDP-8 May 1990 This manual was updated for the online version only in May 1990. User’s Guide and Language Reference Manual TECO-32 Version 40 TECO-11 Version 40 TECO-10 Version 3 TECO-8 Version 7 This manual describes the TECO Text Editor and COrrector. It includes a description for the novice user and an in-depth discussion of all available commands for more advanced users. General permission to copy or modify, but not for profit, is hereby granted, provided that the copyright notice is included and reference made to the fact that reproduction privileges were granted by the TECO SIG. © Digital Equipment Corporation 1979, 1985, 1990 TECO SIG. All Rights Reserved. This document was prepared using DECdocument, Version 3.3-1b. Contents Preface ............................................................ xvii Introduction ........................................................ xix Preface to the May 1985 edition ...................................... xxiii Preface to the May 1990 edition ...................................... xxv 1 Basics of TECO 1.1 Using TECO ................................................ 1–1 1.2 Data Structure Fundamentals . ................................ 1–2 1.3 File Selection Commands ...................................... 1–3 1.3.1 Simplified File Selection .................................... 1–3 1.3.2 Input File Specification (ER command) . ....................... 1–4 1.3.3 Output File Specification (EW command) ...................... 1–4 1.3.4 Closing Files (EX command) ................................ 1–5 1.4 Input and Output Commands . ................................ 1–5 1.5 Pointer Positioning Commands . ................................ 1–5 1.6 Type-Out Commands . ........................................ 1–6 1.6.1 Immediate Inspection Commands [not in TECO-10] .............. 1–7 1.7 Text Modification Commands . ................................ 
1–7 1.8 Search Commands . -

Young Adult Conservation Corps Program (YACC)

• • . UEST. F FJECOR POSITION UTHOITY LE"VE BLAt.1< (See Instructions on reverse) JOB NO TO GENERAL SERVICES ADMINISTRATION, NATIONAL ARCHIVES AND RECORDS SERVICE, WASHINGTON, DC 20408 1. FROM (AGENCY OR ESTABLISHMENT) US Department of A riculture 2. MAJOR SUBDIVISION Forest Service 3. MINOR SUBDIVISION Administration (Human Resource Pro rams) 4. NAME OF PERSON WITH WHOM TO CONFER 5. TEL EXT -J.R-Rk Norina G. Mosby 47-6101 0111e 6. CERTIFICATE OF AGENCY REPRESENTATIVE I hereby certify that I am authorized to act for this agency in matters pertaining to the disposal of the agency's records; that the records proposed for disposal in this Request of 5 page(s) are not now needed for the business of this agency or will not be needed after the retention periods specified. D A Request for immediate disposal. [XI B Request for disposal after a specified period of time or request for permanent retention. C. DATE D.SIGNATURE OF AGENCY REPRESENTATIVE E. TITLE Group Leader, Records Management 9. 7. 8. E CRIPTION OF ITEM 10. SAMPLE OR ITEM NO (Withlnclu e Dates or Retention Periods) ACTION TAKEN JOB NO. This retention and disposal! schedule covens 1870 Series of records relative to Forest Service participation in the Young Adult Conservation Corps Program (YACC). The objec tive of the program is to provide up to one year of labor intensive employment for youth 16-23 working in conservatio type projects. The YACC program is administered jointly by the Departments of Labor, Interior, and Agriculture (Forest Service). The Departments of Agriculture and Interior are responsible for the administration of their own programs within the pol ices and procedures described in the Intradepartmental Young Adult Conservation Corps Memorandum of Understanding. -

Programming Guide DP800 Series Programmable Linear DC Power

RIGOL Programming Guide DP800 Series Programmable Linear DC Power Supply Dec. 2015 RIGOL TECHNOLOGIES, INC. RIGOL Guaranty and Declaration Copyright © 2013 RIGOL TECHNOLOGIES, INC. All Rights Reserved. Trademark Information RIGOL is a registered trademark of RIGOL TECHNOLOGIES, INC. Publication Number PGH03108-1110 Software Version 00.01.14 Software upgrade might change or add product features. Please acquire the latest version of the manual from RIGOL website or contact RIGOL to upgrade the software. Notices RIGOL products are covered by P.R.C. and foreign patents, issued and pending. RIGOL reserves the right to modify or change parts of or all the specifications and pricing policies at the company’s sole decision. Information in this publication replaces all previously released materials. Information in this publication is subject to change without notice. RIGOL shall not be liable for either incidental or consequential losses in connection with the furnishing, use, or performance of this manual, as well as any information contained. Any part of this document is forbidden to be copied, photocopied, or rearranged without prior written approval of RIGOL. Product Certification RIGOL guarantees that this product conforms to the national and industrial standards in China as well as the ISO9001:2008 standard and the ISO14001:2004 standard. Other international standard conformance certifications are in progress. Contact Us If you have any problem or requirement when using our products or this manual, please contact RIGOL. E-mail: [email protected] Website: www.rigol.com DP800 Programming Guide I RIGOL Document Overview This manual introduces how to program the power supply over remote interfaces in details. -

DC DMV Communication Related to Reinstating Suspended Driver Licenses and Driving Privileges (As of December 10, 2018)

DC DMV Communication Related to Reinstating Suspended Driver Licenses and Driving Privileges (As of December 10, 2018) In accordance with District Law L22-0175, Traffic and Parking Ticket Penalty Amendment Act of 2017, the DC Department of Motor Vehicles (DC DMV) has reinstated driver licenses and driving privileges for residents and non-residents whose credential was suspended for one of the following reasons: • Failure to pay a moving violation; • Failure to pay a moving violation after being found liable at a hearing; or • Failure to appear for a hearing on a moving violation. DC DMV is mailing notification letters to residents and non-residents affected by the law. District residents who have their driver license or learner permit, including commercial driver license (CDL), reinstated and have outstanding tickets are boot eligible if they have two or more outstanding tickets. If a District resident has an unpaid moving violation in a different jurisdiction, then his or her driving privileges may still be suspended in that jurisdiction until the moving violation is paid. If the resident’s driver license or CDL is not REAL ID compliant (i.e., there is a black star in the upper right-hand corner) and expired, then to renew the credential, the resident will need to provide DC DMV with: • One proof of identity; • One proof of Social Security Number; and • Two proofs of DC residency. If the resident has a name change, then additional documentation, such as a marriage license, divorce order, or name change court order is required. DC DMV only accepts the documents listed on its website at www.dmv.dc.gov. -

Clostridioides Difficile Infection in Adults and Children

Guidelines for Clinical Care Quality Department Clostridioides difficile Infection Guideline Team Clostridioides difficile Infection Team Leads in Adults and Children Tejal N Gandhi MD Infectious Diseases Patient population: Adults and children with a primary or recurrent episode of Clostridioides Krishna Rao, MD difficile infection (CDI). Infectious Diseases Team Members Objectives: Gregory Eschenauer, PharmD 1. Provide a brief overview of the epidemiology of, and risk factors for development Pharmacy of CDI. John Y Kao, MD 2. Provide guidance regarding which patients should be tested for CDI, summarize merits and Gastroenterology limitations of available diagnostic tests, and describe the optimal approach to laboratory diagnosis. Lena M Napolitano, MD 3. Review the most effective treatment strategies for patients with CDI including patients with Surgery recurrences or complications. F Jacob Seagull, PhD Leaning Heath Sciences Key Points for Adult Patients: David M Somand, MD Emergency Medicine Diagnosis Alison C Tribble, MD Definitive diagnosis of CDI requires either the presence of toxigenic C. difficile in stool with Pediatric Infectious Diseases compatible symptoms, or clinical evidence of pseudomembranous colitis (Table 2, Figure 4). Amanda M Valyko, MPH, CIC Once identified, CDI should be classified according to severity (Table 3). Infection Control Although risk factors for CDI (Table 1) should guide suspicion for CDI, testing should be ordered Michael E Watson Jr, MD, only when indicated (Figure 1). Use judgment and consider not testing in patients that have PhD recently started tube feeds, are taking a laxative medication, or have recently received oral Pediatric Infectious Diseases radiologic contrast material. [IC] Consultants: Choice of test should be guided by a multi-step algorithm for the rapid diagnosis of CDI (Figure 2). -

Should I Tell My GP That I Had a C. Difficile Infection in Hospital?

How will I know if the C. difficile What should I do when I am has gone? discharged home from hospital? When your normal bowel habit returns, it is Normal procedures of routine hand washing considered the infection has gone. and cleaning of the home environment are all that are needed. There is no need for a follow-up test. Wash hands with soap and water, especially after using the toilet and before handling Clostridium difficile is one of the many Should I tell my GP that I had a food. bacteria (germs) that are found in the C. difficile infection in hospital? Clean surfaces in bathrooms, kitchens bowel and can live there harmlessly. and other areas on a regular basis with Your GP should have been informed about household detergent. About three per cent of healthy adults your C. difficile infection in your discharge letter. in the community carries C. difficile in their bowel. However, if you visit your GP after discharge Will other family members/guests from hospital, you should tell him/her that you Sometimes having antibiotics can had a C. difficile infection. in my home by affected? affect the normal balance of bacteria in People in good health do not usually get a the bowel. If this happens, C. difficile C. difficile infection so friends and relatives may have a chance to multiply and What if the diarrhoea returns? can visit. C. difficile does not present a risk cause symptoms (infection). to your pets. Symptoms of C. difficile diarrhoea should The information in this leaflet will help have improved significantly, if not completely patients who have had a C.