A Programming Language for a New Multidisciplinary Oceanographic Float

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

DC Console Using DC Console Application Design Software

DC Console Using DC Console Application Design Software DC Console is easy-to-use, application design software developed specifically to work in conjunction with AML’s DC Suite. Create. Distribute. Collect. Every LDX10 handheld computer comes with DC Suite, which includes seven (7) pre-developed applications for common data collection tasks. Now LDX10 users can use DC Console to modify these applications, or create their own from scratch. AML 800.648.4452 Made in USA www.amltd.com Introduction This document briefly covers how to use DC Console and the features and settings. Be sure to read this document in its entirety before attempting to use AML’s DC Console with a DC Suite compatible device. What is the difference between an “App” and a “Suite”? “Apps” are single applications running on the device used to collect and store data. In most cases, multiple apps would be utilized to handle various operations. For example, the ‘Item_Quantity’ app is one of the most widely used apps and the most direct means to take a basic inventory count, it produces a data file showing what items are in stock, the relative quantities, and requires minimal input from the mobile worker(s). Other operations will require additional input, for example, if you also need to know the specific location for each item in inventory, the ‘Item_Lot_Quantity’ app would be a better fit. Apps can be used in a variety of ways and provide the LDX10 the flexibility to handle virtually any data collection operation. “Suite” files are simply collections of individual apps. Suite files allow you to easily manage and edit multiple apps from within a single ‘store-house’ file and provide an effortless means for device deployment. -

Dot at Command Firmware Release 4.0.0 Release 3.3.5

Product Change Notification, Software Release Notes. Dot AT Command Firmware Release 4.0.0, Release 3.3.5. MultiTech xDot®, MultiTech mDot™. Date: March 30, 2021. Product Change Notification (PCN) Number: PCN 03302021-001 (Dot). Contents: I. Overview; II. Suggested Action Plan; III. Release 4.0.0 Overview; IV. Release 3.3.5 Overview; V. Schedule; VI. Flashing mDot Firmware; VII. Part Numbers Impacted; VIII. Mbed OS Overview; IX. xDot Overview; X. mDot Overview; XI. xDot Micro Developer Kit; XII. Additional Information. Attachments: Identifying Firmware Version; Identifying Date of Manufacture; mPower & mLinux Software. I. Overview: MultiTech announces the availability of new AT Command Firmware for use on MultiTech® Long Range LoRa® Modules: MultiTech xDot® (MTXDOT Series) and MultiTech mDot™ (MTDOT Series). New versions are currently available for download and evaluation: Release 4.0.0 (shipping in June 2021) and Release 3.3.5. Release 4.0.0 will be released into standard MultiTech product starting in June 2021. Currently, product ships with Release 3.2.1. II. Suggested Action Plan: Customers: Review the information in this PCN and forward to others within your organization who are actively involved with the development of IoT applications using the MultiTech xDot and MultiTech mDot. Consider downloading the firmware available on MultiTech or Mbed websites to check compatibility with existing deployments. Review the release schedule for the upcoming firmware release and understand the effect on your manufacturing and deployment schedules. Distributors: Forward this announcement to others within your organization who are actively involved in the sale or support of LoRa-enabled sensors. (PCN 03302021-001 (Dot), Page 1 of 12, https://support.multitech.com)

Dell EMC Powerstore CLI Guide

Dell EMC PowerStore CLI Guide May 2020 Rev. A01 Notes, cautions, and warnings NOTE: A NOTE indicates important information that helps you make better use of your product. CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. WARNING: A WARNING indicates a potential for property damage, personal injury, or death. © 2020 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. Contents: Additional Resources. Chapter 1: Introduction: Overview; Use PowerStore CLI in scripts; Set up the PowerStore CLI client; Install the PowerStore CLI client.

Shell Variables

Shell: Using the command line. Orna Agmon, ladypine at vipe.technion.ac.il, Haifux. Shell – p. 1/55. TOC: Various shells; Customizing the shell; getting help and information; Combining simple and useful commands; output redirection; lists of commands; job control; environment variables; Remote shell; textual editors; textual clients; references. What is the shell? The shell is the wrapper around the system: a communication means between the user and the system. The shell is the manner in which the user can interact with the system through the terminal. The shell is also a script interpreter. The simplest script is a bunch of shell commands. Shell scripts are used in order to boot the system. The user can also write and execute shell scripts. Shell - which shell? There are several kinds of shells. For example, bash (Bourne Again Shell), csh, tcsh, zsh, ksh (Korn Shell). The most important shell is bash, since it is available on almost every free Unix system. The Linux system scripts use bash. The default shell for the user is set in the /etc/passwd file. Here is a line out of this file for example: dana:x:500:500:Dana,,,:/home/dana:/bin/bash This line means that user dana uses bash (located on the system at /bin/bash) as her default shell. Starting to work in another shell: If Dana wishes to temporarily use another shell, she can simply call this shell from the command line: [dana@granada ~]$ bash dana@granada:~$ #In bash now dana@granada:~$ exit [dana@granada ~]$ bash dana@granada:~$ #In bash now, going to hit ctrl D dana@granada:~$ exit [dana@granada ~]$ #In original shell now
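The /etc/passwd line quoted in the shell excerpt above has seven colon-separated fields, the last of which is the login shell. As a minimal sketch (the helper name `login_shell` is hypothetical and not part of the slides), the field can be extracted like this:

```python
def login_shell(passwd_line: str) -> str:
    """Return the login shell (7th field) from one /etc/passwd-style line."""
    fields = passwd_line.strip().split(":")
    # A passwd entry has exactly seven fields:
    # name, password, UID, GID, GECOS, home directory, shell.
    if len(fields) != 7:
        raise ValueError(f"expected 7 colon-separated fields, got {len(fields)}")
    return fields[6]


if __name__ == "__main__":
    # The example line from the excerpt.
    line = "dana:x:500:500:Dana,,,:/home/dana:/bin/bash"
    print(login_shell(line))
```

On a real system the standard-library `pwd` module would normally be preferable to parsing the file by hand; the sketch only illustrates the field layout described in the excerpt.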

Clostridium Difficile Infection: How to Deal with the Problem DH INFORMATION RE ADER B OX

Clostridium difficile infection: How to deal with the problem DH INFORMATION RE ADER B OX Policy Estates HR / Workforce Commissioning Management IM & T Planning / Finance Clinical Social Care / Partnership Working Document Purpose Best Practice Guidance Gateway Reference 9833 Title Clostridium difficile infection: How to deal with the problem Author DH and HPA Publication Date December 2008 Target Audience PCT CEs, NHS Trust CEs, SHA CEs, Care Trust CEs, Medical Directors, Directors of PH, Directors of Nursing, PCT PEC Chairs, NHS Trust Board Chairs, Special HA CEs, Directors of Infection Prevention and Control, Infection Control Teams, Health Protection Units, Chief Pharmacists Circulation List Description This guidance outlines newer evidence and approaches to delivering good infection control and environmental hygiene. It updates the 1994 guidance and takes into account a national framework for clinical governance which did not exist in 1994. Cross Ref N/A Superseded Docs Clostridium difficile Infection Prevention and Management (1994) Action Required CEs to consider with DIPCs and other colleagues Timing N/A Contact Details Healthcare Associated Infection and Antimicrobial Resistance Department of Health Room 528, Wellington House 133-155 Waterloo Road London SE1 8UG For Recipient's Use Front cover image: Clostridium difficile attached to intestinal cells. Reproduced courtesy of Dr Jan Hobot, Cardiff University School of Medicine. Clostridium difficile infection: How to deal with the problem Contents Foreword 1 Scope and purpose 2 Introduction 3 Why did CDI increase? 4 Approach to compiling the guidance 6 What is new in this guidance? 7 Core Guidance Key recommendations 9 Grading of recommendations 11 Summary of healthcare recommendations 12 1. -

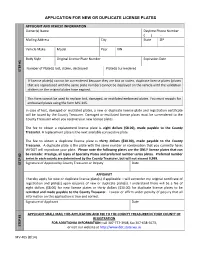

Application for New Or Duplicate License Plates

APPLICATION FOR NEW OR DUPLICATE LICENSE PLATES APPLICANT AND VEHICLE INFORMATION Owner(s) Name Daytime Phone Number ( ) - Mailing Address City State ZIP Vehicle Make Model Year VIN Body Style Original License Plate Number Expiration Date Number of Plate(s) lost, stolen, destroyed Plate(s) Surrendered STEP STEP #1 If license plate(s) cannot be surrendered because they are lost or stolen, duplicate license plates (plates that are reproduced with the same plate number) cannot be displayed on the vehicle until the validation stickers on the original plates have expired. This form cannot be used to replace lost, damaged, or mutilated embossed plates. You must reapply for embossed plates using the form MV-145. In case of lost, damaged or mutilated plates, a new or duplicate license plate and registration certificate will be issued by the County Treasurer. Damaged or mutilated license plates must be surrendered to the County Treasurer when you receive your new license plates. The fee to obtain a replacement license plate is eight dollars ($8.00), made payable to the County Treasurer. A replacement plate is the next available consecutive plate. The fee to obtain a duplicate license plate is thirty dollars ($30.00), made payable to the County Treasurer. A duplicate plate is the plate with the same number or combination that you currently have; WYDOT will reproduce your plate. Please note the following plates are the ONLY license plates that can be remade: Prestige, all types of Specialty Plates and preferred number series plates. Preferred number series in each county are determined by the County Treasurer, but will not exceed 9,999. -

Unix (And Linux)

Contents: AWK, BC, CHGRP, CHMOD, CHOWN, CP, CRON, CSH, CUT, DATE, DF, DIFF

5 Command Line Functions by Barbara C

ADAPS: Chapter 5. Command Line Functions 5 Command Line Functions by Barbara C. Hoopes and James F. Cornwall This chapter describes ADAPS command line functions. These are functions that are executed from the UNIX command line instead of from ADAPS menus, and that may be run manually or by automated means such as “cron” jobs. Most of these functions are NOT accessible from the ADAPS menus. These command line functions are described in detail below. 5.1 Hydra Although Hydra is available from ADAPS at the PR sub-menu, Edit Time Series Data using Hydra (TS_EDIT), it can also be started from the command line. However, to start Hydra outside of ADAPS, a DV or UV RDB file needs to be available to edit. The command is “hydra rdb_file_name.” For a complete description of using Hydra, refer to Section 4.5.2 Edit Time-Series Data using Hydra (TS_EDIT). 5.2 nwrt2rdb This command is used to output rating information in RDB format. It writes RDB files with a table containing the rating equation parameters or the rating point pairs, with all other information contained in the RDB comments. The following arguments can be used with this command: nwrt2rdb -ooutfile -zdbnum -aagency -nstation -dddid -trating_type -irating_id -e (indicates to output ratings in expanded form; it is ignored for equation ratings.) -l loctzcd (time zone code or local time code "LOC") -m (indicates multiple output files.) -r (rounding suppression) Rules • If -o is omitted, nwrt2rdb writes to stdout; AND arguments -n, -d, -t, and -i must be present. • If -o is present, no other arguments are required, and the program will use ADAPS routines to prompt for them. -
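The two nwrt2rdb rules quoted in the excerpt above can be sketched as a small pre-flight check. This is an illustration only: `missing_arguments` is a hypothetical helper, not part of ADAPS, and it assumes each flag is a single letter with its value attached directly (as in `-ooutfile`).

```python
# Flags the excerpt says must be present when -o is omitted.
REQUIRED_WITHOUT_O = ("-n", "-d", "-t", "-i")


def missing_arguments(args):
    """Return the flags that the quoted rules require but that are absent."""
    # Flags are written with the value attached (e.g. "-ooutfile"),
    # so the first two characters identify the flag.
    flags = {a[:2] for a in args if a.startswith("-")}
    if "-o" in flags:
        # With -o present, no other arguments are required;
        # per the excerpt, the program prompts for them.
        return []
    return [f for f in REQUIRED_WITHOUT_O if f not in flags]


if __name__ == "__main__":
    # Placeholder values; real station and rating identifiers are site-specific.
    print(missing_arguments(["-nstation", "-trating_type"]))
    print(missing_arguments(["-ooutfile"]))
```

The check only covers the two rules stated in the excerpt; it does not validate the values themselves or the optional -e, -l, -m, and -r flags.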

An Attribute- Grammar Compiling System Based on Yacc, Lex, and C Kurt M

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Digital Repository @ Iowa State University Computer Science Technical Reports Computer Science 12-1992 Design, Implementation, Use, and Evaluation of Ox: An Attribute- Grammar Compiling System based on Yacc, Lex, and C Kurt M. Bischoff Iowa State University Follow this and additional works at: http://lib.dr.iastate.edu/cs_techreports Part of the Programming Languages and Compilers Commons Recommended Citation Bischoff, Kurt M., "Design, Implementation, Use, and Evaluation of Ox: An Attribute- Grammar Compiling System based on Yacc, Lex, and C" (1992). Computer Science Technical Reports. 23. http://lib.dr.iastate.edu/cs_techreports/23 This Article is brought to you for free and open access by the Computer Science at Iowa State University Digital Repository. It has been accepted for inclusion in Computer Science Technical Reports by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Design, Implementation, Use, and Evaluation of Ox: An Attribute- Grammar Compiling System based on Yacc, Lex, and C Abstract Ox generalizes the function of Yacc in the way that attribute grammars generalize context-free grammars. Ordinary Yacc and Lex specifications may be augmented with definitions of synthesized and inherited attributes written in C syntax. From these specifications, Ox generates a program that builds and decorates attributed parse trees. Ox accepts a most general class of attribute grammars. The user may specify postdecoration traversals for easy ordering of side effects such as code generation. Ox handles the tedious and error-prone details of writing code for parse-tree management, so its use eases problems of security and maintainability associated with that aspect of translator development. -

DOT Series at Command Reference Guide DOT SERIES at COMMAND GUIDE

DOT Series AT Command Reference Guide DOT SERIES AT COMMAND GUIDE DOT Series AT Command Guide Models: MTDOT-915-xxx, MTDOT-868-xxx, MTXDOT-915-xx, MTXDOT-898-xx, Part Number: S000643, Version 2.2 Copyright This publication may not be reproduced, in whole or in part, without the specific and express prior written permission signed by an executive officer of Multi-Tech Systems, Inc. All rights reserved. Copyright © 2016 by Multi-Tech Systems, Inc. Multi-Tech Systems, Inc. makes no representations or warranties, whether express, implied or by estoppels, with respect to the content, information, material and recommendations herein and specifically disclaims any implied warranties of merchantability, fitness for any particular purpose and non- infringement. Multi-Tech Systems, Inc. reserves the right to revise this publication and to make changes from time to time in the content hereof without obligation of Multi-Tech Systems, Inc. to notify any person or organization of such revisions or changes. Trademarks and Registered Trademarks MultiTech, and the MultiTech logo, and MultiConnect are registered trademarks and mDot, xDot, and Conduit are a trademark of Multi-Tech Systems, Inc. All other products and technologies are the trademarks or registered trademarks of their respective holders. Legal Notices The MultiTech products are not designed, manufactured or intended for use, and should not be used, or sold or re-sold for use, in connection with applications requiring fail-safe performance or in applications where the failure of the products would reasonably be expected to result in personal injury or death, significant property damage, or serious physical or environmental damage. -

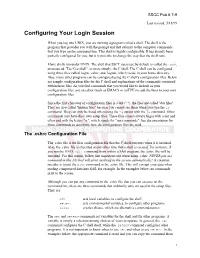

Configuring Your Login Session

SSCC Pub.# 7-9 Last revised: 5/18/99 Configuring Your Login Session When you log into UNIX, you are running a program called a shell. The shell is the program that provides you with the prompt and that submits to the computer commands that you type on the command line. This shell is highly configurable. It has already been partially configured for you, but it is possible to change the way that the shell runs. Many shells run under UNIX. The shell that SSCC users use by default is called the tcsh, pronounced "Tee-Cee-shell", or more simply, the C shell. The C shell can be configured using three files called .login, .cshrc, and .logout, which reside in your home directory. Also, many other programs can be configured using the C shell's configuration files. Below are sample configuration files for the C shell and explanations of the commands contained within these files. As you find commands that you would like to include in your configuration files, use an editor (such as EMACS or nuTPU) to add the lines to your own configuration files. Since the first character of configuration files is a dot ("."), the files are called "dot files". They are also called "hidden files" because you cannot see them when you type the ls command. They can only be listed when using the -a option with the ls command. Other commands may have their own setup files. These files almost always begin with a dot and often end with the letters "rc", which stands for "run commands". -

2017 Noise Requirements

DEPARTMENT OF TRANSPORTATION Noise Requirements for MnDOT and other Type I Federal-aid Projects Satisfies FHWA requirements outlined in 23 CFR 772 Effective Date: July 10, 2017 Website address where additional information can be found or inquiries sent: http://www.dot.state.mn. u /environment/noise/index.html To request this document in an alternative format, contact Janet Miller at 651-366-4720 or 1-800-657-3774 (Greater Minnesota); 711 or 1-800-627-3529 (Minnesota Relay). You may also send an e-mail to [email protected]. (Please request at least one week in advance.) MnDOT Noise Requirements: Effective Date July 10, 2017 This document contains the Minnesota Department of Transportation Noise Requirements (hereafter referred to as 'REQUIREMENTS'), which describe the implementation of the requirements set forth by the Federal Highway Administration Title 23 Code of Federal Regulations Part 772: Procedures for Abatement of Highway Traffic Noise and Construction Noise. These REQUIREMENTS also describe the implementation of the requirements set forth by Minnesota Statute 116.07 Subd. 2a: Exemptions from standards, and Minnesota Rule 7030: Noise Pollution Control. These REQUIREMENTS were developed by the Minnesota Department of Transportation and reviewed and approved by the Federal Highway Administration. Charles A. Zelle, Commissioner, Minnesota Department of Transportation. Arlene Kocher, Division Administrator, Minnesota Division, Federal Highway Administration. MnDOT Noise Requirements: Effective