Numerical Solution of Ordinary Differential Equations

Recommended publications

Introduction to Differential Equations

Introduction to Differential Equations. Lecture notes for MATH 2351/2352. Jeffrey R. Chasnov, The Hong Kong University of Science and Technology, Department of Mathematics, Clear Water Bay, Kowloon, Hong Kong. Copyright © 2009–2016 by Jeffrey Robert Chasnov. This work is licensed under the Creative Commons Attribution 3.0 Hong Kong License. To view a copy of this license, visit http://creativecommons.org/licenses/by/3.0/hk/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Preface. What follows are my lecture notes for a first course in differential equations, taught at the Hong Kong University of Science and Technology. Included in these notes are links to short tutorial videos posted on YouTube. Much of the material of Chapters 2-6 and 8 has been adapted from the widely used textbook “Elementary differential equations and boundary value problems” by Boyce & DiPrima (John Wiley & Sons, Inc., Seventh Edition, © 2001). Many of the examples presented in these notes may be found in this book. The material of Chapter 7 is adapted from the textbook “Nonlinear dynamics and chaos” by Steven H. Strogatz (Perseus Publishing, © 1994). All web surfers are welcome to download these notes, watch the YouTube videos, and to use the notes and videos freely for teaching and learning. An associated free review book with links to YouTube videos is also available from the ebook publisher bookboon.com. I welcome any comments, suggestions or corrections sent by email to [email protected].

3 Runge-Kutta Methods

3 Runge-Kutta Methods. In contrast to the multistep methods of the previous section, Runge-Kutta methods are single-step methods, albeit with multiple stages per step. They are motivated by the dependence of the Taylor methods on the specific IVP. These new methods do not require derivatives of the right-hand side function f in the code, and are therefore general-purpose initial value problem solvers. Runge-Kutta methods are among the most popular ODE solvers. They were first studied by Carl Runge and Martin Kutta around 1900. Modern developments are mostly due to John Butcher in the 1960s.

3.1 Second-Order Runge-Kutta Methods. As always we consider the general first-order ODE system

$$y'(t) = f(t, y(t)). \qquad (42)$$

Since we want to construct a second-order method, we start with the Taylor expansion

$$y(t + h) = y(t) + h\,y'(t) + \frac{h^2}{2}\,y''(t) + O(h^3).$$

The first derivative can be replaced by the right-hand side of the differential equation (42), and the second derivative is obtained by differentiating (42), i.e.,

$$y''(t) = f_t(t, y) + f_y(t, y)\,y'(t) = f_t(t, y) + f_y(t, y)\,f(t, y),$$

with Jacobian $f_y$. We will from now on neglect the dependence of y on t when it appears as an argument to f. Therefore, the Taylor expansion becomes

$$y(t + h) = y(t) + h f(t, y) + \frac{h^2}{2}\left[f_t(t, y) + f_y(t, y) f(t, y)\right] + O(h^3)$$

$$\phantom{y(t + h)} = y(t) + \frac{h}{2} f(t, y) + \frac{h}{2}\left[f(t, y) + h f_t(t, y) + h f_y(t, y) f(t, y)\right] + O(h^3). \qquad (43)$$
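To see why the Taylor approach depends on the specific IVP, it may help to code the second-order Taylor step directly. The sketch below is only an illustration under stated assumptions: the function names `taylor2_step` and `solve_taylor2` and the test problem y' = y, y(0) = 1 are hypothetical choices, not part of the excerpt. Note that the caller has to supply the partial derivatives `f_t` and `f_y` by hand, which is exactly the requirement Runge-Kutta methods avoid.

```python
import math

def taylor2_step(f, f_t, f_y, t, y, h):
    """One step of the second-order Taylor method for y' = f(t, y)."""
    slope = f(t, y)
    # y'' = f_t + f_y * f, obtained by differentiating the ODE once
    second = f_t(t, y) + f_y(t, y) * slope
    return y + h * slope + 0.5 * h * h * second

def solve_taylor2(f, f_t, f_y, t0, y0, t_end, n_steps):
    """Advance from t0 to t_end with n_steps equal Taylor steps."""
    h = (t_end - t0) / n_steps
    t, y = t0, y0
    for _ in range(n_steps):
        y = taylor2_step(f, f_t, f_y, t, y, h)
        t += h
    return y

# Assumed test problem (not from the excerpt): y' = y, y(0) = 1, exact y(1) = e.
f = lambda t, y: y
f_t = lambda t, y: 0.0   # partial derivative of f with respect to t
f_y = lambda t, y: 1.0   # partial derivative of f with respect to y

err_coarse = abs(solve_taylor2(f, f_t, f_y, 0.0, 1.0, 1.0, 50) - math.e)
err_fine = abs(solve_taylor2(f, f_t, f_y, 0.0, 1.0, 1.0, 100) - math.e)
ratio = err_coarse / err_fine  # close to 4 for a second-order method
```

Halving the step size should cut the error by roughly a factor of four, which is the practical signature of the $O(h^2)$ global accuracy implied by the expansion.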

Runge-Kutta Scheme Takes the Form $k_1 = h f(t_n, y_n)$; $k_2 = h f(t_n + \alpha h, y_n + \beta k_1)$; $y_{n+1} = y_n + a_1 k_1 + a_2 k_2$ (5.11)

5.3.1 Modified Euler Method. Numerical solution of Initial Value Problem:

$$\frac{dY}{dt} = f(t, Y) \iff Y(t_{n+1}) = Y(t_n) + \int_{t_n}^{t_{n+1}} f(t, Y(t))\,dt.$$

Approximate integral using the trapezium rule:

$$Y(t_{n+1}) \approx Y(t_n) + \frac{h}{2}\left[f(t_n, Y(t_n)) + f(t_{n+1}, Y(t_{n+1}))\right], \qquad t_{n+1} = t_n + h.$$

Use Euler's method to approximate $Y(t_{n+1}) \approx Y(t_n) + h f(t_n, Y(t_n))$ in trapezium rule:

$$Y(t_{n+1}) \approx Y(t_n) + \frac{h}{2}\left[f(t_n, Y(t_n)) + f(t_{n+1}, Y(t_n) + h f(t_n, Y(t_n)))\right].$$

Hence the modified Euler's scheme

$$y_{n+1} = y_n + \frac{h}{2}\left[f(t_n, y_n) + f(t_{n+1}, y_n + h f(t_n, y_n))\right] \iff \begin{cases} K_1 = h f(t_n, y_n) \\ K_2 = h f(t_{n+1}, y_n + K_1) \\ y_{n+1} = y_n + \dfrac{K_1 + K_2}{2} \end{cases}$$
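The modified Euler scheme in its K1, K2 form translates almost line for line into code. The following is a minimal sketch, assuming a scalar ODE and a fixed step size; the function name `modified_euler` and the test problem y' = y, y(0) = 1 are illustrative choices rather than anything taken from the slides.

```python
import math

def modified_euler(f, t0, y0, h, n_steps):
    """Integrate y' = f(t, y) with the modified Euler scheme."""
    t, y = t0, y0
    for _ in range(n_steps):
        k1 = h * f(t, y)            # Euler increment from the left endpoint
        k2 = h * f(t + h, y + k1)   # increment using the Euler-predicted value
        y = y + 0.5 * (k1 + k2)     # trapezium-rule average of the two
        t = t + h
    return y

# Assumed test problem: y' = y, y(0) = 1, so y(1) should approximate e.
approx = modified_euler(lambda t, y: y, 0.0, 1.0, 0.01, 100)
error = abs(approx - math.e)
```

For this test problem a single step multiplies y by 1 + h + h²/2, which matches the Taylor series of the exact growth factor through the h² term.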

The Original Euler's Calculus-Of-Variations Method: Key

Submitted to EJP. Jozef Hanc, [email protected]. The original Euler’s calculus-of-variations method: Key to Lagrangian mechanics for beginners. Jozef Hanc, Technical University, Vysokoskolska 4, 042 00 Kosice, Slovakia. Leonhard Euler's original version of the calculus of variations (1744) used elementary mathematics and was intuitive, geometric, and easily visualized. In 1755 Euler (1707-1783) abandoned his version and adopted instead the more rigorous and formal algebraic method of Lagrange. Lagrange’s elegant technique of variations not only bypassed the need for Euler’s intuitive use of a limit-taking process leading to the Euler-Lagrange equation but also eliminated Euler’s geometrical insight. More recently Euler's method has been resurrected, shown to be rigorous, and applied as one of the direct variational methods important in analysis and in computer solutions of physical processes. In our classrooms, however, the study of advanced mechanics is still dominated by Lagrange's analytic method, which students often apply uncritically using "variational recipes" because they have difficulty understanding it intuitively. The present paper describes an adaptation of Euler's method that restores intuition and geometric visualization. This adaptation can be used as an introductory variational treatment in almost all of undergraduate physics and is especially powerful in modern physics. Finally, we present Euler's method as a natural introduction to computer-executed numerical analysis of boundary value problems and the finite element method. I. INTRODUCTION. In his pioneering 1744 work The method of finding plane curves that show some property of maximum and minimum, Leonhard Euler introduced a general mathematical procedure or method for the systematic investigation of variational problems.

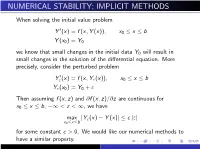

Numerical Stability; Implicit Methods

NUMERICAL STABILITY; IMPLICIT METHODS. When solving the initial value problem

$$Y'(x) = f(x, Y(x)), \quad x_0 \le x \le b, \qquad Y(x_0) = Y_0,$$

we know that small changes in the initial data $Y_0$ will result in small changes in the solution of the differential equation. More precisely, consider the perturbed problem

$$Y_\varepsilon'(x) = f(x, Y_\varepsilon(x)), \quad x_0 \le x \le b, \qquad Y_\varepsilon(x_0) = Y_0 + \varepsilon.$$

Then assuming $f(x, z)$ and $\partial f(x, z)/\partial z$ are continuous for $x_0 \le x \le b$, $-\infty < z < \infty$, we have

$$\max_{x_0 \le x \le b} |Y_\varepsilon(x) - Y(x)| \le c\,|\varepsilon|$$

for some constant $c > 0$. We would like our numerical methods to have a similar property. Consider the Euler method

$$y_{n+1} = y_n + h f(x_n, y_n), \quad n = 0, 1, \ldots, \qquad y_0 = Y_0,$$

and then consider the perturbed problem

$$y^\varepsilon_{n+1} = y^\varepsilon_n + h f(x_n, y^\varepsilon_n), \quad n = 0, 1, \ldots, \qquad y^\varepsilon_0 = Y_0 + \varepsilon.$$

We can show the following:

$$\max_{x_0 \le x_n \le b} |y^\varepsilon_n - y_n| \le \hat{c}\,|\varepsilon|$$

for some constant $\hat{c} > 0$ and for all sufficiently small values of the stepsize h. This implies that Euler's method is stable, and in the same manner as was true for the original differential equation problem. The general idea of stability for a numerical method is essentially that given above for Euler's method. There is a general theory for numerical methods for solving the initial value problem

$$Y'(x) = f(x, Y(x)), \quad x_0 \le x \le b, \qquad Y(x_0) = Y_0.$$

If the truncation error in a numerical method has order 2 or greater, then the numerical method is stable if and only if it is a convergent numerical method.
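The stability statement for Euler's method can be checked numerically by running the scheme twice, once from Y0 and once from Y0 + ε, and comparing the two sequences. The sketch below is an illustration under assumptions of my own: the right-hand side f(x, y) = cos(x) − y (Lipschitz constant K = 1 in y), the interval [0, 5], and the helper name `euler_path` are all hypothetical. The bound used is the standard Lipschitz estimate exp(K(b − x0))·|ε|, one admissible choice of the constant in the inequality.

```python
import math

def euler_path(f, x0, y0, b, n_steps):
    """Return the Euler iterates y_0, ..., y_N on [x0, b]."""
    h = (b - x0) / n_steps
    x, ys = x0, [y0]
    for n in range(n_steps):
        ys.append(ys[-1] + h * f(x, ys[-1]))
        x = x0 + (n + 1) * h
    return ys

# Assumed example problem: f is Lipschitz in y with constant K = 1.
f = lambda x, y: math.cos(x) - y
eps = 1e-3

base = euler_path(f, 0.0, 1.0, 5.0, 500)          # unperturbed start Y0 = 1
pert = euler_path(f, 0.0, 1.0 + eps, 5.0, 500)    # perturbed start Y0 + eps

# Largest gap between the two numerical solutions over all grid points
max_gap = max(abs(p - q) for p, q in zip(pert, base))
# Lipschitz-based bound exp(K * (b - x0)) * |eps| with K = 1
bound = math.exp(1.0 * 5.0) * eps
```

For this particular f the gap between the two runs shrinks by a factor (1 − h) each step, so the largest gap is the initial one, well inside the bound.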

Leonhard Euler: His Life, the Man, and His Works

SIAM REVIEW, © 2008 Walter Gautschi, Vol. 50, No. 1, pp. 3–33. Leonhard Euler: His Life, the Man, and His Works. Walter Gautschi. Abstract. On the occasion of the 300th anniversary (on April 15, 2007) of Euler’s birth, an attempt is made to bring Euler’s genius to the attention of a broad segment of the educated public. The three stations of his life (Basel, St. Petersburg, and Berlin) are sketched and the principal works identified in more or less chronological order. To convey a flavor of his work and its impact on modern science, a few of Euler’s memorable contributions are selected and discussed in more detail. Remarks on Euler’s personality, intellect, and craftsmanship round out the presentation. Key words. Leonhard Euler, sketch of Euler’s life, works, and personality. AMS subject classification. 01A50. DOI. 10.1137/070702710. Seh ich die Werke der Meister an, So sehe ich, was sie getan; Betracht ich meine Siebensachen, Seh ich, was ich hätt sollen machen. –Goethe, Weimar 1814/1815 [When I look at the works of the masters, I see what they have done; when I consider my own bits and pieces, I see what I ought to have done.] 1. Introduction. It is a virtually impossible task to do justice, in a short span of time and space, to the great genius of Leonhard Euler. All we can do, in this lecture, is to bring across some glimpses of Euler’s incredibly voluminous and diverse work, which today fills 74 massive volumes of the Opera omnia (with two more to come). Nine additional volumes of correspondence are planned and have already appeared in part, and about seven volumes of notebooks and diaries still await editing! We begin in section 2 with a brief outline of Euler’s life, going through the three stations of his life: Basel, St.

A Brief Introduction to Numerical Methods for Differential Equations

A Brief Introduction to Numerical Methods for Differential Equations. January 10, 2011. This tutorial introduces some basic numerical computation techniques that are useful for the simulation and analysis of complex systems modelled by differential equations. Such differential models, especially partial differential ones, have been extensively used in various areas from astronomy to biology, from meteorology to finance. However, if we ignore the differences caused by applications and focus on the mathematical equations only, a fundamental question arises: Can we predict the future state of a system from a known initial state and the rules describing how it changes? If we can, how do we make the prediction? This problem, known as the Initial Value Problem (IVP), is one of the problems of greatest concern in numerical analysis for differential equations. In this tutorial, the Euler method is used to solve this problem, and a concrete example of a differential equation, the heat diffusion equation, is given to demonstrate the techniques discussed. But before introducing the Euler method, numerical differentiation is discussed as a prelude to make you more comfortable with numerical methods.

1 Numerical Differentiation. 1.1 Basic: Forward Difference. Derivatives of some simple functions can be easily computed. However, if the function is too complicated, or we only know the values of the function at several discrete points, numerical differentiation is a tool we can rely on. Numerical differentiation follows an intuitive procedure. Recall what we know about the definition of differentiation:

$$\frac{df}{dx} = f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h},$$

which means that the derivative of function f(x) at point x is the difference between f(x + h) and f(x) divided by an infinitesimal h.
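The definition above suggests the simplest numerical recipe: pick a small but finite h and evaluate the difference quotient. A minimal sketch follows, assuming f = sin and x = 1 as an illustrative test case; the name `forward_difference` is a hypothetical helper, not taken from the tutorial.

```python
import math

def forward_difference(f, x, h):
    """Approximate f'(x) by the forward difference quotient with step h."""
    return (f(x + h) - f(x)) / h

# Assumed test case: f = sin at x = 1, where the exact derivative is cos(1).
steps = (1e-1, 1e-2, 1e-3)
errors = [abs(forward_difference(math.sin, 1.0, h) - math.cos(1.0)) for h in steps]

for h, err in zip(steps, errors):
    print(f"h = {h:g}  error = {err:.2e}")
```

Each tenfold reduction of h reduces the error by roughly a factor of ten, consistent with the forward difference being first-order accurate in h.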

Oscillation-Free Method for Semilinear Diffusion Equations Under

Oscillation-free method for semilinear diffusion equations under noisy initial conditions. R. C. Harwood (Department of Mathematics, George Fox University, Newberg, OR 97132, USA; corresponding author), Likun Zhang (Department of Physics and Center for Nonlinear Dynamics, University of Texas at Austin, Austin, TX 78712, USA), V. S. Manoranjan (Department of Mathematics, Washington State University, Pullman, WA 99164, USA). arXiv:1607.07433v1 [math.NA] 23 Jul 2016. Abstract. Noise in initial conditions from measurement errors can create unwanted oscillations which propagate in numerical solutions. We present a technique of prohibiting such oscillation errors when solving initial-boundary-value problems of semilinear diffusion equations. Symmetric Strang splitting is applied to the equation for solving the linear diffusion and nonlinear remainder separately. An oscillation-free scheme is developed for overcoming any oscillatory behavior when numerically solving the linear diffusion portion. To demonstrate the ills of stable oscillations, we compare our method using a weighted implicit Euler scheme to the Crank-Nicolson method. The oscillation-free feature and stability of our method are analyzed through a local linearization. The accuracy of our oscillation-free method is proved and its usefulness is further verified through solving a Fisher-type equation where oscillation-free solutions are successfully produced in spite of random errors in the initial conditions. Keywords: numerical oscillations, splitting methods, finite difference, Euler method, reaction-diffusion, semilinear parabolic partial differential equation. 1. Introduction. Before analyzing numerical methods for stability it is necessary that the equations they solve be well-posed and stable themselves. In this paper, we consider the Email addresses: [email protected] (R.

Numerical Solution of Ordinary Differential Equations Time-Stepping

APMA 0160 (A. Yew), Spring 2011. Numerical solution of ordinary differential equations. Differential equations (equations that relate one or more functions and their derivatives) frequently arise as models of physical processes, because derivatives measure rates of change. However, most problems originating from the study of real-world phenomena cannot be solved exactly, with the solution expressed in terms of elementary functions. Therefore, obtaining approximate solutions to differential equations is a very important area of scientific computing. If a differential equation involves only one independent variable, it is an ordinary differential equation (ODE); if there are two or more independent variables, it is a partial differential equation (PDE). We will begin by considering the problem of approximating the solution u(t) to an ODE

$$\frac{du}{dt} = f(t, u), \qquad \text{given that } u(t_0) = u_0,$$

where $t_0$ and $u_0$ are fixed values. The techniques developed will also be useful for obtaining numerical solutions to PDEs.

Time-stepping. We will call the independent variable t and think of it as "time". The basic idea is to choose a sequence of t-values $t_0, t_1, t_2, \ldots, t_N$ and "step" from one to the next, computing the approximate value of u at each successive $t_n$. We will use $u_n$ to denote the approximate value of $u(t_n)$, for $n = 1, 2, \ldots, N$. Supposing that we have already computed $u_n \approx u(t_n)$, the aim is to derive formulas/algorithms for obtaining $u_{n+1} \approx u(t_{n+1})$. The formulas to be discussed will be interpreted in one or both of the following ways:

• The ODE $\frac{du}{dt} = f(t, u(t))$ says that the slope of the solution curve u(t) at any point t is given by f(t, u); values of f are slopes in the (t, u) plane.
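The time-stepping idea, combined with the slope interpretation of f, can be sketched with the simplest possible rule: from each point, follow the local slope along a straight line to the next t-value (the forward Euler method). The excerpt does not reach a specific formula, so this choice of rule, the function name `time_step`, the equally spaced grid, and the test equation du/dt = −2u are all assumptions made for illustration.

```python
def time_step(f, t0, u0, t_end, n_steps):
    """Step from t0 to t_end in n_steps equal steps, following the slope f(t, u)."""
    h = (t_end - t0) / n_steps
    ts, us = [t0], [u0]
    for n in range(n_steps):
        # Slope at the current point, then a straight-line step of length h
        us.append(us[-1] + h * f(ts[-1], us[-1]))
        ts.append(t0 + (n + 1) * h)
    return ts, us

# Assumed test equation: du/dt = -2u, u(0) = 1, stepped over [0, 1] with N = 10.
ts, us = time_step(lambda t, u: -2.0 * u, 0.0, 1.0, 1.0, 10)
```

With h = 0.1 each step multiplies u by 0.8, so the final value is 0.8 to the tenth power, about 0.107, compared with the exact value exp(−2), about 0.135; a smaller h narrows the gap.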

Leonhard Euler English Version

LEONHARD EULER (April 15, 1707 – September 18, 1783) by HEINZ KLAUS STRICK, Germany. Without a doubt, LEONHARD EULER was the most productive mathematician of all time. He wrote numerous books and countless articles covering a vast range of topics in pure and applied mathematics, physics, astronomy, geodesy, cartography, music, and shipbuilding – in Latin, French, Russian, and German. It is not only that he produced an enormous body of work; with unbelievable creativity, he brought innovative ideas to every topic on which he wrote and indeed opened up several new areas of mathematics. Pictured on the Swiss postage stamp of 2007 next to the polyhedron from DÜRER’s Melencolia and EULER’s polyhedral formula is a portrait of EULER from the year 1753, in which one can see that he was already suffering from eye problems at a relatively young age. EULER was born in Basel, the son of a pastor in the Reformed Church. His mother also came from a family of pastors. Since the local school was unable to provide an education commensurate with his son’s abilities, EULER’s father took over the boy’s education. At the age of 14, EULER entered the University of Basel, where he studied philosophy. He completed his studies with a thesis comparing the philosophies of DESCARTES and NEWTON. At 16, at his father’s wish, he took up theological studies, but he switched to mathematics after JOHANN BERNOULLI, a friend of his father’s, convinced the latter that LEONHARD possessed an extraordinary mathematical talent. At 19, EULER won second prize in a competition sponsored by the French Academy of Sciences with a contribution on the question of the optimal placement of a ship’s masts (first prize was awarded to PIERRE BOUGUER, participant in an expedition of LA CONDAMINE to South America).

Comparison of Euler and Range-Kutta Methods in Solving Ordinary Differential

Leonardo Journal of Sciences, Issue 32, January-June 2018, ISSN 1583-0233, p. 10-37, http://ljs.academicdirect.org/. Comparison of Euler and Range-Kutta methods in solving ordinary differential equations of order two and four. David I. LANLEGE (1, *), Rotimi KEHINDE (1), Dolapo A. SOBANKE (1), Abdulrahman ABDULGANIYU (2), and Umar M. GARBA (2). (1) Department of Mathematical Science, Federal University Lokoja, P.M.B 115 Lokoja, Kogi State, Nigeria. (2) Department of Mathematics/Computer Science, Ibrahim Badamasi Babangida University Lapai, P.M.B 11 Lapai, Niger State, Nigeria. E-mail(s): [email protected] (DIL); [email protected] (REK); [email protected] (UMG). * Corresponding author, phone: +2348030528667, +2348131235684. Abstract. The purpose of this paper is to produce efficient numerical methods with the same order of accuracy as that of the main starting values for exact solutions of fourth order differential equation without reducing it to a system of first order differential equations. The methods of the differential systems arising from the approximate solution to the problem are adopted using the Runge-Kutta method and stages. The methods were compared and contrasted based on the results obtained. The comparison shows that Euler method gives a more accurate approximate result than Runge-Kutta method. After the derivation of the formulae of $O(h^2)$, the comparison was made in order to identify the formula with higher accuracy. Keywords: Numerical Analysis; Numerical Approximation; Exact Solution; Accuracy; Runge-Kutta and Euler. Introduction. Numerical analysis is a branch of mathematics that deals with the study of methods and procedures used to obtain approximate solutions to mathematical problems.

5.3.5 Beyond Euler's Method for Reaction-Diffusion PDE: Diffusion

5.3.5 Beyond Euler’s Method For Reaction-Diffusion PDE: Diffusion of Gas in a Tunnel, Gas in Porous Media, Second-Order in Time Methods, and Unconditional Stability. The forward Euler method is easy to program, it runs fast, and with acceleration (extrapolation applied to increase accuracy) it can be used to produce reasonably efficient and accurate results. On the other hand, it has at least two weaknesses: (1) the ratio of the time-step size and the square of the spatial discretization size must be small to avoid numerical instability (see Exercise 5.62) and (2) the method is first-order in time. Both of these deficiencies have been overcome with the development of discretization methods that are unconditionally stable and second-order in time. One such method that is often used is due to John Crank and Phyllis Nicolson [21]; its derivation, order estimates, numerical stability, and implementation are discussed in the following sections. Second-order methods are introduced after development of two model problems: gas diffusion in air and gas motion in porous media. These models have independent interest, but their main function here is to demonstrate via realistic applications that numerical simulations using Euler’s method are not efficient due to the Courant-Friedrichs-Lewy restriction on step-size. Of course, to appreciate this fact, the reader is encouraged to perform the suggested exercises. Further on, after second-order methods are introduced, the exercises can be repeated to compare and contrast the efficacy of first and second order numerical algorithms in practice.
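The first weakness can be observed on the plain diffusion equation $u_t = u_{xx}$, without any reaction term. The sketch below is an illustration under stated assumptions: a unit interval with zero boundary values, a single-spike initial condition, and a hypothetical helper `ftcs_heat` that applies forward Euler in time with centred differences in space. The parameter `r` is the ratio of the time step to the square of the grid spacing; for this model problem the scheme is known to be stable when r ≤ 1/2.

```python
def ftcs_heat(r, n_cells=20, n_steps=200):
    """Forward Euler in time, centred differences in space, for u_t = u_xx
    on [0, 1] with u = 0 at both ends. r = dt / dx**2.
    Returns the largest |u| on the grid after n_steps steps."""
    # Assumed initial data: a single spike in the middle of the grid
    u = [0.0] * (n_cells + 1)
    u[n_cells // 2] = 1.0
    for _ in range(n_steps):
        new = u[:]  # boundary values stay at zero
        for i in range(1, n_cells):
            new[i] = u[i] + r * (u[i - 1] - 2.0 * u[i] + u[i + 1])
        u = new
    return max(abs(v) for v in u)

stable_peak = ftcs_heat(0.4)    # r below 1/2: the spike spreads out and decays
unstable_peak = ftcs_heat(0.6)  # r above 1/2: grid-scale oscillations grow
```

Because r is fixed by stability, halving the grid spacing forces the time step down by a factor of four, which is why the step-size restriction makes the explicit method costly on fine grids.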