4 Stiffness and Stability

Recommended publications

Improving the Accuracy of Computed Eigenvalues and Eigenvectors*

Improving the Accuracy of Computed Eigenvalues and Eigenvectors. J. J. Dongarra, C. B. Moler and J. H. Wilkinson. SIAM J. Numer. Anal., Vol. 20, No. 1, February 1983. © 1983 Society for Industrial and Applied Mathematics. Abstract. This paper describes and analyzes several variants of a computational method for improving the numerical accuracy of, and for obtaining numerical bounds on, matrix eigenvalues and eigenvectors. The method, which is essentially a numerically stable implementation of Newton's method, may be used to "fine tune" the results obtained from standard subroutines such as those in EISPACK [Lecture Notes in Computer Science 6, 51, Springer-Verlag, Berlin, 1976, 1977]. Extended precision arithmetic is required in the computation of certain residuals. Introduction. The calculation of an eigenvalue $\lambda$ and the corresponding eigenvector $x$ (hereafter referred to as an eigenpair) of a matrix $A$ involves the solution of the nonlinear system of equations $(A - \lambda I)x = 0$. Starting from approximations to $\lambda$ and $x$, a sequence of iterates may be determined using Newton's method or variants of it. The conditions on these starting approximations guaranteeing convergence have been treated extensively in the literature. For a particularly lucid account the reader is referred to the book by Rall [3]. In a recent paper Wilkinson [7] describes an algorithm for determining error bounds for a computed eigenpair based on these mathematical concepts. Considerations of numerical stability were an essential feature of that paper and indeed were its main raison d'être. In general this algorithm provides an improved eigenpair and error bounds for it; unless the eigenpair is very ill conditioned, the improved eigenpair is usually correct to the precision of the computation used in the main body of the algorithm.
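
The Newton iteration for an eigenpair mentioned in this excerpt can be written out as follows. This is only a sketch of one common formulation, assuming the eigenvector is normalized by fixing a single component, $e_s^T x = 1$; the index $s$ and this particular normalization are illustrative choices, not details taken from the excerpt.

```latex
F(x,\lambda) =
\begin{pmatrix} (A - \lambda I)\,x \\ e_s^T x - 1 \end{pmatrix} = 0,
\qquad
\begin{pmatrix} A - \lambda I & -x \\ e_s^T & 0 \end{pmatrix}
\begin{pmatrix} \delta x \\ \delta\lambda \end{pmatrix}
= -\begin{pmatrix} (A - \lambda I)\,x \\ e_s^T x - 1 \end{pmatrix},
\qquad
x \leftarrow x + \delta x, \quad \lambda \leftarrow \lambda + \delta\lambda .
```

The bordered matrix is the Jacobian of $F$ with respect to $(x, \lambda)$: differentiating $(A - \lambda I)x$ gives $A - \lambda I$ in $x$ and $-x$ in $\lambda$.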

Introduction to Differential Equations

Introduction to Differential Equations. Lecture notes for MATH 2351/2352. Jeffrey R. Chasnov, The Hong Kong University of Science and Technology, Department of Mathematics, Clear Water Bay, Kowloon, Hong Kong. Copyright © 2009–2016 by Jeffrey Robert Chasnov. This work is licensed under the Creative Commons Attribution 3.0 Hong Kong License. To view a copy of this license, visit http://creativecommons.org/licenses/by/3.0/hk/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Preface. What follows are my lecture notes for a first course in differential equations, taught at the Hong Kong University of Science and Technology. Included in these notes are links to short tutorial videos posted on YouTube. Much of the material of Chapters 2-6 and 8 has been adapted from the widely used textbook "Elementary differential equations and boundary value problems" by Boyce & DiPrima (John Wiley & Sons, Inc., Seventh Edition, © 2001). Many of the examples presented in these notes may be found in this book. The material of Chapter 7 is adapted from the textbook "Nonlinear dynamics and chaos" by Steven H. Strogatz (Perseus Publishing, © 1994). All web surfers are welcome to download these notes, watch the YouTube videos, and use the notes and videos freely for teaching and learning. An associated free review book with links to YouTube videos is also available from the ebook publisher bookboon.com. I welcome any comments, suggestions or corrections sent by email to [email protected].

3 Runge-Kutta Methods

3 Runge-Kutta Methods. In contrast to the multistep methods of the previous section, Runge-Kutta methods are single-step methods, albeit with multiple stages per step. They are motivated by the dependence of the Taylor methods on the specific IVP. These new methods do not require derivatives of the right-hand side function $f$ in the code, and are therefore general-purpose initial value problem solvers. Runge-Kutta methods are among the most popular ODE solvers. They were first studied by Carl Runge and Martin Kutta around 1900. Modern developments are mostly due to John Butcher in the 1960s.

3.1 Second-Order Runge-Kutta Methods. As always we consider the general first-order ODE system

$$y'(t) = f(t, y(t)). \tag{42}$$

Since we want to construct a second-order method, we start with the Taylor expansion

$$y(t+h) = y(t) + h\,y'(t) + \frac{h^2}{2}\,y''(t) + O(h^3).$$

The first derivative can be replaced by the right-hand side of the differential equation (42), and the second derivative is obtained by differentiating (42), i.e.,

$$y''(t) = f_t(t,y) + f_y(t,y)\,y'(t) = f_t(t,y) + f_y(t,y)\,f(t,y),$$

with Jacobian $f_y$. We will from now on neglect the dependence of $y$ on $t$ when it appears as an argument to $f$. Therefore, the Taylor expansion becomes

$$\begin{aligned}
y(t+h) &= y(t) + h f(t,y) + \frac{h^2}{2}\left[f_t(t,y) + f_y(t,y) f(t,y)\right] + O(h^3) \\
&= y(t) + \frac{h}{2} f(t,y) + \frac{h}{2}\left[f(t,y) + h f_t(t,y) + h f_y(t,y) f(t,y)\right] + O(h^3).
\end{aligned} \tag{43}$$
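
To make the motivation concrete, the truncated Taylor expansion above can be turned directly into a second-order Taylor method. The sketch below is illustrative only: the function names and the test problem $y' = -y + t$, $y(0) = 1$ are assumptions, not part of the excerpt. It shows why such methods are problem-specific: the caller must supply the partial derivatives $f_t$ and $f_y$ by hand.

```python
import math

def taylor2_step(f, f_t, f_y, t, y, h):
    """Advance scalar y' = f(t, y) by one step of the second-order Taylor method."""
    slope = f(t, y)                              # y'(t)  = f(t, y)
    curvature = f_t(t, y) + f_y(t, y) * slope    # y''(t) = f_t + f_y * f
    return y + h * slope + 0.5 * h * h * curvature

def solve_taylor2(f, f_t, f_y, t0, y0, t_end, n):
    """Take n equal steps from t0 to t_end and return the final value."""
    h = (t_end - t0) / n
    y = y0
    for i in range(n):
        y = taylor2_step(f, f_t, f_y, t0 + i * h, y, h)
    return y

# Hypothetical test problem (an assumption): y' = -y + t, y(0) = 1,
# whose exact solution is y(t) = t - 1 + 2 exp(-t).
def rhs(t, y):
    return -y + t

def rhs_t(t, y):
    return 1.0      # partial derivative of rhs with respect to t

def rhs_y(t, y):
    return -1.0     # partial derivative of rhs with respect to y

def taylor2_error(n):
    """Absolute error at t = 1 when using n steps."""
    exact = 1.0 - 1.0 + 2.0 * math.exp(-1.0)
    return abs(solve_taylor2(rhs, rhs_t, rhs_y, 0.0, 1.0, 1.0, n) - exact)

if __name__ == "__main__":
    for n in (50, 100, 200):
        print(n, taylor2_error(n))
```

Halving the step size should reduce the error by roughly a factor of four, which is the signature of a second-order method.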

Runge-Kutta scheme takes the form $k_1 = h f(t_n, y_n)$; $k_2 = h f(t_n + \alpha h, y_n + \beta k_1)$; $y_{n+1} = y_n + a_1 k_1 + a_2 k_2$ (5.11)

5.3.1 Modified Euler Method. Numerical solution of the initial value problem:

$$\frac{dY}{dt} = f(t, Y) \iff Y(t_{n+1}) = Y(t_n) + \int_{t_n}^{t_{n+1}} f(t, Y(t))\,dt.$$

Approximate the integral using the trapezium rule:

$$Y(t_{n+1}) \approx Y(t_n) + \frac{h}{2}\left[f(t_n, Y(t_n)) + f(t_{n+1}, Y(t_{n+1}))\right], \qquad t_{n+1} = t_n + h.$$

Use Euler's method to approximate $Y(t_{n+1}) \approx Y(t_n) + h f(t_n, Y(t_n))$ in the trapezium rule:

$$Y(t_{n+1}) \approx Y(t_n) + \frac{h}{2}\left[f(t_n, Y(t_n)) + f\big(t_{n+1}, Y(t_n) + h f(t_n, Y(t_n))\big)\right].$$

Hence the modified Euler scheme

$$y_{n+1} = y_n + \frac{h}{2}\left[f(t_n, y_n) + f\big(t_{n+1}, y_n + h f(t_n, y_n)\big)\right]
\iff
\begin{cases}
K_1 = h f(t_n, y_n) \\
K_2 = h f(t_{n+1}, y_n + K_1) \\
y_{n+1} = y_n + \dfrac{K_1 + K_2}{2}
\end{cases}$$
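
The modified Euler scheme in its $K_1$, $K_2$ form translates almost line for line into code. The following is a minimal sketch for a scalar equation; the function names and the test problem $y' = y$, $y(0) = 1$ are assumptions chosen for illustration.

```python
import math

def modified_euler_step(f, t, y, h):
    """One step of the modified Euler scheme for scalar y' = f(t, y)."""
    k1 = h * f(t, y)              # K1 = h f(t_n, y_n)
    k2 = h * f(t + h, y + k1)     # K2 = h f(t_{n+1}, y_n + K1)
    return y + 0.5 * (k1 + k2)    # y_{n+1} = y_n + (K1 + K2) / 2

def solve_modified_euler(f, t0, y0, t_end, n):
    """Take n equal steps from t0 to t_end and return the final value."""
    h = (t_end - t0) / n
    y = y0
    for i in range(n):
        y = modified_euler_step(f, t0 + i * h, y, h)
    return y

# Hypothetical test problem (an assumption): y' = y, y(0) = 1, so y(1) = e.
def growth(t, y):
    return y

def heun_error(n):
    """Absolute error at t = 1 when using n steps."""
    return abs(solve_modified_euler(growth, 0.0, 1.0, 1.0, n) - math.e)

if __name__ == "__main__":
    for n in (25, 50, 100):
        print(n, heun_error(n))
```

Unlike a Taylor method of the same order, this scheme needs only evaluations of $f$ itself, at the cost of two evaluations per step.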

Numerical Analysis Notes for Math 575A

Numerical Analysis Notes for Math 575A. William G. Faris, Program in Applied Mathematics, University of Arizona, Fall 1992. Contents:

1 Nonlinear equations: 1.1 Introduction; 1.2 Bisection; 1.3 Iteration (1.3.1 First order convergence; 1.3.2 Second order convergence); 1.4 Some C notations (1.4.1 Introduction; 1.4.2 Types; 1.4.3 Declarations; 1.4.4 Expressions; 1.4.5 Statements; 1.4.6 Function definitions).

2 Linear Systems: 2.1 Shears; 2.2 Reflections; 2.3 Vectors and matrices in C (2.3.1 Pointers in C; 2.3.2 Pointer Expressions).

3 Eigenvalues: 3.1 Introduction; 3.2 Similarity; 3.3 Orthogonal similarity (3.3.1 Symmetric matrices; 3.3.2 Singular values; 3.3.3 The Schur decomposition); 3.4 Vector and matrix norms (3.4.1 Vector norms; 3.4.2 Associated matrix norms; 3.4.3 Singular value norms; 3.4.4 Eigenvalues and norms; 3.4.5 Condition number); 3.5 Stability (3.5.1 Inverses; 3.5.2 Iteration; 3.5.3 Eigenvalue location); 3.6 Power method; 3.7 Inverse power method; 3.8 Power method for subspaces; 3.9 QR method; 3.10 Finding eigenvalues.

4 Nonlinear systems: 4.1 Introduction; 4.2 Degree (4.2.1 Brouwer fixed point theorem)

Numerically Stable Generation of Correlation Matrices and Their Factors ∗

Numerically Stable Generation of Correlation Matrices and Their Factors. Philip I. Davies and Nicholas J. Higham, Department of Mathematics, University of Manchester, Manchester, M13 9PL, England. email: [email protected], [email protected]. BIT 2000, Vol. 40, No. 4, pp. 640–651. © Swets & Zeitlinger. Abstract. Correlation matrices (symmetric positive semidefinite matrices with unit diagonal) are important in statistics and in numerical linear algebra. For simulation and testing it is desirable to be able to generate random correlation matrices with specified eigenvalues (which must be nonnegative and sum to the dimension of the matrix). A popular algorithm of Bendel and Mickey takes a matrix having the specified eigenvalues and uses a finite sequence of Givens rotations to introduce 1s on the diagonal. We give improved formulae for computing the rotations and prove that the resulting algorithm is numerically stable. We show by example that the formulae originally proposed, which are used in certain existing Fortran implementations, can lead to serious instability. We also show how to modify the algorithm to generate a rectangular matrix with columns of unit 2-norm. Such a matrix represents a correlation matrix in factored form, which can be preferable to representing the matrix itself, for example when the correlation matrix is nearly singular to working precision. Key words: Random correlation matrix, Bendel–Mickey algorithm, eigenvalues, singular value decomposition, test matrices, forward error bounds, relative error bounds, IMSL, NAG Library, Jacobi method. AMS subject classification: 65F15. 1 Introduction. An important class of symmetric positive semidefinite matrices is those with unit diagonal.

The Original Euler's Calculus-Of-Variations Method: Key

The original Euler's calculus-of-variations method: Key to Lagrangian mechanics for beginners. Jozef Hanc, Technical University, Vysokoskolska 4, 042 00 Kosice, Slovakia, [email protected]. Submitted to EJP. Leonhard Euler's original version of the calculus of variations (1744) used elementary mathematics and was intuitive, geometric, and easily visualized. In 1755 Euler (1707-1783) abandoned his version and adopted instead the more rigorous and formal algebraic method of Lagrange. Lagrange's elegant technique of variations not only bypassed the need for Euler's intuitive use of a limit-taking process leading to the Euler-Lagrange equation but also eliminated Euler's geometrical insight. More recently Euler's method has been resurrected, shown to be rigorous, and applied as one of the direct variational methods important in analysis and in computer solutions of physical processes. In our classrooms, however, the study of advanced mechanics is still dominated by Lagrange's analytic method, which students often apply uncritically using "variational recipes" because they have difficulty understanding it intuitively. The present paper describes an adaptation of Euler's method that restores intuition and geometric visualization. This adaptation can be used as an introductory variational treatment in almost all of undergraduate physics and is especially powerful in modern physics. Finally, we present Euler's method as a natural introduction to computer-executed numerical analysis of boundary value problems and the finite element method. I. Introduction. In his pioneering 1744 work The method of finding plane curves that show some property of maximum and minimum [1], Leonhard Euler introduced a general mathematical procedure or method for the systematic investigation of variational problems.

Model Problems in Numerical Stability Theory for Initial Value Problems*

Model Problems in Numerical Stability Theory for Initial Value Problems. A. M. Stuart and A. R. Humphries. SIAM Review, Vol. 36, No. 2, pp. 226-257, June 1994. © 1994 Society for Industrial and Applied Mathematics. Abstract. In the past, numerical stability theory for initial value problems in ordinary differential equations has been dominated by the study of problems with simple dynamics; this has been motivated by the need to study error propagation mechanisms in stiff problems, a question modeled effectively by contractive linear or nonlinear problems. While this has resulted in a coherent and self-contained body of knowledge, it has never been entirely clear to what extent this theory is relevant for problems exhibiting more complicated dynamics. Recently there have been a number of studies of numerical stability for wider classes of problems admitting more complicated dynamics. This on-going work is unified and, in particular, striking similarities between this new developing stability theory and the classical linear and nonlinear stability theories are emphasized. The classical theories of A, B and algebraic stability for Runge-Kutta methods are briefly reviewed; the dynamics of solutions within the classes of equations to which these theories apply (linear decay and contractive problems) are studied. Four other categories of equations (gradient, dissipative, conservative and Hamiltonian systems) are considered. Relationships and differences between the possible dynamics in each category, which range from multiple competing equilibria to chaotic solutions, are highlighted. Runge-Kutta schemes that preserve the dynamical structure of the underlying problem are sought, and indications of a strong relationship between the developing stability theory for these new categories and the classical existing stability theory for the older problems are given.

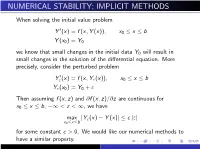

Numerical Stability; Implicit Methods

Numerical Stability; Implicit Methods. When solving the initial value problem $Y'(x) = f(x, Y(x))$, $x_0 \le x \le b$, $Y(x_0) = Y_0$, we know that small changes in the initial data $Y_0$ will result in small changes in the solution of the differential equation. More precisely, consider the perturbed problem $Y_\varepsilon'(x) = f(x, Y_\varepsilon(x))$, $x_0 \le x \le b$, $Y_\varepsilon(x_0) = Y_0 + \varepsilon$. Then, assuming $f(x, z)$ and $\partial f(x, z)/\partial z$ are continuous for $x_0 \le x \le b$, $-\infty < z < \infty$, we have

$$\max_{x_0 \le x \le b} |Y_\varepsilon(x) - Y(x)| \le c\,|\varepsilon|$$

for some constant $c > 0$. We would like our numerical methods to have a similar property. Consider the Euler method $y_{n+1} = y_n + h f(x_n, y_n)$, $n = 0, 1, \ldots$, $y_0 = Y_0$, and then consider the perturbed problem $y^\varepsilon_{n+1} = y^\varepsilon_n + h f(x_n, y^\varepsilon_n)$, $n = 0, 1, \ldots$, $y^\varepsilon_0 = Y_0 + \varepsilon$. We can show the following:

$$\max_{x_0 \le x_n \le b} |y^\varepsilon_n - y_n| \le \hat{c}\,|\varepsilon|$$

for some constant $\hat{c} > 0$ and for all sufficiently small values of the stepsize $h$. This implies that Euler's method is stable, and in the same manner as was true for the original differential equation problem. The general idea of stability for a numerical method is essentially that given above for Euler's method. There is a general theory for numerical methods for solving the initial value problem $Y'(x) = f(x, Y(x))$, $x_0 \le x \le b$, $Y(x_0) = Y_0$. If the truncation error in a numerical method has order 2 or greater, then the numerical method is stable if and only if it is a convergent numerical method.
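
The stability bound for Euler's method can be checked numerically. The sketch below runs Euler's method from $Y_0$ and from $Y_0 + \varepsilon$ and compares the largest gap between the two sequences with $\hat{c}\,|\varepsilon|$. It assumes an illustrative right-hand side $f(x, y) = \cos y$, which is Lipschitz in $y$ with constant $K = 1$, and uses the standard choice $\hat{c} = e^{K(b - x_0)}$; that explicit constant, the test function and all names are assumptions, not taken from the excerpt.

```python
import math

def euler_path(f, x0, y0, b, n):
    """Return the Euler iterates y_0, ..., y_n on [x0, b] with n equal steps."""
    h = (b - x0) / n
    ys = [y0]
    for i in range(n):
        ys.append(ys[-1] + h * f(x0 + i * h, ys[-1]))
    return ys

def max_gap(f, x0, y0, b, n, eps):
    """Largest difference between Euler runs started at y0 and at y0 + eps."""
    base = euler_path(f, x0, y0, b, n)
    perturbed = euler_path(f, x0, y0 + eps, b, n)
    return max(abs(p - q) for p, q in zip(perturbed, base))

# Hypothetical right-hand side (an assumption): |cos(u) - cos(v)| <= |u - v|,
# so the Lipschitz constant in y is K = 1.
def rhs_cos(x, y):
    return math.cos(y)

def stability_bound(x0, b, eps, lipschitz=1.0):
    """Bound c_hat * |eps| with c_hat = exp(K * (b - x0)), valid for every step size."""
    return math.exp(lipschitz * (b - x0)) * abs(eps)

if __name__ == "__main__":
    eps = 1e-3
    for n in (10, 100, 1000):
        print(n, max_gap(rhs_cos, 0.0, 1.0, 2.0, n, eps), stability_bound(0.0, 2.0, eps))
```

The bound follows from $|y^\varepsilon_{n+1} - y_{n+1}| \le (1 + hK)\,|y^\varepsilon_n - y_n|$ and $(1 + hK)^n \le e^{K(b - x_0)}$, so it does not deteriorate as $h$ shrinks.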

Geometric Algebra and Covariant Methods in Physics and Cosmology

Geometric Algebra and Covariant Methods in Physics and Cosmology. Antony M Lewis, Queens' College and Astrophysics Group, Cavendish Laboratory. A dissertation submitted for the degree of Doctor of Philosophy in the University of Cambridge. September 2000. Updated 2005 with typo corrections. Preface. This dissertation is the result of work carried out in the Astrophysics Group of the Cavendish Laboratory, Cambridge, between October 1997 and September 2000. Except where explicit reference is made to the work of others, the work contained in this dissertation is my own, and is not the outcome of work done in collaboration. No part of this dissertation has been submitted for a degree, diploma or other qualification at this or any other university. The total length of this dissertation does not exceed sixty thousand words. Antony Lewis, September, 2000. Acknowledgements. It is a pleasure to thank all those people who have helped me out during the last three years. I owe a great debt to Anthony Challinor and Chris Doran who provided a great deal of help and advice on both general and technical aspects of my work. I thank my supervisor Anthony Lasenby who provided much inspiration, guidance and encouragement without which most of this work would never have happened. I thank Sarah Bridle for organizing the useful lunchtime CMB discussion group from which I learnt a great deal, and the other members of the Cavendish Astrophysics Group for interesting discussions, in particular Pia Mukherjee, Carl Dolby, Will Grainger and Mike Hobson. I gratefully acknowledge financial support from PPARC. Contents: Preface; Acknowledgements; 1 Introduction; 2 Geometric Algebra; 2.1 Definitions and basic properties

Leonhard Euler: His Life, the Man, and His Works∗

Leonhard Euler: His Life, the Man, and His Works. Walter Gautschi. SIAM Review, Vol. 50, No. 1, pp. 3–33. © 2008 Walter Gautschi. Abstract. On the occasion of the 300th anniversary (on April 15, 2007) of Euler's birth, an attempt is made to bring Euler's genius to the attention of a broad segment of the educated public. The three stations of his life (Basel, St. Petersburg, and Berlin) are sketched and the principal works identified in more or less chronological order. To convey a flavor of his work and its impact on modern science, a few of Euler's memorable contributions are selected and discussed in more detail. Remarks on Euler's personality, intellect, and craftsmanship round out the presentation. Key words. Leonhard Euler, sketch of Euler's life, works, and personality. AMS subject classification. 01A50. DOI. 10.1137/070702710. "Seh ich die Werke der Meister an, So sehe ich, was sie getan; Betracht ich meine Siebensachen, Seh ich, was ich hätt sollen machen." (When I look at the works of the masters, I see what they have done; when I consider my own odds and ends, I see what I should have done.) –Goethe, Weimar 1814/1815. 1. Introduction. It is a virtually impossible task to do justice, in a short span of time and space, to the great genius of Leonhard Euler. All we can do, in this lecture, is to bring across some glimpses of Euler's incredibly voluminous and diverse work, which today fills 74 massive volumes of the Opera omnia (with two more to come). Nine additional volumes of correspondence are planned and have already appeared in part, and about seven volumes of notebooks and diaries still await editing! We begin in section 2 with a brief outline of Euler's life, going through the three stations of his life: Basel, St.

Stability Analysis for Systems of Differential Equations

Stability Analysis for Systems of Differential Equations. David Eberly, Geometric Tools, Redmond WA 98052, https://www.geometrictools.com/. This work is licensed under the Creative Commons Attribution 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042, USA. Created: February 8, 2003. Last Modified: March 2, 2008.

Contents: 1 Introduction; 2 Physical Stability; 3 Numerical Stability (3.1 Stability for Single-Step Methods; 3.2 Stability for Multistep Methods; 3.3 Choosing a Stable Step Size); 4 The Simple Pendulum (4.1 Numerical Solution of the ODE; 4.2 Physical Stability for the Pendulum; 4.3 Numerical Stability of the ODE Solvers).

1 Introduction. In setting up a physical simulation involving objects, a primary step is to establish the equations of motion for the objects. These equations are formulated as a system of second-order ordinary differential equations that may be converted to a system of first-order equations whose dependent variables are the positions and velocities of the objects. Such a system is of the generic form $\dot{x} = f(t, x)$, $t \ge 0$, $x(0) = x_0$ (1), where $x_0$ is a specified initial condition for the system. The components of $x$ are the positions and velocities of the objects. The function $f(t, x)$ includes the external forces and torques of the system. A computer implementation of the physical simulation amounts to selecting a numerical method to approximate the solution to the system of differential equations.
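
The conversion from a second-order equation to a first-order system of positions and velocities can be illustrated with the simple pendulum, $\ddot{\theta} = -(g/L)\sin\theta$, written with state $x = (\theta, \omega)$. The sketch below is an assumption-laden illustration, not the method of the cited document: the explicit Euler integrator, the value of $g/L$, and all function names are chosen here for demonstration.

```python
import math

G_OVER_L = 9.81  # g / L for a pendulum of length 1 m (illustrative value)

def pendulum_rhs(t, x):
    """f(t, x) for x = (theta, omega): theta' = omega, omega' = -(g/L) sin(theta)."""
    theta, omega = x
    return (omega, -G_OVER_L * math.sin(theta))

def euler_step(f, t, x, h):
    """One explicit Euler step for a first-order system stored as a tuple."""
    dx = f(t, x)
    return tuple(xi + h * di for xi, di in zip(x, dx))

def energy(x):
    """Mechanical energy per unit (mass * length^2); conserved by the exact flow."""
    theta, omega = x
    return 0.5 * omega * omega - G_OVER_L * math.cos(theta)

def simulate(x0, h, steps):
    """Integrate from t = 0 with a fixed step size and return the final state."""
    t, x = 0.0, x0
    for _ in range(steps):
        x = euler_step(pendulum_rhs, t, x, h)
        t += h
    return x

if __name__ == "__main__":
    start = (0.5, 0.0)  # released from rest at 0.5 rad
    end = simulate(start, 0.01, 200)
    print("energy before:", energy(start))
    print("energy after: ", energy(end))
```

For this small-amplitude run the computed energy creeps upward even though the true pendulum conserves it, a simple reminder that the choice of method and step size matters for stability.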