Reanalysis of Ecological Data from the 1989 China Study Using SAS® Enterprise Guide® Thomas E

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Of Becoming and Remaining Vegetarian

Wang, Yahong (2020) Vegetarians in modern Beijing: food, identity and body techniques in everyday experience. PhD thesis. http://theses.gla.ac.uk/77857/ Copyright and moral rights for this work are retained by the author A copy can be downloaded for personal non-commercial research or study, without prior permission or charge This work cannot be reproduced or quoted extensively from without first obtaining permission in writing from the author The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the author When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given Enlighten: Theses https://theses.gla.ac.uk/ [email protected] Vegetarians in modern Beijing: Food, identity and body techniques in everyday experience Yahong Wang B.A., M.A. Submitted in fulfilment of the requirements for the Degree of Doctor of Philosophy School of Social and Political Sciences College of Social Sciences University of Glasgow March 2019 1 Abstract This study investigates how self-defined vegetarians in modern Beijing construct their identity through everyday experience in the hope that it may contribute to a better understanding of the development of individuality and self-identity in Chinese society in a post-traditional order, and also contribute to understanding the development of the vegetarian movement in a non-‘Western’ context. It is perhaps the first scholarly attempt to study the vegetarian community in China that does not treat it as an Oriental phenomenon isolated from any outside influence. -

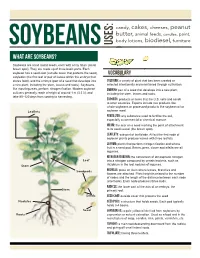

What Are Soybeans?

candy, cakes, cheeses, peanut butter, animal feeds, candles, paint, body lotions, biodiesel, furniture soybeans USES: What are soybeans? Soybeans are small round seeds, each with a tiny hilum (small brown spot). They are made up of three basic parts. Each soybean has a seed coat (outside cover that protects the seed), VOCABULARY cotyledon (the first leaf or pair of leaves within the embryo that stores food), and the embryo (part of a seed that develops into Cultivar: a variety of plant that has been created or a new plant, including the stem, leaves and roots). Soybeans, selected intentionally and maintained through cultivation. like most legumes, perform nitrogen fixation. Modern soybean Embryo: part of a seed that develops into a new plant, cultivars generally reach a height of around 1 m (3.3 ft), and including the stem, leaves and roots. take 80–120 days from sowing to harvesting. Exports: products or items that the U.S. sells and sends to other countries. Exports include raw products like whole soybeans or processed products like soybean oil or Leaflets soybean meal. Fertilizer: any substance used to fertilize the soil, especially a commercial or chemical manure. Hilum: the scar on a seed marking the point of attachment to its seed vessel (the brown spot). Leaflets: sub-part of leaf blade. All but the first node of soybean plants produce leaves with three leaflets. Legume: plants that perform nitrogen fixation and whose fruit is a seed pod. Beans, peas, clover and alfalfa are all legumes. Nitrogen Fixation: the conversion of atmospheric nitrogen Leaf into a nitrogen compound by certain bacteria, such as Stem rhizobium in the root nodules of legumes. -

Review of the China Study

FACT SHEET REVIEW OF THE CHINA STUDY By Team, General Conference Nutrition Council In 1905 Ellen White described the diet our Creator chose for us as a balanced plant-based diet including foods such as grains, fruit and vegetables, and nuts (1). Such a diet provides physical and mental vigor and endurance. She also recognized that such a diet may need to be adjusted according to the season, the climate, occupation, individual tolerance, and what foods are locally available (2). The General Conference Nutrition Council (GCNC) therefore recommends the consumption of a balanced vegetarian diet consisting of a rich variety of plant-based foods. Wherever possible those should be whole foods. Thousands of peer-reviewed research papers have been published over the last seven decades validating a balanced vegetarian eating plan. With so much support for our advocacy of vegetarian nutrition we have no need to fortify our well-founded position with popular anecdotal information or flawed science just because it agrees with what we believe. The methods used to arrive at a conclusion are very important as they determine the validity of the conclusion. We must demonstrate careful, transparent integrity at every turn in formulating a sound rationale to support our health message. It is with this in mind that we have carefully reviewed the book, The China Study (3). This book, published in 2004, was written by T. Colin Campbell, PhD, an emeritus professor of Nutritional Biochemistry at Cornell University and the author of over 300 research papers. In it Campbell describes his personal journey to a plants-only diet. -

Soy Free Diet Avoiding Soy

SOY FREE DIET AVOIDING SOY An allergy to soy is common in babies and young children, studies show that often children outgrow a soy allergy by age 3 years and the majority by age 10. Soybeans are a member of the legume family; examples of other legumes include beans, peas, lentils and peanut. It is important to remember that children with a soy allergy are not necessarily allergic to other legumes, request more clarification from your allergist if you are concerned. Children with a soy allergy may have nausea, vomiting, abdominal pain, diarrhea, bloody stool, difficulty breathing, and or a skin reaction after eating or drinking soy products. These symptoms can be avoided by following a soy free diet. What foods are not allowed on a soy free diet? Soy beans and edamame Soy products, including tofu, miso, natto, soy sauce (including sho yu, tamari), soy milk/creamer/ice cream/yogurt, soy nuts and soy protein, tempeh, textured vegetable protein (TVP) Caution with processed foods - soy is widely used manufactured food products – remember to carefully read labels. o Soy products and derivatives can be found in many foods, including baked goods, canned tuna and meat, cereals, cookies, crackers, high-protein energy bars, drinks and snacks, infant formulas, low- fat peanut butter, processed meats, sauces, chips, canned broths and soups, condiments and salad dressings (Bragg’s Liquid Aminos) USE EXTRA CAUTION WITH ASIAN CUISINE: Asian cuisine are considered high-risk for people with soy allergy due to the common use of soy as an ingredient and the possibility of cross-contamination, even if a soy-free item is ordered. -

T. Colin Campbell, Ph.D. Thomas M. Campbell II

"Everyone in the field of nutrition science stands on the shoulders of Dr. Campbell, who is one of the giants in the field. This is one of the most important books about nutrition ever written - reading it may save your life." - Dean Ornish, MD THE MOST COMPREHENSIVE STUDY OF NUTRITION EVER CONDUCTED --THE-- STARTLING IMPLICATIONS FOR DIET, WEIGHT Loss AND LONG-TERM HEALTH T. COLIN CAMPBELL, PHD AND THOMAS M. CAMPBELL II FOREWORD BY JOHN ROBBINS, AUTHOR, DIET FOR A NEW AMERICA PRAISE FOR THE CHINA STUDY "The China Study gives critical, life-saving nutritional information for ev ery health-seeker in America. But it is much more; Dr. Campbell's expose of the research and medical establishment makes this book a fascinating read and one that could change the future for all of us. Every health care provider and researcher in the world must read it." -JOEl FUHRMAN, M.D. Author of the Best-Selling Book, Eat To Live . ', "Backed by well-documented, peer-reviewed studies and overwhelming statistics the case for a vegetarian diet as a foundation for a healthy life t style has never been stronger." -BRADLY SAUL, OrganicAthlete.com "The China Study is the most important book on nutrition and health to come out in the last seventy-five years. Everyone should read it, and it should be the model for all nutrition programs taught at universities, The reading is engrossing if not astounding. The science is conclusive. Dr. Campbells integrity and commitment to truthful nutrition education shine through." -DAVID KLEIN, PublisherlEditor Living Nutrition MagaZine "The China Study describes a monumental survey of diet and death rates from cancer in more than 2,400 Chinese counties and the equally monu mental efforts to explore its Significance and implications for nutrition and health. -

ISR Summer Camp Lunch Menu 28.06.-02.07

Meat option 28.06. Meatless Monday Allergens Legume curry Tofu cheese Mayonnaise and tomato sauce 3,7,10 Fresh cucumbers Rye bread / sweet and sour bread 1 Curd snack Baltais 7 Banana 29.06.Tuesday Allergens Chicken fillet chop 1,3 Pasta 1,3 Steamed broccoli and cauliflower with bean pods Basil, mayonnaise and sour cream sauce 3,7,10 Rye bread / sweet and sour bread 1 Fruit and berry salad 30.06. Wednesday Allergens Turkey goulash 1,7,9 Mashed potatoes 7 Fresh cabbage salad with oil dressing Rye bread / sweet and sour bread 1 Peach, kiwi and strawberry cocktail 01.07.Thursday Allergens Beef rissole 1,3 Rice Tomato sauce Beet salad with oil dressing Rye bread / sweet and sour bread 1 Sweet bun 1,3 02.07. Friday Allergens Chicken sausages 1,3 Fried potato wedges Tomato sauce Tomatoes Rye bread / sweet and sour bread 1 Sweet cherries Lactose-free 28.06. Meatless Monday Allergens Legume curry Tofu cheese Tomato sauce Fresh cucumbers Rye bread / sweet and sour bread 1 Muesli bar Banana 29.06.Tuesday Allergens Chicken fillet chop 1,3 Pasta 1,3 Steamed broccoli and cauliflower with bean pods Basil and oil dressing Rye bread / sweet and sour bread 1 Fruit and berry salad 30.06. Wednesday Allergens Turkey pieces Boiled potatoes Fresh cabbage salad with oil dressing Rye bread / sweet and sour bread 1 Peach, kiwi and strawberry cocktail 01.07.Thursday Allergens Beef rissole 1,3 Rice Tomato sauce Beet salad with oil dressing Rye bread / sweet and sour bread 1 Strawberries 02.07. -

Legume Living Mulches in Corn and Soybean

Legume Living Mulches in Corn and Soybean By Jeremy Singer, USDA-ARS National Soil Tilth Laboratory Palle Pedersen, Iowa State University PM 2006 August 2005 Living mulches are an extension of cover crops used perennial life cycle, been successfully grown as a living to decrease soil erosion, suppress weeds, improve mulch, and have been reported in the scientific literature. soil structure and nutrient cycling, and in the case of For detailed information on plant adaptability, tolerances, legumes, supply nitrogen to a grain crop. Unlike cover and management information refer to ISU Extension crops that are killed before planting the grain crop, living publication Selecting Forage Species (PM 1792). mulches co-exist with the crops during the growing season and continue to grow after the crop is harvested. Alfalfa Mature plants may have from 5 to 25 stems with a height The living mulch can be an annual or perennial plant ranging from 24 to 36 inches. Alfalfa varieties differ in interseeded with a grain crop, or it can be an existing their ability to spread by underground stems (rhizomes). perennial grass or legume stand into which another crop Varieties that can form new stems from rhizomes may be is planted. The latter system usually requires suppression superior for living mulch systems because they can of the existing “living mulch” with herbicides or cultural spread short distances to cover the soil after the grain practices such as tillage or mowing to create what has crop has been harvested. Alfalfa yield may be higher than been referred to as a “sleeping sod.” Interest in living other forage legumes if the living mulch is grazed or mulch systems developed as a tool to mitigate soil mechanically harvested in the spring prior to crop erosion on highly erosive land. -

The EAT Lancet Publication: Implications for Nutrition Health and Planet

The EAT Lancet Publication: Implications for Nutrition Health and Planet 12 December 2019 Namukolo Covic (PhD) Senior Research Coordinator, IFPRI/A4NH Addis Ababa, Ethiopia Why and who? • Address absence of scientific targets for achieving healthy diets from sustainable food systems limiting large scale coordinated efforts to transform the global food system. • The Commission: 19 Commissioners and 18 co-authors from 16 countries and areas of specialization (human health, agriculture, political sciences, and environmental sustainability) • Develop global scientific targets informed by the best evidence available for healthy diets and sustainable food production. • Define a safe operating space for food systems that address nutrition, health and a sustainable planetary system. • Ensure meeting Sustainable Development Goals (SDGs) and Paris Climate Agreement. https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(18)31788-4/fulltext# The scale of the challenge And the world is off track to meet all global nutrition targets EAT-Lancet Commission Approach Define a healthy reference diet using the best available evidence (controlled feeding studies, long-term cohort studies, randomized trials). Define planetary boundaries for 6 key environmental systems and processes (GHG, cropland use, water use, nitrogen and phosphorus application, extinction rate). Apply a global food systems modeling framework to analyze what combinations of readily implementable measures are needed to stay within food production boundaries while still delivering -

Fruits and Seeds of Genera in the Subfamily Faboideae (Fabaceae)

Fruits and Seeds of United States Department of Genera in the Subfamily Agriculture Agricultural Faboideae (Fabaceae) Research Service Technical Bulletin Number 1890 Volume I December 2003 United States Department of Agriculture Fruits and Seeds of Agricultural Research Genera in the Subfamily Service Technical Bulletin Faboideae (Fabaceae) Number 1890 Volume I Joseph H. Kirkbride, Jr., Charles R. Gunn, and Anna L. Weitzman Fruits of A, Centrolobium paraense E.L.R. Tulasne. B, Laburnum anagyroides F.K. Medikus. C, Adesmia boronoides J.D. Hooker. D, Hippocrepis comosa, C. Linnaeus. E, Campylotropis macrocarpa (A.A. von Bunge) A. Rehder. F, Mucuna urens (C. Linnaeus) F.K. Medikus. G, Phaseolus polystachios (C. Linnaeus) N.L. Britton, E.E. Stern, & F. Poggenburg. H, Medicago orbicularis (C. Linnaeus) B. Bartalini. I, Riedeliella graciliflora H.A.T. Harms. J, Medicago arabica (C. Linnaeus) W. Hudson. Kirkbride is a research botanist, U.S. Department of Agriculture, Agricultural Research Service, Systematic Botany and Mycology Laboratory, BARC West Room 304, Building 011A, Beltsville, MD, 20705-2350 (email = [email protected]). Gunn is a botanist (retired) from Brevard, NC (email = [email protected]). Weitzman is a botanist with the Smithsonian Institution, Department of Botany, Washington, DC. Abstract Kirkbride, Joseph H., Jr., Charles R. Gunn, and Anna L radicle junction, Crotalarieae, cuticle, Cytiseae, Weitzman. 2003. Fruits and seeds of genera in the subfamily Dalbergieae, Daleeae, dehiscence, DELTA, Desmodieae, Faboideae (Fabaceae). U. S. Department of Agriculture, Dipteryxeae, distribution, embryo, embryonic axis, en- Technical Bulletin No. 1890, 1,212 pp. docarp, endosperm, epicarp, epicotyl, Euchresteae, Fabeae, fracture line, follicle, funiculus, Galegeae, Genisteae, Technical identification of fruits and seeds of the economi- gynophore, halo, Hedysareae, hilar groove, hilar groove cally important legume plant family (Fabaceae or lips, hilum, Hypocalypteae, hypocotyl, indehiscent, Leguminosae) is often required of U.S. -

Consumer Understanding and Culinary Use of Legumes in Australia

University of Wollongong Research Online Faculty of Science, Medicine and Health - Papers: Part B Faculty of Science, Medicine and Health 2019 Consumer Understanding and Culinary Use of Legumes in Australia Natalie Figueira University of Wollongong Felicity Curtain Grains and Legumes Nutrition Council Eleanor J. Beck University of Wollongong, [email protected] Sara J. Grafenauer University of Wollongong, [email protected] Follow this and additional works at: https://ro.uow.edu.au/smhpapers1 Publication Details Citation Figueira, N., Curtain, F., Beck, E. J., & Grafenauer, S. J. (2019). Consumer Understanding and Culinary Use of Legumes in Australia. Faculty of Science, Medicine and Health - Papers: Part B. Retrieved from https://ro.uow.edu.au/smhpapers1/947 Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Consumer Understanding and Culinary Use of Legumes in Australia Abstract While health benefits of legume consumption are well documented, intake is well below recommendations in many Western cultures, and little is known regarding culinary use and consumer understanding of these foods. This study aimed to investigate consumption, knowledge, attitudes, and culinary use of legumes in a convenience sample of Australians. An online computer-based survey was used to gather data and demographic characteristics. Respondents (505 individuals answered in full or in part) were regular consumers of legumes (177/376 consumed legumes 2-4 times weekly). Chickpeas, green peas, and kidney beans were most often consumed, and were made into most commonly Mexican, then Indian and Middle Eastern meals. Consumers correctly identified protein and dietary fibre (37%) as key nutritional attributes. -

Legume Beverages from Chickpea and Lupin, As New Milk Alternatives

foods Article Legume Beverages from Chickpea and Lupin, as New Milk Alternatives Mariana Lopes 1, Chloé Pierrepont 1, Carla Margarida Duarte 1,* , Alexandra Filipe 2, Bruno Medronho 3,4 and Isabel Sousa 1 1 LEAF-Linking Landscape, Environment, Agriculture and Food, Instituto Superior de Agronomia, University of Lisbon, Tapada da Ajuda, 1349-017 Lisboa, Portugal; [email protected] (M.L.); [email protected] (C.P.); [email protected] (I.S.) 2 CIEPQPF, Department of Chemical Engineering, University of Coimbra, Pólo II–R. Silvio Lima, 3030-790 Coimbra, Portugal; xanafi[email protected] 3 MED–Mediterranean Institute for Agriculture, Environment and Development, University of Algarve, Faculty of Sciences and Technology, Campus de Gambelas, Ed. 8, 8005-139 Faro, Portugal; [email protected] 4 FSCN, Surface and Colloid Engineering, Mid Sweden University, SE-851 70 Sundsvall, Sweden * Correspondence: [email protected] Received: 31 August 2020; Accepted: 9 October 2020; Published: 14 October 2020 Abstract: Recently, milk consumption has been declining and there is a high demand for non-dairy beverages. However, market offers are mainly cereal and nut-based beverages, which are essentially poor in protein (typically, less than 1.5% against the 3.5% in milk) and are not true milk replacers in that sense. In this work, new beverages from different pulses (i.e., pea, chickpea and lupin) were developed using technologies that enable the incorporation of a high level of seed components, with low or no discharge of by-products. Different processing steps were sequentially tested and discussed for the optimization of the sensorial features and stability of the beverage, considering the current commercial non-dairy beverages trends. -

Death by Food Pyramid by Dr

Death By Food Pyramid By Dr. Ron Hoffman The Food Pyramid, created by the USDA in the 1992, has now been conclusively demonstrated to have undermined Americans’ health. While it has held sway, diabetes and obesity rates have soared in America. Food manufacturers have capitalized on the Food Pyramid’s recommendations to add token amounts of “whole grains” to foods like bagels and breakfast cereals to confer a false aura of health upon their caloric, processed, high-glycemic index products. I recently had the opportunity to interview Denise Minger, author of “Death by Food Pyramid: How Shoddy Science, Sketchy Politics and Shady Special Interests Ruined Your Health”. http://shar.es/FrXd7 The book provides background and historical context for how we took a huge wrong turn in our public policy toward nutrition. As Minger relates, the public implicitly trusts “health authorities”, particularly from the government, who wrongly assert: Saturated fat clogs your arteries Whole grains are heart-healthy Low-fat dairy makes your bones strong White meat is better than red Vegetable oils are healthier than butter High-cholesterol foods cause heart disease “Death by Food Pyramid” chronicles how well-meaning but myopic scientists found their recommendations subverted by agribusiness interest groups. The key problem: the USDA has conflicted missions. On the one-hand, we look to the USDA to encourage healthy diet; on the other, its primary purpose is to encourage US agriculture, inextricably intertwined with the profit motive and consumption. The two agendas are often irreconcilable. If you want the dish on how corruption permeates the food policy process in America, this book is for you.