Locating Spambots on the Internet

Recommended publications

Technical and Legal Approaches to Unsolicited Electronic Mail, 35 USFL Rev

UIC School of Law, UIC Law Open Access Repository, UIC Law Open Access Faculty Scholarship, 1-1-2001. David E. Sorkin, John Marshall Law School, [email protected]. Recommended Citation: David E. Sorkin, Technical and Legal Approaches to Unsolicited Electronic Mail, 35 U.S.F. L. Rev. 325 (2001). https://repository.law.uic.edu/facpubs/160

Technical and Legal Approaches to Unsolicited Electronic Mail† By DAVID E. SORKIN*

"Spamming" is truly the scourge of the Information Age. This problem has become so widespread that it has begun to burden our information infrastructure. Entire new networks have had to be constructed to deal with it, when resources would be far better spent on educational or commercial needs. United States Senator Conrad Burns (R-MT)1

UNSOLICITED ELECTRONIC MAIL, also called "spam,"2 causes or contributes to a wide variety of problems for network administrators,

† Copyright © 2000 David E. Sorkin. * Assistant Professor of Law, Center for Information Technology and Privacy Law, The John Marshall Law School; Visiting Scholar (1999-2000), Center for Education and Research in Information Assurance and Security (CERIAS), Purdue University. -

Show Me the Money: Characterizing Spam-Advertised Revenue

Show Me the Money: Characterizing Spam-advertised Revenue. Chris Kanich∗, Nicholas Weaver†, Damon McCoy∗, Tristan Halvorson∗, Christian Kreibich†, Kirill Levchenko∗, Vern Paxson†‡, Geoffrey M. Voelker∗, Stefan Savage∗. ∗ Department of Computer Science and Engineering, University of California, San Diego; † International Computer Science Institute, Berkeley, CA; ‡ Computer Science Division, University of California, Berkeley

Abstract: Modern spam is ultimately driven by product sales: goods purchased by customers online. However, while this model is easy to state in the abstract, our understanding of the concrete business environment (how many orders, of what kind, from which customers, for how much) is poor at best. This situation is unsurprising since such sellers typically operate under questionable legal footing, with "ground truth" data rarely available to the public. However, absent quantifiable empirical data, "guesstimates" operate unchecked and can distort both policy making and our choice of appropriate interventions. In this paper, we describe two inference techniques for peering inside the business operations of spam-advertised enterprises: purchase pair and

money at all [6]. This situation has the potential to distort policy and investment decisions that are otherwise driven by intuition rather than evidence. In this paper we make two contributions to improving this state of affairs using measurement-based methods to estimate:
• Order volume. We describe a general technique, purchase pair, for estimating the number of orders received (and hence revenue) via on-line store order numbering. We use this approach to establish rough, but well-founded, monthly order volume estimates for many of the leading "affiliate programs" selling counterfeit pharmaceuticals and software.
• Purchasing behavior. -
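The excerpt above describes estimating order volume from on-line store order numbering. The arithmetic behind that idea can be sketched as follows. This is only an illustration under stated assumptions, not the authors' implementation: it assumes the store issues order numbers that increase by one per order, and the function name `estimate_daily_orders` and the sample values are hypothetical.

```python
from datetime import datetime


def estimate_daily_orders(first, second):
    """Estimate orders per day from two test purchases.

    Each argument is a (timestamp, order_number) pair. Assumption (not
    stated in the excerpt): order numbers increase by exactly one per
    order, so the difference bounds the orders placed in between.
    """
    (t1, n1), (t2, n2) = first, second
    elapsed_days = (t2 - t1).total_seconds() / 86400.0
    if elapsed_days <= 0 or n2 <= n1:
        raise ValueError("second purchase must be later and numbered higher")
    # Difference in order numbers divided by elapsed time gives a rate.
    return (n2 - n1) / elapsed_days


# Hypothetical sample data: two purchases placed ten days apart.
first = (datetime(2011, 1, 1), 1000)
second = (datetime(2011, 1, 11), 1500)
rate = estimate_daily_orders(first, second)
print(rate)
```

If a store skips numbers or increments by more than one, the result is an upper bound on the order rate rather than an exact figure.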

Spamming Botnets: Signatures and Characteristics

Spamming Botnets: Signatures and Characteristics. Yinglian Xie, Fang Yu, Kannan Achan, Rina Panigrahy, Geoff Hulten+, Ivan Osipkov+. Microsoft Research, Silicon Valley; +Microsoft Corporation. {yxie,fangyu,kachan,rina,ghulten,ivano}@microsoft.com

ABSTRACT: In this paper, we focus on characterizing spamming botnets by leveraging both spam payload and spam server traffic properties. Towards this goal, we developed a spam signature generation framework called AutoRE to detect botnet-based spam emails and botnet membership. AutoRE does not require pre-classified training data or white lists. Moreover, it outputs high quality regular expression signatures that can detect botnet spam with a low false positive rate. Using a three-month sample of emails from Hotmail, AutoRE successfully identified 7,721 botnet-based spam campaigns together with 340,050 unique botnet host IP addresses. Our in-depth analysis of the identified botnets revealed several interesting findings regarding the degree of email obfuscation, properties of botnet IP addresses, sending patterns, and their correlation

botnet infection and their associated control process [4, 17, 6], little effort has been devoted to understanding the aggregate behaviors of botnets from the perspective of large email servers that are popular targets of botnet spam attacks. An important goal of this paper is to perform a large scale analysis of spamming botnet characteristics and identify trends that can benefit future botnet detection and defense mechanisms. In our analysis, we make use of an email dataset collected from a large email service provider, namely, MSN Hotmail. Our study not only detects botnet membership across the Internet, but also tracks the sending behavior and the associated email content patterns that are directly observable from an email service provider. Information pertaining to botnet membership can be used to prevent future nefarious activities such as phishing and DDoS attacks. -

Zambia and Spam

ZAMNET COMMUNICATION SYSTEMS LTD (ZAMBIA). Spam: The Zambian Experience. Submission to ITU WSIS Thematic meeting on countering Spam. By: Annabel S Kangombe-Maseko, June 2004

Table of Contents
1.0 Introduction
1.1 What is spam?
1.2 The nature of Spam
1.3 Statistics
2.0 Technical view
2.1 Main Sources of Spam
2.1.1 Harvesting
2.1.2 Dictionary Attacks
2.1.3 Open Relays
2.1.4 Email databases
2.1.5 Inadequacies in the SMTP protocol
2.2 Effects of Spam
2.3 The fight against spam
2.3.1 Blacklists
2.3.2 White lists
2.3.3 Dial-up Lists (DUL)
2.3.4 Spam filtering programs
2.4 Challenges of fighting spam
3.0 Legal Framework
3.1 Laws against spam in Zambia
3.2 International Regulations or Laws
3.2.1 US State Laws
3.2.2 The USA's CAN-SPAM Act
4.0 The Way forward
4.1 A global effort
4.2 Collaboration between ISPs
4.3 Strengthening Anti-spam regulation
4.4 User education
4.5 Source authentication
4.6 Rewriting the Internet Mail Exchange protocol

1.0 Introduction

I get to the office in the morning, walk to my desk and switch on the computer. One of the first things I do after checking the status of the network devices is to check my email. -

Email Phishing for IT Providers How Phishing Emails Have Changed and How to Protect Your IT Clients

Email Phishing for IT Providers: How phishing emails have changed and how to protect your IT clients

© 2016 Calyptix Security Corporation. All rights reserved. [email protected] | (800) 650-8930

Contents
Introduction
Phishing overview
Trends in phishing emails
Email phishing tactics
Steps for MSP & VARS
Advice for your clients
Sources

Introduction

There are only so many ways to break into a bank. You can march through the door. You can climb through a window. You can tunnel through the floor. There is the service entrance, the employee entrance, and access on the roof. Criminals who want to rob a bank will probably use an open route, such as a side door. It's easier than breaking down a wall. Criminals who want to break into your network face a similar challenge. They need to enter. They can look for a weakness in your -

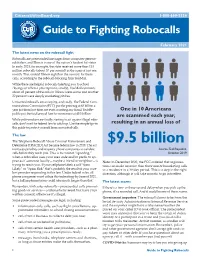

CUB Guide to Fighting Robocalls

CitizensUtilityBoard.org 1-800-669-5556. Guide to Fighting Robocalls, February 2021

The latest news on the robocall fight

Robocalls are prerecorded messages from computer-generated dialers, and Illinois is one of the nation's hardest hit states. In early 2021, for example, the state received more than 153 million robocalls (about 57 per second) in the span of just one month. That ranked Illinois eighth in the country for these calls, according to the robocall-blocking firm YouMail. While there are helpful robocalls (alerting you to school closings or when a prescription is ready), YouMail estimates about 42 percent of the calls in Illinois were scams and another 22 percent were simply marketing pitches. Unwanted robocalls are annoying, and costly. The Federal Communications Commission (FCC) put the price tag at $3 billion a year just from lost time, not even counting any fraud. TechRepublic put the total annual loss for consumers at $9.5 billion. While policymakers are finally starting to act against illegal robocalls, don't wait for federal law to catch up. Use the simple tips in this guide to protect yourself from unwanted calls.

Sidebar: One in 10 Americans are scammed each year, resulting in an annual loss of $9.5 billion. Source: TechRepublic, October 2019

The law

The Telephone Robocall Abuse Criminal Enforcement and Deterrence (TRACED) Act became federal law in 2019. The act increases penalties and requires phone companies to validate calls before they reach you. This is to combat "spoofing," when a robocaller uses your area code and/or prefix to appear as if someone locally (maybe a friend or neighbor) is trying to reach you.

Note: In December 2020, the FCC ordered that organiza- -

Enisa Etl2020

ENISA Threat Landscape: Spam. From January 2019 to April 2020

Overview

The first spam message was sent in 1978 by a marketing manager to 393 people via ARPANET. It was an advertising campaign for a new product from the company he worked for, the Digital Equipment Corporation. For those first 393 spammed people it was as annoying as it would be today, regardless of the novelty of the idea.[1] Receiving spam is an inconvenience, but it may also create an opportunity for a malicious actor to steal personal information or install malware.[2] Spam consists of sending unsolicited messages in bulk. It is considered a cybersecurity threat when used as an attack vector to distribute or enable other threats. Another noteworthy aspect is how spam may sometimes be confused or misclassified as a phishing campaign. The main difference between the two is the fact that phishing is a targeted action using social engineering tactics, actively aiming to steal users' data. In contrast, spam is a tactic for sending unsolicited e-mails to a bulk list. Phishing campaigns can use spam tactics to distribute messages while spam can link the user to a compromised website to install malware and steal personal data. Spam campaigns, during these last 41 years, have taken advantage of many popular global social and sports events such as UEFA Europa League Final, US Open, among others. Even so, nothing compared with the spam activity seen this year with the COVID-19 pandemic.[8]

Findings

85% of all e-mails exchanged in April 2019 were spam, a 15-month high.[1] 14 million -

Spambots: Creative Deception

PATTERNS 2020: The Twelfth International Conference on Pervasive Patterns and Applications. Spambots: Creative Deception. Hayam Alamro (Department of Informatics, King's College London, UK; Department of Information Systems, Princess Nourah bint Abdulrahman University, Riyadh, KSA; email: [email protected]) and Costas S. Iliopoulos (Department of Informatics, King's College London, UK; email: costas.iliopoulos@kcl.ac.uk)

Abstract: In this paper, we present our spambot overview on the creativity of the spammers, and the most important techniques that the spammer might use to deceive current spambot detection tools to bypass detection. These techniques include performing a series of malicious actions with variable time delays, repeating the same series of malicious actions multiple times, and interleaving legitimate and malicious actions. In response, we define our problems to detect the aforementioned techniques in addition to disguised and "don't cares" actions. Our proposed algorithms to solve those problems are based on advanced data structures that are able to detect malicious actions efficiently in linear time, and in an innovative way.

intent of building mailing lists to send unsolicited or phishing emails. Furthermore, spammers can create fake accounts to target specific websites or domain specific users and start sending predefined designed actions which are known as scripts. Moreover, spammers can work on spreading malwares to steal other accounts or scan the web to obtain customers contact information to carry out credential stuffing attacks, which is mainly used to login to another unrelated service. In addition, spambots can be designed to participate in deceiving users on online social networks through the spread of fake news, for -

A Visual Analytics Toolkit for Social Spambot Labeling

© 2019 IEEE. This is the author's version of the article that has been published in IEEE Transactions on Visualization and Computer Graphics. The final version of this record is available at: 10.1109/TVCG.2019.2934266

VASSL: A Visual Analytics Toolkit for Social Spambot Labeling. Mosab Khayat, Morteza Karimzadeh, Jieqiong Zhao, David S. Ebert, Fellow, IEEE

Fig. 1. The default layout of the front-end of VASSL: A) the timeline view, B) the dimensionality reduction view, C) the user/tweet detail views, D) & E) the topics view (clustering / words), F) the feature explorer view, G) the general control panel, H) the labeling panel, and I) the control panels for all the views (the opened control panel in the figure is for topics clustering view).

Abstract: Social media platforms are filled with social spambots. Detecting these malicious accounts is essential, yet challenging, as they continually evolve to evade detection techniques. In this article, we present VASSL, a visual analytics system that assists in the process of detecting and labeling spambots. Our tool enhances the performance and scalability of manual labeling by providing multiple connected views and utilizing dimensionality reduction, sentiment analysis and topic modeling, enabling insights for the identification of spambots. The system allows users to select and analyze groups of accounts in an interactive manner, which enables the detection of spambots that may not be identified when examined individually. We present a user study to objectively evaluate the performance of VASSL users, as well as capturing subjective opinions about the usefulness and the ease of use of the tool. -

Detection of Spambot Groups Through DNA-Inspired Behavioral Modeling

IEEE TRANSACTIONS ON DEPENDABLE AND SECURE COMPUTING 1 Social Fingerprinting: detection of spambot groups through DNA-inspired behavioral modeling Stefano Cresci, Roberto Di Pietro, Marinella Petrocchi, Angelo Spognardi, and Maurizio Tesconi Abstract—Spambot detection in online social networks is a long-lasting challenge involving the study and design of detection techniques capable of efficiently identifying ever-evolving spammers. Recently, a new wave of social spambots has emerged, with advanced human-like characteristics that allow them to go undetected even by current state-of-the-art algorithms. In this paper, we show that efficient spambots detection can be achieved via an in-depth analysis of their collective behaviors exploiting the digital DNA technique for modeling the behaviors of social network users. Inspired by its biological counterpart, in the digital DNA representation the behavioral lifetime of a digital account is encoded in a sequence of characters. Then, we define a similarity measure for such digital DNA sequences. We build upon digital DNA and the similarity between groups of users to characterize both genuine accounts and spambots. Leveraging such characterization, we design the Social Fingerprinting technique, which is able to discriminate among spambots and genuine accounts in both a supervised and an unsupervised fashion. We finally evaluate the effectiveness of Social Fingerprinting and we compare it with three state-of-the-art detection algorithms. Among the peculiarities of our approach is the possibility to apply off-the-shelf DNA analysis techniques to study online users behaviors and to efficiently rely on a limited number of lightweight account characteristics. Index Terms—Spambot detection, social bots, online social networks, Twitter, behavioral modeling, digital DNA. -
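The abstract above says that an account's behavioral lifetime is encoded as a sequence of characters and that a similarity measure is then defined over such sequences. A minimal sketch of that idea follows. The three-letter action alphabet, the function names, and the choice of normalized longest common substring as the similarity are all assumptions made for illustration; the excerpt does not specify them.

```python
# Hypothetical mapping from account actions to characters (an assumption).
ALPHABET = {"tweet": "A", "reply": "C", "retweet": "T"}


def encode(actions):
    """Encode a chronological list of actions as a character sequence."""
    return "".join(ALPHABET[action] for action in actions)


def longest_common_substring(a, b):
    """Length of the longest contiguous run shared by a and b."""
    best = 0
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        cur = [0] * (len(b) + 1)
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                # Extend the common run that ended at the previous characters.
                cur[j] = prev[j - 1] + 1
                best = max(best, cur[j])
        prev = cur
    return best


def similarity(a, b):
    """Illustrative similarity in [0, 1]: shared run over shorter length."""
    if not a or not b:
        return 0.0
    return longest_common_substring(a, b) / min(len(a), len(b))


seq = encode(["tweet", "reply", "retweet"])
score = similarity("ACTTA", "CCTTAA")
print(seq, score)
```

Accounts driven by the same automation would be expected to share long identical runs, so under these assumptions a high score between many accounts hints at coordinated behavior, while genuine accounts should score lower against each other.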

The History of Spam Timeline of Events and Notable Occurrences in the Advance of Spam

The History of Spam: Timeline of events and notable occurrences in the advance of spam. July 2014

The growth of unsolicited e-mail imposes increasing costs on networks and causes considerable aggravation on the part of e-mail recipients. The history of spam is one that is closely tied to the history and evolution of the Internet itself.

1971 RFC 733: Mail Specifications
1978 First email spam was sent out to users of ARPANET; it was an ad for a presentation by Digital Equipment Corporation (DEC)
1984 Domain Name System (DNS) introduced
1986 Eric Thomas develops first commercial mailing list program called LISTSERV
1988 First known email chain letter sent
1988 "Spamming" starts as a prank among participants in multi-user dungeon games (MUDers), who fill rivals' accounts with unwanted electronic junk mail.
1990 ARPANET terminates
1993 First use of the term spam was for a post from USENET by Richard Depew to news.admin.policy, which was the result of a bug in a software program that caused 200 messages to go out to the news group. The term "spam" itself was thought to have come from the spam skit by Monty Python's Flying Circus. In the sketch, a restaurant serves all its food with lots of spam, and the waitress repeats the word several times in describing how much spam is in the items. When she does this, a group of Vikings in the corner start a song: "Spam, spam, spam, spam, spam, spam, spam, spam, lovely spam! Wonderful spam!" until told to shut up. -

A Survey of Learning-Based Techniques of Email Spam Filtering

A Survey of Learning-Based Techniques of Email Spam Filtering. Enrico Blanzieri, University of Trento, Italy, and Anton Bryl, University of Trento, Italy, Create-Net, Italy, [email protected]. January 11, 2008 (Updated version). Technical Report # DIT-06-056

Abstract: Email spam is one of the major problems of today's Internet, bringing financial damage to companies and annoying individual users. Among the approaches developed to stop spam, filtering is an important and popular one. In this paper we give an overview of the state of the art of machine learning applications for spam filtering, and of the ways of evaluation and comparison of different filtering methods. We also provide a brief description of other branches of anti-spam protection and discuss the use of various approaches in commercial and non-

vertising pornography, pyramid schemes, etc. [68]. The total worldwide financial losses caused by spam in 2005 were estimated by Ferris Research Analyzer Information Service at $50 billion [31]. Lately, Goodman et al. [39] presented an overview of the field of anti-spam protection, giving a brief history of spam and anti-spam and describing major directions of development. They are quite optimistic in their conclusions, indicating learning-based spam recognition, together with anti-spoofing technologies and economic approaches, as one of the measures which together will probably lead to the final victory over email spammers in the near future.