Qualitative Synthesis

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Document Title

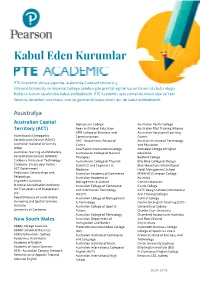

Accepting Institutions: PTE Academic is accepted by thousands of institutions worldwide, including prestigious educational institutions such as Stanford University, Harvard University and Imperial College London. PTE Academic is also accepted by the governments of Australia and New Zealand for visa and immigration applications. Australia Australian Capital Alphacrucis College Australian Pacific College Territory (ACT) Apex Institute of Education Australian Pilot Training Alliance APM College of Business and Australian Vocational Learning Australasian Osteopathic Communication Centre Accreditation Council (AOAC) ARC - Accountants Resource Australis Institute of Technology Australian National University Centre and Education (ANU) Asia Pacific International College Avondale College of Higher Australian Nursing and Midwifery Australasian College of Natural Education Accreditation Council (ANMAC) Therapies Bedford College Canberra Institute of Technology Australasian College of Physical Billy Blue College of Design Canberra. Create your future - Scientists and Engineers in Blue Mountains International ACT Government Medicine Hotel Management School Endeavour Scholarships and Australian Academy of Commerce (BMIHMS) Campion College Fellowships Australian Academy of Australia Engineers Australia Management & Science Carrick Education National Accreditation Authority Australian College of Commerce Castle College for Translators and Interpreters and Information Technology CATC Design School (Commercial Ltd (ACCIT) Arts Training -

New Research Offers Hope for Parents of Picky Eaters

UCL/Weight Concern Press Release Embargo: 00.01 hours Eastern Standard Time/05.00 hours British Summer Time, Monday 30 September 2013 NEW RESEARCH OFFERS HOPE FOR PARENTS OF PICKY EATERS An intervention developed by UCL psychologists significantly increases consumption of fruit and vegetables commonly disliked among picky young children, new research has found. The research, published in the Journal of the Academy of Nutrition and Dietetics, showed that in a randomised controlled trial involving 450 young children, a new method of taste exposure significantly increased the proportion of children willing to try new foods and to continue eating them. In the trial of the method with over 200 families, toddlers showed a 61% increase in their liking of a specific vegetable, and the amount of the vegetable they were willing to eat trebled. A new pack called Tiny Tastes - based on this method - has been developed by UCL in partnership with Weight Concern, a charitable organisation set up by a group of academics and clinicians to combat the epidemic of obesity sweeping the UK by supporting and empowering people to live a healthy lifestyle. Mealtimes can frequently be a battle-ground for many parents, with up to 40 per cent of toddlers becoming picky eaters at some point during childhood, with more than a quarter refusing food every day. In addition, despite the importance of fruit and vegetables in a healthy diet, only 20 per cent of children actually eat the recommended five portions a day, according to the 2010 Health Survey for England. Vegetables are among children’s most disliked foods, and vegetable intake consistently falls short of dietary guidelines. -

Review 2011 1 Research

LONDON’S GLOBAL UNIVERSITY Review 2011 Highlights 2011 Walking on Mars © Angeliki Kapoglou Over summer 2011, UCL Communications held a photography competition, open to all students, calling for images that demonstrated how UCL students contribute to society as global citizens. The term ‘education for global citizenship’ encapsulates all that UCL does to enable students to respond to the intellectual, social and personal challenges that they will encounter throughout their future careers and lives. The winning entry was by Angeliki Kapoglou (UCL Space & Climate Physics), who was selected to serve as a member of an international crew on the Mars Desert Research Station, which simulates the Mars environment in the Utah desert. Researchers at the station work to develop key knowledge needed to prepare for the human exploration of Mars. The runners-up and other images of UCL life can be seen at: www.flickr.com/uclnews Contents: Research 2; Health 5; Global 8; Teaching & Learning 11; Enterprise 14; Highlights 2011 17; UCL Council White Paper 2011–2021; Community 21; Finance & Investment 25; Awards & Appointments 30; People 36; Leadership 37. Follow UCL news: www.ucl.ac.uk; Insights, a fortnightly email summary of news, comment and events: www.ucl.ac.uk/news/insights; Events calendar: www.events.ucl.ac.uk; Twitter: @uclnews; YouTube: UCLTV; In images: www.flickr.com/uclnews; SoundCloud: www.soundcloud.com/uclsound; iTunes U: http://itunes.ucl.ac.uk UCL – London’s Global University Our vision Our values • An outstanding institution, recognised as one of the world’s -

London's Global University

LONDON’S GLOBAL UNIVERSITY ACADEMIC BOARD Wednesday 13th May 2015 MINUTES PRESENT: President and Provost (Chair) Ms Wendy Appleby, Ms Nicola Arnold, Dr Simon Banks, Mr Francis Brako, Dame Nicola Brewer, Ms Annabel Brown, Ms Jane Burns, Professor Stephen Caddick, Dr Ben Campkin, Ms Sue Chick, Professor Lucie Clapp, Ms Charlotte Croffie, Professor Izzat Darwazeh, Professor Julio D. Davila, Ms Eleanor Day, Dr Sally Day, Dr Rachele De Felice, Dr Rosaline Duhs, Dr Melanie Ehren, Dr Caroline Essex, Professor Susan Evans, Ms Ava Fatah gen. Schieck, Dr Martin Fry, Professor Mary Fulbrook, Mr Philip Gardner, Dr Hugh Goodacre, Dr Lesley Gourlay, Dr George Grimble, Dr Paul Groves, Professor Valerie Hazan, Professor Jenny Head, Professor Michael Heinrich, Dr Evangelos Himonides, Dr John Hurst, Ms Liz Jones, Ms Lina Kamenova, Mr Rex Knight, Mr Lukmaan Kolia, Professor Susanne Kord, Professor Jan Kubik, Dr Sarabajaya Kumar, Dr Andrew Logsdail, Dr Helga Lúthersdóttir, Professor Raymond MacAllister, Professor Sandy MacRobert, Dr Merle Mahon, Professor Genise Manuwald, Dr Lilijana Marjanovic-Halburd, Professor Charles Marson, Dr Helen Matthews, Ms Fiona McClement, Dr Saladin Meckled-Garcia, Dr Jenny Mindell, Dr John Mitchell, Dr Richard Mole, Dr Caroline Newton, Professor Martin Oliver, Professor Norbert Pachler, Professor Alan Penn, Dr Brent Pilkey, Mr Mike Rowson, Professor Tom Salt, Professor Elizabeth Shepherd, Professor Richard R Simons, Professor Lucia Sivilotti, Dr Hazel Smith, Dr Fiona Strawbridge, Professor Stephen Smith, Professor Sacha Stern, Ms Emanuela Tilley, Mr Simon To, Professor Derek Tocher, Professor Jonathan Wolff, Professor Steve Wood. In attendance: Ms Clare Goudy, Mr Dominique Fourniol (Media), Dr Patty Kostkova, Mr Derfel Owen (Secretary), Ms Chandan Shah. -

11/12 Centre for English Language University of South Australia (CELUSA)

Centre for English Language University of South Australia 11/12 (CELUSA) CELUSA is the Centre for English Language in the University of South Australia. CELUSA specialises in providing high quality English language preparation programs for international undergraduate and postgraduate students. CELUSA students continue on to study at UniSA, SAIBT, Le Cordon Bleu, and UCL. CELUSA is Adelaide’s IELTS test centre. Programs Academic English (AE) The AE program is for students planning to study at an Australian education institution. The higher levels of AE are designed for students who need to meet the English language admission requirement of the University of South Australia (UniSA), the South Australian Institute of Business and Technology (SAIBT), Le Cordon Bleu (LCB) or University College London (UCL). Students applying directly to these institutions will not need to take the IELTS or other English language test if they successfully complete the appropriate AE level program. Academic English develops the skills necessary for academic study, such as listening to lectures and note-taking, planning and writing essays and reports, and taking part in group discussions and spoken presentations. Students will normally need to complete 10 weeks of study for every 0.5 improvement needed in the overall IELTS score. Additional weeks may be required if a student’s score in one IELTS band is lower than their overall score. UniSA UniSA is the largest university in South Australia with over 36,000 students and is the leading provider of international education in the state. UniSA is committed to educating professionals, creating and applying knowledge, engaging the community and maintaining cultural diversity among staff -

37th IAEE International Conference | Energy & the Economy

37TH IAEE INTERNATIONAL CONFERENCE JUNE 15–18, 2014 | NEW YORKER HOTEL | NEW YORK CITY, USA ENERGY & THE ECONOMY PLATINUM SPONSORS GOLD SPONSORS SILVER SPONSORS PARTNERS Robert Eric Borgström COMMUNICATIONS PARTNERS SUPPORTING ORGANIZATIONS MEETING ROOM EXPLANATION & WALKING DIRECTIONS The 37th IAEE International Conference will take place in three separate properties. These are the Wyndham New Yorker Hotel, the Manhattan Center Grand Ballroom (for all lunches and the Monday night Awards dinner) and the Loews Theater where, on the fourth floor, four concurrent sessions will take place during any given concurrent session time block. The Loews Theater is located directly across from the Manhattan Center. The map at the right is designed to help you visualize where meeting rooms are located. [Map labels: 8TH AVE., W 34TH ST., MANHATTAN CENTER, 7TH FLOOR] Note that both the Wyndham New Yorker Hotel and the Manhattan Center have a Grand Ballroom. It is easiest to recall that the Manhattan Center Grand Ballroom is only used for lunches and the dinner. Further note that coffee breaks will be offered in both the Wyndham New Yorker Hotel and in the Loews Theater, fourth floor lobby. Plenty of food and beverages will be offered during each coffee break to enhance your networking opportunities with colleagues and new friends. TO GET TO THE MANHATTAN CENTER GRAND BALLROOM: • From the lobby of the Wyndham New Yorker Hotel look in the corner for the Tick Tock Restaurant and the Transportation Desk. There will be a conference sign that says “Manhattan Center Grand Ballroom / Loews Theater Concurrent Sessions.” Follow these signs to exit the hotel on to 34th street. -

UCL Australia

UCL Australia • International University Precinct in Adelaide • School of Energy & Resources (SERAus) – opened in 2010 • International Energy Policy Institute (IEPI), Mullard Space Science Laboratory (MSSL) Overview UCL Australia applies a systems approach to [energy and resources] research across sectors and disciplines (technology, natural and social sciences, economics, law, etc.) with particular focus on: • Systems modelling (process optimisation, project organisation and management); • Impact assessment (environment, society, economy); • Decision support (governance, business models, big data analysis); and • Policy analysis and assessment (energy security, climate change, regional and national priorities). MSc in Energy and Resources Management (Two years - full time study) CORE COURSES • Economics of Energy, Resources and the Environment • Energy Technology Perspectives • Law for Energy and Resources • Resources Development and Sustainable Management ELECTIVE COURSES • Project Management for Energy and Resources • Energy Efficiency and Conservation • International Policy and Geopolitics of Energy and Resources • Macroeconomics and Sustainability • Political Economy of Oil and Gas • Financing Resource Projects • Social Licensing • Water Resources Management • Research Project A and Research Project B Current PhD Research • Market rules and regulations for an Australian renewable energy “Super Grid” • Investment irreversibility, uncertainty and disruptive technology innovation • Stakeholder engagement & (mega) project management -

About Studying in Australia?

about studying in Australia? Explore your study options, career outcomes and scholarship opportunities with us. International student guide 2020/2021 Copyright © Studynet 2020. All Rights Reserved. CONTENT 3 About Us 20 English Requirements 4 Our Services 24 Financial Capacity for Your Student Visa Application 5 Our Partners 26 OSHC Cover 6 Awards and Certificates 27 Scholarships in Australia 7 Our Branches 32 Work while You Study 8 Brief Ideas of Australia 34 Tips How to Choose Your Study Destination in Australia 9 Australian Education System 35 Incentive for Regional Study 11 Australia Student Visa Process Flow 36 Regional Study Options with StudyNet 12 Top 10 Reasons to Study in Australia 37 Migration Services 14 Student Visa 41 PR Pathway Courses 15 Education Cost 43 Sample of Planned Items You can Bring Into Australia 16 How to Estimate the Cost of Study in Australia 44 Safe Accommodation 17 How to Plan your Study in Australia 45 Tips on How to Make New Friends in Australia 18 How to Apply Through Us 46 What Our Students Say about Us 19 Documents Checklist ABOUT US We help International Students to Study in Australia Key facts STUDYNET is an Australian leading Education & Migration Consulting Company headquartered in Sydney and has city offices in Melbourne and Brisbane in Australia and Multilingual counsellors 1500+ 9Offices in 6 countries globally. StudyNet Pty Ltd is specialised in providing online and 30+ students trust us around the world face-to-face educational and professional information and services to international per year Students from around the world, to study in Australia. -

The Entry and Experience of Private Providers of Higher Education in Six

The entry and experience of private providers of higher education in six countries. Stephen Hunt, UCL IOE; Claire Callender, UCL IOE & Birkbeck College; Gareth Parry, University of Sheffield. August 2016. Published by the Centre for Global Higher Education, UCL Institute of Education, London WC1H 0AL www.researchcghe.org © Centre for Global Higher Education 2016 ISBN 978-0-9955538-0-4 ISSN 2398-564X The Centre for Global Higher Education (CGHE) is the largest research centre in the world specifically focused on higher education and its future development. Its research integrates local, national and global perspectives and aims to inform and improve higher education policy and practice. CGHE is a partnership led by the UCL Institute of Education with Lancaster University, the University of Sheffield and international universities Australian National University (Australia), Dublin Institute of Technology (Ireland), Hiroshima University (Japan), Leiden University (Netherlands), Lingnan University (Hong Kong), Shanghai Jiao Tong University (China), the University of Cape Town (South Africa) and the University of Michigan (US). CGHE is an Economic and Social Research Council (ESRC) and Higher Education Funding Council for England (HEFCE) investment and their support is gratefully acknowledged.
Acknowledgements The authors are grateful to the following for their ongoing support and guidance: Andrea Detmer, UCL IOE Kevin Dougherty, Columbia University and Fulbright Scholar, Birkbeck College Carolina Guzman, University of Chile Richard James, Melbourne University Barbara Kehm, University of Glasgow Daniel Levy, University of Albany, State University of New York William Locke, UCL IOE Andrew Norton, Grattan Institute Simon Marginson, UCL IOE Tristan McCowan, UCL IOE Len Ole Schafer, University of Bamberg Michael Wells, Wells Advisory Pty Ltd This review was undertaken after discussions with the Department for Business Innovation and Skills, to inform decisions on the new HE regulatory framework. -

Engineering Grand Challenges

Engineering Grand Challenges Report on outcomes of a retreat – 07 and 08 May 2014 Ettington Chase, Stratford-upon-Avon Foreword Professor Sir Peter Gregson (Chief Executive and Vice-Chancellor, Cranfield University) When EPSRC asked me to chair the Engineering Grand Challenges Retreat, I accepted without hesitation. It was my great pleasure to work with a group of 20 enthusiastic and talented UK Engineers from academia and industry, alongside EPSRC colleagues, at a retreat on 7-8 May 2014 at Ettington Chase, near Stratford-upon-Avon. I was exposed to a great blend of creativity, commitment and intellectual horsepower during the meeting, and this report is a stepping stone towards identifying Engineering Grand Challenges for the next decade. Our work built on that initiated by the Royal Academy of Engineering (RAEng), in partnership with the IET and EPSRC, through the Global Grand Challenges Summit 2013. Many of the themes discussed at the Summit (e.g. sustainability, health, resilience, technology, etc.) were considered further during our retreat. EPSRC has also sought views from their UK Strategic University and Business Partners, and their responses were used as an input into the retreat. I was particularly delighted to welcome Lord Alec Broers, who was involved in steering the US National Academy of Engineering (NAE) Grand Challenges initiative, and who emphasised the need for novel and exciting engineering solutions to the very big challenges of our time. We also heard from Prof Paul Raithby (University of Bath), who leads the EPSRC-funded Directed-Assembly Chemistry Grand Challenge Network, and who provided valuable insights about the impact of the chemistry grand challenges on UK research. -

Machine Learning Technologies • Discuss Methodological Issues, Their Implementation and Software Options

Machine-assisted searching and study selection in systematic reviews ICML + EAHIL 2017 Dublin, 12-16 June James Thomas, Claire Stansfield, Alison O’Mara-Eves, Ian Shemilt Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) Social Science Research Unit UCL Institute of Education University College London Acknowledgements & declaration of interest • Many people, including: Sergio Graziosi and Jeff Brunton (EPPI-Centre); National Centre for Text Mining (NaCTeM, University of Manchester); Chris Mavergames and Cochrane IKMD team; Julian Elliott and others on Cochrane Transform project; Iain Marshall (Kings College); Byron Wallace (Northeastern University) • James Thomas receives funding from Cochrane and the funders below for this and related work; co-lead of Project Transform; lead EPPI-Reviewer software development. • Parts of this work funded by: Cochrane, JISC, Medical Research Council (UK), National Health & Medical Research Council (Australia), Wellcome Trust, Bill & Melinda Gates Foundation. All views expressed are our own, and not necessarily those of these funders. Objectives • Provide an overview of text-mining technologies for searching and study selection • Try out machine learning technologies • Discuss methodological issues, their implementation and software options Automation in systematic reviews – what can be done? – Study identification: • Assisting search development • RCT classifier • Citation screening • Updating reviews – Mapping research activity – Data extraction Increasing interest -

List of Speakers

Shaleology Forum 2018 - List of Speakers Since 2013 Dr. Striolo is Professor of Molecular Thermodynamics within the Department of Chemical Engineering at University College London, London’s global university. Prior to this position, Dr. Striolo was the Lloyd and Joyce Austin Presidential Associate Professor within the School of Chemical, Biological and Materials Engineering at the University of Oklahoma, US. During his career, Dr. Striolo has applied an arsenal of modelling and simulation techniques to characterise the structure of fluid at solid-liquid interfaces. He held visiting positions at Lawrence Berkeley National Laboratory, Berkeley, CA, and at Princeton University, NJ, to verify the theoretical predictions using experimental observables and to correlate the interfacial fluids structure to their transport. Striolo is interested in quantifying interfacial effects, especially those that can be related to practical applications such as water desalination, management of hydrates in flow assurance problems, separations, self- and directed assembly, and many others, including shale gas. Alberto Striolo University College London Adrian Jones is the Hayman Reader in Petrology at UCL, and holds an Associate Research post at the Natural History Museum London. Adrian has wide geological, fieldwork, and analytical geochemical expertise, especially on the behaviour of carbon-rich systems during melting and crystallization at high pressures and temperatures. He has supervised >25 PhD students of whom 5 currently hold academic tenured positions in leading UK institutions. He was a Founder of the Deep Carbon Observatory (DCO) supporting education and research of carbon through multidisciplinary science. His UCL lectures and DCO summer schools and workshops aim to inspire early career scientists to imagine new methods and technologies for measuring carbon in the natural environment including bio-rock interaction.