Typefaces and the Perception of Humanness in Natural Language Chatbots

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Supreme Court of the State of New York Appellate Division: Second Judicial Department

Supreme Court of the State of New York Appellate Division: Second Judicial Department A GLOSSARY OF TERMS FOR FORMATTING COMPUTER-GENERATED BRIEFS, WITH EXAMPLES The rules concerning the formatting of briefs are contained in CPLR 5529 and in § 1250.8 of the Practice Rules of the Appellate Division. Those rules cover technical matters and therefore use certain technical terms which may be unfamiliar to attorneys and litigants. The following glossary is offered as an aid to the understanding of the rules. Typeface: A typeface is a complete set of characters of a particular and consistent design for the composition of text, and is also called a font. Typefaces often come in sets which usually include a bold and an italic version in addition to the basic design. Proportionally Spaced Typeface: Proportionally spaced type is designed so that the amount of horizontal space each letter occupies on a line of text is proportional to the design of each letter, the letter i, for example, being narrower than the letter w. More text of the same type size fits on a horizontal line of proportionally spaced type than a horizontal line of the same length of monospaced type. This sentence is set in Times New Roman, which is a proportionally spaced typeface. Monospaced Typeface: In a monospaced typeface, each letter occupies the same amount of space on a horizontal line of text. This sentence is set in Courier, which is a monospaced typeface. Point Size: A point is a unit of measurement used by printers equal to approximately 1/72 of an inch. -

Using Typography and Iconography to Express Emotion

Using typography and iconography to express emotion (or meaning) in motion graphics as a learning tool for ESL (English as a second language) in a multi-device platform. A thesis submitted to the School of Visual Communication Design, College of Communication and Information of Kent State University in partial fulfillment of the requirements for the degree of Master of Fine Arts by Anthony J. Ezzo, May 2016. Thesis written by Anthony J. Ezzo, B.F.A., University of Akron, 1998; M.F.A., Kent State University, 2016. Approved by Gretchen Caldwell Rinnert, M.G.D., Advisor; Jaime Kennedy, M.F.A., Director, School of Visual Communication Design; Amy Reynolds, Ph.D., Dean, College of Communication and Information. TABLE OF CONTENTS: Table of Contents, iii; List of Figures, v; List of Tables, v; Acknowledgements, vi; Chapter 1. The Problem, 1; Thesis, 6; Chapter 2. Background and Context, 7; Understanding The Ell Process ... -

Copyrighted Material

INDEX. A: accents, 224; Adobe Bickham Script Pro, 30, 208; Adobe Caslon Pro, 40; Adobe InDesign software, 116, 128, 130, 163, 168, 173, 175, 182, 188, 190, 195, 218; Adobe Minion Pro, 195; Adobe Systems, 20, 29; Adobe Text Composer, 173; Adobe Wood Type Ornaments, 229; AIDS awareness, 79; Akuin, Vincent, 157; Alexander Isley, Inc., 138; Alfon, 71; alignment, fine-tuning, 167–71; alignment, formatting, 114–23; alternate characters, refinement, 208; American Type Founders (ATF), 16; Amnesty International, 246; A N D, 150, 225; And Atelier, 139; angled brackets, ... B: Bertsch, Fred, 16; Best, Mark, 87; Betz, Jennifer, 292; Bézier curve, 281; Bible, 6–7; Bickham Script Pro, 43; Bilardello, Robin, 122; Binner Gothic, 92; Birch, 95; bitmapped (screen) fonts, 28–29; Black, Kathleen, 233; black letter typeface design, 45; Black Sabbath, 96; Blake, Marty, 90, 92, 95, 140, 204; block type project, 62–63; Blok Design, 141; Bodoni, 95, 99; Bodoni, Giambattista, 14, 15; boldface, hierarchy and emphasis technique, 143; boustrophedon, Greek alphabet, 5; bowl ... C: Caslon Italic, 86; Caslon Openface, 68; Cassandre, A. M., 87; Cassidy, Brian, 268, 279; casual scripts typeface design, 44; cave drawing, type development, 3–4; Caxton, 110; centered type alignment, formatting, 114–15, 116; centered type alignment, horizontal alignment, 168–69; Century, 189; Chan, Derek, 132; Chantry, Art, 84, 121, 140, 148; character, glyph compared, 49; character parts, typeface design, 38–39; character relationships, kerning, spacing considerations, 187–89; Charlemagne, 206; China, type development, 5; Cholla typeface family, 122; circle P (sound recording copyright symbol), 223 -

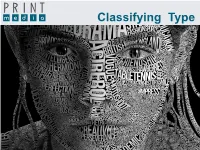

Classifying Type Thunder Graphics Training • Type Workshop Typeface Groups

Classifying Type. Thunder Graphics Training • Type Workshop. Typeface Groups. The typefaces you choose can make or break a layout or design because they set the tone of the message. Choosing the right font for the job is an important design decision. The more you know about type, the better your type choices will be. There are so many different fonts available for the computer that it would be almost impossible to learn the names of every one. However, many typefaces share similar qualities. Typographers classify type into groups to help remember the different kinds. Often, a font from within one group can be substituted for one not available to achieve the same effect. Different typographers use different groupings. The classification system used by Thunder Graphics includes seven major groups. Use the Right arrow key to move to the next page. • Use the Left arrow key to move back a page. Use the key combination, Command (⌘) + Q to quit the presentation. -

Vision Performance Institute

Vision Performance Institute Technical Report. Individual character legibility. James E. Sheedy, OD, PhD; Yu-Chi Tai, PhD; John Hayes, PhD. The purpose of this study was to investigate the factors that influence the legibility of individual characters. Previous work in our lab [2], including the first study in this sequence, has studied the relative legibility of fonts with different anti-aliasing techniques or other presentation media, such as paper. These studies have tested the relative legibility of a set of characters configured with the tested conditions. However, the relative legibility of individual characters within the character set has not been studied. While many factors seem to affect the legibility of a character (e.g., character typeface, character size, image contrast, character rendering, the type of presentation media, the amount of text presented, viewing distance, etc.), it is not clear what makes a character more legible when presented in one way than in another. In addition, the importance of those different factors to the legibility of one character may not hold when the same set of factors is applied to another character. Some characters may be more legible in one typeface and others more legible in another typeface. What are the character features that affect legibility? For example, some characters have wider openings (e.g., the opening of “c” in Calibri is wider than the character “c” in Helvetica); some letter g’s have double bowls while some have single (e.g., “g” in Batang vs. “g” in Verdana); some have longer ascenders or descenders (e.g., “b” in Constantia vs. -

Typographers'

TUGboat, Volume 39 (2018), No. 3, p. 171. Typographers’ Inn. Peter Flynn. Font tables: Peter Wilson has rightly called me to account for missing out the fonttable (two t’s) package in the description of my experimental fontable (one t) package [4, p 17]. The fonttable package is much more powerful than the one I am [still] working on, and I was so intent on reimplementing the specific requirements of the allfnt8.tex file in XeLaTeX to the exclusion of pretty much everything else that I didn’t do any justice to fonttable (and a number of other test and display tools). I am expecting shortly to have more time at my disposal to remedy this and other neglected projects. Monospace that fits: One of the recurrent problems in documentation is finding a suitable monospace font for program listings or other examples of code. [Table 1: Widths of set for some related serif, sans-serif, and monospace fonts. Each row sets the sample “abcdefghijlkmnopqrstuvwxyz O0|I1l” in one font: CMR, CMSS, CMTT, PT Serif, PT Sans, PT Mono, Libertine, Biolinum, Lib. Mono, Plex Serif, Plex Sans, Plex Mono, Nimbus Serif, do. Sans, do. Mono, do. Mono N, Times, Helvetica, Courier, Luxi Mono *. Table note: Times, Helvetica, and Courier (unrelated) are included for ...] -

Design and Evaluation of Dynamic Text-Editing Methods Using Foot Pedals

International Journal of Industrial Ergonomics xxx (2008) 1–8 (article in press). Contents lists available at ScienceDirect. Journal homepage: www.elsevier.com/locate/ergon. Design and evaluation of dynamic text-editing methods using foot pedals. Sang-Hwan Kim, David B. Kaber*, Edward P. Fitts Department of Industrial and Systems Engineering, North Carolina State University, 111 Lampe Dr., 438 Daniels Hall, Raleigh, NC 27695-7906, USA. Article history: Received 11 February 2008; received in revised form 30 June 2008; accepted 15 July 2008; available online xxx. Keywords: Foot pedals; Text editing; Control systems. Abstract: The objective of this study was to design and evaluate new dynamic text-editing methods (chatting, instant messenger) using a foot pedal control. A first experiment was to assess whether the foot-based method enhanced editing performance compared to conventional mouse use and to identify which type of foot control is most convenient for users. Five prototype methods, including four new methods (two pedals or one pedal, 0 order or 1st order control) and one mouse method, were developed and tested by performing a task requiring changing text sizes dynamically. Results revealed methods involving 1st order pedal control to be comparable to the conventional method in task completion time, accuracy and subjective workload. Among the four foot control prototypes, two pedals with 1st order control was superior in performance. A second experiment was conducted to test another prototype foot-based method for controlling font face, size, and color through feature selection with the left pedal and level selection with the right pedal. -

CVAA Support Within the Kaltura Player Toolkit Last Modified on 06/21/2020 4:25 Pm IDT

CVAA Support within the Kaltura Player Toolkit. Last Modified on 06/21/2020 4:25 pm IDT. The Twenty-First Century Communications and Video Accessibility Act of 2010 (CVAA) focuses on ensuring that communications and media services, content, equipment, emerging technologies, and new modes of transmission are accessible to users with disabilities. All Kaltura players that use the Kaltura Player Toolkit are now CVAA compliant by default and are based on the Twenty-First Century Communications and Video Accessibility Act of 2010 (CVAA). The player includes capabilities for editing the style and display of captions and can be modified by the end user. The closed captions styling editor includes easy to use markup and testing controls. The Kaltura Player v2 delivers a great keyboard input experience for users and a seamless browser-managed experience for better integration with web accessibility tools. This is in addition to including the capability of turning closed captions on or off. Features: The Kaltura Player v2 CVAA features include: Studio support - Enable options menu; Captions types: XML, SRT/DFXP, VTT(outband); Displaying and changing fonts in 64 color combinations using eight standard caption colors currently required for television sets; Adjusting character opacity; Ability to adjust caption background in eight specified colors; Ability to adjust character edge (i.e., none, raised, depressed, uniform or drop shadow); Ability to adjust caption window color and opacity. -

{TEXTBOOK} Transforming Type : New Directions in Kinetic Typography Pdf Free Download

TRANSFORMING TYPE: NEW DIRECTIONS IN KINETIC TYPOGRAPHY PDF, EPUB, EBOOK. Barbara Brownie | 128 pages | 12 Feb 2015 | Bloomsbury Publishing PLC | 9780857856333 | English | London, United Kingdom. Transforming Type: New Directions in Kinetic Typography PDF Book. As demonstrated in J. Lee et al. Wong and Eduardo Kac. Among the topics covered are artistic imaging, tools and methods in typography, non-latin type, typographic creation, imaging, character recognition, handwriting models, legibility and design issues, fonts and design, time and multimedia, electronic and paper documents, document engineering, documents and linguistics, document reuse, hypertext and the Web, and hypertext creation and management. Published by the Association for Computing Machinery. Type Object ArtPower, Transforming Type. Copyright Barbara Brownie Brownie Ed. These environments invite new discussions about the difference between motion and change, global and local transformation, and the relationship between word and image. Chapter 5 addresses the consequences of typographic transformation. Transforming Type examines kinetic or moving type in a range of fields including film credits, television idents, interactive poetry and motion graphics. This term is applicable in many cases, as letters may be written, drawn or typed. This comprehensive, well-illustrated volume ranges from the earliest pictographs and hieroglyphics to the work of 20th-century designers. Local kineticism and fluctuating identity. Includes a thorough examination of the history of title design from the earliest films through the present. -

Typeface Classification Serif Or Sans Serif?

Typography 1: Typeface Classification. Serif or Sans Serif? [Specimens: Adobe Jenson (serif), DIN Pro Book (sans serif)] Typeface or font? Typeface family: Adobe Jenson. Fonts: Adobe Jenson Regular, Adobe Jenson Italic, Adobe Jenson Bold, Adobe Jenson Bold Italic. Typeface Timeline: Blackletter, 1450; Humanist (aka Venetian), 1460-1470; Old Style, 1716-1728; Transitional, 1700-1775; Modern, 1780-1880; Bauhaus sans serif, 1920-1960; Digital, 1980-present. Typeface Classification: Humanist | Old Style | Transitional | Modern | Slab Serif (Egyptian) | Sans Serif. The model for the first movable types was Blackletter (also known as Block, Gothic, Fraktur or Old English), a heavy, dark, at times almost illegible (to modern eyes) script that was common during the Middle Ages. From I Love Typography, http://ilovetypography.com/2007/11/06/type-terminology-humanist-2/ Types based on blackletter were soon superseded by something a little easier to read: Humanist (also referred to as Venetian). [Specimens: Adobe Jenson, Fette Fraktur] The Humanist types (sometimes referred to as Venetian) appeared during the 1460s and 1470s, and were modelled not on the dark gothic scripts like textura, but on the lighter, more open forms of the Italian humanist writers. The Humanist types were at the same time the first roman types. Characteristics 1. -

Kinetic Typography Studies Today in Japan

The 2nd International Conference on Design Creativity (ICDC2012), Glasgow, UK, 18th-20th September 2012. KINETIC TYPOGRAPHY STUDIES TODAY IN JAPAN. J.E.Lee, IMCTS / Hokkaido University, Sapporo, Japan. Abstract: The Western kinetic typography movement started in the late 1990s, while Japanese kinetic typography appeared from 2007. Japanese kinetic typography seems either to have started late or to have been developing very slowly. The reason requires consideration from various angles. In this study, the writer researched the reasons why Japanese kinetic typography has not been active, taking the characteristics of the Japanese language into consideration. The writer also studied the points requiring care when producing Japanese kinetic typography, and its potential. Keywords: Kinetic typography, Typography, Japanese characteristics. 1. Introduction. A search for "kinetic typography" as the keyword returned ten papers on CiNii (Citation Information by NII), the database of art and science information managed by NII (National Institute of Informatics), as of January 2012. Excluding this author's papers, eight use kinetic typography as a keyword. The articles about educational experiments and the application of Japanese kinetic typography in design education were written between 1998 and 2001, and most of them were presented by the Japanese Society for the Science of Design. On the other hand, a search for "MOJI animation (character animation)" as a keyword returns eight articles on CiNii. Two of the papers were about substitutes for sign language for elderly people and hearing-impaired people. One of these articles was written in 2004, and the other was written in 2005. It was between 2007 and 2011 that the movement of kinetic typography itself became the focus, and most of those articles were presented by the Information Processing Society of Japan. -

A Kinetic Typography Motion Graphic About the Pursuit of Happiness

Rochester Institute of Technology RIT Scholar Works Theses 12-2014 Nowhere: A kinetic typography motion graphic about the pursuit of happiness I-Cheng Lee Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Lee, I-Cheng, "Nowhere: A kinetic typography motion graphic about the pursuit of happiness" (2014). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. nowhere A kinetic typography motion graphic about the pursuit of happiness I-Cheng Lee A Thesis submitted in partial fulfillment of the requirements for the degree of: Master of Fine Arts in Visual Communication Design School of Design College of Imaging Arts and Sciences Rochester Institute of Technology December 2014 Thesis Committee Approvals Chief Thesis Adviser Daniel DeLuna Associate Professor Visual Communication Design Associate Thesis Adviser David Halbstein Assistant Professor 3D Digital Design Associate Thesis Adviser Shaun Foster Assistant Professor Visual Communication Design School of Design Administrative Chair Peter Byrne MFA Thesis Candidate I-Cheng Lee Table of Contents 1 Abstract 2 Introduction 3 Review of Literature The Topic: Happiness Visual and Technical Researches 7 Process Thesis Parameters Concept Story and Script Storyboard and Animatic Symbols Typography and Hand Lettering Transitions Compositing Audio Editing and Final Adjustments 19 Summary 20 Conclusion 21 Appendix Thesis Proposal 38 Bibliography 1 Abstract nowhere is a motion graphic project that expresses my personal journey of pursuing happiness.