Musical Instruments Simulation on Mobile Platform

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

UNIVERZITET UMETNOSTI U BEOGRADU FAKULTET MUZIČKE UMETNOSTI Katedra Za Muzikologiju

University of Arts in Belgrade, Faculty of Music, Department of Musicology. Milan Milojković, DIGITALNA TEHNOLOGIJA U SRPSKOJ UMETNIČKOJ MUZICI (Digital Technology in Serbian Art Music). Doctoral dissertation, Belgrade, 2017. Mentor: Dr Vesna Mikić, full professor, University of Arts in Belgrade, Faculty of Music, Department of Musicology. Committee members: Digital Technology in Serbian Art Music. Summary: From the modification of military digital hardware by enthusiasts and amateurs after the Second World War, through institutional development in the 1960s and 1970s and global expansion in the 1980s and 1990s, computers have come a long way from an experiment to the default working tool in almost every human activity. In parallel with this development, the thread of its "intersection" with the field of art music is also traced. It manifested itself in the formation of an interdisciplinary artistic practice, computer music, created by music engineers: composers who command both programming skills and digital sound synthesis. To translate musical systems and theories into computer programs, a large amount of data had to be collected and processed, and so a joint humanities discipline, computational musicology, was established. During the 1980s a new generation of authors entered the art scene and gradually began doing more and more of their work on the computer, so the emergence of "home" computers coincides with the "transition" from modernism to postmodernism, and the idea of music engineering likewise undergoes a transformation from an objectivist, systematic, autonomous -

NEA Grant Search - Data As of 02-10-2020 532 Matches

NEA Grant Search - Data as of 02-10-2020, 532 matches

Bay Street Theatre Festival, Inc. (aka Bay Street Theater and Sag Harbor Center for the Arts), 1853707-32-19, Sag Harbor, NY 11963-0022. To support Literature Live!, a theater education program that presents professional performances based on classic literature for middle and high school students. Plays are selected to support the curricula of local schools and New York State learning standards. The program includes talkbacks with the cast, and teachers are provided with free study guides and lesson plans. Fiscal Year: 2019; Congressional District: 1; Grant Amount: $10,000; Category: Art Works; Discipline: Theater; Grant Period: 06/2019 - 12/2019.

Herstory Writers Workshop, Inc, 1854118-52-19, Centereach, NY 11720-3597. To support writing workshops in correctional facilities and for public school students. Herstory will offer weekly literary memoir writing workshops for women and adolescent girls in Long Island jails. In addition, the organization's program for young writers will bring students from Long Island and Queens County school districts to college campuses to develop their craft. Fiscal Year: 2019; Congressional District: 1; Grant Amount: $20,000; Category: Art Works; Discipline: Literature; Grant Period: 06/2019 - 05/2020.

Lindenhurst Memorial Library, 1859011-59-19, Lindenhurst, NY 11757-5399. To support multidisciplinary performances and public programming in community locations throughout Lindenhurst, New York. Programming will include events such as live performances, exhibitions, local author programs, and other arts activities selected based on feedback from local residents. The library will feature cultural events reflecting the diversity of the area. Fiscal Year: 2019; Congressional District: 2; Grant Amount: $10,000; Category: Challenge America: Arts Engagement in American Communities; Discipline: Arts Engagement in American Communities; Grant Period: 07/2019 - 06/2020.

Quintet of the Americas, Inc. -

What Is an Instrument?

What is an instrument? • Anything with which we can make music…? • Rhythm, melody, chords, • Pitched or un-pitched sounds… • Narrow definitions vs. Extremely broad definitions Tone Colour or Timbre (pronounced TAM-ber) • Refers to the sound of a note or pitch – Not the highness or lowness of the pitch itself • Different instruments have different timbres – We use words like smooth, rough, sweet, dark – Ineffable? Range • Instruments and voices have a range of notes they can play or sing – Demo guitar and voice • Lowest to highest sounds • Ways to push beyond the standard range Five Categories of Musical Instruments Classification system devised in India in the 3rd or 4th century B.C. 1. Aerophones • Wind instruments, anything using air 2. Chordophones • Stringed instruments 3. Membranophones • Drums with heads 4. Idiophones • Non-drum percussion 5. Electrophones • Electronic sounds 1. Aerophones • Wind instruments, anything using air • Aerophones are generally either: • Woodwind (Doesn’t have to be wood, e.g. flute) • Reed (Small piece of wood, e.g. saxophone) • Brass (Lip vibration, e.g. trumpet) Flute • Woodwind family • At least 30,000 years old (bone) Ex: Claude Debussy – “Syrinx” (1913) https://www.youtube.com/watch?v=C_yf7FIyu1Y Ex: Jurassic 5 – “Flute Loop” (2000) Ex: Van Morrison – “Moondance” (1970) (chorus) Ex: Gil Scott-Heron – “The Bottle” (1974) Ex: Anchorman “Jazz Flute” (0:55) https://www.youtube.com/watch?v=Dh95taIdCo0 Bass Flute • One octave lower than a regular flute Ex: Overture from The Jungle Book https://www.youtube.com/watch?v=UUH42ciR5SA • Other related instruments: • Piccolo (one octave higher than a flute) • Pan flutes • Bone or wooden flutes Accordion • Modern accordion: early 19th C. -

Fwg 1010 English

FWG 1010 UHF Wireless System™ ENGLISH ESPAÑOL FRANÇAIS PORTUGUÊS ITALIANO DEUTSCH OWNER’S MANUAL, p. 1; MANUAL DE INSTRUCCIONES, p. 6; MODE D’EMPLOI, p. 11; MANUAL DO PROPRIETÁRIO, p. 16; MANUALE UTENTE, p. 21; BEDIENUNGSHANDBUCH, S. 26. Symbols Used: The lightning flash with arrow point in an equilateral triangle means that there are dangerous voltages present within the unit. The exclamation point in an equilateral triangle on the equipment indicates that it is necessary for the user to refer to the User Manual. In the User Manual, this symbol marks instructions that the user must follow to ensure safe operation of the equipment. Environment: • The power supply unit consumes a small amount of electricity even when the unit is switched off. To save energy, unplug the power supply unit from the socket if you are not going to be using the unit for some time. • The packaging is recyclable. Dispose of the packaging in an appropriate recycling collection system. • If you scrap the unit, separate the case, electronics and cables and dispose of all the components in accordance with the appropriate waste disposal regulations. FCC STATEMENT: The transmitter has been tested and found to comply with the limits for a low-power auxiliary station pursuant to Part 74 of the FCC Rules. The receiver has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. Safety and Environment: Safety • Do not spill any liquids on the equipment. • Do not place any containers containing liquid on the device or -

Make It New: Reshaping Jazz in the 21st Century

Make It New: RESHAPING JAZZ IN THE 21ST CENTURY Bill Beuttler Copyright © 2019 by Bill Beuttler Lever Press (leverpress.org) is a publisher of pathbreaking scholarship. Supported by a consortium of liberal arts institutions focused on, and renowned for, excellence in both research and teaching, our press is grounded on three essential commitments: to be a digitally native press, to be a peer-reviewed, open access press that charges no fees to either authors or their institutions, and to be a press aligned with the ethos and mission of liberal arts colleges. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, California, 94042, USA. DOI: https://doi.org/10.3998/mpub.11469938 Print ISBN: 978-1-64315-005-5 Open access ISBN: 978-1-64315-006-2 Library of Congress Control Number: 2019944840 Published in the United States of America by Lever Press, in partnership with Amherst College Press and Michigan Publishing Contents: Member Institution Acknowledgments; Introduction; 1. Jason Moran; 2. Vijay Iyer; 3. Rudresh Mahanthappa; 4. The Bad Plus; 5. Miguel Zenón; 6. Anat Cohen; 7. Robert Glasper; 8. Esperanza Spalding; Epilogue; Interview Sources; Notes; Acknowledgments. Member Institution Acknowledgments Lever Press is a joint venture. This work was made possible by the generous support of -

Design for a New Type of Electronic Musical Instrument

Reimagining the synthesizer for an acoustic setting; Design for a new type of electronic musical instrument Industrial Design Engineering Bachelor Thesis Arvid Jense s0180831 5-3-2013 University of Twente Assessors: 1st: Ing. P. van Passel 2nd: Dr.Ir. M.C. van der Voort Reimagining the synthesizer for an acoustic setting; Design for a new type of electronic musical instrument By: Arvid Jense S0180831 Industrial Design Engineering University of Twente Presentation: 5-3-2013 Contact: Create Digital Media/Meeblip Betahaus, to Peter Kirn Prinzessinnenstrasse 19-20 BERLIN, 10969 Germany www.creadigitalmusic.com www.meeblip.com Examination committee: Dr.Ir. M.C. van der Voort Ing. P. van Passel Peter Kirn ii iii ABSTRACT A design of a new type of electronic instrument was made to allow usage in a setting previously not suitable for these types of instrument. Following an open-ended design brief, an analysis of the Meeblip market, synthesizer design literature and three case-studies, a new usage scenario was chosen. The scenario describes a situation of spontaneous music creation at an outside location. A rapidly iterating design process produced a wooden, semi-computational operated synthesizer which has an integrated power supply, amplifier and speaker. Care was taken to allow for rich and musical interaction as well as making the sound quality of the instrument on a similar level as acoustic instruments. Keywords: Synthesizer design, Electronics design, Open source, Arduino, Meeblip, Interaction design SAMENVATTING (DUTCH) Een ontwerp is gemaakt voor een nieuw type elektronisch muziek instrument welke gebruikt kan worden in een setting die eerder niet geschikt was voor elektronische instrumenten. -

Grame Musiques Exploratoires Saison 2O/21

GRAME MUSIQUES EXPLORATOIRES SAISON 2O/21 P.1 26 SEPTEMBRE 17 OCTOBRE 1 ER DÉCEMBRE 6 DÉCEMBRE 10 FÉVRIER 19H 20 AVRIL 17H 20H 20H 16H 12H30 SAISON UNIVERSITÉ JEAN MONNET, FESTIVAL MUSICA, LES SUBS, LYON THÉÂTRE DE LA CÉLESTINS, SAINT-ÉTIENNE BOURSE DU TRAVAIL 2O/21 STRASBOURG Star Me Kitten RENAISSANCE, OULLINS THÉÂTRE DE LYON Constellations DE SAINT-ÉTIENNE Bibilolo Diffractions Je vois le feu Concert Apéritif Soundinitiative & Nébuleuses Marc Monnet, compositeur Création de Gwen Rouger (Un voyage Yannick Haenel, texte, récitant Ensemble Orchestral Contemporain Arno Fabre, metteur en scène → Tarif plein : 16€ / Nicolas Crosse, composition Étudiants de l’Université Création mondiale Tarif réduit : 5 à 13€ de l'écoute) → Tarif plein : 10€ / réduit : 5€ Jean Monnet de Saint-Étienne → Tarifs : de 6€ à 20€ Billet combiné avec la Lecture de Créations de Vincent-Raphaël Ensemble TM+ Charles Berling & Yannick Haenel : Carinola et Luis Quintana → Tarifs : de 5 à 26€ Tarif plein : 25€ / réduit : 12€ → Gratuit ! 2 SEPTEMBRE 16 & 17 OCTOBRE DU 12 3 DÉCEMBRE DU 9 27 FÉVRIER 25 AVRIL 20H30 AU 14 NOVEMBRE AU 12 DÉCEMBRE 20H 18H LES SUBS, LYON CENTRE POMPIDOU, (VERRIÈRE) THÉÂTRE CRR DE CRÉTEIL THÉÂTRE DE LA THÉÂTRE DE LA CNSMD DE LYON PARIS DE LA RENAISSANCE, RENAISSANCE, OULLINS RENAISSANCE, OULLINS La Bulle- OULLINS Geek Bagatelles Diffrakt Leading Lines Tourniquet La Ralentie Environnement Bernard Cavana, composition Ensemble Orchestral Contemporain Quatuor Tana, L’Enfant inouï Antoine Arnera, musique Pierre Jodlowski, musique, → Tarif : 10€ Créations de Daniel D’Adamo, La Bulle-Environnement accueillera Laurent Cuniot, musique → Tarifs : de 5 à 26€ lumières, vidéo Philippe Hurel et Ivan Fedele de courtes formes artistiques et Sylvain Maurice, texte → Tarifs : de 5 à 26€ → Tarif plein : 18€ / réduit : 14€ des ateliers pour petits et grands. -

Challenges and Perspectives on Real-Time Singing Voice Synthesis / Síntese de Voz Cantada em Tempo Real: Desafios e Perspectivas

Revista de Informática Teórica e Aplicada - RITA - ISSN 2175-2745 Vol. 27, Num. 04 (2020) 118-126 RESEARCH ARTICLE Challenges and Perspectives on Real-time Singing Voice Synthesis / Síntese de voz cantada em tempo real: desafios e perspectivas Edward David Moreno Ordoñez1, Leonardo Araujo Zoehler Brum1* Abstract: This paper describes the state of art of real-time singing voice synthesis and presents its concept, applications and technical aspects. A technological mapping and a literature review are made in order to indicate the latest developments in this area. We made a brief comparative analysis among the selected works. Finally, we have discussed challenges and future research problems. Keywords: Real-time singing voice synthesis, Sound Synthesis, TTS, MIDI, Computer Music Resumo: Este trabalho trata do estado da arte do campo da síntese de voz cantada em tempo real, apresentando seu conceito, aplicações e aspectos técnicos. Realiza um mapeamento tecnológico uma revisão sistemática de literatura no intuito de apresentar os últimos desenvolvimentos na área, perfazendo uma breve análise comparativa entre os trabalhos selecionados. Por fim, discutem-se os desafios e futuros problemas de pesquisa. Palavras-Chave: Síntese de Voz Cantada em Tempo Real, Síntese Sonora, TTS, MIDI, Computação Musical 1Universidade Federal de Sergipe, São Cristóvão, Sergipe, Brasil *Corresponding author: [email protected] DOI: http://dx.doi.org/10.22456/2175-2745.107292 • Received: 30/08/2020 • Accepted: 29/11/2020 CC BY-NC-ND 4.0 - This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. 1. Introduction voice synthesis embedded systems, through the description of its concept, theoretical premises, main used techniques, latest From a computational vision, the aim of singing voice syn- developments and challenges for future research. -

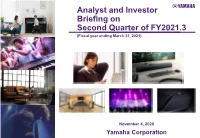

Presentation of Financial Statements (PDF 2.1

Analyst and Investor Briefing on Second Quarter of FY2021.3 (Fiscal year ending March 31, 2021) November 4, 2020 Yamaha Corporation FY2021.3 1H Highlights Overview Figures in parentheses are year-on-year comparisons FY2021.3 1H Achievements - The market is on the recovery trend due to stay-at-home demand, but both revenue and profit declined in part due to supply shortages caused by factory operating restrictions - The first half revenue amounted to ¥164.8 billion (down 21.0%), core operating profit totalled ¥13.0 billion (down 50.2%), and the core operating profit ratio was 7.9% (down 4.6 percentage point) Outlook FY2021.3 Full Year Outlook - Business conditions are improving as market conditions recover and the Group’s plants are making progress in resolving supply shortages. However, a fire at a parts supplier's plant at the end of October 2020 may have an impact on future results due to difficulties in procuring electronic parts. At this point in time, it is difficult to estimate the impact of the fire on our financial results for the current fiscal year, and therefore we are not revising our full-year results. 1 1. Performance Summary FY2021.3 1H Performance FY2021.3 (six months) Full Year Outlook FY2021.3 1H (Six Months) Summary (billions of yen) FY2020.3 1H FY2021.3 1H Change *2 Revenue 208.5 164.8 -43.7 -21.0% Core Operating Profit 26.1 13.0 -13.1 -50.2% (Core Operating Profit Ratio) (12.5%) (7.9%) *1 Net Profit 21.1 7.1 -14.0 -66.5% *2 -19.7% Exchange Rate (yen) (Excluding the impact of exchange rate) Revenue US$ 109 107 (Average rate during the period) EUR 121 121 Profit US$ 109 107 (Settlement rate) EUR 124 119 *1 Net profit is presented as profit attributable to owners of the parent on the consolidated financial statements. -

2019 NAMM Press Kit

2019 NAMM Press Kit. Press Contact: Robert Clyne, President, Clyne Media, Inc., (615) 662-1616, [email protected]. Company Contact: Rebecca Eaddy, Global Influencer Relations Manager, Roland Corporation, (323) 890-3718, [email protected]. Contents: 1. Roland Announces FP-10 Digital Piano 2. Roland Announces Sparkle Clean Tone Capsule for the Blues Cube Guitar Amplifier Series 3. Roland Introduces TM-1 Trigger Module 4. Roland Announces Serato x Roland TR-SYNC Update 5. Roland Unveils the KS-10Z Keyboard Stand and Other Accessories 6. Roland Cloud Announces Globe-Spanning SRX WORLD and Drum Studio- Acoustic One 7. Roland Exhibits GO:PIANO88 Digital Piano 8. Roland Exhibits AX-Edge Keytar 9. Roland Pro AV’s VR-1HD AV Streaming Mixer Lets Streaming Media Creators Engage in Real Time 10. Roland Exhibits LX700 Piano Series 11. Roland Offers VT-4 Voice Transformer 12. Roland Displays Aerophone Digital Wind Instruments 13. Roland Displays V-MODA Crossfade 2 Wireless Codex Edition with Upgraded Award-Winning Predecessor FOR IMMEDIATE RELEASE Roland Announces FP-10 Digital Piano: Affordable 88-note instrument with class-leading sound, features, and portability. The NAMM Show, Anaheim, CA, January 23, 2019 - Roland (Exhibit 10702, Hall A) announces the FP-10 Digital Piano, a new entry-level instrument with premium sound and playability. The FP-10 features Roland’s acclaimed piano sound and an expressive 88-note weighted-action keyboard, delivering top-tier performance that was previously unavailable in its price class. -

WOW Hall Notes 2013-02.Indd

FEBRUARY 2013 WOW HALL NOTES VOL. 25 #2 ★ WOWHALL.ORG Club Bellydance: Bellydance Superstars Productions’ new club-style tour Club Bellydance is touring North America and will be making a stop at the WOW Hall on Tuesday, February 26! Club Bellydance is a two-act performance concept that features select dancers from the internationally renowned Bellydance Superstars and locally-based premier bellydancers. Bellydance Superstars’ Sabah, Moria, Sabrina, Victoria and Nathalie will showcase new choreography and ideas designed for the intimate, club-style show. WHAT IS CLUB BELLYDANCE? After eight years of traveling the world as the Bellydance Superstars, over 800 shows in 23 countries, watching this dance art grow as … On BDSS tours, we rarely have time for our dancers to see how Bellydance is advancing across the USA and Canada. This led to an idea to create a shorter and smaller Bellydance Superstars show that teams up with dance teachers and troupes in each city and put on a combined show celebrating the state of this art in North America today. Each local Bellydance community provides the first half of the show and the BDSS dancers the second half. We call it Club Bellydance. MEET THE DANCERS: Victoria Teel is an award winning performer and instructor. Beginning in 2006, she studied multiple dance styles including Egyptian, Turkish, American … ballet and bellydance was added to the show as a special feature. Sabah began her professional career performing in numerous productions of The Nutcracker for 12 years. … fusion isolation and individualism. Moria has appeared in several Bellydance Superstars performance DVDs - 30 Days to Vegas, Babelesque: Live from Tokyo, … just to name a few. Sabrina is also certified in and has been teaching Vinyasa Flow yoga for 5 years. Nathalie is a professional actress, dancer, choreographer and -

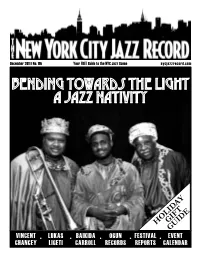

Bending Towards the Light: A Jazz Nativity

December 2011 | No. 116 Your FREE Guide to the NYC Jazz Scene nycjazzrecord.com Bending Towards the Light: A Jazz Nativity. HOLIDAY GIFT GUIDE • VINCENT CHANCEY • LUKAS LIGETI • BAIKIDA CARROLL • OGUN RECORDS • FESTIVAL REPORTS • EVENT CALENDAR. The Holiday Season is kind of like a mugger in a dark alley: no one sees it coming and everyone usually ends up disoriented and poorer after the experience is over. But it doesn’t have to be all stale fruit cake and transit nightmares. The holidays should be a time of reflection with those you love. And what do you love more than jazz? We can’t think of a single thing...well, maybe your grandmother. But bundle her up in some thick scarves and snowproof boots and take her out to see some jazz this month. As we battle trying to figure out exactly what season it is (Indian Summer? Nuclear Winter? Fall Can Really Hang You Up The Most?) with snowstorms then balmy days, what is not in question is the holiday gift basket of jazz available in our fine metropolis. Celebrating its 26th anniversary is Bending Towards The Light: A Jazz Nativity (On The Cover), a retelling of the biblical story starring jazz musicians like this year’s Three Kings - Houston Person, Maurice Chestnut and Wycliffe Gordon (pictured on our cover) - in a setting good for the whole family. Contents: New York@Night; Interview: Vincent Chancey, by Anders Griffen; Artist Feature: Lukas Ligeti, by Gordon Marshall; On The Cover: A Jazz Nativity, by Marcia Hillman; Encore: Lest We Forget: