Defence Experimentation for Force Development Handbook

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Social Studies

2010 Alabama Course of Study SOCIAL STUDIES Joseph B. Morton, State Superintendent of Education • Alabama State Department of Education For information regarding the Alabama Course of Study: Social Studies and other curriculum materials, contact the Curriculum and Instruction Section, Alabama Department of Education, 3345 Gordon Persons Building, 50 North Ripley Street, Montgomery, Alabama 36104; or by mail to P.O. Box 302101, Montgomery, Alabama 36130-2101; or by telephone at (334) 242-8059. Joseph B. Morton, State Superintendent of Education Alabama Department of Education It is the official policy of the Alabama Department of Education that no person in Alabama shall, on the grounds of race, color, disability, sex, religion, national origin, or age, be excluded from participation in, be denied the benefits of, or be subjected to discrimination under any program, activity, or employment. Alabama Course of Study Social Studies Joseph B. Morton State Superintendent of Education ALABAMA DEPARTMENT OF EDUCATION STATE SUPERINTENDENT OF EDUCATION’S MESSAGE Dear Educator: The 2010 Alabama Course of Study: Social Studies provides Alabama students and teachers with a curriculum that contains content designed to promote competence in the areas of economics, geography, history, and civics and government. With an emphasis on responsible citizenship, these content areas serve as the four organizational strands for the Grades K-12 social studies program. Content in this document focuses on enabling students to become literate, analytical thinkers capable of making informed decisions about the world and its people while also preparing them to MEMBERS of the ALABAMA STATE BOARD OF EDUCATION Governor Bob Riley, President; District I Randy McKinney, Vice President; II Betty Peters; III Stephanie W. Bell; IV Dr. -

Any Mask Mandate Could Be Challenging to Enforce

Granger mayor charged with theft By DAVID MARTIN Publisher The mayor of Granger is facing allegations he used municipal funds for personal use, which include purchasing a furnace part for a rental home he owns in New York state. Bradly McCollum, 55, was arrested and charged last week with felony theft and misdemeanor wrongful appropriation of public property. The felony charge carries a potential sentence of up to 10 years in prison and a possible fine of up to $10,000 while the misdemeanor carries a possible sentence of up to one year in jail and a fine of up to $1,000. McCollum made his initial appearance before Circuit Court Judge Craig Jones Friday afternoon and was released from the Sweetwater County Detention Center after posting bail. According to court documents, the investigation into McCollum’s activities started Nov. 8, 2019 when Sharon Sloan, the former clerk and treasurer for the town had contacted Detective Matthew Wharton of the Sweetwater County Sheriff’s Office. Sloan said she resigned from her position due to issues with the town’s accounts and voiced concerns McCollum was misusing the town’s bank card. On Nov. 14, 2019, Sloan was interviewed by detectives and alleged the current town council of “total hypocrisy” when members spoke badly about the previous council’s activities when the current council was doing the same thing. Continued on A2 Wednesday, November 11, 2020 130th Year, 25th Issue Green River, WY 82935 Address Service Requested $1.50 County is thoroughly Republican By DAVID MARTIN Publisher If the General Election last week proved one thing about Sweetwater County’s voters, it’s that they’re overwhelmingly Republican. -

Summer 2005 HNSA Anchor Watch.Qxd

JULY ANCHOR AUGUST SEPTEMBER WATCH 2005 The Official Journal of the Historic Naval Ships Association www.hnsa.org H.N.S.A. MEMBER IN THE SPOTLIGHT THE IMPERIAL WAR MUSEUM’S H.M.S. BELFAST H.M.S. BELFAST DELIVERS A DEADLY CARGO OF FOUR-INCH SHELLS off the Normandy Coast in support of the D-Day Landings, 6 June 1944. The famed Royal Navy cruiser, also a veteran of the Korean War, is today painstakingly preserved in the River Thames at London. Please see page four for more about H.M.S. BELFAST, this month’s H.N.S.A. Member in the Spotlight. Photo courtesy H.M.S. BELFAST. ANCHOR WATCH 2 H.N.S.A. STAFF H.N.S.A. OFFICERS Executive Director CDR Jeffrey S. Nilsson, U.S.N.R. (Ret.) Executive Director Emeritus President CAPT Channing M. Zucker, U.S.N. (Ret.) James B. Sergeant, U.S.S. ALBACORE Executive Secretary Vice President James W. Cheevers William N.Tunnell, Jr., U.S.S. ALABAMA Individual Member Program Manager Secretary CDR Jeffrey S. Nilsson, U.S.N.R. (Ret.) LCDR Sherry Richardson, H.M.C.S. SACKVILLE Anchor Watch Editors Treasurer D. Douglas Buchanan, Jr. James B. Sergeant, U.S.S. ALBACORE Scott D. Kodger Immediate Past President Webmaster David R. Scheu, Sr., U.S.S. NORTH CAROLINA Richard S. Pekelney European Coordinator HONORARY DIRECTORS CAPT Cornelis D. José, R.N.L.N. (Ret.) Vice-Admiral Ron D. Buck, C.F. Chief of the Maritime Staff Dr. Christina Cameron Director General National Historic Sites Admiral Vern E. -

Volume II 2017

ALABAMA SEAPORT: THE OFFICIAL MAGAZINE OF THE ALABAMA STATE PORT AUTHORITY, 2017 VOL. II Alabama State Port Authority and APM Terminals welcomes Walmart to the Port of Mobile. ALABAMA SEAPORT EST. 1892 PUBLISHED CONTINUOUSLY SINCE 1927 • 2017 VOL. II GLOBAL LOGISTICS • PROJECT CARGO • SUPPLY CHAIN MANAGEMENT AEROSPACE • AUTOMOTIVE • CHEMICALS • ELECTRONICS • FOOD & BEVERAGE • FOREST PRODUCTS • FURNITURE • GENERAL & BULK CARGO • MACHINERY • STEEL • TEMPERATURE CONTROLLED ON THE COVER: The Port of Mobile grows with new Walmart distribution center. See story on page 4 The ALABAMA SEAPORT Magazine has been a trusted news and information resource for customers, elected officials, service providers and communities for news regarding Alabama’s only deepwater Port and its impact throughout the state of Alabama, region, nation and abroad. In order to refresh and expand readership of ALABAMA SEAPORT, the Alabama State Port Authority (ASPA) now publishes the magazine quarterly, in four editions appearing in winter, spring, summer and fall. Exciting things are happening in business and industry throughout Alabama and the Southeastern U.S., and the Port Authority has been investing in its terminals to remain competitive ALABAMA STATE PORT AUTHORITY P.O. Box 1588, Mobile, Alabama 36633, USA P: 251.441.7200 • F: 251.441.7216 • asdd.com James K. Lyons, Director, CEO H.S. “Smitty” Thorne, Deputy Director/COO Larry R. Downs, Secretary-Treasurer/CFO FINANCIAL SERVICES Larry Downs, Secretary/Treasurer 251.441.7050 Linda K. Paaymans, Sr. Vice President, -

Actual-Lesson-Plan-1.Pdf

The U.S.S. Alabama This 35,000-ton battleship, commissioned as the USS Alabama in August 1942, is one of only two surviving examples of the South Dakota class. Alabama gave distinguished service in the Atlantic and Pacific theaters of World War II. During its 40-month Asiatic-Pacific stint, it participated in the bombardment of Honshu and its 300-member crew earned nine battle stars. Decommissioned in 1947, the ship was transferred to the state of Alabama in 1964 and is now a war memorial, open to the public. National Register of Historic Places Listed 1986-01-14 www.nr.nps.gov/writeups/86000083.nl.pdf table of contents: Introduction; Getting Started; Setting the Stage; Locating the Site; Determining the Facts; Visual Evidence. introduction The U.S.S. Alabama is sailing quietly on the Pacific Ocean on the night of 26 November 1943. Most of the sailors are sleeping soundly in their racks while the night shift is on watch. At 22:15 the Officer of the Deck receives word there are enemy planes approaching and gives order to sound General Quarters. General Quarters, General Quarters, all hands man your battle stations, forward starboard side aft port side General Quarters. Sailors jump out of their racks and others run to their battle stations in orderly chaos. As water-tight hatches are being closed, Captain Wilson runs to the bridge to take in the situation and starts giving orders. While signalmen search the skies with their signal lights for the approaching enemy aircraft, gunners and loaders ready their guns waiting for orders. -

2. Location Street & Number Not for Publication City, Town Baltimore Vicinity of State Maryland Code 24 County Independent City Code 510 3

B-4112 War in the Pacific Ship Study Federal Agency Nomination United States Department of the Interior National Park Service For NPS use only National Register of Historic Places Inventory—Nomination Form date entered See instructions in How to Complete National Register Forms Type all entries; complete applicable sections 1. Name historic USS Torsk (SS-423) and or common 2. Location street & number not for publication city, town Baltimore vicinity of state Maryland code 24 county Independent City code 510 3. Classification Category Ownership Status Present Use district _X_ public _X_ occupied agriculture _X_ museum building(s) private unoccupied commercial park structure both work in progress educational private residence site Public Acquisition Accessible entertainment religious _X_ object in process _X_ yes: restricted government scientific being considered yes: unrestricted industrial transportation no military other: 4. Owner of Property name Baltimore Maritime Museum street & number Pier IV Pratt Street city, town Baltimore vicinity of state Maryland 5. Location of Legal Description courthouse, registry of deeds, etc. Department of the Navy street & number Naval Sea Systems Command, city, town Washington state DC 20362 6. Representation in Existing Surveys title None has this property been determined eligible? yes no date federal state county local depository for survey records city, town state B-4112 "Warships Associated with World War II in the Pacific National Historic Landmark Theme Study" This theme study has been prepared for the Congress and the National Park System Advisory Board in partial fulfillment of the requirements of Public Law 95-348, August 18, 1978. The purpose of the theme study is to evaluate surviving World War II warships that saw action in the Pacific against Japan and to provide a basis for recommending certain of them for designation as National Historic Landmarks. -

Model Ship Book 4Th Issue

A GUIDE TO 1/1200 AND 1/1250 WATERLINE MODEL SHIPS i CONTENTS FOREWARD TO THE 5TH ISSUE 1 CHAPTER 1 INTRODUCTION 2 Aim and Acknowledgements 2 The UK Scene 2 Overseas 3 Collecting 3 Sources of Information 4 Camouflage 4 List of Manufacturers 5 CHAPTER 2 UNITED KINGDOM MANUFACTURERS 7 BASSETT-LOWKE 7 BROADWATER 7 CAP AERO 7 CLEARWATER 7 CLYDESIDE 7 COASTLINES 8 CONNOLLY 8 CRUISE LINE MODELS 9 DEEP “C”/ATHELSTAN 9 ENSIGN 9 FIGUREHEAD 9 FLEETLINE 9 GORKY 10 GWYLAN 10 HORNBY MINIC (ROVEX) 11 LEICESTER MICROMODELS 11 LEN JORDAN MODELS 11 MB MODELS 12 MARINE ARTISTS MODELS 12 MOUNTFORD METAL MINIATURES 12 NAVWAR 13 NELSON 13 NEMINE/LLYN 13 OCEANIC 13 PEDESTAL 14 SANTA ROSA SHIPS 14 SEA-VEE 16 SANVAN 17 SKYTREX/MERCATOR 17 Mercator (and Atlantic) 19 SOLENT 21 TRIANG 21 TRIANG MINIC SHIPS LIMITED 22 ii WASS-LINE 24 WMS (Wirral Miniature Ships) 24 CHAPTER 3 CONTINENTAL MANUFACTURERS 26 Major Manufacturers 26 ALBATROS 26 ARGONAUT 27 RN Models in the Original Series 27 RN Models in the Current Series 27 USN Models in the Current Series 27 ARGOS 28 CM 28 DELPHIN 30 “G” (the models of Georg Grzybowski) 31 HAI 32 HANSA 33 NAVIS/NEPTUN (and Copy) 34 NAVIS WARSHIPS 34 Austro-Hungarian Navy 34 Brazilian Navy 34 Royal Navy 34 French Navy 35 Italian Navy 35 Imperial Japanese Navy 35 Imperial German Navy (& Reichmarine) 35 Russian Navy 36 Swedish Navy 36 United States Navy 36 NEPTUN 37 German Navy (Kriegsmarine) 37 British Royal Navy 37 Imperial Japanese Navy 38 United States Navy 38 French, Italian and Soviet Navies 38 Aircraft Models 38 Checklist – RN & -

Full Issue (6

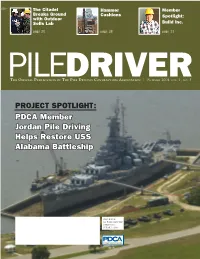

The Citadel Breaks Ground with Outdoor Soils Lab page 20 Hammer Cushions page 28 Member Spotlight: Build Inc. page 33 THE OFFICIAL PUBLICATION OF THE PILE DRIVING CONTRACTORS ASSOCIATION | SUMMER 2004 VOL. 1, NO. 3 PROJECT SPOTLIGHT: PDCA Member Jordan Pile Driving Helps Restore USS Alabama Battleship PRSTD STD US POSTAGE PAID FARGO ND PERMIT #1080 HP14x73 HP12x53 HP10x42 HP8x36 HP14x89 HP12x63 HP10x57 HP14x102 HP12x74 HP14x117 HP12x84 Pipe & Piling Supplies Ltd. U.S.A. George A. Lanxon Piling Sales San Francisco www.pipe-piling.com Washington P. O. Box 3069 P. O. Box 5206 CANADA Auburn, WA Fairview Heights, IL 62208 Fair Oaks, CA 95628-9104 253-939-4700 618-632-2998 916-989-6720 British Columbia [email protected] 618-271-0031 Port Coquitlam, BC Fax: 618-632-9806 Nebraska Regal Steel Supply 604-942-6311 Barbara J. Lanxon, President Northern California Omaha,NE [email protected] West Washington Street 402-896-9611 Midwest Pipe & Steel Inc. at Port Road 23 [email protected] Alberta [email protected] Stockton, CA 95203 Nisku, AB 323 East Berry Street 800-649-3220 Michigan 780-955-0501 P. O. Box 11558 Fax: 209-943-3223 Kincheloe, MI [email protected] Fort Wayne, IN 46859 [email protected] 906-495-2245 800-589-7578 Calgary, AB rgriffi[email protected] 260-422-6541 R.W. Conklin Steel Supply Inc. 403-236-1332 Fax: 260-426-0729 [email protected] Steel & Pipe Supply Co. Inc. www.conklinsteel.com 3336 Carpenters Creek Drive www.spsci.com International Cincinnati, OH 45241-3813 Ontario 555 Poyntz Avenue 888-266-5546 Brampton, ON Manhattan, KS 66502 Construction Services Inc. -

Spring 2008 AW:Winter 2006 HNSA Anchor Watch.Qxd 4/26/2008 11:12 PM Page 1

APRIL ANCHOR MAY JUNE WATCH 2008 The Quarterly Journal of the Historic Naval Ships Association www.hnsa.org U.S.S. ALABAMA..... .....Recovery Complete. H.N.S.A. STAFF H.N.S.A. OFFICERS President William N. Tunnell, Jr., U.S.S. ALABAMA/U.S.S. DRUM Executive Director Vice President CDR Jeffrey S. Nilsson, U.S.N. (Ret.) RADM John P. (Mac) McLaughlin, U.S.S. MIDWAY Executive Director Emeritus Secretary CAPT Channing M. Zucker, U.S.N. (Ret.) LCDR Sherry Richardson, H.M.C.S. SACKVILLE Executive Secretary Treasurer James W. Cheevers COL Patrick J. Cunningham Individual Member Program Manager Buffalo & Erie County Naval & Military Park CDR Jeffrey S. Nilsson, U.S.N. (Ret.) Immediate Past President Anchor Watch Editor Captain F. W. "Rocco" Montesano, U.S.S. LEXINGTON Jason W. Hall Battleship NEW JERSEY Museum HONORARY DIRECTORS Webmaster Admiral Thad W. Allen, U.S. Coast Guard Richard S. Pekelney Sean Connaughton, MARAD International Coordinator Admiral Michael G. Mullen, U.S. Navy Brad King Larry Ostola, Parks Canada Vice Admiral Drew Robertson, Royal Canadian Navy H.M.S. BELFAST Admiral Sir Alan West, GCB DCD, Royal Navy DIRECTORS AT LARGE Captain Jack Casey, U.S.N. (Ret) H.N.S.A. COMMITTEE U.S.S. MASSACHUSETTS Memorial CHAIRPERSONS Troy Collins Battleship NEW JERSEY Museum Maury Drummond U.S.S. KIDD Annual Conference Robert Howard, Patriots Point Alyce N. -

2010-Summer.Pdf

SUMMER 2010 - Volume 57, Number 2 WWW.AFHISTORICALFOUNDATION.ORG The Air Force Historical Foundation Founded on May 27, 1953 by Gen Carl A. “Tooey” Spaatz and other air power pioneers, the Air Force Historical Foundation (AFHF) is a nonprofit tax exempt organization. It is dedicated to the preservation, perpetuation and appropriate publication of the history and traditions of American aviation, with emphasis on the U.S. Air Force, its predecessor organizations, and the men and women whose lives and dreams were devoted to flight. The Foundation serves all components of the United States Air Force— Active, Reserve and Air National Guard. AFHF strives to make available to the public and today’s government planners and decision makers information that is relevant and informative about all aspects of air and space power. By doing so, the Foundation hopes to assure the nation profits from past experiences as it helps keep the U.S. Air Force the most modern and effective military force in the world. The Foundation’s four primary activities include a quarterly journal Air Power History, a book program, a MEMBERSHIP BENEFITS All members receive our exciting and informative Air Power History Journal, either electronically or on paper, covering: all aspects of aerospace history • Chronicles the great campaigns and the great leaders • Eyewitness accounts and historical articles • In depth resources to museums and activities, to keep members connected to the latest and greatest events. Preserve the legacy, stay connected: • Membership helps preserve the legacy of current and future US air force personnel. • Provides reliable and accurate accounts of historical events. • Establish connections between generations. -

ISSUE XXXIX, Summer 2007 Sinking the Rising Sun

World War II Chronicles A Quarterly Publication of the World War II Veterans Committee ISSUE XXXIX, Summer 2007 Sinking the Rising Sun PLUS Baseball Goes to War Okinawa: The Typhoon of Steel Currahee! World War II Chronicles A Quarterly Publication of the World War II Veterans Committee WWW.WWIIVETS.COM ISSUE XXXIX, Summer 2007 -In This Issue- On December 7, 1941, hundreds of Japanese fighters and bombers launched from the aircraft carriers Akagi, Kaga, Soryu, Hiryu, Shôkaku, and Zuikaku on their fateful mission to attack the American base at Pearl Harbor, plunging the U.S. into World War II. Three and a half years later, all but the Zuikaku had been sunk. In October of 1944, as the Japanese navy steamed toward Leyte in an attempt to thwart General MacArthur’s return, the Zuikaku—the last Japanese carrier to take part in the attack on Pearl Harbor—presented itself as a ripe target for one American pilot, allowing him to personally avenge the treachery of December 7. Articles Sinking the Rising Sun: Dog Fighting and Dive Bombing in World War II by William E. Davis (page 6) The man who helped deliver the final blow to the last Japanese aircraft carrier to take part in the attack on Pearl Harbor remembers his experience. Baseball Goes to War by James C. Roberts (page 13) After the attack on Pearl Harbor, the stars of America’s pastime knew there was only one team they wanted to play for: the United States military. Okinawa: The Typhoon of Steel with Donald Dencker, John Ensor, Leonard Lazarick, & Renwyn Triplett (page 19) The Japanese Empire makes its last stand in the final major battle of history’s greatest war. Features An Unstable Past by -

Volume I 2019

ALABAMA SEAPORT: THE OFFICIAL MAGAZINE OF THE ALABAMA STATE PORT AUTHORITY, 2019 VOL. I THANK YOU, ALABAMA! The Mobile Harbor receives state of Alabama funding through the Rebuild Alabama Act. GLOBAL LOGISTICS | SUPPLY CHAIN MANAGEMENT | PROJECT CARGO AEROSPACE · AUTOMOTIVE · CHEMICALS · ELECTRONICS · FOOD & BEVERAGE · FOREST PRODUCTS · FURNITURE · GENERAL & BULK CARGO · MACHINERY · STEEL · TEMPERATURE CONTROLLED ALABAMA SEAPORT PUBLISHED CONTINUOUSLY SINCE 1927 • 2019 VOL. I ON THE COVER: The Mobile Harbor receives state of Alabama funding through the Rebuild Alabama Act. See story on page 4 The ALABAMA SEAPORT Magazine has been a trusted news and information resource for customers, elected officials, service providers and communities for news regarding Alabama’s only deepwater Port and its impact throughout the state of Alabama, region, nation and abroad. In order to refresh and expand readership of ALABAMA SEAPORT, the Alabama State Port Authority (ASPA) now publishes the magazine quarterly, in four editions appearing in winter, spring, summer and fall. Exciting things are happening in business and industry throughout Alabama and the Southeastern U.S., and the Port Authority has been investing in its terminals to remain competitive ALABAMA STATE PORT AUTHORITY P.O. Box 1588, Mobile, Alabama 36633, USA P: 251.441.7200 • F: 251.441.7216 • asdd.com James K. Lyons, Director, CEO H.S. “Smitty” Thorne, Deputy Director, COO Linda K. Paaymans, Secretary/Treasurer, CFO Danny Barnett, Manager, Human Resources FINANCIAL SERVICES Linda