A Framework for Identifying Associations in Digital Evidence Using Metadata

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Resizing for ACCC Competition Rev 1.0 9/12/2011

Resizing for ACCC Competition Rev 1.0 9/12/2011 This document contains instructions for resizing your images to comply with the new Digital Image Competition Guidelines. In this document we have attempted to provide instructions that cover most of the image editing programs in common use by our members. However, it is entirely possible that some have been omitted. If you let us know, we will try to incorporate those applications in future versions of these Instructions. In the meantime, studying the options available in other programs will most likely be helpful in figuring out what you need to do in your particular software. Currently, instructions for the following programs are included: • Photoshop • Lightroom • Aperture • Image Processor (from Bridge or Photoshop) • Photoshop Elements • Irfanview • Picasa • Canon Digital Photo Professional 3 • Nikon View NX • Capture NX / NX2 • Paint Shop Pro (links to tutorials) Adobe Photoshop CS5 With your image open in Photoshop, go to Image > Image Size and the following dialog box will open. Make sure that the three boxes on the bottom left are checked and the Resolution is set to 300 pixels/inch. Then, change the image dimensions so that the longest edge of your image (height in the example below) does not exceed 1920 pixels and the shortest edge does not exceed 1080 pixels. In the example above the longest dimension was reduced to 1620 when 1080 was used for the shortest dimension. Under “Resample Image” choose Bicubic Sharper from the drop down box. If you want to use the full allowable dimensions of 1920 X 1080 you must crop the image to achieve the 16:9 aspect ratio. -
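The resizing rule described above (longest edge at most 1920 pixels, shortest edge at most 1080 pixels, aspect ratio preserved) can be sketched in a few lines of Python. This is only an illustration: the function name `fit_within` is hypothetical, and the decision never to enlarge a smaller image is an assumption that the instructions do not state.

```python
def fit_within(width, height, max_long=1920, max_short=1080):
    """Return (width, height) scaled to respect both edge limits.

    The aspect ratio is preserved. Images that already fit are returned
    unchanged (assumption: no upscaling is wanted).
    """
    long_edge = max(width, height)
    short_edge = min(width, height)
    # The tighter of the two limits decides the scale factor.
    scale = min(max_long / long_edge, max_short / short_edge, 1.0)
    return round(width * scale), round(height * scale)


# A 3:2 landscape image is limited by its short edge, so the long edge
# ends up at 1620 rather than 1920, as in the Photoshop example.
print(fit_within(3000, 2000))
# A 16:9 image can use the full 1920 x 1080 frame.
print(fit_within(3840, 2160))
```

Any image that is not 16:9 reaches one limit before the other, which is why using the full 1920 x 1080 area requires cropping first.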

And Alternatives to Free Software

Free Software and Alternatives to Free Software Presentation for the: Sarasota Technology Users Group June 5, 2019 7:00 p.m. Presented by: John “Free John” Kennedy [email protected] Member of the East-Central Ohio Technology Users Club Newark, Ohio Brought to you by: APCUG Speakers Bureau One of your benefits of membership. Functional Resources Economically Enticing Functional Resources -- Economically Enticing Functional Resources -- Economically Enticing Functional Resources -- Economically Enticing Functional Resources Economically Enticing FREE My Needs Computer software: ● that does what I want ● and price is reasonable My Problem ● most “packaged” software does way more than what I need ● most “packaged” software costs way more than I can afford What I've Found ● software that costs $$$$ ● software that's FREE ● free software that I like better than other free software Types of Software ● PS = Paid Software ● FS = Free Software ● CSS = Closed Source Software ● OSS = Open Source Software ● POSS = Paid Open Source Software ● FOSS = Free Open Source Software FOSS ● Free and Open Source Software ● Free software vs. Open Source Software; are they the same or different? Recipes! ● Both are free, but open source developers are willing to share the code so that others can help re- write/improve the software (you can also donate to these people as well). Bottom Line = $$$$ ● Free programs may be missing some features. ● So far I haven't missed them, and you may not either. ● But if something is missing, then you decide if it's worth the total price of the program to have that missing feature. ● Start with the free program, if it doesn't meet your needs, purchase the paid program. -

Freeware Irfanview Windows 10 Latest Version Download

Freeware IrfanView Windows 10 latest version download. Advantages of IrfanView 64-bit over 32-bit version: It can load VERY large files/images (image RAM size over 1.3 GB, for special users). Faster for very large images (25+ Megapixels, loading or image operations). Runs 'only' on a 64-bit Windows (Vista, Win7, Win8, Win10). Advantages of IrfanView 32-bit over 64-bit version: Runs on a 32-bit and 64-bit Windows. Loads all files/images for normal needs (max. RAM size is about 1.3 GB). Needs less disc space. All PlugIns will work: not all PlugIns are ported (yet) to 64-bit (like OCR) and some 32-bit PlugIns must still be used in the 64-bit version, some with limitations (see the "Plugins32" folder). Some old 32-bit PlugIns (like RIOT and Adobe 8BF PlugIn) work only in compatibility mode in IrfanView-64 (only 32-bit 8BF files/effects can be used). Command line options for scanning (/scan etc.) work only in 32-bit (because there are no 64-bit TWAIN drivers). Notes: You can install both versions on the same system, just use different folders. For example: install the 32-bit version in your "Program Files (x86)" folder and the 64-bit version in your "Program Files" folder (install 32-bit PlugIns to IrfanView-32 and 64-bit PlugIns to IrfanView-64, DO NOT mix the PlugIns and IrfanView bit versions). The program name and icon have some extra text in the 64-bit version for better distinguishing. Available 64-bit downloads. -

JPEG Image Compression2.Pdf

JPEG image compression FAQ, part 2/2 2/18/05 5:03 PM Part1 - Part2 - MultiPage JPEG image compression FAQ, part 2/2 There are reader questions on this topic! Help others by sharing your knowledge Newsgroups: comp.graphics.misc, comp.infosystems.www.authoring.images From: [email protected] (Tom Lane) Subject: JPEG image compression FAQ, part 2/2 Message-ID: <[email protected]> Summary: System-specific hints and program recommendations for JPEG images Keywords: JPEG, image compression, FAQ, JPG, JFIF Reply-To: [email protected] Date: Mon, 29 Mar 1999 02:24:34 GMT Sender: [email protected] Archive-name: jpeg-faq/part2 Posting-Frequency: every 14 days Last-modified: 28 March 1999 This article answers Frequently Asked Questions about JPEG image compression. This is part 2, covering system-specific hints and program recommendations for a variety of computer systems. Part 1 covers general questions and answers about JPEG. As always, suggestions for improvement of this FAQ are welcome. New since version of 14 March 1999: * Added entries for PIE (Windows digicam utility) and Cameraid (Macintosh digicam utility). * New version of VuePrint (7.3). This article includes the following sections: General info: [1] What is covered in this FAQ? [2] How do I retrieve these programs? Programs and hints for specific systems: [3] X Windows [4] Unix (without X) [5] MS-DOS [6] Microsoft Windows [7] OS/2 [8] Macintosh [9] Amiga [10] Atari ST [11] Acorn Archimedes [12] NeXT [13] Tcl/Tk [14] Other systems Source code for JPEG: [15] Freely available source code for JPEG Miscellaneous: [16] Which programs support progressive JPEG? [17] Where are FAQ lists archived? This article and its companion are posted every 2 weeks. -

Image Analysis

CMB 551 Module 1A Image processing and quantitative image analysis Sam Johnson Benjamin Carlson http://microscopy.duke.edu/ [email protected] n File: Filename_to_open Microscopy images are somewhat different to other fields Microscopy data are in many formats Microscope specific formats LIF, LEI, LSM, ZVI, STK, OIB, OIF, dv, ICS/IDS, ICS2, r3d, PIC, IPL, CXD, SDT, IPW, ND2, RAW, IMS. Types of images – bitmap/vector and compression Bit-depth, histogram, scaling and gamma Export to standard formats for figures Color use and misuse 3D images – Projections and other views Stitching and alignment Image processing – Filters Segmentation – Regions of interest Quantification – Count, measure, intensity, colocalization, 3D measurements, tracking Data big(ish) data One image = ~1 MB l*3 Z*11 t*360 = ~12 GB Can be a challenge to • Move the data • Store all your original data • Keep it safe • Be able to find it again Some useful things for the microscopist to know about hard-drives and servers Drive formats: • NTFS = PC (and Linux understands) • HFS+ = Mac OS X Extended • Ext* = Linux • FAT32 = All (but with some limitations – 4GB max file size) Hard-drives fail, RAID helps (not always) . Non-redundant, faster write speeds CPU GPU 100 GB/s RAM 60 MB/s Hard Disks <10 MB/s ~25 MB/s 4-40 MB/s Programs for viewing your microscope images on your computer Free basic versions of the confocal software for the particular format (but FIJI opens them all) Irfanview opens 16-bit TIFF (PC only) Download links for all http://microscopy.duke.edu/analysis.html Are we going to use Photoshop? No GNU Image Manipulation Program http://www.gimp.org/ FIJI/ImageJ and reasons to use it FIJI – FIJI Is Just ImageJ http://fiji.sc/wiki/index.php/Fiji (FIJI is ImageJ + a set of useful plugins, an update tool, 3D Java, scripting and . -
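The "big(ish) data" estimate above is a simple product, and a short Python sketch makes the arithmetic explicit. It assumes that "l*3" in the excerpt means three wavelengths (channels), uses decimal units (1 GB = 1000 MB), and uses the hypothetical helper name `dataset_size_mb`.

```python
def dataset_size_mb(image_mb, channels, z_slices, timepoints):
    """Total size in MB of a multi-dimensional acquisition."""
    return image_mb * channels * z_slices * timepoints


# Values from the excerpt: ~1 MB per image, 3 channels (assumed reading
# of "l*3"), 11 Z slices, 360 time points.
size_mb = dataset_size_mb(1, 3, 11, 360)
size_gb = size_mb / 1000  # decimal gigabytes
print(f"{size_mb} MB is about {size_gb:.1f} GB")

# FAT32 cannot hold a single file of 4 GiB or more, so a data set of
# this size could not be stored there as one file.
FAT32_MAX_FILE_BYTES = 2**32 - 1
too_big_for_fat32 = size_mb * 10**6 > FAT32_MAX_FILE_BYTES
print("Exceeds FAT32 single-file limit:", too_big_for_fat32)
```

The product comes to 11,880 MB, which the slide rounds to ~12 GB.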

Image Preparation of Your Work for Online Entry: the Tips and Guidelines Provided Below Are for the Photo of Your Entry That Is Required When Registering

Image Preparation of Your Work for Online Entry: The tips and guidelines provided below are for the photo of your entry that is required when registering. Please see the department’s competition guide for guidelines regarding your actual entry. Guidelines for Photographing 2-D and 3-D Artwork, Hand Crafts and Hobbies • Avoid using a flash. Natural daylight on an overcast day or shade on a sunny day is the best light for photographing artwork. • Use a neutral-toned matte background, such as grey, black or ivory. • Set the camera on a tripod or other solid surface for stability. This is most important when lighting is low or when using a slow shutter speed or a low f-stop. • Check for depth of field. Higher numbers create a sharper focus. Generally, an f-stop of 5.6 or higher is best. If using auto focus, be sure to tap the focus before shooting. • Take several photos at different settings and angles. Look at them on your computer screen instead of the camera’s viewer screen to select the best image. • Be sure your artwork fills the frame of the photo with some, but minimal, backdrop showing. • Use a macro setting on your camera to get a close detail of the work or crop the photo to show a close-up area of your entry. NOTE: For Fine Arts Divisions 500, 503, 510 and 513, all Woodworking Divisions, all Hand Crafts and Hobbies Divisions, and all Collections Divisions: Exhibitors have the option of adding 2 detailed photos of each of their 3-D entries. -

Suggestions on How to View OCC Document Images on the Web

Suggestions on how to view OCC Document Images on the Web The Oklahoma Corporation Commission black and white document images are saved in TIFF file format with Group IV compression since other image formats generate significantly larger files. To view these documents, your WWW Browser must be configured with a viewer that supports TIFF images and CCITT Group IV compression. If your Operating System is Windows 95, 98, 98SE, or 2000 then you should not have to add anything since Wang or Kodak Imaging is included with Windows and works well with these images. Windows XP or newer no longer includes this, so you probably need to install a third party viewer. There are many available for download, some of which are suggested below. Some work better than others and you may find one better than what we have listed. The best one we have found for viewing and printing all types and formats of TIFF images is Imaging for Windows Professional listed below. It is the new version of the viewer that came with the pre-XP versions of Windows. That being said, please note the following disclaimer. DISCLAIMER: Any reference from the OCC Web server service to any non-government entity, product, service or information does not constitute an endorsement or recommendation by the Oklahoma Corporation Commission or any of its field offices or employees. We are not responsible for the contents of any "off-site" web pages referenced from OCC web servers. Case 1 - You already have a TIFF Viewer (XP/2000 do!): If you already have a viewer installed on your computer, e.g. -

Forensic Analysis of Ordered Data Structures on the Example of JPEG Files

Forensic Analysis of Ordered Data Structures on the Example of JPEG Files Thomas Gloe Institute of Systems Architecture, Technische Universität Dresden 01062 Dresden, Germany [email protected] Abstract—JPEG file format standards define only a limited number of mandatory data structures and leave room for interpretation. Differences between implementations employed in digital cameras, image processing software, and software to edit metadata provide valuable clues for basic authentication of digital images. We show that there exists a realistic chance to fool state-of-the-art image file forensic methods using available software tools and introduce the analysis of ordered data structures on the example of JPEG file formats and the EXIF metadata format as countermeasure. The proposed analysis approach enables basic investigations of image authenticity and documents a much better trustworthiness of EXIF metadata than commonly accepted. Manipulations created with the renowned metadata editor ExifTool and various image processing software can be reliably detected. Analysing the sequence of elements in complex data structures is not limited to JPEG files and might be a general principle applicable to different multimedia formats.
... and metadata, is a problem relevant to computer forensics in general [5]. Considering analysed characteristics in current state-of-the-art image file forensic methods, we will discuss possibilities to create ‘undetectable’ forgeries using commonly available image processing tools. The observed weaknesses call for an exploration of the limits of image file forensics. Investigating JPEG and EXIF formats in detail, we identified new characteristics based on the file structure robust against manipulations using existing tools. In consequence, generating ‘undetectable’ forgeries is much more complicated than commonly expected and requires advanced knowledge of file formats. The proposed analysis approach gives investigators a tool for basic image authentication, which can be easily ... -
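To make the idea of an "ordered data structure" concrete, the following Python sketch lists the marker segments of a JPEG stream in the order they occur, up to the start of the compressed scan data. It is a minimal illustration of reading segment order, not the analysis method of the paper: the function name `jpeg_marker_sequence` and the small name table are assumptions, and the example bytes are synthetic rather than a real image.

```python
import struct

# Names for a few common JPEG markers; any other marker is shown as hex.
MARKER_NAMES = {0xC0: "SOF0", 0xC2: "SOF2", 0xC4: "DHT", 0xDB: "DQT",
                0xDD: "DRI", 0xDA: "SOS", 0xFE: "COM"}
MARKER_NAMES.update({0xE0 + n: f"APP{n}" for n in range(16)})


def jpeg_marker_sequence(data: bytes) -> list:
    """Return marker names in file order, from SOI up to and including SOS."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG stream: missing SOI marker")
    names = ["SOI"]
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected marker prefix 0xFF at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # optional fill byte before a marker
            pos += 1
            continue
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        names.append(MARKER_NAMES.get(marker, f"0x{marker:02X}"))
        if marker == 0xDA:  # entropy-coded data follows; stop here
            break
        pos += 2 + length
    return names


# Synthetic header: SOI, APP0, APP1, DQT, SOS (payloads are dummies).
sample = (b"\xff\xd8" + b"\xff\xe0\x00\x04JF" + b"\xff\xe1\x00\x04Ex"
          + b"\xff\xdb\x00\x03\x00" + b"\xff\xda\x00\x02")
print(jpeg_marker_sequence(sample))
```

Two files with identical segment contents but a different segment order yield different sequences, which is the kind of structural difference that can hint at the software that last wrote the file.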

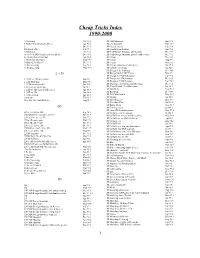

Cheap Tricks Index 1990-2000

Cheap Tricks Index 1990-2000 ?, Wildcards Oct 91 4 3D CAD Shootout Apr 98 1 $, Dollar Sign, Keyboard Macro Apr 91 4 3D cars & people Nov 98 2 $ Dec 99 1 3D Cars & Trucks Feb 99 8 $$$ Marker file Feb 99 6 3D Construction Drawing Apr 95 1 *, Wildcards Oct 91 4 3D CAD Design Shootout, observations Dec 97 3 %1 to %10, DOS Replaceable Parameters Dec 91 5 3D CAD Design Shootout, playoff-caliber teams Dec 97 3 0, Alt-0 keyboard interrupt Apr 93 4 3D Cursor Dec 94 2 1.25 Million Questions May 99 7 3D Cursor Aug 95 4 10-Base-2; 10-Base-T Dec 97 4 3D Cursor Mar 95 3 16 bit processing Sep 96 2 3D Cursor, undocumented features Jun 97 4 16-bit processing May 93 3 3D Cylinder stretching Jan 98 8 3D Design & Presentation Dec 94 1 2 ½ D 3D Design Models, DC Viewer Mar 97 1 3D Designer's CADD Shootout Sep 99 2 2 1/2 D, vs. 3D for sections Jun 96 1 3D Designers CAD Shootout Dec 98 1 2 1/2D Modeling May 94 1 3D Designers CAD Shootout Nov 98 8 2 1/2 D massing model Jan 99 6 3D Drawings, As Construction Drawings Dec 91 2 2 line trim for stretching Jul 96 4 3D, Easy Method, Neal Mortenson Jan 94 3 2 1/2D vs. 3D, chart of differences Apr 00 3 3D Edit Menu May 94 3 2 1/2D vs. 3D Apr 00 2 3D Edit Plane Dec 94 4 2 1/2D, defined Apr 00 2 3D Edit, Won't work May 91 5 20/20 rule Feb 95 2 3D Editing Jan 98 8 286, 386, 486, with DOS 5.0 Aug 91 4 3D, Elevation save Mar 99 5 3D Elevation View Jun 91 2 3D Entity Menu May 94 3 2D 3D Framing macro Oct 94 8 3D, from 2D Transformations May 97 1 2D vs. -

Leica DMS300/DMS1000

Leica DMS300/DMS1000 How to create and install customized overlays Purpose This document describes how to create and install customized overlays which can be imported into the Leica DMS-Series digital microscopes. Overlays are images that can be superimposed over the live and/or captured pictures. Overlays can contain virtually anything, but usually contain a few elements such as a crosshair or company logo. To streamline the process, we recommend that you begin with an existing set of overlays, or modify one of our existing templates according to your specific needs. A total of 10 overlays can be stored in the Leica DMS300/DMS1000, 9 of which are customized and 1 of which is a factory-installed histogram. Position “01” is reserved for the histogram, which is displayed in the lower left-hand corner of the image. The remaining overlay positions “02” through “10” can be freely configured by the user, or they can be a selection of the Leica Standard overlays that are downloadable from the Leica DMS300 or Leica DMS1000 internet sites. Requirements: 1. Leica DMS300 or Leica DMS1000 2. 1920 x 1080 HD monitor with HDMI connector 3. PC with internet access 4. Image drawing software, e.g. Microsoft Paint, Photoshop, XnView, IrfanView, PaintBox or similar software. We used Paint because it is easy and already installed on most Windows based computers. 5. PC with SD slot or an SD card reader. Overlays In General: - Overlays are standard jpg files (24bit RGB) - The filenames of the Overlays must be in the format PN_R_OverlayName.jpg, where “PN” designates the position number of the overlay (numbers 02-10 can be used), “R” is used to define the resolution the overlay will be used with, and “OverlayName” is, as it suggests, the user-definable name, which should help identify the overlay content. -
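The filename convention above lends itself to a small check before copying files to the SD card. The Python sketch below is a hypothetical helper, not a Leica tool: the excerpt does not list the permitted values for “R”, so the resolution field is treated as any non-empty token without underscores, and `HD` in the example is a made-up placeholder.

```python
import re

# PN_R_OverlayName.jpg: two-digit position, resolution token, free name.
_OVERLAY_RE = re.compile(r"^(\d{2})_([^_]+)_(.+)\.jpg$")


def parse_overlay_name(filename):
    """Split an overlay filename into (position, resolution, name).

    Raises ValueError if the pattern does not match or the position is
    outside 02-10 (position 01 is reserved for the histogram).
    """
    match = _OVERLAY_RE.match(filename)
    if match is None:
        raise ValueError(f"not in PN_R_OverlayName.jpg form: {filename!r}")
    position = int(match.group(1))
    if not 2 <= position <= 10:
        raise ValueError(f"position {position:02d} is not in the range 02-10")
    return position, match.group(2), match.group(3)


# "HD" is a placeholder resolution token for illustration only.
print(parse_overlay_name("02_HD_Crosshair.jpg"))
```

The check is deliberately strict about the lowercase `.jpg` extension because that is how the format is written in the excerpt; whether the microscope also accepts other spellings is not stated.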

Chapter 9 Tech Tips the Data Sets Used for This Chapter Are Simply .Jpg

Chapter 9 Tech Tips The data sets used for this chapter are simply .jpg images of areas taken from aerial imagery downloaded for free from The National Map. As simple .jpg images they can be opened with a variety of different image or photo software programs, but whichever is used should have an option to zoom in closely on the image so students can try to make out details in the picture. If you want to use different (or localized) images in your class, the high-resolution aerial photos are available to download for free from The National Map. Each aerial only covers a small geographic area, so you can preview the ‘footprint’ of the image prior to downloading it to be sure that the features you want to view in the aerial are in the image. The photos used in this chapter’s lab are cropped versions of these. After downloading, the file is available in JPEG 2000 format and will show the entire area covered by the aerial photo. In order to create a .jpg version of these files and to also properly crop out only the area you want to show for the lab, you’ll need to download two free software programs: The free IrfanView program was used for converting the JPEG 2000 files to .jpgs (IrfanView is available for free download here: www.irfanview.com/). The free GIMP (GNU Image Manipulation Program) was used for cropping the converted .jpgs (GIMP is available for free download here: www.gimp.org/downloads/). The following steps outline the process used in this chapter to create the chapter’s data sets: 1. -

Tips for Simple Image Editing with Irfanview (Windows)

E-Learning-Center Ingrid Dethloff https://www.uni-heidelberg.de/elc Tips for simple image editing with IrfanView (Windows) Status: 11.2.2021, V1.1 IrfanView is provided as freeware for private (non-commercial) use and is free for educational use (e.g. schools, universities, libraries). Its range of functions covers the most important basic image processing tasks in a simple way. For study & teaching it is often necessary to reduce file sizes of images, select parts of an image, optimize images (color corrections, sharpening, rotation etc.), add information or convert a file format. Please think about what you want to achieve with the image and where you want to use it (e.g. web page or learning management system), because the image quality should be appropriate to that purpose and corresponding legal conditions apply. This documentation describes the first steps based on Windows 10 and IrfanView version 4.57. Content 1. Installation 2. Fundamentals 3. Optimize images 4. Convert file type 5. Reduce